CNN

MNIST handwritten digit data

import torch

import torch.nn as nn

import torch.utils.data as Data

import torchvision

import matplotlib.pyplot as plt

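EPOCH = 1  # number of passes over the training set
BATCH_SIZE = 50
LR = 0.001  # learning rate
DOWNLOAD_MNIST = False  # set to False once the MNIST data has been downloaded

# MNIST handwritten digits
train_data = torchvision.datasets.MNIST(
    root='./mnist/',  # where the data is saved to or loaded from
    train=True,  # False would return the test data instead
    # ToTensor converts a PIL.Image or numpy.ndarray into a torch Tensor,
    # rescaling the pixel values from the range 0-255 to the range 0-1
    transform=torchvision.transforms.ToTensor(),
    download=DOWNLOAD_MNIST,  # when True, download only if the data is not already present
)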
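To make the effect of the transform concrete, here is a small NumPy-only sketch of the rescaling described in the comments above: pixel values go from the 0-255 range to 0-1, and a leading channel dimension is added. The helper name `to_unit_range` and the toy 2x2 image are made up for illustration; this is not torchvision's implementation.

```python
import numpy as np


def to_unit_range(image_u8):
    """Hypothetical helper: mimic the rescaling step described above."""
    scaled = image_u8.astype(np.float32) / 255.0  # 0..255 -> 0.0..1.0
    return scaled[np.newaxis, :, :]               # (H, W) -> (1, H, W)


toy_image = np.array([[0, 255], [51, 102]], dtype=np.uint8)  # made-up pixels
tensor_like = to_unit_range(toy_image)

print(tensor_like.shape)  # (1, 2, 2)
print(tensor_like.min(), tensor_like.max())
```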

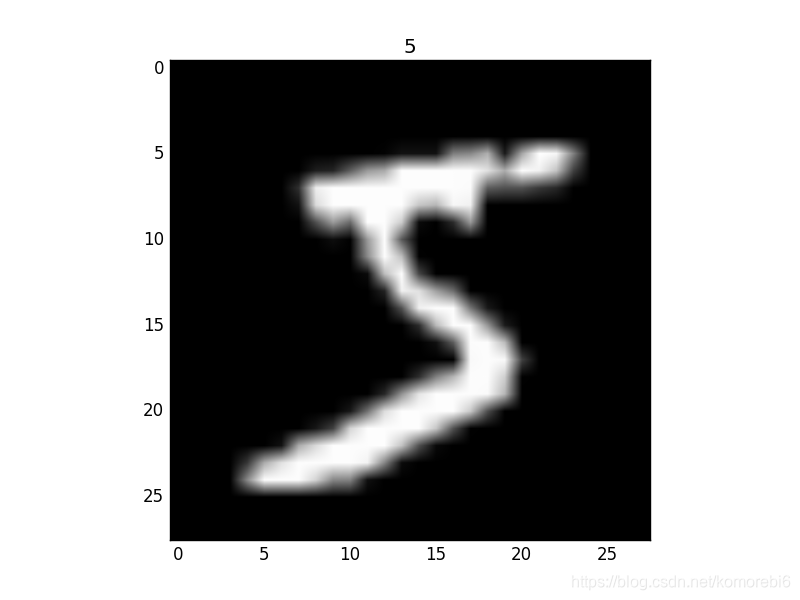

# Plot one sample to inspect the training data
print(train_data.data.size())  # torch.Size([60000, 28, 28])
print(train_data.targets.size())  # torch.Size([60000])
plt.imshow(train_data.data[0].numpy(), cmap='gray')
plt.title('%i' % train_data.targets[0])
plt.show()

# Mini-batch loader
train_loader = Data.DataLoader(
    dataset=train_data,
    batch_size=BATCH_SIZE,
    shuffle=True,
)

# Test set: train=False returns the test data
test_data = torchvision.datasets.MNIST(root='./mnist/', train=False)
# Add a channel dimension, (2000, 28, 28) -> (2000, 1, 28, 28), and rescale to the range 0-1
test_x = torch.unsqueeze(test_data.data, dim=1).type(torch.FloatTensor)[:2000] / 255.
test_y = test_data.targets[:2000]  # only the first 2000 samples are used

CNN model

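The overall flow of this CNN is: convolution (Conv2d) -> activation function (ReLU) -> pooling, i.e. downsampling (MaxPool2d) -> the same three steps once more -> flatten the multi-dimensional feature maps -> fully connected layer (Linear) -> output.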
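The shapes in this pipeline can be worked out by hand. The sketch below uses the usual output-size formula for a convolution or pooling window, floor((n + 2 * padding - kernel) / stride) + 1, with the layer settings used in the network that follows (5x5 kernels, stride 1, padding 2, and 2x2 max pooling with stride 2). The function name `out_size` is an illustrative choice.

```python
def out_size(n, kernel, stride=1, padding=0):
    """Side length after a convolution or pooling window slides over n pixels."""
    return (n + 2 * padding - kernel) // stride + 1


size = 28  # MNIST images are 28x28
for block in (1, 2):
    size = out_size(size, kernel=5, stride=1, padding=2)  # convolution keeps the size
    size = out_size(size, kernel=2, stride=2)             # 2x2 max pooling halves it
    print("after block", block, "->", size)

flat_features = 32 * size * size  # 32 channels come out of the second block
print("inputs to the Linear layer:", flat_features)
```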

# Build the network
class CNN(nn.Module):
    def __init__(self):
        super(CNN, self).__init__()
        self.conv1 = nn.Sequential(  # convolution block 1; input image shape (1, 28, 28)
            nn.Conv2d(
                in_channels=1,  # depth of the input: 3 for an RGB image, 1 for grayscale
                out_channels=16,  # number of filters, i.e. 16 feature maps are extracted
                kernel_size=5,  # each filter covers 5x5 pixels
                stride=1,  # step size of the filter
                padding=2,  # pad the border of the image with 2 pixels of zeros
                # to keep the size when stride=1: padding = (kernel_size - 1) / 2 = (5 - 1) / 2 = 2
            ),  # (1, 28, 28) -> (16, 28, 28); the padding keeps height and width unchanged
            nn.ReLU(),  # activation function
            nn.MaxPool2d(kernel_size=2),  # pooling keeps the maximum of each 2x2 region
            # (16, 28, 28) -> (16, 14, 14)
        )
        self.conv2 = nn.Sequential(
            # conv1 outputs 16 channels, so conv2 takes 16 in and produces 32;
            # arguments: in_channels, out_channels, kernel_size, stride, padding
            nn.Conv2d(16, 32, 5, 1, 2),  # (16, 14, 14) -> (32, 14, 14)
            nn.ReLU(),
            nn.MaxPool2d(2),  # (32, 14, 14) -> (32, 7, 7)
        )
        # Output layer: takes the flattened feature maps
        self.out = nn.Linear(32 * 7 * 7, 10)  # 10 classes for the digits 0-9

    def forward(self, x):
        x = self.conv1(x)
        x = self.conv2(x)  # (batch, 32, 7, 7)
        x = x.view(x.size(0), -1)  # flatten to (batch, 32 * 7 * 7); -1 merges 32, 7, 7 into one dimension
        output = self.out(x)
        return output

cnn = CNN()
print(cnn)

# Training
optimizer = torch.optim.Adam(cnn.parameters(), lr=LR)
loss_func = nn.CrossEntropyLoss()

for epoch in range(EPOCH):
    for step, (b_x, b_y) in enumerate(train_loader):
        output = cnn(b_x)
        loss = loss_func(output, b_y)
        optimizer.zero_grad()  # clear the gradients of the previous step
        loss.backward()  # backpropagate
        optimizer.step()  # update the parameters

        # Check the progress every 50 steps
        if step % 50 == 0:
            test_output = cnn(test_x)
            pred_y = torch.max(test_output, 1)[1].data.numpy()  # index of the largest score = predicted class
            accuracy = float((pred_y == test_y.data.numpy()).astype(int).sum()) / float(test_y.size(0))
            print('Epoch: ', epoch, '| train loss: %.4f' % loss.item(), '| test accuracy: %.2f' % accuracy)
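The accuracy printed in the loop is the share of test samples whose highest-scoring class equals the label; `torch.max(test_output, 1)[1]` returns exactly those highest-scoring indices. A NumPy-only sketch of the same computation, with made-up scores for three samples and three classes (the numbers are illustrative, not model output):

```python
import numpy as np

# Made-up class scores: one row per sample, one column per class
scores = np.array([[0.1, 2.0, -1.0],
                   [1.5, 0.2, 0.3],
                   [0.0, 0.1, 0.9]])
labels = np.array([1, 0, 1])  # made-up true classes

predicted = scores.argmax(axis=1)        # index of the largest score in each row
accuracy = (predicted == labels).mean()  # fraction of correct predictions

print(predicted, accuracy)
```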

# Compare the predictions with the true labels for the first 10 test samples
test_output = cnn(test_x[:10])
pred_y = torch.max(test_output, 1)[1].data.numpy()
print(pred_y, 'prediction')
print(test_y[:10].numpy(), 'ground truth')

Output:

Epoch: 0 | train loss: 2.2981 | test accuracy: 0.13
Epoch: 0 | train loss: 0.4511 | test accuracy: 0.85
Epoch: 0 | train loss: 0.3665 | test accuracy: 0.89
Epoch: 0 | train loss: 0.2243 | test accuracy: 0.92
Epoch: 0 | train loss: 0.1712 | test accuracy: 0.92
Epoch: 0 | train loss: 0.0470 | test accuracy: 0.94
Epoch: 0 | train loss: 0.2686 | test accuracy: 0.95
Epoch: 0 | train loss: 0.1166 | test accuracy: 0.96
Epoch: 0 | train loss: 0.0311 | test accuracy: 0.96
Epoch: 0 | train loss: 0.2191 | test accuracy: 0.97
Epoch: 0 | train loss: 0.0923 | test accuracy: 0.96
Epoch: 0 | train loss: 0.2028 | test accuracy: 0.96
Epoch: 0 | train loss: 0.1041 | test accuracy: 0.97
Epoch: 0 | train loss: 0.0836 | test accuracy: 0.97
Epoch: 0 | train loss: 0.0224 | test accuracy: 0.97
Epoch: 0 | train loss: 0.1463 | test accuracy: 0.97
Epoch: 0 | train loss: 0.0598 | test accuracy: 0.97
Epoch: 0 | train loss: 0.1540 | test accuracy: 0.98
Epoch: 0 | train loss: 0.2039 | test accuracy: 0.97
Epoch: 0 | train loss: 0.0519 | test accuracy: 0.97
Epoch: 0 | train loss: 0.2456 | test accuracy: 0.98
Epoch: 0 | train loss: 0.0790 | test accuracy: 0.98
Epoch: 0 | train loss: 0.0024 | test accuracy: 0.98
Epoch: 0 | train loss: 0.1731 | test accuracy: 0.98
[7 2 1 0 4 1 4 9 5 9] prediction
[7 2 1 0 4 1 4 9 5 9] ground truth

RNN (classification)

MNIST handwritten digit data

# RNN classifier

import torch

from torch import nn

import torchvision.datasets as dsets

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import torch.utils.data as Data

EPOCH = 1
BATCH_SIZE = 64
TIME_STEP = 28  # number of time steps: the image is 28x28, and each of the 28 steps reads one row
INPUT_STEP = 28  # input size at each time step: one row holds 28 pixels
LR = 0.01
DOWNLOAD_MNIST = False  # set to False once the MNIST data has been downloaded

train_data = dsets.MNIST(
    root='./mnist/',
    train=True,
    transform=transforms.ToTensor(),
    download=DOWNLOAD_MNIST,
)

# Mini-batch loader
train_loader = Data.DataLoader(
    dataset=train_data,
    batch_size=BATCH_SIZE,
    shuffle=True,
)

# Test set: train=False returns the test data
test_data = dsets.MNIST(root='./mnist/', train=False, transform=transforms.ToTensor())
test_x = test_data.data.type(torch.FloatTensor)[:2000] / 255  # rescale to the range 0-1
test_y = test_data.targets.numpy()[:2000]  # only the first 2000 samples are used

RNN model and training

(input0, state0) -> LSTM -> (output0, state1);

(input1, state1) -> LSTM -> (output1, state2);

…

(inputN, stateN)-> LSTM -> (outputN, stateN+1);

outputN -> Linear -> prediction.

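The LSTM processes the value at each time step and merges it with its understanding of the earlier steps, producing an updated understanding (memory) of everything seen so far. That memory is then passed on to the next time step.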
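As a rough illustration of the chain above, the sketch below feeds a random batch through `nn.LSTM` and inspects the shapes. It also checks that, for a single-layer, one-directional LSTM, the output at the last time step equals the final hidden state, so reading `r_out[:, -1, :]` is the same as reading the memory after the last row. The batch size of 4 and the random input are arbitrary choices.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Same layer settings as the classifier: 28 values per row, 64 hidden units
lstm = nn.LSTM(input_size=28, hidden_size=64, num_layers=1, batch_first=True)

x = torch.randn(4, 28, 28)      # (batch, time_step, input_size), random stand-in for images
r_out, (h_n, c_n) = lstm(x)     # omitting the state starts from zeros

print(r_out.shape)              # torch.Size([4, 28, 64]): one output per time step
print(h_n.shape, c_n.shape)     # torch.Size([1, 4, 64]) each: state after the last step

# The last-step output and the final hidden state hold the same values
same = torch.allclose(r_out[:, -1, :], h_n[-1])
print(same)
```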

class RNN(nn.Module):
    def __init__(self):
        super(RNN, self).__init__()
        self.rnn = nn.LSTM(
            input_size=INPUT_STEP,
            hidden_size=64,  # number of hidden units
            num_layers=1,  # number of stacked recurrent layers
            batch_first=True,  # input and output put the batch dimension first, e.g. (batch, time_step, input_size)
        )
        # The LSTM output still has to be mapped to class scores
        self.out = nn.Linear(64, 10)  # output layer: 10 classes for the digits 0-9

    def forward(self, x):
        # x shape (batch, time_step, input_size)
        # r_out shape (batch, time_step, output_size)
        # h_n shape (n_layers, batch, hidden_size): the hidden state (the "branch line")
        # h_c shape (n_layers, batch, hidden_size): the cell state (the "main line")
        r_out, (h_n, h_c) = self.rnn(x, None)  # None means a zero initial state
        out = self.out(r_out[:, -1, :])  # use the output at the last time step
        return out

rnn = RNN()
print(rnn)

optimizer = torch.optim.Adam(rnn.parameters(), lr=LR)
loss_func = nn.CrossEntropyLoss()

for epoch in range(EPOCH):
    for step, (b_x, b_y) in enumerate(train_loader):
        b_x = b_x.view(-1, 28, 28)  # reshape x to (batch, time_step, input_size)
        output = rnn(b_x)
        loss = loss_func(output, b_y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        if step % 50 == 0:
            test_output = rnn(test_x.view(-1, 28, 28))  # (samples, time_step, input_size)
            pred_y = torch.max(test_output, 1)[1].data.numpy()
            accuracy = float((pred_y == test_y).astype(int).sum()) / float(test_y.size)
            print('Epoch: ', epoch, '| train loss: %.4f' % loss.item(), '| test accuracy: %.2f' % accuracy)

test_output = rnn(test_x[:10].view(-1, 28, 28))
pred_y = torch.max(test_output, 1)[1].data.numpy()
print(pred_y, 'prediction number')
print(test_y[:10], 'real number')

Training works the same way as for the CNN.

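RNN (regression)

An RNN produces an output at every time step, and the loss compares that output with the true value at every step.

Example: use the sin curve to predict the cos curve.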
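A small NumPy sketch of what one training segment for this task might look like: the inputs are sin values, the targets are cos values at the same time points, and the error is taken at every time step. The segment length of 10, the range 0 to pi, and the all-zero stand-in prediction are assumptions for illustration.

```python
import numpy as np

steps = np.linspace(0, np.pi, 10, dtype=np.float32)  # 10 time points in one segment
x_np = np.sin(steps)  # input sequence
y_np = np.cos(steps)  # target sequence, same time points

# Add a batch and a feature dimension: (time_step,) -> (batch, time_step, input_size)
x = x_np[np.newaxis, :, np.newaxis]
y = y_np[np.newaxis, :, np.newaxis]
print(x.shape, y.shape)  # (1, 10, 1) (1, 10, 1)

# Stand-in prediction (all zeros), only to show the per-step squared error
prediction = np.zeros_like(y)
per_step_error = (prediction - y) ** 2  # one error value per time step
mse = per_step_error.mean()             # averaged over all time steps
print(per_step_error.shape, mse)
```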

# RNN regression: predict cos from sin

import torch

from torch import nn

import numpy as np

import matplotlib.pyplot as plt

# torch.manual_seed(1)

TIME_STEP = 10

INPUT_SIZE = 1  # one y value per time step; input and output sizes are both 1

LR = 0.02

DOWNLOAD_MNIST = False

# show data

steps = np.linspace(0, np.pi*2, 100, dtype=np.float32) # float32 for converting torch FloatTensor

x_np = np.sin(steps)

y_np = np.cos(steps)

plt.plot(steps, y_np, 'r-', label='target (cos)')

plt.plot(steps, x_np, 'b-', label='input (sin)')

plt.legend(loc='best')

plt.show()

class RNN(nn.Module):
    def __init__(self):
        super(RNN, self).__init__()
        self.rnn = nn.RNN(
            input_size=INPUT_SIZE,
            hidden_size=32,  # 32 hidden units
            num_layers=1,  # number of stacked recurrent layers
            batch_first=True,  # input and output put the batch dimension first, e.g. (batch, time_step, input_size)
        )
        # The RNN output still has to be mapped to one value per time step
        self.out = nn.Linear(32, 1)  # 32 inputs, 1 output

    def forward(self, x, h_state):
        # x (batch, time_step, input_size)
        # h_state (n_layers, batch, hidden_size)
        # r_out (batch, time_step, hidden_size)
        r_out, h_state = self.rnn(x, h_state)  # h_state (the memory so far) is passed in together with x
        outs = []  # every time step goes through the Linear layer; the results are collected here
        # the loop length follows the input, so the graph is built dynamically
        for time_step in range(r_out.size(1)):  # compute the output for each time step
            outs.append(self.out(r_out[:, time_step, :]))
        # stack the list along the time dimension; h_state is returned for the next forward call
        return torch.stack(outs, dim=1), h_state

rnn = RNN()
print(rnn)
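The per-step loop in `forward` can also be written as a single call, because `nn.Linear` acts on the last dimension of its input and leaves the leading dimensions alone. The sketch below checks that both forms give the same result on a random tensor standing in for `r_out`; the tensor sizes are arbitrary.

```python
import torch
from torch import nn

torch.manual_seed(0)

linear = nn.Linear(32, 1)
r_out = torch.randn(1, 10, 32)  # stand-in for the RNN output: (batch, time_step, hidden_size)

# Form 1: apply the layer step by step and stack along the time dimension
looped = torch.stack(
    [linear(r_out[:, t, :]) for t in range(r_out.size(1))], dim=1
)

# Form 2: apply the layer to the whole sequence at once
direct = linear(r_out)

print(looped.shape, direct.shape)  # both torch.Size([1, 10, 1])
print(torch.allclose(looped, direct, atol=1e-6))
```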

optimizer = torch.optim.Adam(rnn.parameters(), lr=LR)
loss_func = nn.MSELoss()

h_state = None  # None means a zero initial hidden state

plt.figure(1, figsize=(12, 5))
plt.ion()  # interactive mode, for continuous plotting

for step in range(100):
    start, end = step * np.pi, (step + 1) * np.pi  # time range of this segment
    # use sin to predict cos
    steps = np.linspace(start, end, TIME_STEP, dtype=np.float32)  # float32 so that it converts to a torch FloatTensor
    x_np = np.sin(steps)
    y_np = np.cos(steps)
    # add a batch and an input_size dimension: shape (batch, time_step, input_size)
    x = torch.from_numpy(x_np[np.newaxis, :, np.newaxis])
    y = torch.from_numpy(y_np[np.newaxis, :, np.newaxis])

    prediction, h_state = rnn(x, h_state)  # the prediction for every step, plus the h_state of the last step
    # !! The next line is important !!
    # Cut h_state loose from the current graph before it enters the next iteration;
    # otherwise the next backward() would reach into a graph that has already been freed and raise an error
    h_state = h_state.data

    loss = loss_func(prediction, y)
    optimizer.zero_grad()
    loss.backward()
    optimizer.step()

    # plotting
    plt.plot(steps, y_np.flatten(), 'r-')
    plt.plot(steps, prediction.data.numpy().flatten(), 'b-')
    plt.draw(); plt.pause(0.05)

plt.ioff()
plt.show()
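Why the hidden state has to be cut loose between iterations can be seen in a stripped-down sketch. By default each `backward()` frees the graph of its segment, so a hidden state that still points into that graph cannot be backpropagated through again. Here `detach()` is used to cut the link, serving the same purpose as the re-wrapping line in the loop above; the tiny layer sizes and random inputs are placeholders.

```python
import torch
from torch import nn

torch.manual_seed(0)

rnn = nn.RNN(input_size=1, hidden_size=4, batch_first=True)  # placeholder sizes
h_state = None
carried_grad_fns = []

for _ in range(3):
    x = torch.randn(1, 5, 1)        # random stand-in for one segment
    out, h_state = rnn(x, h_state)
    h_state = h_state.detach()      # keep the values, drop the link to this segment's graph
    carried_grad_fns.append(h_state.grad_fn)

    rnn.zero_grad()
    out.pow(2).mean().backward()    # only reaches back through the current segment

print(carried_grad_fns)  # [None, None, None]
```

Removing the `detach()` line would be expected to make the second `backward()` fail, since it would try to walk through the first segment's freed graph.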

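Autoencoder / unsupervised learning

An autoencoder needs only the training set, and of that only the images; the labels are not used in training.

If a script turns on interactive mode with ion() and never turns it off with ioff(), the figure flashes by and does not stay on screen. To prevent this, call ioff() before plt.show().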
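The pattern can be sketched as follows: turn interactive mode on while the frames are being drawn, then turn it off before the final `plt.show()` so that the last call blocks and keeps the window open. The function name `animate_then_hold` and the sine data are illustrative only.

```python
import numpy as np
import matplotlib.pyplot as plt


def animate_then_hold(n_frames=5, pause=0.05):
    """Hypothetical helper: draw frames interactively, then hold the final figure."""
    plt.ion()                               # interactive mode: drawing does not block
    xs = np.linspace(0, 2 * np.pi, 50)
    for i in range(n_frames):
        plt.plot(xs, np.sin(xs + 0.3 * i))  # add one more curve per frame
        plt.pause(pause)                    # let the figure refresh
    plt.ioff()                              # back to blocking mode
    still_interactive = plt.isinteractive()
    plt.show()                              # with ioff(), this keeps the window open
    return still_interactive


if __name__ == "__main__":
    animate_then_hold()
```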