from numpy import *

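def loadDataSet():
    # Read testSet.txt: each line holds two features followed by an integer class label.
    dataMat = []; labelMat = []
    with open('testSet.txt') as fr:
        for line in fr:
            lineArr = line.strip().split()
            # Prepend a constant 1.0 so that weights[0] acts as the intercept.
            dataMat.append([1.0, float(lineArr[0]), float(lineArr[1])])
            labelMat.append(int(lineArr[2]))
    return dataMat, labelMat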

def sigmod(inX):
    # Logistic (sigmoid) function; applied element-wise to arrays and matrices.
    return 1.0/(1+exp(-inX))

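def gradAscent(dataMatIn, classLabels):
    dataMatrix = mat(dataMatIn)                 # m x n matrix of samples
    labelMat = mat(classLabels).transpose()     # m x 1 column of labels
    m, n = shape(dataMatrix)
    alpha = 0.001        # step size
    maxCycles = 500      # number of full-batch iterations
    weights = ones((n, 1))
    for k in range(maxCycles):
        h = sigmod(dataMatrix*weights)          # predictions for all samples, m x 1
        error = (labelMat - h)
        # Step along the gradient of the log-likelihood.
        weights = weights + alpha*dataMatrix.transpose()*error
    return weights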

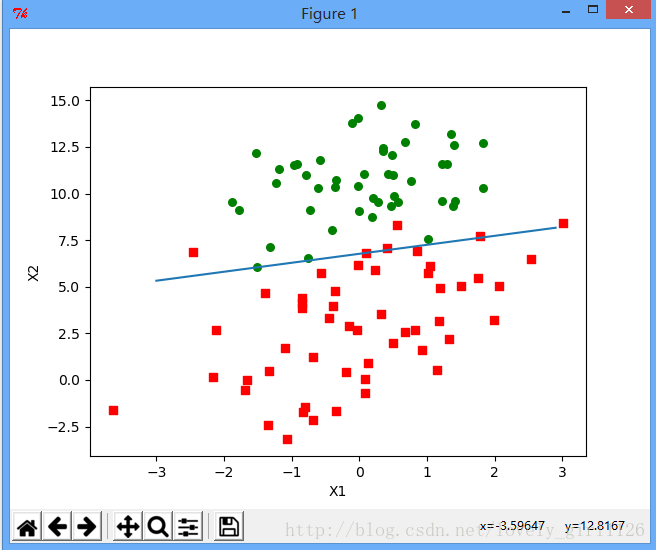

def plotBestFit(weights):
    import matplotlib.pyplot as plt
    # Accept a matrix, column vector, or 1-D array of weights.
    weights = asarray(weights).flatten()
    dataMat, labelMat = loadDataSet()
    dataArr = array(dataMat)
    n = shape(dataArr)[0]
    xcord1 = []; ycord1 = []
    xcord2 = []; ycord2 = []
    # Split the points by class so each class gets its own marker.
    for i in range(n):
        if int(labelMat[i]) == 1:
            xcord1.append(dataArr[i,1]); ycord1.append(dataArr[i,2])
        else:
            xcord2.append(dataArr[i,1]); ycord2.append(dataArr[i,2])
    fig = plt.figure()
    ax = fig.add_subplot(111)
    ax.scatter(xcord1, ycord1, s=30, c='red', marker='s')
    ax.scatter(xcord2, ycord2, s=30, c='green')
    x = arange(-3.0, 3.0, 0.1)
    # Decision boundary: w0 + w1*x1 + w2*x2 = 0, solved for x2.
    y = (-weights[0]-weights[1]*x)/weights[2]
    ax.plot(x, y)
    plt.xlabel('X1'); plt.ylabel('X2')
    plt.show()

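def stocGradAscent1(dataMatrix, classLabels, numIter=150):
    # dataMatrix must be a NumPy array (e.g. array(dataMat)), not a list or matrix.
    m, n = shape(dataMatrix)
    weights = ones(n)
    for j in range(numIter):
        dataIndex = list(range(m))    # samples not yet used in this pass
        for i in range(m):
            # Step size shrinks with j and i; the 0.01 term keeps it above 0.
            alpha = 4/(1.0+j+i) + 0.01
            randIndex = int(random.uniform(0, len(dataIndex)))
            sampleIndex = dataIndex[randIndex]    # map the position to an unused sample
            h = sigmod(sum(dataMatrix[sampleIndex]*weights))
            error = classLabels[sampleIndex] - h
            weights = weights + alpha*error*dataMatrix[sampleIndex]
            del(dataIndex[randIndex])
    return weights

alpha is adjusted on every iteration. Although it shrinks as the iteration count grows, it never reaches 0, because of the constant 0.01 term in the update.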
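
To make the behaviour of the step size concrete, here is a minimal sketch that evaluates the same formula used for alpha in stocGradAscent1. The helper name `alpha_schedule` and the sample values of `j` and `i` are chosen only for illustration; they are not part of the original code.

```python
def alpha_schedule(j, i):
    """Step size for outer pass j and inner step i, as in stocGradAscent1."""
    return 4 / (1.0 + j + i) + 0.01

# A few points on the schedule (j = pass number, i = step within the pass).
for j, i in [(0, 0), (0, 99), (1, 0), (149, 99)]:
    print(j, i, alpha_schedule(j, i))

# The 0.01 constant is a lower bound: the first term is always positive,
# so alpha stays strictly above 0.01 however large j and i become.
assert alpha_schedule(10**6, 10**6) > 0.01
```

Note that alpha is not strictly decreasing over the whole run: `i` restarts at 0 on each new pass, so the step size jumps back up at the start of every pass and then decays again, with each pass starting from a smaller value than the one before.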