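Copyright notice: this is an original article and may not be reposted without permission. If you repost it, include the link https://blog.csdn.net/weixin_40640020/article/details/88697715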

Continuing...

- Creating a ridge regressor

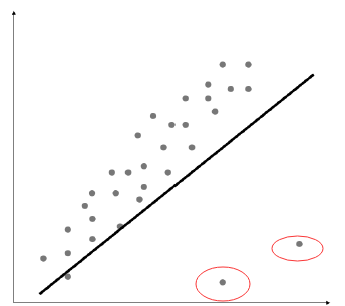

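The main problem with linear regression is that it is sensitive to outliers. When collecting real-world data, erroneous measurements are common. Linear regression uses ordinary least squares, whose objective is to minimize the squared error. Because the error of an outlier has a large absolute value, and squaring makes it larger still, a few such points can dominate the fit and distort the whole model, as the figure illustrates.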

The points circled in red are the outliers in the data.

右下角的两个数据点明显是异常值,但是这个模型需要拟合所有的数据点,因此导致整个模

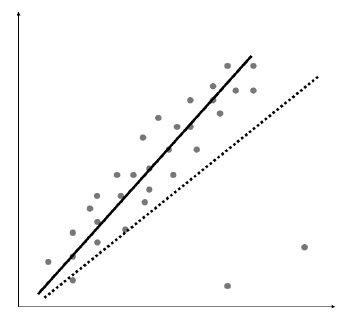

型都错了。仅凭直觉观察,我们就会觉得如下图的拟合结果更好。

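Ordinary least squares takes every data point into account when building the model, so the final model ends up like the line shown in the figure above. Clearly, this model is not optimal. To reduce this problem, we add a regularization term to the objective; its coefficient `alpha` controls how strongly large model coefficients are penalized, which limits how far a few extreme points can pull the fit. Strictly speaking, the penalty constrains the coefficients rather than discarding the outliers. This method is called ridge regression.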
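To make the regularization term concrete, here is a minimal sketch under stated assumptions. Ridge regression minimizes `||y - Xw||^2 + alpha * ||w||^2`, which (without an intercept) has the closed-form solution `w = (X^T X + alpha * I)^(-1) X^T y`. The synthetic data, the helper name `ridge_closed_form`, and the choice `alpha = 1.0` are illustrative and not part of the original post.

```python
import numpy as np
from sklearn.linear_model import Ridge

# Synthetic data (assumption: 50 samples, 3 features, small Gaussian noise)
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(50, 3))
true_w = np.array([1.5, -2.0, 0.5])
y_demo = X_demo @ true_w + rng.normal(scale=0.1, size=50)

alpha = 1.0  # illustrative regularization strength


def ridge_closed_form(X, y, alpha):
    """Hypothetical helper: solve (X^T X + alpha * I) w = X^T y for w."""
    n_features = X.shape[1]
    A = X.T @ X + alpha * np.eye(n_features)
    return np.linalg.solve(A, X.T @ y)


# Manual ridge solution versus scikit-learn's Ridge (no intercept, so the formulas match)
w_manual = ridge_closed_form(X_demo, y_demo, alpha)
w_sklearn = Ridge(alpha=alpha, fit_intercept=False).fit(X_demo, y_demo).coef_

# alpha = 0 reduces the formula to ordinary least squares
w_ols = ridge_closed_form(X_demo, y_demo, 0.0)

print("manual ridge :", w_manual)
print("sklearn ridge:", w_sklearn)
print("OLS          :", w_ols)
print("coefficient norms (ridge, OLS):", np.linalg.norm(w_manual), np.linalg.norm(w_ols))
```

The penalty only shrinks the coefficient vector; with `alpha = 0` the same formula gives the ordinary least squares solution.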

# -*- coding: utf-8 -*-

"""

Created on Fri Mar 30 17:01:42 2018

@author: imace

"""

import numpy as np

X = []

y = []

# Each line holds comma-separated feature values followed by the target value

with open('C://Users/imace/Desktop/python_execise/Chapter01/data_multivar.txt', 'r') as f:

    for line in f:

        data = [float(i) for i in line.split(',')]

        xt, yt = data[:-1], data[-1]

        X.append(xt)

        y.append(yt)

# Train/test split

num_training = int(0.8 * len(X))

num_test = len(X) - num_training

# Training data

#X_train = np.array(X[:num_training]).reshape((num_training,1))

X_train = np.array(X[:num_training])

y_train = np.array(y[:num_training])

# Test data

#X_test = np.array(X[num_training:]).reshape((num_test,1))

X_test = np.array(X[num_training:])

y_test = np.array(y[num_training:])

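# Create the linear regression and ridge regression objects

from sklearn import linear_model

linear_regressor = linear_model.LinearRegression()

# alpha is the regularization strength; as alpha approaches 0, ridge approaches ordinary least squares

ridge_regressor = linear_model.Ridge(alpha=0.01, fit_intercept=True, max_iter=10000)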

# Train the model using the training sets

linear_regressor.fit(X_train, y_train)

ridge_regressor.fit(X_train, y_train)

# Predict the output

y_test_pred = linear_regressor.predict(X_test)

y_test_pred_ridge = ridge_regressor.predict(X_test)

# Measure performance

import sklearn.metrics as sm

print ("LINEAR:")

print ("Mean absolute error =", round(sm.mean_absolute_error(y_test, y_test_pred), 2) )

print ("Mean squared error =", round(sm.mean_squared_error(y_test, y_test_pred), 2) )

print ("Median absolute error =", round(sm.median_absolute_error(y_test, y_test_pred), 2) )

print ("Explained variance score =", round(sm.explained_variance_score(y_test, y_test_pred), 2) )

print ("R2 score =", round(sm.r2_score(y_test, y_test_pred), 2))

print ("\nRIDGE:")

print ("Mean absolute error =", round(sm.mean_absolute_error(y_test, y_test_pred_ridge), 2) )

print ("Mean squared error =", round(sm.mean_squared_error(y_test, y_test_pred_ridge), 2) )

print ("Median absolute error =", round(sm.median_absolute_error(y_test, y_test_pred_ridge), 2) )

print ("Explained variance score =", round(sm.explained_variance_score(y_test, y_test_pred_ridge), 2) )

print ("R2 score =", round(sm.r2_score(y_test, y_test_pred_ridge), 2))

# Polynomial regression

from sklearn.preprocessing import PolynomialFeatures

polynomial = PolynomialFeatures(degree=10)

X_train_transformed = polynomial.fit_transform(X_train)

datapoint = np.array([0.39,2.78,7.11]).reshape(1,-1)

# Reuse the transformer fitted on the training data instead of refitting it on the single datapoint

poly_datapoint = polynomial.transform(datapoint)

poly_linear_model = linear_model.LinearRegression()

poly_linear_model.fit(X_train_transformed, y_train)

print ("\nLinear regression:\n", linear_regressor.predict(datapoint))

print ("\nPolynomial regression:\n", poly_linear_model.predict(poly_datapoint))

# Stochastic Gradient Descent regressor

# n_iter was removed from newer scikit-learn releases; max_iter is its replacement

sgd_regressor = linear_model.SGDRegressor(loss='huber', max_iter=50)

sgd_regressor.fit(X_train, y_train)

print ("\nSGD regressor:\n", sgd_regressor.predict(datapoint))

Additional note:

datapoint = np.array([0.39,2.78,7.11]).reshape(1,-1)

print(datapoint)

This prints a single row:

[[0.39 2.78 7.11]]

datapoint = np.array([0.39,2.78,7.11]).reshape(-1,1)

print(datapoint)

This prints a single column:

[[0.39]

 [2.78]

 [7.11]]
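The distinction matters because scikit-learn estimators expect input of shape `(n_samples, n_features)`. The sketch below illustrates this with a hypothetical toy model (`X_toy` and `y_toy` are made up for the example and are not the data from this post): a single sample with three features has to be passed as one row, and the column layout is rejected.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Hypothetical toy training set: 4 samples with 3 features each
X_toy = np.array([[1.0, 2.0, 3.0],
                  [2.0, 0.0, 1.0],
                  [0.0, 1.0, 4.0],
                  [3.0, 3.0, 0.0]])
y_toy = np.array([6.0, 3.0, 5.0, 6.0])
model = LinearRegression().fit(X_toy, y_toy)

sample = np.array([0.39, 2.78, 7.11])
as_row = sample.reshape(1, -1)   # one sample, three features
as_col = sample.reshape(-1, 1)   # three samples, one feature each

print("row shape:", as_row.shape)
print("column shape:", as_col.shape)

# The row layout matches the three features the model was trained on
pred = model.predict(as_row)
print("prediction shape:", pred.shape)

# The column layout has only one feature per sample, so predict raises ValueError
col_rejected = False
try:
    model.predict(as_col)
except ValueError as err:
    col_rejected = True
    print("column layout rejected:", type(err).__name__)
```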

The program output is:

LINEAR:

Mean absolute error = 3.95

Mean squared error = 23.15

Median absolute error = 3.69

Explained variance score = 0.84

R2 score = 0.83

RIDGE:

Mean absolute error = 3.95

Mean squared error = 23.15

Median absolute error = 3.69

Explained variance score = 0.84

R2 score = 0.83

Linear regression:

[-11.0587295]

Polynomial regression:

[-8.14917282]

SGD regressor:

[-7.93671074]

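Judging from the results above, linear regression and ridge regression give the same metrics when rounded to two decimal places. This is expected with alpha=0.01: the penalty is so small that the ridge model is almost identical to ordinary least squares.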
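To see when the two models start to differ, the following sketch sweeps `alpha` over a few values. It uses synthetic data (an assumption, since `data_multivar.txt` is not reproduced here), so the numbers are only illustrative; the alpha values other than 0.01 are also arbitrary choices.

```python
import numpy as np
from sklearn.linear_model import LinearRegression, Ridge

# Synthetic stand-in data (assumption: 200 samples, 3 features, unit Gaussian noise)
rng = np.random.default_rng(42)
X_syn = rng.normal(size=(200, 3))
y_syn = X_syn @ np.array([3.0, -1.0, 2.0]) + rng.normal(scale=1.0, size=200)

# Baseline: ordinary least squares coefficients
ols_coef = LinearRegression().fit(X_syn, y_syn).coef_

# Largest absolute coefficient difference between ridge and OLS for each alpha
diffs = {}
for alpha in (0.01, 1.0, 100.0, 10000.0):
    ridge_coef = Ridge(alpha=alpha).fit(X_syn, y_syn).coef_
    diffs[alpha] = float(np.max(np.abs(ridge_coef - ols_coef)))
    print(f"alpha={alpha:>8}: max |ridge - OLS| coefficient gap = {diffs[alpha]:.6f}")
```

On data like this, the gap at `alpha=0.01` is far below the two-decimal rounding used in the metrics above, while larger values of `alpha` shrink the coefficients visibly.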

Edited on 2019-03-20