参考链接:https://blog.csdn.net/dy_guox/article/details/79111949

之前参考上述一系列博客在Windows10下面成功运行了TensorFlow Android Demo,接下来就是尝试运用TensorFlow object detection API搭建自己的目标检测模型并迁移到Android上。

一、创建训练测试集

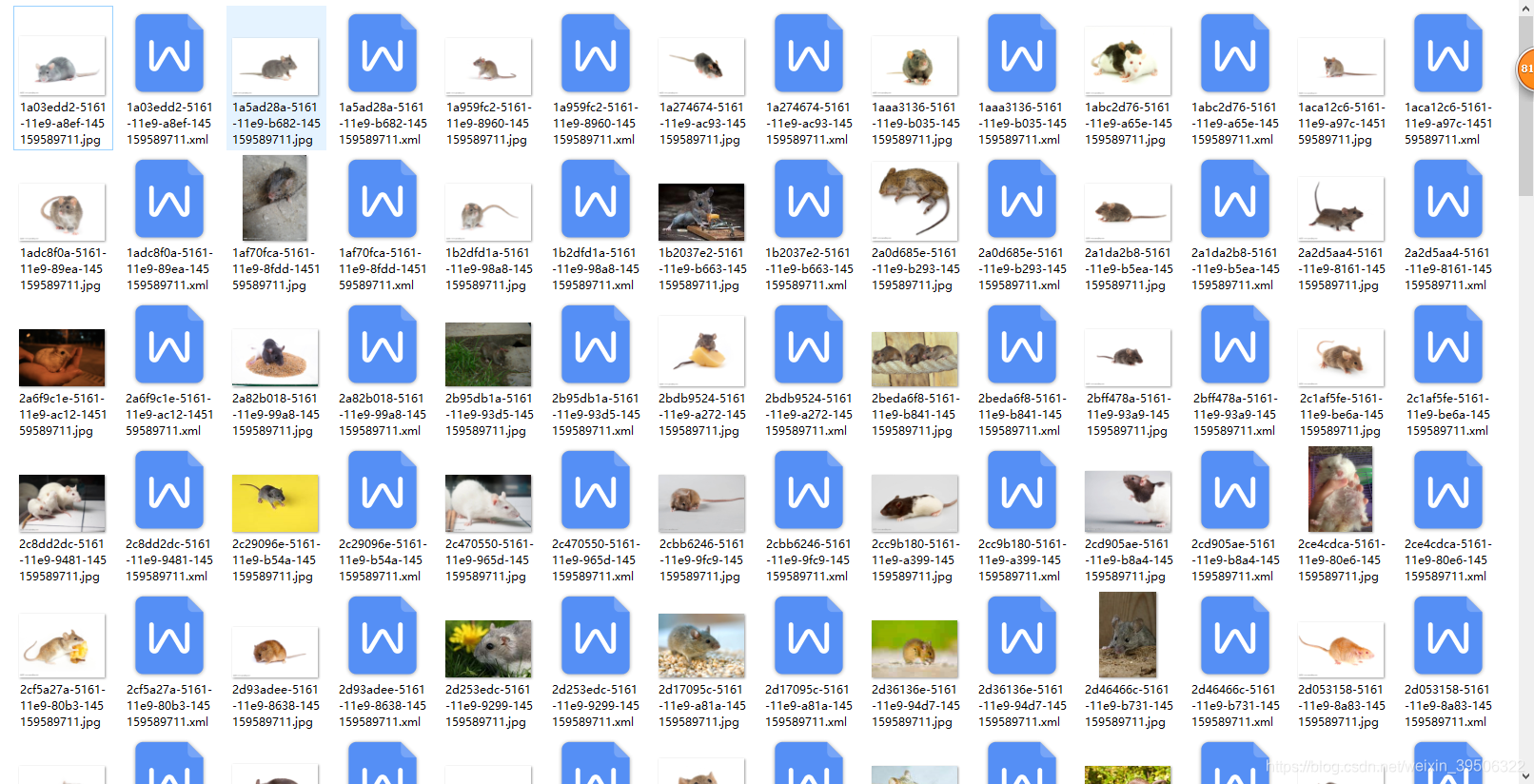

这里我从爬虫在百度图片搜索上爬取了100多张关键词为‘老鼠’的图片,最终选取出train图片160张,test图片12张。爬虫代码如下:

# -*- coding:utf-8 -*-

import re

import uuid

import requests

import os

class DownloadImages:

def __init__(self, download_max, key_word):

self.download_sum = 0

self.download_max = download_max

self.key_word = key_word

self.save_path = '../data/mouse/images/'

def start_download(self):

self.download_sum = 0

gsm = 80

str_gsm = str(gsm)

pn = 0

if not os.path.exists(self.save_path):

os.makedirs(self.save_path)

while self.download_sum < self.download_max:

str_pn = str(self.download_sum)

url = 'http://image.baidu.com/search/flip?tn=baiduimage&ie=utf-8&' \

'word=' + self.key_word + '&pn=' + str_pn + '&gsm=' + str_gsm + '&ct=&ic=0&lm=-1&width=0&height=0'

print (url)

result = requests.get(url)

self.downloadImages(result.text)

print ('下载完成')

def downloadImages(self, html):

img_urls = re.findall('"objURL":"(.*?)",', html, re.S)

print ('找到关键词:' + self.key_word + '的图片,现在开始下载图片...')

for img_url in img_urls:

print ('正在下载第' + str(self.download_sum + 1) + '张图片,图片地址:' + str(img_url))

try:

pic = requests.get(img_url, timeout=50)

pic_name = self.save_path + '/' + str(uuid.uuid1()) + '.jpg'

with open(pic_name, 'wb') as f:

f.write(pic.content)

self.download_sum += 1

if self.download_sum >= self.download_max:

break

except Exception as e:

print ('【错误】当前图片无法下载,%s' % e)

continue

if __name__ == '__main__':

downloadImages = DownloadImages(200, '老鼠')

downloadImages.start_download()

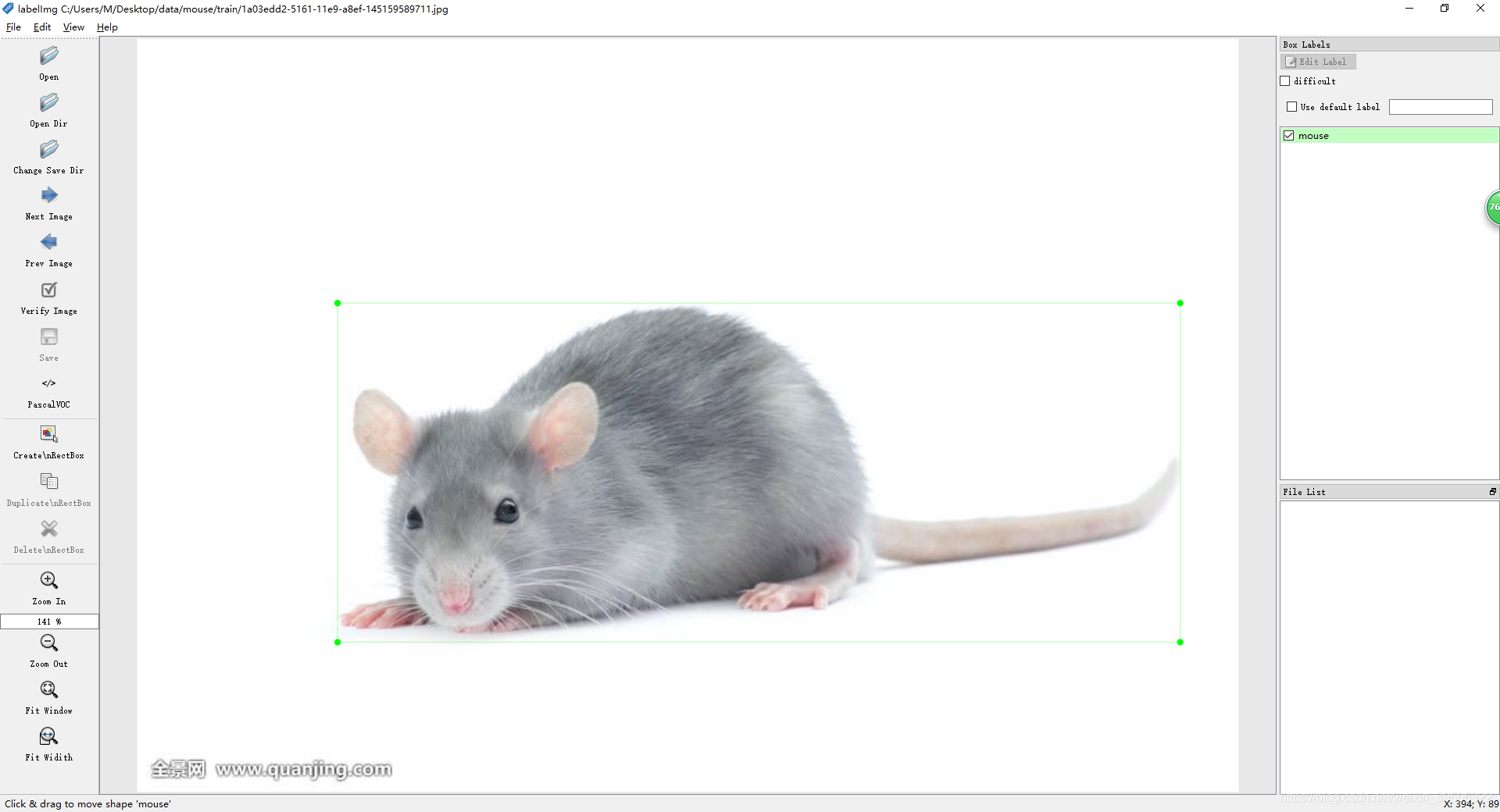

二、使用LabelImg对训练图片和测试图片中的mouse进行rectangle_box的标注,同时生成每一张图片所对应的xml文件。

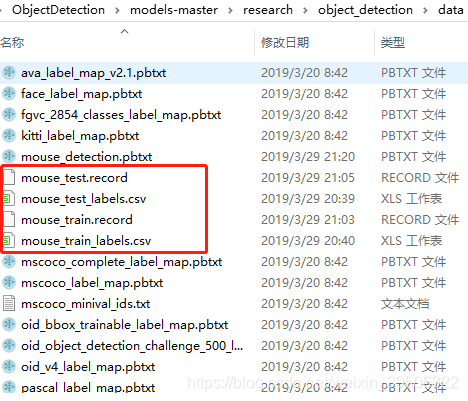

三、把生成的xml文件先是转化成csv格式的文件(xml_to_csv.py),再将csv文件转化成用于TensorFlow训练的tfrecord格式(generate_tfrecord.py)。

xml_to_csv.py代码:

# -*- coding: utf-8 -*-

"""

Created on Tue Jan 16 00:52:02 2018

@author: Xiang Guo

将文件夹内所有XML文件的信息记录到CSV文件中

"""

import os

import glob

import pandas as pd

import xml.etree.ElementTree as ET

os.chdir('C:\\Users\\M\\Desktop\\data\\mouse\\train')

path = 'C:\\Users\\M\\Desktop\\data\\mouse\\train'

def xml_to_csv(path):

xml_list = []

for xml_file in glob.glob(path + '/*.xml'):

tree = ET.parse(xml_file)

root = tree.getroot()

for member in root.findall('object'):

value = (root.find('filename').text,

int(root.find('size')[0].text),

int(root.find('size')[1].text),

member[0].text,

int(member[4][0].text),

int(member[4][1].text),

int(member[4][2].text),

int(member[4][3].text)

)

xml_list.append(value)

column_name = ['filename', 'width', 'height', 'class', 'xmin', 'ymin', 'xmax', 'ymax']

xml_df = pd.DataFrame(xml_list, columns=column_name)

return xml_df

def main():

image_path = path

xml_df = xml_to_csv(image_path)

xml_df.to_csv('mouse_train_labels.csv', index=None)

print('Successfully converted xml to csv.')

main()

generate_tfrecord.py代码:

# -*- coding: utf-8 -*-

"""

Created on Tue Jan 16 01:04:55 2018

@author: Xiang Guo

由CSV文件生成TFRecord文件

"""

"""

Usage:

# From tensorflow/models/

# Create train data:

python generate_tfrecord.py --csv_input=data/mouse_train_labels.csv --output_path=mouse_train.record

# Create test data:

python generate_tfrecord.py --csv_input=data/mouse_test_labels.csv --output_path=mouse_test.record

"""

import os

import io

import pandas as pd

import tensorflow as tf

from PIL import Image

from object_detection.utils import dataset_util

from collections import namedtuple, OrderedDict

os.chdir('E:\\tf\\ObjectDetection\\models-master\\research\\object_detection\\')

flags = tf.app.flags

flags.DEFINE_string('csv_input', '', 'Path to the CSV input')

flags.DEFINE_string('output_path', '', 'Path to output TFRecord')

FLAGS = flags.FLAGS

# TO-DO replace this with label map

# 注意将对应的label改成自己的类别!!!!!!!!!!

def class_text_to_int(row_label):

if row_label == 'mouse':

return 1

else:

None

def split(df, group):

data = namedtuple('data', ['filename', 'object'])

gb = df.groupby(group)

return [data(filename, gb.get_group(x)) for filename, x in zip(gb.groups.keys(), gb.groups)]

def create_tf_example(group, path):

with tf.gfile.GFile(os.path.join(path, '{}'.format(group.filename)), 'rb') as fid:

encoded_jpg = fid.read()

encoded_jpg_io = io.BytesIO(encoded_jpg)

image = Image.open(encoded_jpg_io)

width, height = image.size

filename = group.filename.encode('utf8')

image_format = b'jpg'

xmins = []

xmaxs = []

ymins = []

ymaxs = []

classes_text = []

classes = []

for index, row in group.object.iterrows():

xmins.append(row['xmin'] / width)

xmaxs.append(row['xmax'] / width)

ymins.append(row['ymin'] / height)

ymaxs.append(row['ymax'] / height)

classes_text.append(row['class'].encode('utf8'))

classes.append(class_text_to_int(row['class']))

tf_example = tf.train.Example(features=tf.train.Features(feature={

'image/height': dataset_util.int64_feature(height),

'image/width': dataset_util.int64_feature(width),

'image/filename': dataset_util.bytes_feature(filename),

'image/source_id': dataset_util.bytes_feature(filename),

'image/encoded': dataset_util.bytes_feature(encoded_jpg),

'image/format': dataset_util.bytes_feature(image_format),

'image/object/bbox/xmin': dataset_util.float_list_feature(xmins),

'image/object/bbox/xmax': dataset_util.float_list_feature(xmaxs),

'image/object/bbox/ymin': dataset_util.float_list_feature(ymins),

'image/object/bbox/ymax': dataset_util.float_list_feature(ymaxs),

'image/object/class/text': dataset_util.bytes_list_feature(classes_text),

'image/object/class/label': dataset_util.int64_list_feature(classes),

}))

return tf_example

def main(_):

writer = tf.python_io.TFRecordWriter(FLAGS.output_path)

path = os.path.join(os.getcwd(), 'images')

examples = pd.read_csv(FLAGS.csv_input)

grouped = split(examples, 'filename')

for group in grouped:

tf_example = create_tf_example(group, path)

writer.write(tf_example.SerializeToString())

writer.close()

output_path = os.path.join(os.getcwd(), FLAGS.output_path)

print('Successfully created the TFRecords: {}'.format(output_path))

if __name__ == '__main__':

tf.app.run()

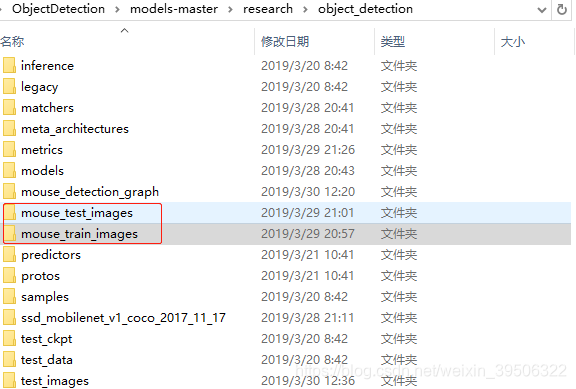

四、配置文件和模型

把我们自己做的数据集,放在objection下面,文件结构如下:

配置一:

--object_detection

--mouse_train_images

--训练的图片

--训练的图片生成的xml文件

--mouse_test_images

--测试的图片

--测试的图片生成的xml文件

配置二:

--object_detection

--data

--mouse_train_labels.csv

--mouse_train.record

--mouse_test_labels.csv

--mouse_test.record

配置三:

接下来需要设置配置文件, 进入 Object Detection github 对应页面 寻找配置文件的Sample。

以 ssd_mobilenet_v1_coco.config 为例,在 object_dection文件夹下,解压 ssd_mobilenet_v1_coco_2017_11_17.tar.gz,

将ssd_mobilenet_v1_coco.config 放在training(需要自己新建一个training文件夹) 文件夹下,用文本编辑器打开(我用的sublime 3),进行如下操作:

1、搜索其中的 PATH_TO_BE_CONFIGURED ,将对应的路径改为自己的路径,注意不要把test跟train弄反了;

2、将 num_classes 按照实际情况更改,我的例子中是1;

3、batch_size 原本是24,我在运行的时候出现显存不足的问题,为了保险起见,改为1,如果1还是出现类似问题的话,建议换电脑……

4、fine_tune_checkpoint: "ssd_mobilenet_v1_coco_11_06_2017/model.ckpt"

from_detection_checkpoint: true

这两行是设置checkpoint,但是会出现显存不足的问题(电脑GPU显存不足的情况下),这里是从预先训练的模型中寻找checkpoint,可能是因为原先的模型是基于较大规模的公开数据集训练的,因此配置到本地的时候出现了问题,删除这两行后,相当于从头开始训练,最后正常了,因此如果是从头开始训练,建议把这两行删除。

# SSD with Mobilenet v1 configuration for MSCOCO Dataset.

# Users should configure the fine_tune_checkpoint field in the train config as

# well as the label_map_path and input_path fields in the train_input_reader and

# eval_input_reader. Search for "PATH_TO_BE_CONFIGURED" to find the fields that

# should be configured.

model {

ssd {

num_classes: 1

box_coder {

faster_rcnn_box_coder {

y_scale: 10.0

x_scale: 10.0

height_scale: 5.0

width_scale: 5.0

}

}

matcher {

argmax_matcher {

matched_threshold: 0.5

unmatched_threshold: 0.5

ignore_thresholds: false

negatives_lower_than_unmatched: true

force_match_for_each_row: true

}

}

similarity_calculator {

iou_similarity {

}

}

anchor_generator {

ssd_anchor_generator {

num_layers: 6

min_scale: 0.2

max_scale: 0.95

aspect_ratios: 1.0

aspect_ratios: 2.0

aspect_ratios: 0.5

aspect_ratios: 3.0

aspect_ratios: 0.3333

}

}

image_resizer {

fixed_shape_resizer {

height: 300

width: 300

}

}

box_predictor {

convolutional_box_predictor {

min_depth: 0

max_depth: 0

num_layers_before_predictor: 0

use_dropout: false

dropout_keep_probability: 0.8

kernel_size: 1

box_code_size: 4

apply_sigmoid_to_scores: false

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_initializer {

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

}

feature_extractor {

type: 'ssd_mobilenet_v1'

min_depth: 16

depth_multiplier: 1.0

conv_hyperparams {

activation: RELU_6,

regularizer {

l2_regularizer {

weight: 0.00004

}

}

initializer {

truncated_normal_initializer {

stddev: 0.03

mean: 0.0

}

}

batch_norm {

train: true,

scale: true,

center: true,

decay: 0.9997,

epsilon: 0.001,

}

}

}

loss {

classification_loss {

weighted_sigmoid {

}

}

localization_loss {

weighted_smooth_l1 {

}

}

hard_example_miner {

num_hard_examples: 3000

iou_threshold: 0.99

loss_type: CLASSIFICATION

max_negatives_per_positive: 3

min_negatives_per_image: 0

}

classification_weight: 1.0

localization_weight: 1.0

}

normalize_loss_by_num_matches: true

post_processing {

batch_non_max_suppression {

score_threshold: 1e-8

iou_threshold: 0.6

max_detections_per_class: 100

max_total_detections: 100

}

score_converter: SIGMOID

}

}

}

train_config: {

batch_size: 1

optimizer {

rms_prop_optimizer: {

learning_rate: {

exponential_decay_learning_rate {

initial_learning_rate: 0.004

decay_steps: 800720

decay_factor: 0.95

}

}

momentum_optimizer_value: 0.9

decay: 0.9

epsilon: 1.0

}

}

#fine_tune_checkpoint: "PATH_TO_BE_CONFIGURED/model.ckpt"

#from_detection_checkpoint: true

# Note: The below line limits the training process to 200K steps, which we

# empirically found to be sufficient enough to train the pets dataset. This

# effectively bypasses the learning rate schedule (the learning rate will

# never decay). Remove the below line to train indefinitely.

num_steps: 200000

data_augmentation_options {

random_horizontal_flip {

}

}

data_augmentation_options {

ssd_random_crop {

}

}

}

train_input_reader: {

tf_record_input_reader {

input_path: "data/mouse_train.record"

}

label_map_path: "data/mouse_detection.pbtxt"

}

eval_config: {

num_examples: 8000

# Note: The below line limits the evaluation process to 10 evaluations.

# Remove the below line to evaluate indefinitely.

max_evals: 10

}

eval_input_reader: {

tf_record_input_reader {

input_path: "data/mouse_test.record"

}

label_map_path: "data/mouse_detection.pbtxt"

shuffle: false

num_readers: 1

}上一个config文件中 label_map_path: "data/mouse_detection.pbtxt" 必须始终保持一致。

此时在对应目录(/data)下,创建一个 mouse_detection.pbtxt的文本文件(可以复制一个其他名字的文件,然后用文本编辑软件打开修改),写入我们的标签,我的例子中是两个,id序号注意与前面创建CSV文件时保持一致,从1开始。

item {

id: 1

name: 'mouse'

}五、训练模型

模型的训练是基于有GPU的情形下的,在Tensorflow Object Detection API最新版本中,训练文件已经改为 :

model_main.py用Anaconda Prompt 定位到 models\research\object_detection文件夹下,运行如下命令:

# From the tensorflow/models/research/object_detection directory

python model_main.py \

--pipeline_config_path=training/ssd_mobilenet_v1_coco.config \

--model_dir=training \

--num_train_steps=20000 \

--num_eval_steps=2000 \

--alsologtostderr

设置训练步数为20000,评估步数为2000

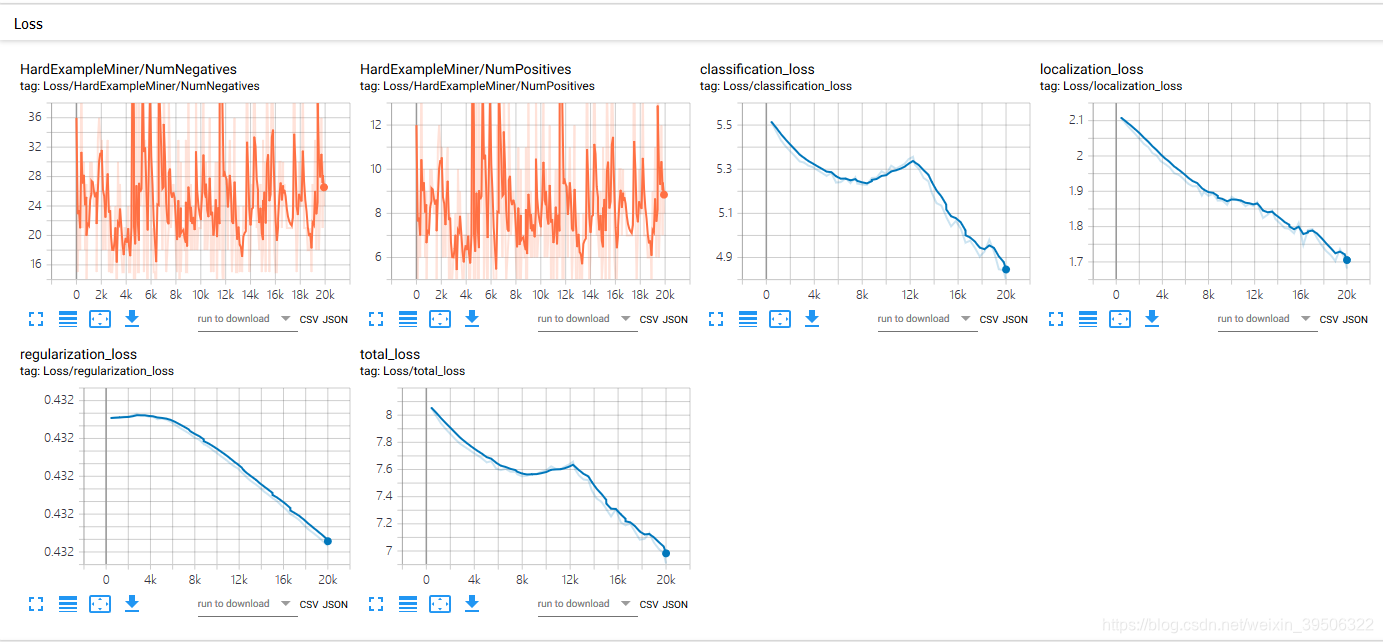

同时我们可以用Tensorboard来可视化训练过程。

Anaconda Prompt 定位到 models\research\object_detection 文件夹下,运行

tensorboard --logdir=training

可视化图如下:

接着我们可以测试一下目前的模型效果,关闭命令行。在 models\research\object_detection 文件夹下找到 export_inference_graph.py 文件,要运行这个文件,还需要传入config以及checkpoint的相关参数。

Anaconda Prompt 定位到 models\research\object_detection 文件夹下,运行

python export_inference_graph.py \

--input_type image_tensor \

--pipeline_config_path training/ssd_mobilenet_v1_coco.config \

--trained_checkpoint_prefix training/model.ckpt-20000 \

--output_directory mouse_detection_graph

--trained_checkpoint_prefix training/model.ckpt-20000 这个checkpoint(.ckpt-后面的数字)可以在training文件夹下找到你自己训练的模型的情况,填上对应的数字(如果有多个,选迭代步数最大的)。

--output_directory mouse_detection_graph 改成自己的名字

注意,训练的时候可能会报错,提示ModuleNotFoundError: No module named 'pycocotools'

解决方法:

1、pip install Cython

2、conda install git

3、pip install git+https://github.com/philferriere/cocoapi.git#egg=pycocotools^&subdirectory=PythonAPI

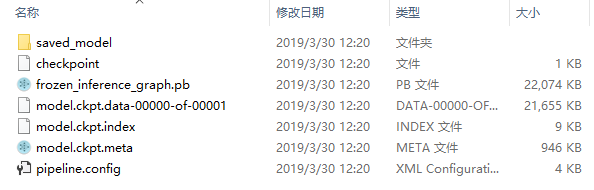

运行完后,可以在mouse_detection_graph 文件夹下发现若干文件,有saved_model、checkpoint、frozen_inference_graph.pb等。 .pb结尾的就是最重要的frozen model了,就是我们在后面(测试和模型迁移到Android)要用到的部分。

四、测试模型并输出

现在已有对应的训练模型,然后将models\research\object_detection文件夹下的object_detection_tutorial.ipynb文件进行复制粘贴到当前路径下,然后重命名为object_detection_mouse.ipynb(你自己文件的名字),接着用Anaconda Prompt定位到models\research\object_detection 文件夹下并打开jupyter notebook,找到object_detection_mouse.ipynb文件并根据自己的实际情况修改路径等参数。

打开jupyter notebook如下所示:

(tensorflow-3.6) E:\tf\ObjectDetection\models-master\research\object_detection>jupyter notebook --NotebookApp.iopub_data_rate_limit=2147483647测试代码如下:

import numpy as np

import os

import six.moves.urllib as urllib

import sys

import tarfile

import tensorflow as tf

import zipfile

from distutils.version import StrictVersion

from collections import defaultdict

from io import StringIO

from matplotlib import pyplot as plt

from PIL import Image

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

from object_detection.utils import ops as utils_ops

if StrictVersion(tf.__version__) < StrictVersion('1.12.0'):

raise ImportError('Please upgrade your TensorFlow installation to v1.12.*.')

# This is needed to display the images.

%matplotlib inline

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

from utils import label_map_util

from utils import visualization_utils as vis_util

# 下载模型的名字

MODEL_NAME = 'mouse_detection_graph'

#MODEL_FILE = MODEL_NAME + '.tar.gz'

#DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

PATH_TO_LABELS = os.path.join('data', 'mouse_detection.pbtxt')

NUM_CLASSES = 1

detection_graph = tf.Graph()

with detection_graph.as_default():

od_graph_def = tf.GraphDef()

with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:

serialized_graph = fid.read()

od_graph_def.ParseFromString(serialized_graph)

tf.import_graph_def(od_graph_def, name='')

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)

categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)

category_index = label_map_util.create_category_index(categories)

def load_image_into_numpy_array(image):

(im_width, im_height) = image.size

return np.array(image.getdata()).reshape(

(im_height, im_width, 3)).astype(np.uint8)

# For the sake of simplicity we will use only 2 images:

# image1.jpg

# image2.jpg

# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.

PATH_TO_TEST_IMAGES_DIR = 'test_images'

TEST_IMAGE_PATHS = [ os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 13) ]

# Size, in inches, of the output images.

IMAGE_SIZE = (12, 8)

with detection_graph.as_default():

with tf.Session(graph=detection_graph) as sess:

# Definite input and output Tensors for detection_graph

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

# Each box represents a part of the image where a particular object was detected.

detection_boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

# Each score represent how level of confidence for each of the objects.

# Score is shown on the result image, together with the class label.

detection_scores = detection_graph.get_tensor_by_name('detection_scores:0')

detection_classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

for image_path in TEST_IMAGE_PATHS:

image = Image.open(image_path)

# the array based representation of the image will be used later in order to prepare the

# result image with boxes and labels on it.

image_np = load_image_into_numpy_array(image)

# Expand dimensions since the model expects images to have shape: [1, None, None, 3]

image_np_expanded = np.expand_dims(image_np, axis=0)

# Actual detection.

(boxes, scores, classes, num) = sess.run(

[detection_boxes, detection_scores, detection_classes, num_detections],

feed_dict={image_tensor: image_np_expanded})

# Visualization of the results of a detection.

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

np.squeeze(boxes),

np.squeeze(classes).astype(np.int32),

np.squeeze(scores),

category_index,

use_normalized_coordinates=True,

line_thickness=8)

plt.figure(figsize=IMAGE_SIZE)

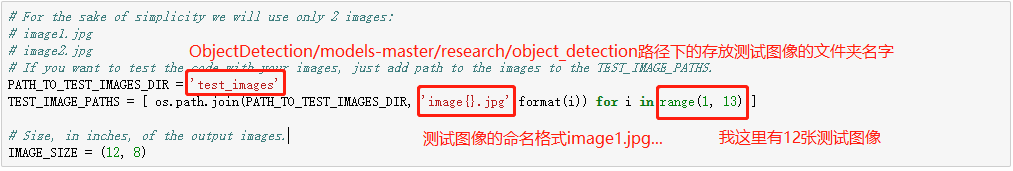

plt.imshow(image_np)代码需要修改的地方包括:

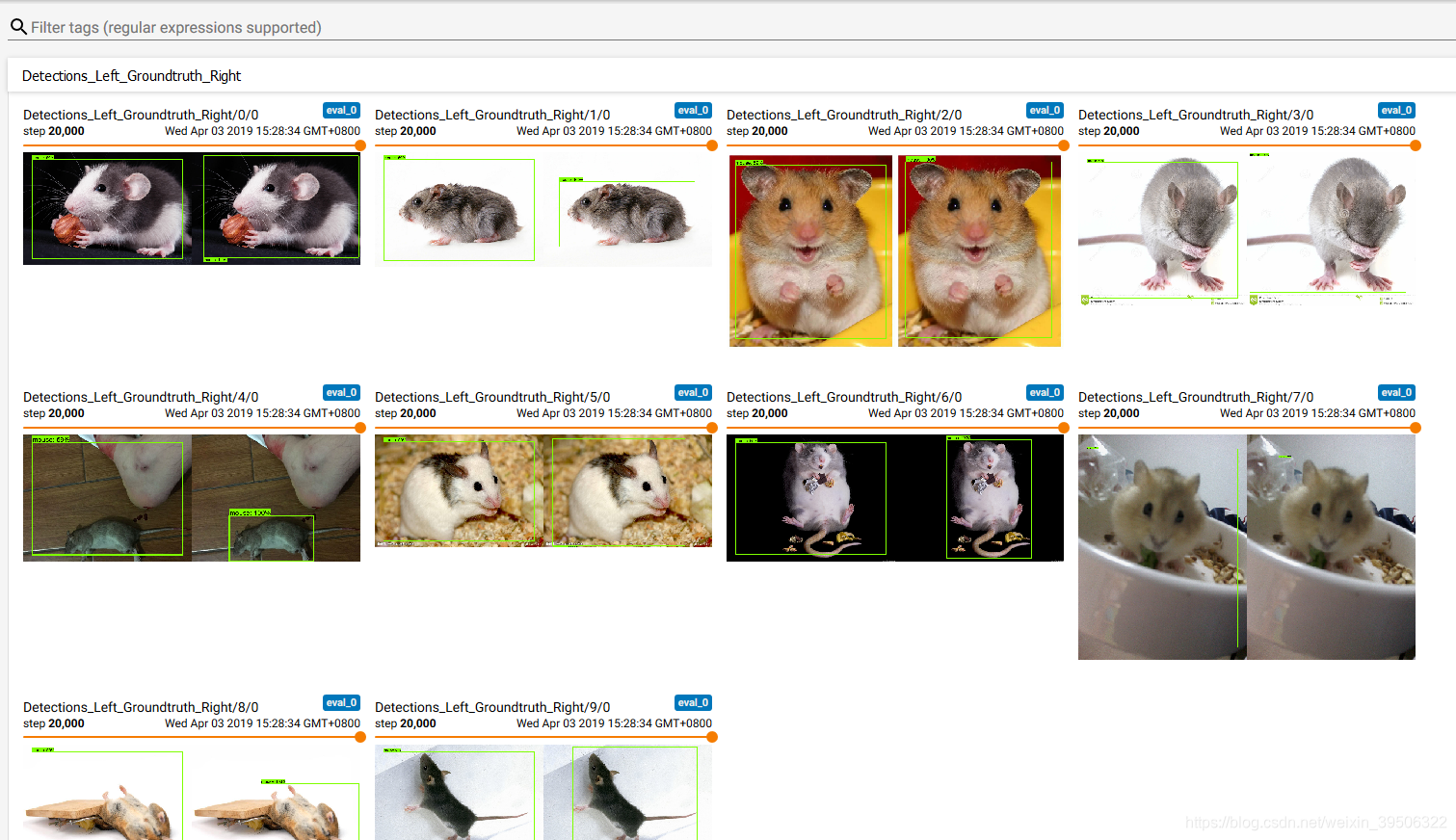

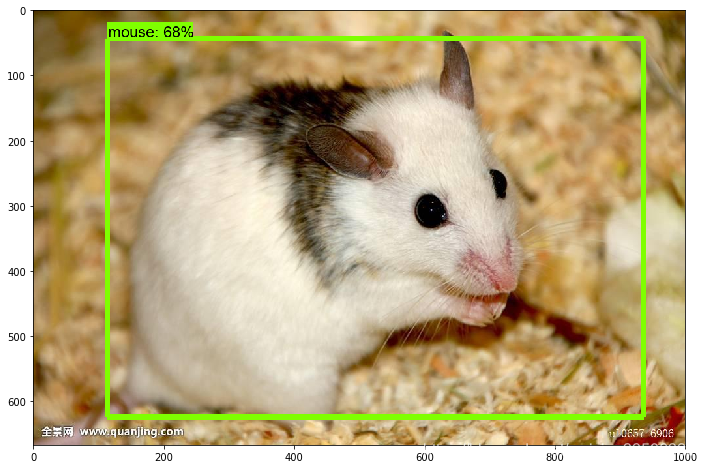

测试结果

测试结果:

五、把自己训练生成的模型迁移到Android上

首先先根据提示把Demo跑起来,后面的工作在此基础上进行。可参考:

在Windows10上运行TensorFlow Android Demo实例 https://blog.csdn.net/weixin_39506322/article/details/88871309

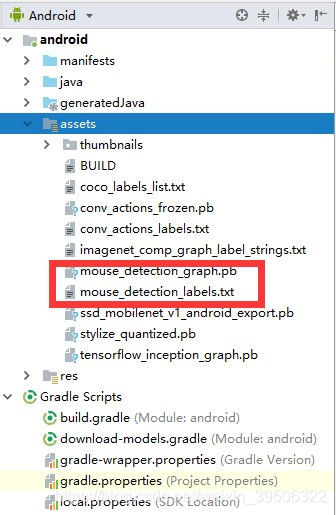

文件配置:

1)将之前训练好的识别老鼠mouse的模型 mouse_detection_graph.pb 放在 Android文件夹中的 assets

文件夹下。

2)继续在当前文件夹下,能发现数个 ‘...labels.txt’ 文件,复制其中任意一个,重命名为 “mouse_detection_labels.txt”用于储存标签,名字随意起,只要你自己能理解就行。打开 “mouse_detection_labels.txt”,建议用 Sublime Text,直接用记事本打开所有标签会挤在同一行。在Sublime text中,发现第一行是“???”,不用管,后面每一行是一个标签,由于我的任务只有一个,就是“mouse”,注意标签跟之前训练模型时候保持一致。保存退出。、

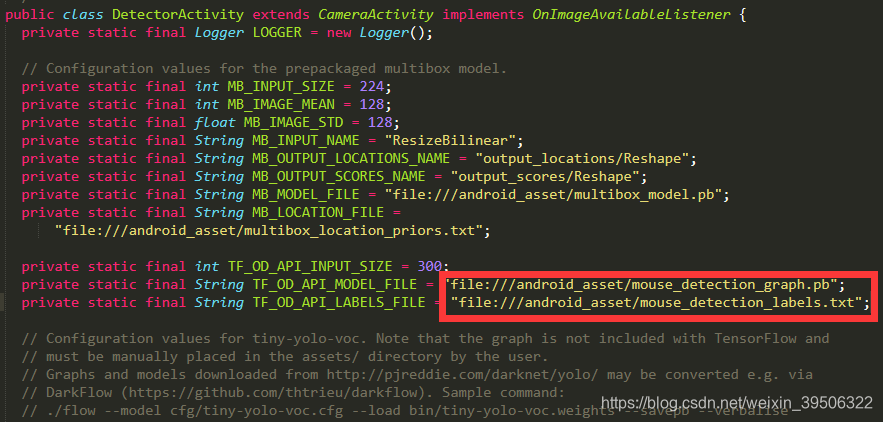

3) 查找 “DetectorActivity.java”文件,用Sublime text 打开,找到 TF_OD_API_MODEL_FILE 与 TF_OD_API_LABELS_FILE 这两个变量,前一个改成自己模型的路径,如 "file:///android_asset/mouse_detection_graph.pb",后一个改成标签 .txt文件的路径,如 "file:///android_asset/mouse_detection_labels.txt"

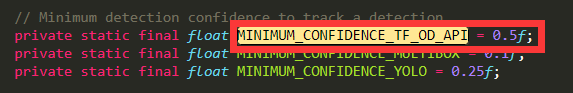

4 )“DetectorActivity.java” 文件中 MINIMUM_CONFIDENCE_TF_OD_API 变量,决定最低多少置信度画识别的框,如果发现移植到手机上的模型效果不理想,可以把这个值调低,就会更容易识别出物体(但是也更容易误识别),根据自己的实际运行效果测试修改。

5 )参考Demo中进行手机调试和“run”,重新生成的新的TF Detect将是我们训练好的用于检测mouse的模型。