ps:网上有很多记录这个api的使用啦,这里记录一下我在自己电脑上的实现情况:

欢迎大家光临我的博客

下载

首先就是下载这个API

TensorFlow 模型库包含了很多开源的模型,包括图像分类、检测、自然语言处理NLP、视频预测、图像理解等等,我们要学习的对象检测API也包括在这里面,可以用git checkout到本地,也可以直接在github下载zip包

安装

安装可以参照这篇文章:

值得注意的是我在这里之前使用tensorflow2版本后续一直会出现错误,要不就是缺少某些模块(ImportError),要不就是缺少属性(AttributeError)

所以我最后新建了一个环境采用的是tensorflow1.14.0版本。

在运行代码:python object_detection/builders/model_builder_test.py 进行测试时,可能会出现这样的错误:

ModuleNotFoundError: No module named 'object_detection'

好多说这是由于环境变量设置的问题,然后balabala,

但我是通过这个解决的 上链接

测试

我们来通过一个程序来测试一下:

import numpy as np

import os

import six.moves.urllib as urllib

import sys

import tarfile

import tensorflow as tf

import zipfile

from collections import defaultdict

from io import StringIO

from matplotlib import pyplot as plt

from PIL import Image

# # This is needed to display the images.

# %matplotlib inline

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

# from utils import label_map_util

# from utils import visualization_utils as vis_util

from research.object_detection.utils import label_map_util

from research.object_detection.utils import visualization_utils as vis_util

# What model to download.

MODEL_NAME = 'ssd_mobilenet_v1_coco_2017_11_17'

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

#PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

PATH_TO_LABELS='/home/daniel/tensorflow/models/research/object_detection/data/mscoco_label_map.pbtxt'

NUM_CLASSES = 90

# download model

#opener = urllib.request.URLopener()

# 下载模型,如果已经下载好了下面这句代码可以注释掉

#opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)

#tar_file = tarfile.open(MODEL_FILE)

#for file in tar_file.getmembers():

# file_name = os.path.basename(file.name)

# if 'frozen_inference_graph.pb' in file_name:

# tar_file.extract(file, os.getcwd())

# Load a (frozen) Tensorflow model into memory.

detection_graph = tf.Graph()

with detection_graph.as_default():

od_graph_def = tf.GraphDef()

with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:

serialized_graph = fid.read()

od_graph_def.ParseFromString(serialized_graph)

tf.import_graph_def(od_graph_def, name='')

# Loading label map

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)

categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES,

use_display_name=True)

category_index = label_map_util.create_category_index(categories)

# Helper code

def load_image_into_numpy_array(image):

(im_width, im_height) = image.size

return np.array(image.getdata()).reshape(

(im_height, im_width, 3)).astype(np.uint8)

# For the sake of simplicity we will use only 2 images:

# image1.jpg

# image2.jpg

# If you want to test the code with your images, just add path to the images to the TEST_IMAGE_PATHS.

PATH_TO_TEST_IMAGES_DIR = '/home/daniel/tensorflow/models/research/object_detection/test_images'

TEST_IMAGE_PATHS = [os.path.join(PATH_TO_TEST_IMAGES_DIR, 'image{}.jpg'.format(i)) for i in range(1, 5)]

# Size, in inches, of the output images.

IMAGE_SIZE = (12, 8)

with detection_graph.as_default():

with tf.Session(graph=detection_graph) as sess:

# Definite input and output Tensors for detection_graph

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

# Each box represents a part of the image where a particular object was detected.

detection_boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

# Each score represent how level of confidence for each of the objects.

# Score is shown on the result image, together with the class label.

detection_scores = detection_graph.get_tensor_by_name('detection_scores:0')

detection_classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

for image_path in TEST_IMAGE_PATHS:

image = Image.open(image_path)

# the array based representation of the image will be used later in order to prepare the

# result image with boxes and labels on it.

image_np = load_image_into_numpy_array(image)

# Expand dimensions since the model expects images to have shape: [1, None, None, 3]

image_np_expanded = np.expand_dims(image_np, axis=0)

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

# Each box represents a part of the image where a particular object was detected.

boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

# Each score represent how level of confidence for each of the objects.

# Score is shown on the result image, together with the class label.

scores = detection_graph.get_tensor_by_name('detection_scores:0')

classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

# Actual detection.

(boxes, scores, classes, num_detections) = sess.run(

[boxes, scores, classes, num_detections],

feed_dict={

image_tensor: image_np_expanded})

# Visualization of the results of a detection.

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

np.squeeze(boxes),

np.squeeze(classes).astype(np.int32),

np.squeeze(scores),

category_index,

use_normalized_coordinates=True,

line_thickness=8)

plt.figure(figsize=IMAGE_SIZE)

plt.imshow(image_np)

plt.show()

值得注意的点:

1。 如果用代码形式来下载模型文件可能会很慢,所以我把下载的这段代码给注释掉了

#opener = urllib.request.URLopener()

# 下载模型,如果已经下载好了下面这句代码可以注释掉

#opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)

#tar_file = tarfile.open(MODEL_FILE)

#for file in tar_file.getmembers():

# file_name = os.path.basename(file.name)

# if 'frozen_inference_graph.pb' in file_name:

# tar_file.extract(file, os.getcwd())

我们可以事先下载好。模型文件请摸这里

然后解压到相应目录(我这里解压缩到/home/daniel/tensorflow/models/research/object_detection目录下),确保存在object_detection/ssd_mobilenet_v1_coco_2017_11_17/frozen_inference_graph.pb 文件

frozen_inference_graph.pb文件它保存了网络的结构和数据。后续我们就需要利用它来预测图像中的物体。

mscoco_label_map.pbtxt文件主要是将网络预测后的结果与实际要表述的物体相匹配,例如网络中输出[1,0,0,0,0,0,0,…]’ 则表述的是person。 若输出[0,1,0,0,0,…]则表述的是bicycle。

2。路径问题:

之前使用

#PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

它一直提醒我找不到路径,我也不知道是为啥。

所以我就直接使用了绝对路径。ps:有知道的小伙伴麻烦告知一下是为什么。

3。 matplotlib 问题:

运行时,可能会出现图片显示不出来的原因。报这个错误:

UserWarning: Matplotlib is currently using agg, which is a non-GUI backend.

这是因为我们设置了matplotlib的显示方式。

解决办法:编辑tensorflow所在目录下的 models/research/object_detection/uitls/visualization_utils.py

修改26行的Agg为Qt5Agg

import matplotlib; matplotlib.use('Qt5Agg')

问题解决!!!

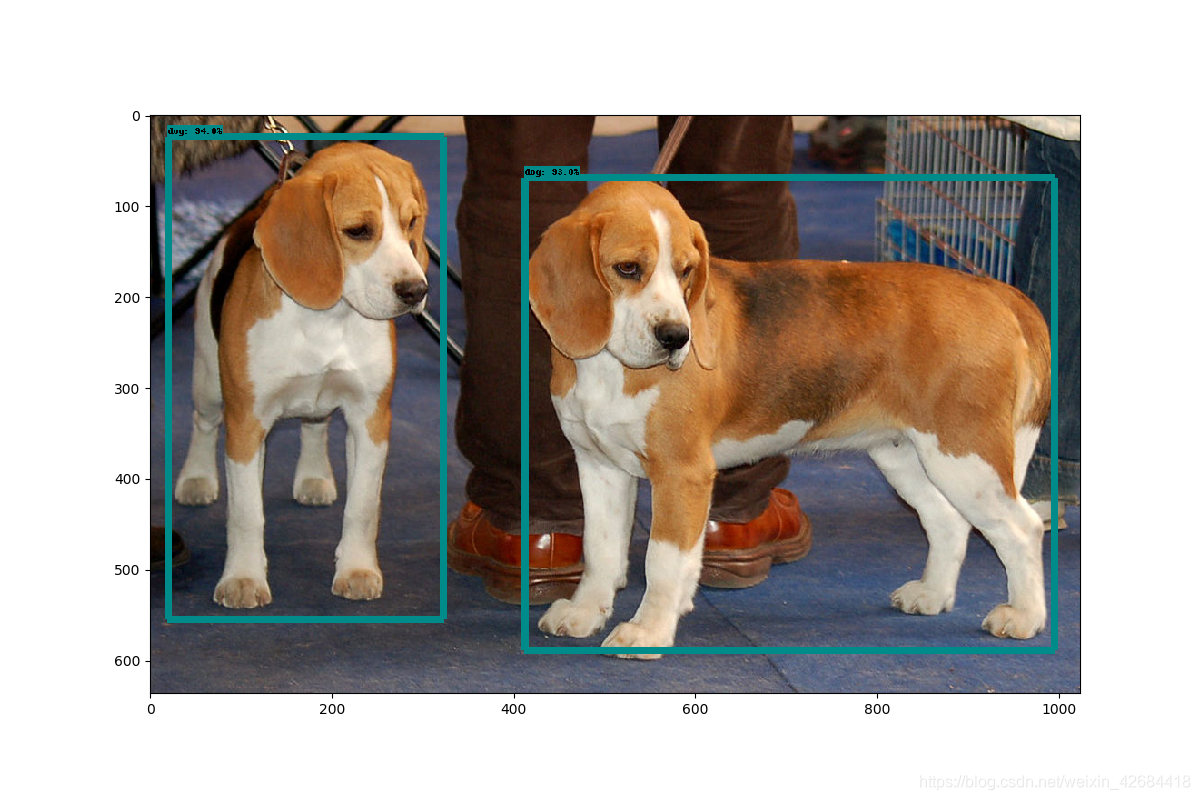

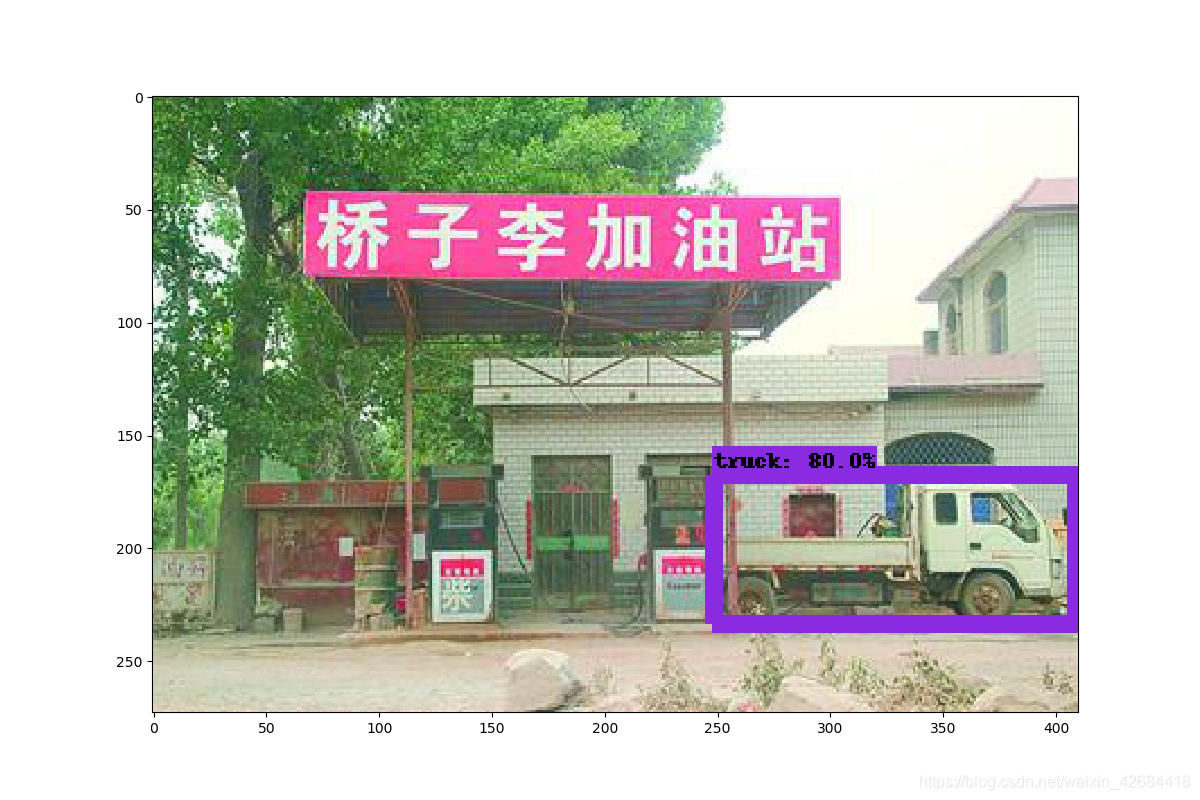

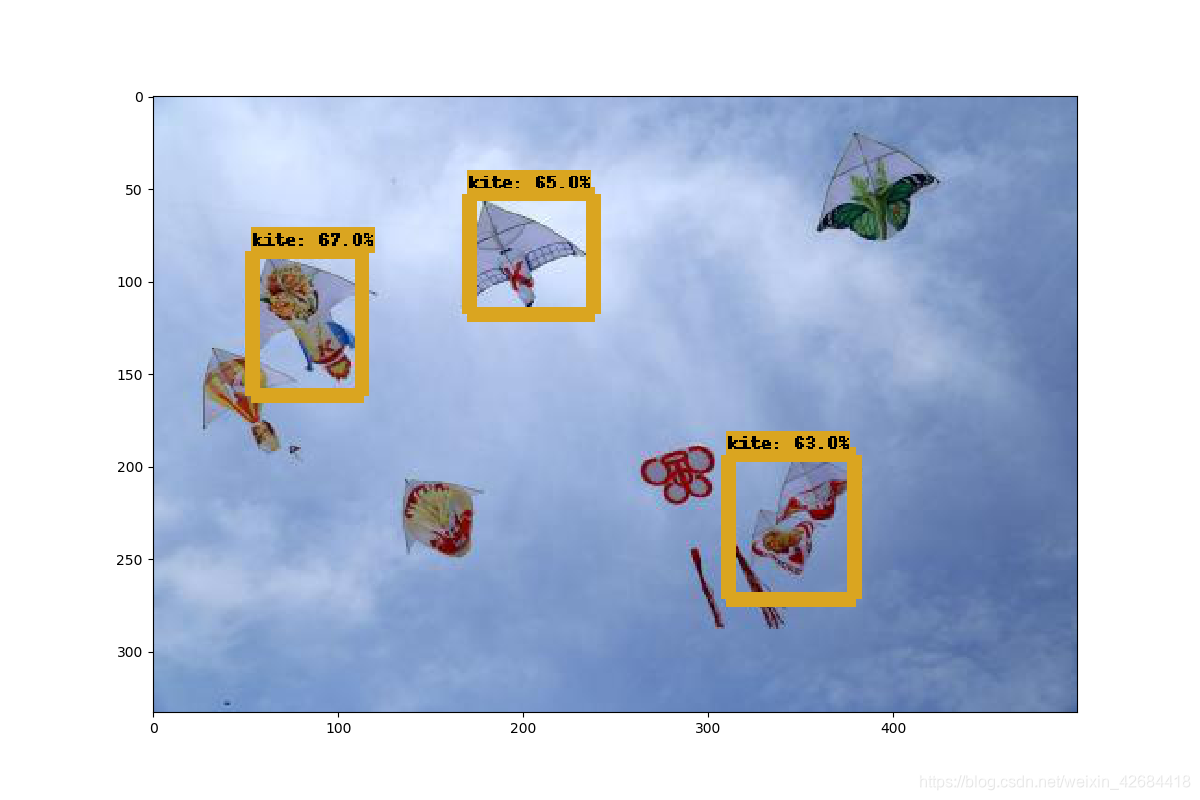

显示的图像如下所示(我自己又补了两张):

效果还是不错滴!!!

效果还是不错滴!!!

检测视频中的物体

代码参考:https://blog.csdn.net/hh_2018/article/details/79887231

# -*- coding: utf-8 -*-

"""

Created on Thu Jan 11 16:55:43 2018

@author: Xiang Guo

"""

#Imports

import numpy as np

import os

import six.moves.urllib as urllib

import sys

import time

sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

import tarfile

import tensorflow as tf

import zipfile

import cv2

from collections import defaultdict

from io import StringIO

from matplotlib import pyplot as plt

from PIL import Image

import imageio

import skimage

#if tf.__version__ < '1.4.0':

# raise ImportError('Please upgrade your tensorflow installation to v1.4.* or later!')

#Env setup

# This is needed to display the images.

#%matplotlib inline

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

#Object detection imports

from research.object_detection.utils import label_map_util

from research.object_detection.utils import visualization_utils as vis_util

#Model preparation

# What model to download.

#MODEL_NAME = 'tv_vehicle_inference_graph'

#MODEL_NAME = 'tv_vehicle_inference_graph_fasterCNN'

#MODEL_NAME = 'tv_vehicle_inference_graph_ssd_mobile'

#MODEL_NAME = 'ssd_mobilenet_v1_coco_2017_11_17' #[30,21] best

#MODEL_NAME = 'ssd_inception_v2_coco_2017_11_17' #[42,24]

#MODEL_NAME = 'faster_rcnn_inception_v2_coco_2017_11_08' #[58,28]

#MODEL_NAME = 'faster_rcnn_resnet50_coco_2017_11_08' #[89,30]

#MODEL_NAME = 'faster_rcnn_resnet50_lowproposals_coco_2017_11_08' #[64, ]

#MODEL_NAME = 'rfcn_resnet101_coco_2017_11_08' #[106,32]

'''

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

'''

# What model to download.

MODEL_NAME = 'ssd_mobilenet_v1_coco_2017_11_17'

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

#PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

PATH_TO_LABELS='/home/daniel/tensorflow/models/research/object_detection/data/mscoco_label_map.pbtxt'

NUM_CLASSES = 90

'''

#Download Model

opener = urllib.request.URLopener()

opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)

tar_file = tarfile.open(MODEL_FILE)

for file in tar_file.getmembers():

file_name = os.path.basename(file.name)

if 'frozen_inference_graph.pb' in file_name:

tar_file.extract(file, os.getcwd())

'''

#Load a (frozen) Tensorflow model into memory.

detection_graph = tf.Graph()

with detection_graph.as_default():

od_graph_def = tf.GraphDef()

with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:

serialized_graph = fid.read()

od_graph_def.ParseFromString(serialized_graph)

tf.import_graph_def(od_graph_def, name='')

# Loading label map

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)

categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)

category_index = label_map_util.create_category_index(categories)

#cap = cv2.VideoCapture("test3.MOV")

cap = cv2.VideoCapture(0)

with detection_graph.as_default():

with tf.Session(graph=detection_graph) as sess:

while (1):

start = time.clock()

# 按帧读视

ret, frame = cap.read()

if cv2.waitKey(1) & 0xFF == ord('q'):

break

image_np = frame

image_np_expanded = np.expand_dims(image_np, axis=0)

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

scores = detection_graph.get_tensor_by_name('detection_scores:0')

classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

# Actual detection.

(boxes, scores, classes, num_detections) = sess.run(

[boxes, scores, classes, num_detections],

feed_dict={

image_tensor: image_np_expanded})

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

np.squeeze(boxes),

np.squeeze(classes).astype(np.int32),

np.squeeze(scores),

category_index,

use_normalized_coordinates=True,

line_thickness=8)

end = time.clock()

#print('frame:', 1.0 / (end - start))

cv2.imshow("capture", image_np)

cv2.waitKey(1)

# 释放捕捉的对象和内存

cap.release()

cv2.destroyAllWindows()

cap = cv2.VideoCapture(0)表示打开摄像头检测摄像头中的物体

#cap = cv2.VideoCapture(“test3.MOV”) 检查本地的视频中的物体

但是我电脑上检测本地视频总是出错,frame这个值总是空,检查发现 cap.isopen总是为false。

查了查原因,发现是opencv-ffmpeg这个文件的位置出错了所以打不开本地视频中的文件,但是坑爹的是,我也始终找不到这个文件,所以只能采用其他的办法来打开这个视频了

这里我们参考这篇文章:https://blog.csdn.net/guoyunfei20/article/details/77977482

在Python中,可以读视频的库,除了opencv外,还有一个——imageio 我们可以利用这个库来读取这个文件,然后利用skimage将读取出来的对象转化成np对象,再按opencv的套路进行物体识别。

# -*- coding: utf-8 -*-

"""

Created on Thu Jan 11 16:55:43 2018

@author: Xiang Guo

"""

#Imports

import numpy as np

import os

import six.moves.urllib as urllib

import sys

import time

sys.path.remove('/opt/ros/kinetic/lib/python2.7/dist-packages')

import tarfile

import tensorflow as tf

import zipfile

import cv2

from collections import defaultdict

from io import StringIO

from matplotlib import pyplot as plt

from PIL import Image

import imageio

import skimage

#if tf.__version__ < '1.4.0':

# raise ImportError('Please upgrade your tensorflow installation to v1.4.* or later!')

#Env setup

# This is needed to display the images.

#%matplotlib inline

# This is needed since the notebook is stored in the object_detection folder.

sys.path.append("..")

#Object detection imports

from research.object_detection.utils import label_map_util

from research.object_detection.utils import visualization_utils as vis_util

#Model preparation

# What model to download.

#MODEL_NAME = 'tv_vehicle_inference_graph'

#MODEL_NAME = 'tv_vehicle_inference_graph_fasterCNN'

#MODEL_NAME = 'tv_vehicle_inference_graph_ssd_mobile'

#MODEL_NAME = 'ssd_mobilenet_v1_coco_2017_11_17' #[30,21] best

#MODEL_NAME = 'ssd_inception_v2_coco_2017_11_17' #[42,24]

#MODEL_NAME = 'faster_rcnn_inception_v2_coco_2017_11_08' #[58,28]

#MODEL_NAME = 'faster_rcnn_resnet50_coco_2017_11_08' #[89,30]

#MODEL_NAME = 'faster_rcnn_resnet50_lowproposals_coco_2017_11_08' #[64, ]

#MODEL_NAME = 'rfcn_resnet101_coco_2017_11_08' #[106,32]

'''

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

'''

# What model to download.

MODEL_NAME = 'ssd_mobilenet_v1_coco_2017_11_17'

MODEL_FILE = MODEL_NAME + '.tar.gz'

DOWNLOAD_BASE = 'http://download.tensorflow.org/models/object_detection/'

# Path to frozen detection graph. This is the actual model that is used for the object detection.

PATH_TO_CKPT = MODEL_NAME + '/frozen_inference_graph.pb'

# List of the strings that is used to add correct label for each box.

#PATH_TO_LABELS = os.path.join('data', 'mscoco_label_map.pbtxt')

PATH_TO_LABELS='/home/daniel/tensorflow/models/research/object_detection/data/mscoco_label_map.pbtxt'

NUM_CLASSES = 90

'''

#Download Model

opener = urllib.request.URLopener()

opener.retrieve(DOWNLOAD_BASE + MODEL_FILE, MODEL_FILE)

tar_file = tarfile.open(MODEL_FILE)

for file in tar_file.getmembers():

file_name = os.path.basename(file.name)

if 'frozen_inference_graph.pb' in file_name:

tar_file.extract(file, os.getcwd())

'''

#Load a (frozen) Tensorflow model into memory.

detection_graph = tf.Graph()

with detection_graph.as_default():

od_graph_def = tf.GraphDef()

with tf.gfile.GFile(PATH_TO_CKPT, 'rb') as fid:

serialized_graph = fid.read()

od_graph_def.ParseFromString(serialized_graph)

tf.import_graph_def(od_graph_def, name='')

# Loading label map

label_map = label_map_util.load_labelmap(PATH_TO_LABELS)

categories = label_map_util.convert_label_map_to_categories(label_map, max_num_classes=NUM_CLASSES, use_display_name=True)

category_index = label_map_util.create_category_index(categories)

#cap = cv2.VideoCapture('object_detection/jjp_picdata/model_test_image/xiaoying.mp4')

#cap = cv2.VideoCapture(0)

#cap = cv2.VideoCapture("test3.MOV")

#cap = cv2.VideoCapture(0)

strPath='/home/daniel/tensorflow/models/research/object_detection/jjp_picdata/model_test_image/2.mp4'

if(os.path.exists(strPath)==False):

print("file not exist")

curVideo=imageio.get_reader(strPath)

frameNum=curVideo.get_length()

with detection_graph.as_default():

with tf.Session(graph=detection_graph) as sess:

frameid=0;

while (1):

start = time.clock()

# 按帧读视

frameimage=curVideo.get_data(frameid)

image_np = skimage.img_as_ubyte(frameimage, True)

image_np_expanded = np.expand_dims(image_np, axis=0)

image_tensor = detection_graph.get_tensor_by_name('image_tensor:0')

boxes = detection_graph.get_tensor_by_name('detection_boxes:0')

scores = detection_graph.get_tensor_by_name('detection_scores:0')

classes = detection_graph.get_tensor_by_name('detection_classes:0')

num_detections = detection_graph.get_tensor_by_name('num_detections:0')

# Actual detection.

(boxes, scores, classes, num_detections) = sess.run(

[boxes, scores, classes, num_detections],

feed_dict={

image_tensor: image_np_expanded})

vis_util.visualize_boxes_and_labels_on_image_array(

image_np,

np.squeeze(boxes),

np.squeeze(classes).astype(np.int32),

np.squeeze(scores),

category_index,

use_normalized_coordinates=True,

line_thickness=8)

end = time.clock()

#print('frame:', 1.0 / (end - start))

cv2.imshow("capture", image_np)

cv2.waitKey(1)

frameid=frameid+1

# 释放捕捉的对象和内存

cap.release()

cv2.destroyAllWindows()

参考文章:

https://blog.csdn.net/chenmaolin88/article/details/79371891

https://www.cnblogs.com/wind-chaser/p/11339284.html

https://blog.csdn.net/zj1131190425/article/details/80711857

https://blog.csdn.net/neninee/article/details/87972040

TensorFlow Object Detection API 默认提供了 5 个预训练模型

ImportError: /opt/ros/kinetic/lib/python2.7/dist-packages/cv2.so: undefined symbol: PyCObject_Type

The function is not implemented. Rebuild the library with Windows, GTK+ 2.x or Carbon support

ubuntu系统 - python中用cv2.VieoCapture()读取视频失败

Tensorflow之调用object_detection中的API识别视频