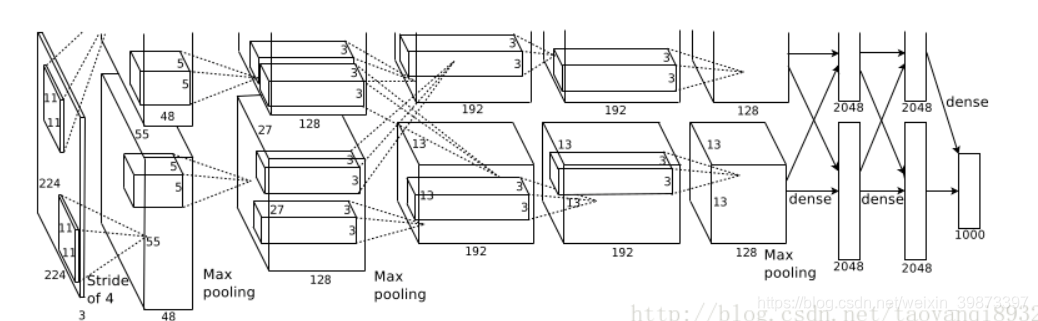

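1. AlexNet network architecture

First convolutional layer

Input image size: 224×224×3 (or 227×227×3).

The first convolutional layer has 96 kernels of size 11×11 (11×11×96) with stride 4, followed by ReLU. Its output size is (227 − 11)/4 + 1 = 55, so it produces 96 feature maps of 55×55, i.e. 55×55×96. Computing 224/4 = 56 and trimming the border to 55 only approximates this. The exact formula needs the 227×227 input, because with a 224×224 input and no padding (224 − 11)/4 + 1 is not an integer. The layer is followed by an LRN layer, which does not change the size.

Max pooling with a 3×3 kernel and stride 2: (55 − 3)/2 + 1 = 27, so the feature maps become 27×27×96.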
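The sizes above follow the standard formula output = floor((input − kernel + 2·padding) / stride) + 1. Here is a minimal Python sketch that checks the first-layer numbers, assuming a 227×227 input and no padding. The helper name `out_size` is my own and is for illustration only.

```python
def out_size(n, k, s, p=0):
    """Spatial output size of a convolution or pooling layer.

    n: input size, k: kernel size, s: stride, p: padding on each side.
    """
    return (n - k + 2 * p) // s + 1


conv1 = out_size(227, 11, 4)   # 96 kernels of 11x11, stride 4, no padding
pool1 = out_size(conv1, 3, 2)  # 3x3 max pooling, stride 2
print(conv1, pool1)            # 55 27
```

With a 224 input, `out_size(224, 11, 4)` rounds down to 54. This is the mismatch that the 227 convention avoids.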

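Second convolutional layer

Input tensor: 27×27×96.

256 kernels of size 5×5 (5×5×256), stride 1. With 2 pixels of padding on each side, the spatial size stays 27×27. The layer is again followed by ReLU and LRN.

Max pooling with a 3×3 kernel and stride 2: (27 − 3)/2 + 1 = 13, giving 13×13×256.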

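Convolutional layers 3 to 5

Input tensor: 13×13×256.

Conv3: 384 kernels of size 3×3 (3×3×384), stride 1, followed by ReLU.

Conv4: 384 kernels of size 3×3 (3×3×384), stride 1, followed by ReLU.

Conv5: 256 kernels of size 3×3 (3×3×256), stride 1, followed by ReLU.

With 1 pixel of padding, these stride-1 convolutions keep the 13×13 size. Conv5 is followed by max pooling with a 3×3 kernel and stride 2: (13 − 3)/2 + 1 = 6, giving 6×6×256.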
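The same arithmetic can be carried through layers 2 to 5, starting from the 27×27×96 output of the first pooling layer. This sketch assumes "same-size" padding of (k − 1)/2 pixels for the stride-1 convolutions (2 for 5×5, 1 for 3×3), which is what keeps their spatial size unchanged. The layer table and helper name are illustrative.

```python
def out_size(n, k, s, p=0):
    # floor((n - k + 2p) / s) + 1
    return (n - k + 2 * p) // s + 1


# (name, kernel, stride, padding, output channels); pooling keeps the channel count.
layers = [
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]

size, channels = 27, 96  # output of pool1
for name, k, s, p, c in layers:
    size = out_size(size, k, s, p)
    if c is not None:
        channels = c
    print(name, (size, size, channels))

# Number of inputs to the first fully connected layer after flattening.
print("flattened:", size * size * channels)  # flattened: 9216
```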

Layers 6 to 8: fully connected layers

The next three layers are fully connected:

1. FC: 4096 + ReLU

2. FC: 4096 + ReLU

3. FC: 1000, followed by softmax, which outputs the probabilities of the 1000 classes.
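A NumPy sketch of the three fully connected layers applied to the flattened 6×6×256 = 9216 features. The weights are random and the input is a random stand-in for pool5, so this only illustrates shapes and data flow, not trained behavior. The softmax subtracts the row maximum for numerical stability.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(x):
    return np.maximum(x, 0.0)


def softmax(z):
    # Subtract the row-wise max for numerical stability; each row sums to 1.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fc(x, n_out):
    # Illustrative fully connected layer: random weights, zero bias, no training.
    w = rng.standard_normal((x.shape[1], n_out), dtype=np.float32)
    w *= 0.01
    return x @ w


batch = 2
pool5 = rng.standard_normal((batch, 6, 6, 256), dtype=np.float32)  # stand-in for pool5
x = pool5.reshape(batch, -1)    # flatten: 6 * 6 * 256 = 9216 features
fc6 = relu(fc(x, 4096))         # FC 4096 + ReLU
fc7 = relu(fc(fc6, 4096))       # FC 4096 + ReLU
probs = softmax(fc(fc7, 1000))  # FC 1000 + softmax
print(x.shape, fc6.shape, fc7.shape, probs.shape)
# (2, 9216) (2, 4096) (2, 4096) (2, 1000)
```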

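2. Tricks used in AlexNet

AlexNet applied CNNs to a deeper and wider network and achieved higher classification accuracy than earlier networks such as LeNet. Several of its tricks are worth knowing: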

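ReLU: AlexNet replaced sigmoid with ReLU. ReLU trains faster and mitigates the vanishing gradient problem (also called gradient diffusion) that sigmoid causes when training deeper networks.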
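To see why sigmoid gradients vanish, compare the derivatives. The sigmoid derivative σ(x)(1 − σ(x)) is at most 0.25, so backpropagating through many sigmoid layers multiplies many factors of at most 0.25. ReLU's derivative is exactly 1 for positive inputs. The following NumPy sketch illustrates this; the depth of 10 is an arbitrary choice.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)  # peaks at 0.25 when x = 0


def relu_grad(x):
    return (x > 0).astype(float)  # 1 for positive inputs, 0 otherwise


x = np.array([-4.0, -1.0, 0.0, 1.0, 4.0])
print("max sigmoid'(x):", sigmoid_grad(x).max())  # 0.25
print("max relu'(x):", relu_grad(x).max())        # 1.0

# Best case for sigmoid: each layer contributes a factor of at most 0.25.
depth = 10
print("sigmoid chain bound:", 0.25 ** depth)         # about 9.5e-07
print("relu chain (active units):", 1.0 ** depth)    # 1.0
```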

Dropout: some neurons are randomly ignored during training to avoid overfitting.
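Here is a minimal NumPy sketch of dropout. It uses the "inverted dropout" variant common in modern frameworks, where surviving units are scaled by 1/(1 − p) during training so nothing changes at test time. The original AlexNet paper instead applied p = 0.5 in the first two fully connected layers and multiplied outputs by 0.5 at test time. The two approaches agree in expectation.

```python
import numpy as np


def dropout(x, p, rng, training=True):
    """Inverted dropout: zero each unit with probability p during training."""
    if not training or p == 0.0:
        return x
    keep = rng.random(x.shape) >= p  # True with probability 1 - p
    return x * keep / (1.0 - p)      # rescale survivors to keep the expected value


rng = np.random.default_rng(42)
x = np.ones((1000, 4096))
y = dropout(x, p=0.5, rng=rng)
print("fraction dropped:", (y == 0).mean())  # close to 0.5
print("mean activation:", y.mean())          # close to 1.0, the undropped mean
print("eval mode unchanged:", np.array_equal(dropout(x, 0.5, rng, training=False), x))
```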

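Overlapping max pooling: earlier CNNs commonly used average pooling. AlexNet uses max pooling throughout, which avoids the blurring effect of average pooling. In addition, the stride (2) is smaller than the pooling kernel (3×3), so neighboring pooling windows overlap, which makes the features richer.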
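The following NumPy sketch implements 3×3 max pooling with stride 2 on a single 2D channel, without padding. Because the stride is smaller than the window, neighboring windows share a row or column. The function name `max_pool2d` is illustrative.

```python
import numpy as np


def max_pool2d(x, k=3, s=2):
    """Max pooling over a 2D array with a k x k window and stride s (no padding)."""
    h, w = x.shape
    out_h = (h - k) // s + 1
    out_w = (w - k) // s + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = x[i * s:i * s + k, j * s:j * s + k].max()
    return out


x = np.arange(25).reshape(5, 5)
print(max_pool2d(x))
# [[12 14]
#  [22 24]]
# Windows start at rows/cols 0 and 2 and span 3 pixels, so row/col 2 belongs to
# two neighbouring windows: that shared stripe is the overlap (stride 2 < kernel 3).
```

Applied to a 55×55 map, the same function returns a 27×27 map, matching the first pooling layer.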

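Local response normalization (LRN): creates a competition mechanism among neighboring neurons. Neurons with larger responses become relatively larger, while those with smaller responses are suppressed.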
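In the AlexNet paper, LRN divides each activation a_i by (k + α Σ_j a_j²)^β. The sum runs over n adjacent channels at the same spatial position, and the paper uses k = 2, n = 5, α = 10⁻⁴, β = 0.75. Below is a NumPy sketch of that formula; the function name `lrn` and the demo values are illustrative. TensorFlow also provides `tf.nn.local_response_normalization`, whose `depth_radius` corresponds to n/2.

```python
import numpy as np


def lrn(a, k=2.0, n=5, alpha=1e-4, beta=0.75):
    """Cross-channel local response normalization on an (H, W, C) array.

    Each activation is divided by (k + alpha * sum of squares over the n
    neighbouring channels, itself included) ** beta.
    """
    half = n // 2
    channels = a.shape[-1]
    sq = a ** 2
    out = np.empty_like(a)
    for i in range(channels):
        lo, hi = max(0, i - half), min(channels - 1, i + half)
        denom = (k + alpha * sq[..., lo:hi + 1].sum(axis=-1)) ** beta
        out[..., i] = a[..., i] / denom
    return out


quiet = np.ones((1, 1, 8))
loud = quiet.copy()
loud[..., 3] = 100.0  # one channel responds strongly

# Channel 4 is a neighbour of channel 3: its normalized value drops
# when channel 3 fires strongly (competition between channels).
print(lrn(quiet)[0, 0, 4], lrn(loud)[0, 0, 4])
```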

GPUs were used to accelerate training.

Data augmentation

Data augmentation is used to reduce overfitting.
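The following NumPy sketch shows two augmentations described in the AlexNet paper: random 224×224 crops taken from 256×256 images and random horizontal flips. The paper's PCA-based color jitter is omitted. The crop and image sizes come from the paper, not from this post, and the function name `augment` is illustrative.

```python
import numpy as np


def augment(image, crop=224, rng=None):
    """Random crop x crop patch of an (H, W, C) image, flipped left-right with probability 0.5."""
    if rng is None:
        rng = np.random.default_rng()
    h, w, _ = image.shape
    top = rng.integers(0, h - crop + 1)
    left = rng.integers(0, w - crop + 1)
    patch = image[top:top + crop, left:left + crop]
    if rng.random() < 0.5:
        patch = patch[:, ::-1]  # horizontal reflection
    return patch


rng = np.random.default_rng(0)
image = rng.random((256, 256, 3))  # stand-in for a training image
patch = augment(image, rng=rng)
print(patch.shape)  # (224, 224, 3)
```

Each call produces a different patch, so the network rarely sees exactly the same input twice.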

3. Implementing AlexNet in TensorFlow

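```python
import argparse
import math
import sys
import time
from datetime import datetime

# This code uses the TensorFlow 1.x API (tf.Session, tf.app.run, ...).
# On TensorFlow 2.x it can usually be run by replacing this import with
# `import tensorflow.compat.v1 as tf` followed by `tf.disable_v2_behavior()`.
import tensorflow as tf


def print_activations(t):
    """Print a tensor's op name and its static shape."""
    print(t.op.name, ' ', t.get_shape().as_list())
```

The function above prints the name and output shape of each layer. Below is the open-source implementation with some parameter changes I made: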

```python
def inference(images):
    """Build the AlexNet model.

    Args:
      images: Images Tensor

    Returns:
      pool5: the last Tensor in the convolutional component of AlexNet.
      parameters: a list of Tensors corresponding to the weights and biases of the
          AlexNet model.
    """
    parameters = []

    # conv1: 96 kernels of 11x11x3, stride 4, VALID padding
    with tf.name_scope('conv1') as scope:
        kernel = tf.Variable(tf.truncated_normal([11, 11, 3, 96], dtype=tf.float32,
                                                 stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(images, kernel, [1, 4, 4, 1], padding='VALID')
        biases = tf.Variable(tf.constant(0.0, shape=[96], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv1 = tf.nn.relu(bias, name=scope)
        print_activations(conv1)
        parameters += [kernel, biases]

    # lrn1 (LRN is omitted in this benchmark)
    # TODO(shlens, jiayq): Add a GPU version of local response normalization.

    # pool1: overlapping 3x3 max pooling, stride 2
    pool1 = tf.nn.max_pool(conv1,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool1')
    print_activations(pool1)

    # conv2: 256 kernels of 5x5x96, stride 1, SAME padding keeps the spatial size
    with tf.name_scope('conv2') as scope:
        kernel = tf.Variable(tf.truncated_normal([5, 5, 96, 256], dtype=tf.float32,
                                                 stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool1, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv2 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv2)

    # pool2
    pool2 = tf.nn.max_pool(conv2,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool2')
    print_activations(pool2)

    # conv3: 384 kernels of 3x3x256
    with tf.name_scope('conv3') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 256, 384],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(pool2, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[384], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv3 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv3)

    # conv4: 384 kernels of 3x3x384
    with tf.name_scope('conv4') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 384],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv3, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[384], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv4 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv4)

    # conv5: 256 kernels of 3x3x384
    with tf.name_scope('conv5') as scope:
        kernel = tf.Variable(tf.truncated_normal([3, 3, 384, 256],
                                                 dtype=tf.float32,
                                                 stddev=1e-1), name='weights')
        conv = tf.nn.conv2d(conv4, kernel, [1, 1, 1, 1], padding='SAME')
        biases = tf.Variable(tf.constant(0.0, shape=[256], dtype=tf.float32),
                             trainable=True, name='biases')
        bias = tf.nn.bias_add(conv, biases)
        conv5 = tf.nn.relu(bias, name=scope)
        parameters += [kernel, biases]
        print_activations(conv5)

    # pool5
    pool5 = tf.nn.max_pool(conv5,
                           ksize=[1, 3, 3, 1],
                           strides=[1, 2, 2, 1],
                           padding='VALID',
                           name='pool5')
    print_activations(pool5)

    return pool5, parameters


def time_tensorflow_run(session, target, info_string):
    """Run the computation to obtain the target tensor and print timing stats.

    Args:
      session: the TensorFlow session to run the computation under.
      target: the target Tensor that is passed to the session's run() function.
      info_string: a string summarizing this run, to be printed with the stats.

    Returns:
      None
    """
    num_steps_burn_in = 10  # warm-up steps excluded from the statistics
    total_duration = 0.0
    total_duration_squared = 0.0
    for i in range(FLAGS.num_batches + num_steps_burn_in):  # xrange is Python 2 only
        start_time = time.time()
        _ = session.run(target)
        duration = time.time() - start_time
        if i >= num_steps_burn_in:
            if not i % 10:
                print('%s: step %d, duration = %.3f' %
                      (datetime.now(), i - num_steps_burn_in, duration))
            total_duration += duration
            total_duration_squared += duration * duration
    mn = total_duration / FLAGS.num_batches
    vr = total_duration_squared / FLAGS.num_batches - mn * mn
    sd = math.sqrt(max(vr, 0.0))  # guard against tiny negative rounding errors
    print('%s: %s across %d steps, %.3f +/- %.3f sec / batch' %
          (datetime.now(), info_string, FLAGS.num_batches, mn, sd))
```
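`time_tensorflow_run` computes the standard deviation from running sums using Var = E[x²] − (E[x])². The following pure-Python check shows that this matches `statistics.pstdev`. It uses five of the per-step durations printed in the forward-pass log, so it is an illustration, not a rerun of the benchmark.

```python
import math
import statistics

durations = [0.085, 0.100, 0.099, 0.088, 0.078]  # sampled from the forward log

n = len(durations)
total = sum(durations)
total_sq = sum(d * d for d in durations)
mean = total / n
var = total_sq / n - mean * mean  # E[x^2] - (E[x])^2, population variance
sd = math.sqrt(max(var, 0.0))     # clamp tiny negative rounding errors

print(f"{mean:.3f} +/- {sd:.3f} sec / batch")
print(math.isclose(sd, statistics.pstdev(durations), rel_tol=1e-6))  # True
```

Keeping only two running sums avoids storing every duration, at the cost of some floating-point cancellation when the variance is tiny relative to the mean.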

The benchmark function follows. Note that `images` is randomly generated dummy data, not real images:

```python
def run_benchmark():
    """Run the benchmark on AlexNet."""
    with tf.Graph().as_default():
        # Generate some dummy images.
        image_size = 224
        # conv1 uses VALID padding, so a 224x224 input gives 54x54 conv1 maps;
        # use image_size = 227 to get the 55x55 maps described in Section 1.
        images = tf.Variable(tf.random_normal([FLAGS.batch_size,
                                               image_size,
                                               image_size, 3],
                                              dtype=tf.float32,
                                              stddev=1e-1))

        # Build the graph of the convolutional part of AlexNet (up to pool5).
        pool5, parameters = inference(images)

        # Build an initialization operation.
        init = tf.global_variables_initializer()

        # Start running operations on the Graph.
        config = tf.ConfigProto()
        config.gpu_options.allocator_type = 'BFC'
        sess = tf.Session(config=config)
        sess.run(init)

        # Run the forward benchmark.
        time_tensorflow_run(sess, pool5, "Forward")

        # Add a simple objective so we can calculate the backward pass.
        objective = tf.nn.l2_loss(pool5)
        # Compute the gradient with respect to all the parameters.
        grad = tf.gradients(objective, parameters)
        # Run the backward benchmark.
        time_tensorflow_run(sess, grad, "Forward-backward")


def main(_):
    run_benchmark()


if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('--batch_size', type=int, default=128,
                        help='Batch size.')
    parser.add_argument('--num_batches', type=int, default=100,
                        help='Number of batches to run.')
    FLAGS, unparsed = parser.parse_known_args()
    tf.app.run(main=main, argv=[sys.argv[0]] + unparsed)
```
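TensorFlow's documented padding rules give output sizes of ceil((n − k + 1)/s) for `'VALID'` and ceil(n/s) for `'SAME'`. The following pure-Python sketch applies these rules to the layers in `inference`. It predicts the shapes this script prints for a 224×224 input, and shows that a 227×227 input would give the 55/27/13/6 sizes from Section 1. The helper names `tf_out` and `trace` are illustrative.

```python
import math


def tf_out(n, k, s, padding):
    # Output-size rules for TensorFlow's 'VALID' and 'SAME' padding.
    if padding == "VALID":
        return math.ceil((n - k + 1) / s)
    return math.ceil(n / s)  # "SAME"


# (name, kernel, stride, padding) as used in inference().
LAYERS = [
    ("conv1", 11, 4, "VALID"), ("pool1", 3, 2, "VALID"),
    ("conv2", 5, 1, "SAME"), ("pool2", 3, 2, "VALID"),
    ("conv3", 3, 1, "SAME"), ("conv4", 3, 1, "SAME"),
    ("conv5", 3, 1, "SAME"), ("pool5", 3, 2, "VALID"),
]


def trace(image_size):
    """Return the spatial size after each layer for a square input."""
    sizes, n = {}, image_size
    for name, k, s, padding in LAYERS:
        n = tf_out(n, k, s, padding)
        sizes[name] = n
    return sizes


print(trace(224))  # 54, 26, 26, 12, 12, 12, 12, 5
print(trace(227))  # 55, 27, 27, 13, 13, 13, 13, 6
```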

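The output is as follows.

The printed layer shapes are listed below. They are slightly smaller than the sizes derived in Section 1. The benchmark feeds 224×224 images and conv1 uses VALID padding, so conv1 yields 54×54 instead of 55×55, and every later layer shrinks accordingly (26, 12 and 5 instead of 27, 13 and 6).

```
conv1 [128, 54, 54, 96]
pool1 [128, 26, 26, 96]
conv2 [128, 26, 26, 256]
pool2 [128, 12, 12, 256]
conv3 [128, 12, 12, 384]
conv4 [128, 12, 12, 384]
conv5 [128, 12, 12, 256]
pool5 [128, 5, 5, 256]
```

Below are the forward and backward timings. The combined forward-backward pass takes about 3 times as long as the forward pass alone (0.271 s vs. 0.092 s per batch).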

```
2018-11-27 17:49:36.936271: step 0, duration = 0.085
2018-11-27 17:49:37.860652: step 10, duration = 0.085
2018-11-27 17:49:38.794103: step 20, duration = 0.100
2018-11-27 17:49:39.726452: step 30, duration = 0.099
2018-11-27 17:49:40.637597: step 40, duration = 0.088
2018-11-27 17:49:41.546659: step 50, duration = 0.078
2018-11-27 17:49:42.471295: step 60, duration = 0.085
2018-11-27 17:49:43.389295: step 70, duration = 0.095
2018-11-27 17:49:44.306961: step 80, duration = 0.085
2018-11-27 17:49:45.225164: step 90, duration = 0.085
2018-11-27 17:49:46.058470: Forward across 100 steps, 0.092 +/- 0.008 sec / batch
2018-11-27 17:49:50.335397: step 0, duration = 0.281
2018-11-27 17:49:53.041129: step 10, duration = 0.279
2018-11-27 17:49:55.747921: step 20, duration = 0.269
2018-11-27 17:49:58.454006: step 30, duration = 0.269
2018-11-27 17:50:01.176237: step 40, duration = 0.285
2018-11-27 17:50:03.882712: step 50, duration = 0.269
2018-11-27 17:50:06.573259: step 60, duration = 0.269
2018-11-27 17:50:09.286011: step 70, duration = 0.270
2018-11-27 17:50:12.007992: step 80, duration = 0.275
2018-11-27 17:50:14.706777: step 90, duration = 0.262
2018-11-27 17:50:17.138761: Forward-backward across 100 steps, 0.271 +/- 0.006 sec / batch
```
