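For a project I needed to complete an object detection task. After some hands-on practice, I am summarizing here what I implemented and what I ran into, both for my own later reference and to help others avoid a few detours. I consulted a number of blog posts along the way, which are useful for getting started and for going further.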

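I spent most of my time on YOLOv3. Its accuracy is better than YOLOv2, but running and testing take roughly twice as long. Once you understand the YOLOv3 workflow, running YOLOv2 is only a matter of changing some parameters and the pretrained model. Note: if you want to train on your own dataset, you should have a server with a GPU. In my runs the loss typically dropped to the order of 0.0x after about 30,000 iterations. Processing even one batch on a CPU is very slow, so CPU training is not recommended. A CPU is fine for testing, though, and there is a small trick that roughly halves the CPU test time.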

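The article is organized into the following parts:

1. Tools

Hardware and software: a local MacBook Pro and an Alibaba Cloud server (P100 GPU)

1.1 Downloading the YOLO network

YOLO official site: https://pjreddie.com/darknet/yolo/

GitHub project: https://github.com/pjreddie/darknet/tree/master/data

1.2 labelImg (has limitations)

GitHub project: https://github.com/tzutalin/labelImg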

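2. Installation

2.1 Installing YOLO (darknet)

See the official documentation: https://pjreddie.com/darknet/install/

2.1.1 CPU version

    git clone https://github.com/pjreddie/darknet.git
    cd darknet
    make

If your machine supports OpenMP, you can also edit the Makefile and set OPENMP to 1, which speeds up training and testing:

    GPU=0
    CUDNN=0
    OPENCV=0
    # set OPENMP to 1 if your machine supports OpenMP
    OPENMP=0
    DEBUG=0

2.1.2 GPU version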

    git clone https://github.com/pjreddie/darknet.git
    cd darknet
    vim Makefile
    make

The GPU build needs more changes in the Makefile:

    # set GPU and CUDNN to 1
    GPU=1
    CUDNN=1
    # set OPENCV to 1 if you want to use OpenCV later
    OPENCV=0
    OPENMP=0
    DEBUG=0

    # Set ARCH according to your GPU architecture; different architectures have
    # different compute capabilities. This article uses a Pascal GPU, whose
    # compute capability is 6.0 according to the NVIDIA website.
    ARCH= -gencode arch=compute_30,code=sm_30 \
          -gencode arch=compute_35,code=sm_35 \
          -gencode arch=compute_50,code=[sm_50,compute_50] \
          -gencode arch=compute_52,code=[sm_52,compute_52] \
          -gencode arch=compute_60,code=[sm_60,compute_60]
    # -gencode arch=compute_20,code=[sm_20,sm_21] \ This one is deprecated?

    # This is what I use, uncomment if you know your arch and want to specify
    # ARCH= -gencode arch=compute_52,code=compute_52

    VPATH=./src/:./examples
    SLIB=libdarknet.so
    ALIB=libdarknet.a
    EXEC=darknet
    OBJDIR=./obj/

    CC=gcc
    # If nvcc is not on your PATH, use an absolute path here,
    # typically /usr/local/cuda/bin/nvcc
    NVCC=nvcc

For the GPU build, adjust the Makefile in the places marked above.

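2.2 Installing labelImg

There are two ways to install it:

2.2.1 From the source package:

See the GitHub page for instructions: https://github.com/tzutalin/labelImg

2.2.2 With pip:

    pip install labelImg

or

    brew install labelImg

Note: in practice I found that labelImg does not handle .png images well. It does not properly support annotating .png images, and even when an annotation is produced the label file is wrong.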

3.数据集的准备与制作

数据集的准备安装网上教程即可:

3.1 数据集标注

labelImg的使用方法一些博客都有讲解:参考博客 https://blog.csdn.net/xunan003/article/details/78720189/

有几个关键的地方需要强调一下:

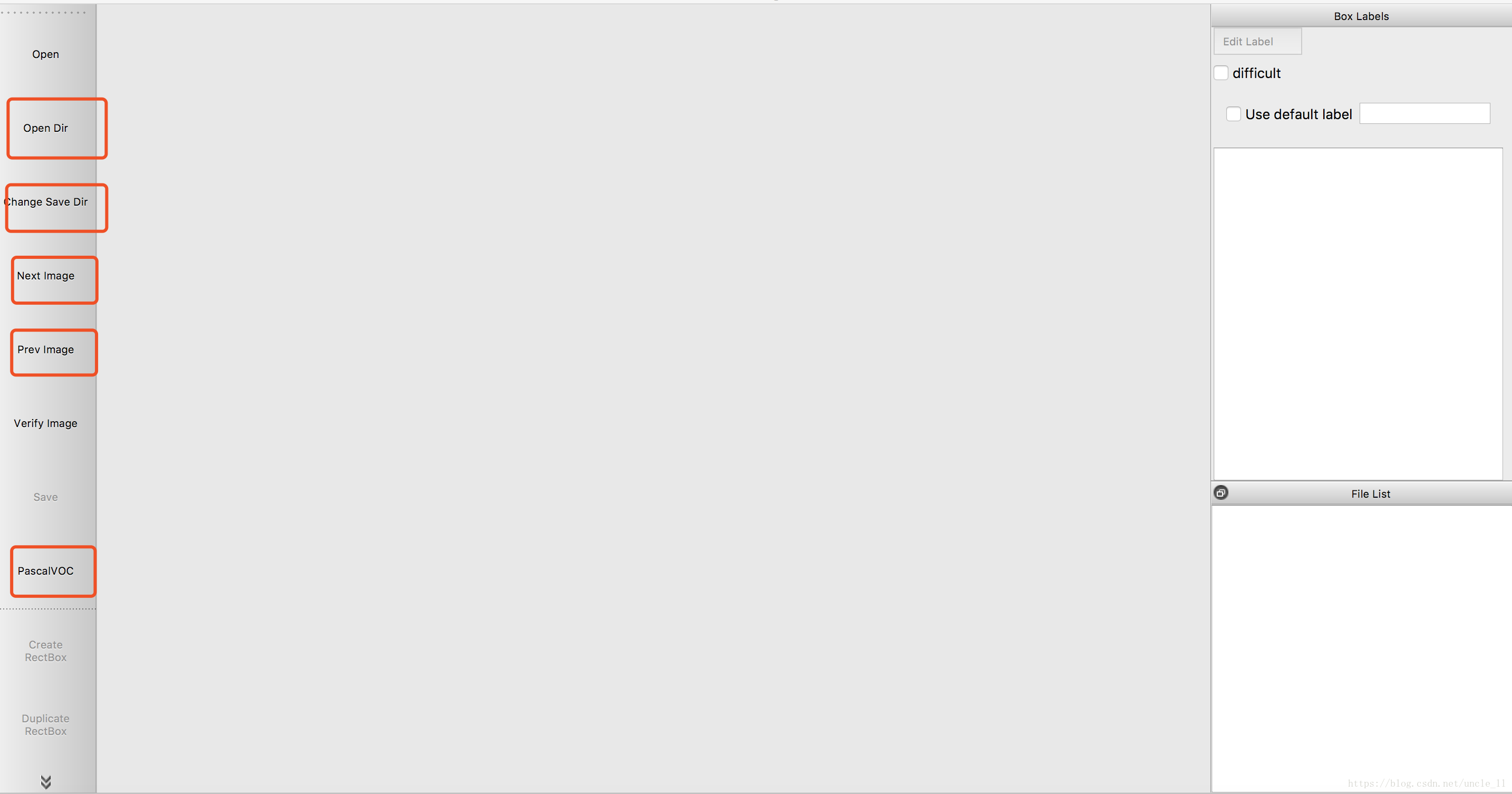

OpenDir 是要标注图像的文件地址

Change Save Dir 是修改保存标记文件的地址

Next Image 标注完点击这个进行下一张的标注

Prev Image 想查看之前标注的情况

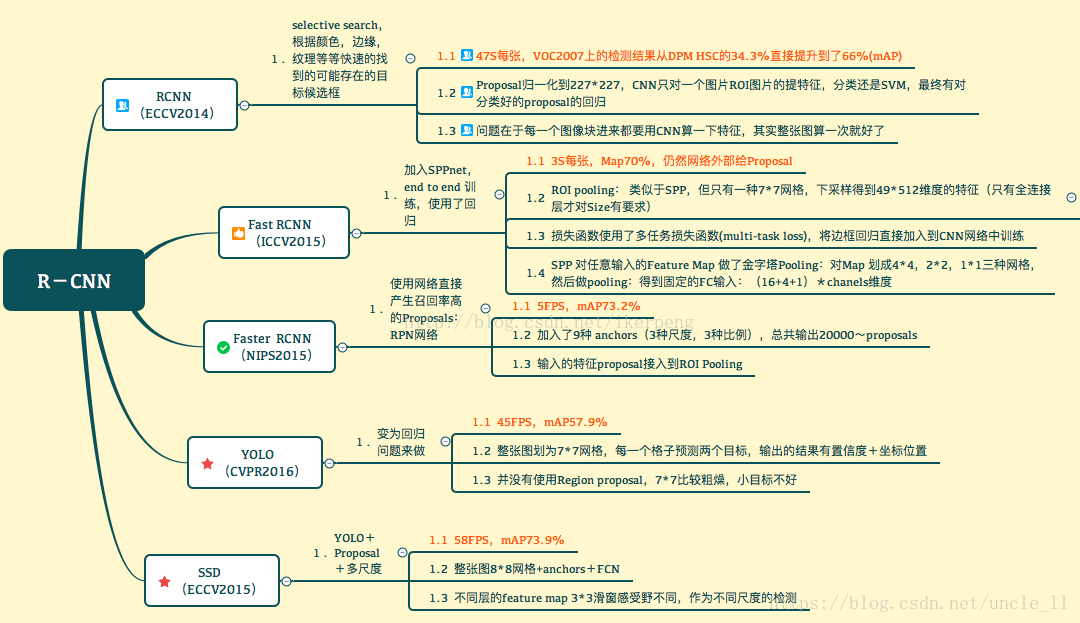

PascalVOC/YOLO 这个可选,前一种是得到的格式为xml的标签文件,后一种是直接得到格式为txt的标签文件,后一种适用于YOLO网络,前一种适合RCNN系列文章,根据自身选择,本文由于之前尝试过使用RCNN系列模型,就先标记为xml文件,这里不用担心,darknet提供了转换程序./scripts/voc_label.py。

3.2 Converting XML labels to YOLO format

For people who use the TensorFlow Object Detection API, the label format is XML. Fortunately darknet provides a script, ./scripts/voc_label.py, that converts XML labels to txt labels. Note the places that need to be changed:

    import xml.etree.ElementTree as ET
    import pickle
    import os
    from os import listdir, getcwd
    from os.path import join

    # sets=[('2012', 'train'), ('2012', 'val'), ('2007', 'train'), ('2007', 'val'), ('2007', 'test')]
    # The first item is the year, the second names the train or test image-set file
    sets=[('2007', 'train'), ('2007', 'test')]

    # classes = ["aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"]
    # classes holds your own class names
    classes = ["1", "2", "3"]


    def convert(size, box):
        # size is (width, height); box is (xmin, xmax, ymin, ymax) in pixels
        dw = 1./(size[0])
        dh = 1./(size[1])
        x = (box[0] + box[1])/2.0 - 1
        y = (box[2] + box[3])/2.0 - 1
        w = box[1] - box[0]
        h = box[3] - box[2]
        x = x*dw
        w = w*dw
        y = y*dh
        h = h*dh
        return (x,y,w,h)

    def convert_annotation(year, image_id):
        in_file = open('VOCdevkit/VOC%s/Annotations/%s.xml'%(year, image_id))
        out_file = open('VOCdevkit/VOC%s/labels/%s.txt'%(year, image_id), 'w')
        tree=ET.parse(in_file)
        root = tree.getroot()
        size = root.find('size')
        w = int(size.find('width').text)
        h = int(size.find('height').text)

        for obj in root.iter('object'):
            difficult = obj.find('difficult').text
            cls = obj.find('name').text
            if cls not in classes or int(difficult)==1:
                continue
            cls_id = classes.index(cls)
            xmlbox = obj.find('bndbox')
            b = (float(xmlbox.find('xmin').text), float(xmlbox.find('xmax').text), float(xmlbox.find('ymin').text), float(xmlbox.find('ymax').text))
            bb = convert((w,h), b)
            out_file.write(str(cls_id) + " " + " ".join([str(a) for a in bb]) + '\n')

    wd = getcwd()

    for year, image_set in sets:
        if not os.path.exists('VOCdevkit/VOC%s/labels/'%(year)):
            os.makedirs('VOCdevkit/VOC%s/labels/'%(year))
        image_ids = open('VOCdevkit/VOC%s/ImageSets/Main/%s.txt'%(year, image_set)).read().strip().split()
        list_file = open('%s_%s.txt'%(year, image_set), 'w')
        for image_id in image_ids:
            list_file.write('%s/VOCdevkit/VOC%s/JPEGImages/%s.jpg\n'%(wd, year, image_id))
            convert_annotation(year, image_id)
        list_file.close()

    # The last two lines are commented out here, so the generated training and
    # test lists together cover the whole dataset, instead of the training and
    # test sets being merged into a single training set.
    #os.system("cat 2007_train.txt 2007_val.txt 2012_train.txt 2012_val.txt > train.txt")
    #os.system("cat 2007_train.txt 2007_val.txt 2007_test.txt 2012_train.txt 2012_val.txt > train.all.txt")

After running it, the training and test list txt files appear in the ./scripts folder.
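To make the box conversion in voc_label.py concrete, here is a small worked example. It restates the same arithmetic as `convert` on one hypothetical box; the image size, the box and the helper `to_label_line` are made up for illustration and are not part of darknet.

```python
def convert(size, box):
    """Convert a VOC box (xmin, xmax, ymin, ymax) in pixels into a normalized
    YOLO box (x_center, y_center, width, height), using the same arithmetic
    as voc_label.py."""
    img_w, img_h = size
    xmin, xmax, ymin, ymax = box
    x = ((xmin + xmax) / 2.0 - 1) / img_w
    y = ((ymin + ymax) / 2.0 - 1) / img_h
    w = (xmax - xmin) / float(img_w)
    h = (ymax - ymin) / float(img_h)
    return (x, y, w, h)


def to_label_line(class_id, size, box):
    # One line of a YOLO label file: "<class_id> <x> <y> <w> <h>"
    return str(class_id) + " " + " ".join("%.6f" % v for v in convert(size, box))


# Hypothetical 500x400 image with a single object of class 0.
line = to_label_line(0, (500, 400), (101.0, 201.0, 51.0, 151.0))
print(line)  # 0 0.300000 0.250000 0.200000 0.250000
```

All four values are fractions of the image size, so a correct label file only ever contains numbers between 0 and 1 after the class index.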

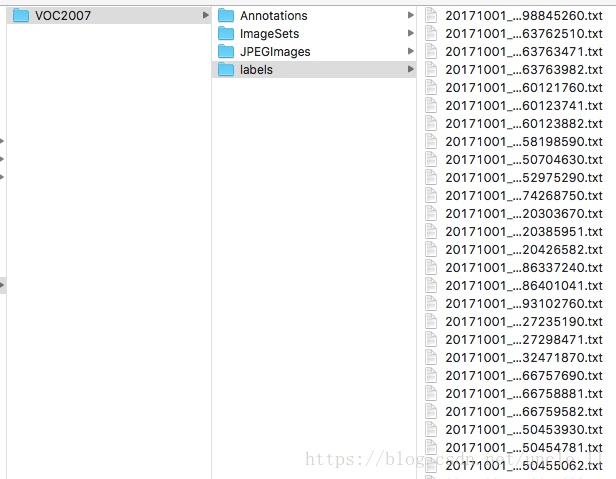

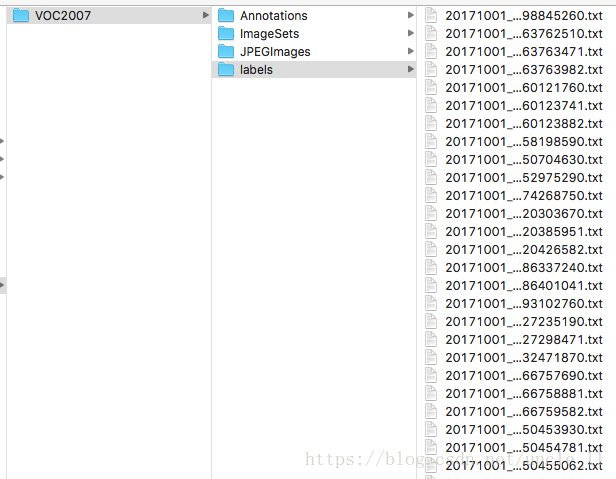

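The corresponding labels folder now contains the converted txt-format labels:

4. Training and testing the network model

4.1 Training the network model

4.1.1 What needs to be changed

Edit cfg/voc.data

    # Mind the paths; both relative and absolute paths work.
    # Number of classes: set n to however many classes you have.
    classes= n
    # The training list txt generated above.
    train = ./scripts/2007_train.txt
    valid = ./scripts/2007_test.txt
    names = data/voc.names
    # Where the trained model weights are saved.
    backup = ./results/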

Edit data/voc.names

Enter your label names here, one class per line. For example, with three classes "ni", "hao" and "ma", the file contains:

    ni
    hao
    ma

Edit the network parameters in cfg/yolov3-voc.cfg

    [net]
    # Switch the header to training mode. Set batch according to what your
    # machine can handle; the default is 64.
    # Testing
    # batch=1
    # subdivisions=1
    # Training
    batch=64
    subdivisions=16
    width=416
    height=416
    channels=3
    momentum=0.9
    decay=0.0005
    angle=0
    saturation = 1.5
    exposure = 1.5
    hue=.1

    learning_rate=0.001
    burn_in=1000
    # maximum number of batches (iterations)
    max_batches = 50200
    policy=steps
    # iterations at which the learning rate changes; here it is multiplied by 0.1 each time
    steps=20000,35000
    scales=.1,.1

    [convolutional]
    size=1
    stride=1
    pad=1
    # change filters to match the number of classes; the usual formula is (classes + 5) * 3
    filters=24
    activation=linear

    [yolo]
    mask = 0,1,2
    anchors = 10,13,  16,30,  33,23,  30,61,  62,45,  59,119,  116,90,  156,198,  373,326
    # change to your own number of classes
    classes=3
    num=9
    jitter=.3
    ignore_thresh = .5
    truth_thresh = 1
    random=0
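The `filters` rule in the cfg above is easy to get wrong when the number of classes changes, so here is a minimal sketch of the arithmetic. The function name `yolo_filters` is only an illustration, not part of darknet; the factor 3 assumes three anchors per detection scale, which matches the three entries in `mask`.

```python
def yolo_filters(num_classes, anchors_per_scale=3):
    """Filters for the convolutional layer directly before a [yolo] layer.

    Each anchor predicts 4 box coordinates, 1 objectness score and one
    score per class, hence (num_classes + 5) per anchor.
    """
    return (num_classes + 5) * anchors_per_scale


for n in (1, 3, 20, 80):
    print(n, yolo_filters(n))
# 1 18
# 3 24
# 20 75
# 80 255
```

With 3 classes this gives the 24 used above, and 80 classes gives 255, the filter count that shows up in the layer listing printed in section 4.2.2.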

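4.1.2 Training from a pretrained model

Transfer learning is the norm now: part of the parameters of a mature network are reused directly, and the same applies here.

Download the pretrained model:

    wget https://pjreddie.com/media/files/darknet53.conv.74

Train:

    ./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg darknet53.conv.74

4.1.3 Resuming training after an interruption

If training is interrupted partway, or you stopped it and want to continue, replace the pretrained weights at the end of the command above with a weights file from the backup directory set in voc.data. Here yolov3.weights stands for that file:

    ./darknet detector train cfg/voc.data cfg/yolov3-voc.cfg results/yolov3.weights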
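When resuming, it helps to pick the most recent checkpoint automatically. The sketch below assumes the naming used by `train_detector` in the source listed in section 4.2.2 (`<base>_<iteration>.weights`, plus `<base>.backup` and `<base>_final.weights`); the helper `latest_checkpoint` and the sample file names are hypothetical.

```python
import re


def latest_checkpoint(filenames, base="yolov3-voc"):
    """Return the numbered checkpoint with the highest iteration, or None."""
    pattern = re.compile(r"^" + re.escape(base) + r"_(\d+)\.weights$")
    best_name, best_iter = None, -1
    for name in filenames:
        match = pattern.match(name)
        if match and int(match.group(1)) > best_iter:
            best_name, best_iter = name, int(match.group(1))
    return best_name


# Hypothetical contents of the backup directory.
files = [
    "yolov3-voc_900.weights",
    "yolov3-voc_10000.weights",
    "yolov3-voc.backup",
    "yolov3-voc_20000.weights",
]
print(latest_checkpoint(files))  # yolov3-voc_20000.weights
```

In practice you would pass `os.listdir("results")` instead of the sample list. The comparison is numeric, so `_10000` correctly ranks above `_900`, which a plain string sort would get wrong.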

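4.2 Testing the network model

4.2.1 Testing a single image

Single-image testing means running on one named image, much like the example on the darknet website, with the paths and image name changed as needed:

    ./darknet detect cfg/yolov3.cfg yolov3.weights data/dog.jpg
    ./darknet detect cfg/yolov3.cfg results/yolov3.weights /path/to/your/picture

4.2.2 Batch testing

Batch testing requires modifying the source code; see the blog post https://blog.csdn.net/mieleizhi0522/article/details/79989754

One problem with that approach is that the saved detection images do not keep their original file names, and the name sometimes comes out as null. The version below modifies its GetFilename function to fix this.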

    #include "darknet.h"
    #include <string.h>
    #include <unistd.h>    // access()
    #include <sys/stat.h>  // mkdir()

    static int coco_ids[] = {1,2,3,4,5,6,7,8,9,10,11,13,14,15,16,17,18,19,20,21,22,23,24,25,27,28,31,32,33,34,35,36,37,38,39,40,41,42,43,44,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,67,70,72,73,74,75,76,77,78,79,80,81,82,84,85,86,87,88,89,90};

    // Get the file name without its directory and extension.
    // The result lives in a static buffer: it is overwritten by the next call,
    // and names longer than the buffer are truncated.
    char* GetFilename(char *fullname)
    {
        static char name[256];
        char *base, *dot;
        size_t len;

        name[0] = '\0';
        if(fullname == NULL) return name;

        base = strrchr(fullname, '/');        // strip the directory part
        base = base ? base + 1 : fullname;
        dot = strrchr(base, '.');             // find the last dot
        len = dot ? (size_t)(dot - base) : strlen(base);
        if(len >= sizeof(name)) len = sizeof(name) - 1;
        memcpy(name, base, len);
        name[len] = '\0';
        return name;
    }

    void train_detector(char *datacfg, char *cfgfile, char *weightfile, int *gpus, int ngpus, int clear)
    {
        list *options = read_data_cfg(datacfg);
        char *train_images = option_find_str(options, "train", "data/train.list");
        char *backup_directory = option_find_str(options, "backup", "/backup/");

        srand(time(0));
        char *base = basecfg(cfgfile);
        printf("%s\n", base);
        float avg_loss = -1;
        network **nets = calloc(ngpus, sizeof(network));

        srand(time(0));
        int seed = rand();
        int i;
        for(i = 0; i < ngpus; ++i){
            srand(seed);
    #ifdef GPU
            cuda_set_device(gpus[i]);
    #endif
            nets[i] = load_network(cfgfile, weightfile, clear);
            nets[i]->learning_rate *= ngpus;
        }
        srand(time(0));
        network *net = nets[0];

        int imgs = net->batch * net->subdivisions * ngpus;
        printf("Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);
        data train, buffer;

        layer l = net->layers[net->n - 1];

        int classes = l.classes;
        float jitter = l.jitter;

        list *plist = get_paths(train_images);
        //int N = plist->size;
        char **paths = (char **)list_to_array(plist);

        load_args args = get_base_args(net);
        args.coords = l.coords;
        args.paths = paths;
        args.n = imgs;
        args.m = plist->size;
        args.classes = classes;
        args.jitter = jitter;
        args.num_boxes = l.max_boxes;
        args.d = &buffer;
        args.type = DETECTION_DATA;
        //args.type = INSTANCE_DATA;
        args.threads = 64;

        pthread_t load_thread = load_data(args);
        double time;
        int count = 0;
        //while(i*imgs < N*120){
        while(get_current_batch(net) < net->max_batches){
            if(l.random && count++%10 == 0){
                printf("Resizing\n");
                int dim = (rand() % 10 + 10) * 32;
                if (get_current_batch(net)+200 > net->max_batches) dim = 608;
                //int dim = (rand() % 4 + 16) * 32;
                printf("%d\n", dim);
                args.w = dim;
                args.h = dim;

                pthread_join(load_thread, 0);
                train = buffer;
                free_data(train);
                load_thread = load_data(args);

                #pragma omp parallel for
                for(i = 0; i < ngpus; ++i){
                    resize_network(nets[i], dim, dim);
                }
                net = nets[0];
            }
            time=what_time_is_it_now();
            pthread_join(load_thread, 0);
            train = buffer;
            load_thread = load_data(args);

            /*
               int k;
               for(k = 0; k < l.max_boxes; ++k){
               box b = float_to_box(train.y.vals[10] + 1 + k*5);
               if(!b.x) break;
               printf("loaded: %f %f %f %f\n", b.x, b.y, b.w, b.h);
               }
             */
            /*
               int zz;
               for(zz = 0; zz < train.X.cols; ++zz){
               image im = float_to_image(net->w, net->h, 3, train.X.vals[zz]);
               int k;
               for(k = 0; k < l.max_boxes; ++k){
               box b = float_to_box(train.y.vals[zz] + k*5, 1);
               printf("%f %f %f %f\n", b.x, b.y, b.w, b.h);
               draw_bbox(im, b, 1, 1,0,0);
               }
               show_image(im, "truth11");
               cvWaitKey(0);
               save_image(im, "truth11");
               }
             */

            printf("Loaded: %lf seconds\n", what_time_is_it_now()-time);

            time=what_time_is_it_now();
            float loss = 0;
    #ifdef GPU
            if(ngpus == 1){
                loss = train_network(net, train);
            } else {
                loss = train_networks(nets, ngpus, train, 4);
            }
    #else
            loss = train_network(net, train);
    #endif
            if (avg_loss < 0) avg_loss = loss;
            avg_loss = avg_loss*.9 + loss*.1;

            i = get_current_batch(net);
            printf("%ld: %f, %f avg, %f rate, %lf seconds, %d images\n", get_current_batch(net), loss, avg_loss, get_current_rate(net), what_time_is_it_now()-time, i*imgs);
            if(i%100==0){
    #ifdef GPU
                if(ngpus != 1) sync_nets(nets, ngpus, 0);
    #endif
                char buff[256];
                sprintf(buff, "%s/%s.backup", backup_directory, base);
                save_weights(net, buff);
            }
            if(i%10000==0 || (i < 1000 && i%100 == 0)){
    #ifdef GPU
                if(ngpus != 1) sync_nets(nets, ngpus, 0);
    #endif
                char buff[256];
                sprintf(buff, "%s/%s_%d.weights", backup_directory, base, i);
                save_weights(net, buff);
            }
            free_data(train);
        }
    #ifdef GPU
        if(ngpus != 1) sync_nets(nets, ngpus, 0);
    #endif
        char buff[256];
        sprintf(buff, "%s/%s_final.weights", backup_directory, base);
        save_weights(net, buff);
    }

    static int get_coco_image_id(char *filename)
    {
        char *p = strrchr(filename, '/');
        char *c = strrchr(filename, '_');
        if(c) p = c;
        return atoi(p+1);
    }

    static void print_cocos(FILE *fp, char *image_path, detection *dets, int num_boxes, int classes, int w, int h)
    {
        int i, j;
        int image_id = get_coco_image_id(image_path);
        for(i = 0; i < num_boxes; ++i){
            float xmin = dets[i].bbox.x - dets[i].bbox.w/2.;
            float xmax = dets[i].bbox.x + dets[i].bbox.w/2.;
            float ymin = dets[i].bbox.y - dets[i].bbox.h/2.;
            float ymax = dets[i].bbox.y + dets[i].bbox.h/2.;

            if (xmin < 0) xmin = 0;
            if (ymin < 0) ymin = 0;
            if (xmax > w) xmax = w;
            if (ymax > h) ymax = h;

            float bx = xmin;
            float by = ymin;
            float bw = xmax - xmin;
            float bh = ymax - ymin;

            for(j = 0; j < classes; ++j){
                if (dets[i].prob[j]) fprintf(fp, "{\"image_id\":%d, \"category_id\":%d, \"bbox\":[%f, %f, %f, %f], \"score\":%f},\n", image_id, coco_ids[j], bx, by, bw, bh, dets[i].prob[j]);
            }
        }
    }

    void print_detector_detections(FILE **fps, char *id, detection *dets, int total, int classes, int w, int h)
    {
        int i, j;
        for(i = 0; i < total; ++i){
            float xmin = dets[i].bbox.x - dets[i].bbox.w/2. + 1;
            float xmax = dets[i].bbox.x + dets[i].bbox.w/2. + 1;
            float ymin = dets[i].bbox.y - dets[i].bbox.h/2. + 1;
            float ymax = dets[i].bbox.y + dets[i].bbox.h/2. + 1;

            if (xmin < 1) xmin = 1;
            if (ymin < 1) ymin = 1;
            if (xmax > w) xmax = w;
            if (ymax > h) ymax = h;

            for(j = 0; j < classes; ++j){
                if (dets[i].prob[j]) fprintf(fps[j], "%s %f %f %f %f %f\n", id, dets[i].prob[j],
                        xmin, ymin, xmax, ymax);
            }
        }
    }

    void print_imagenet_detections(FILE *fp, int id, detection *dets, int total, int classes, int w, int h)
    {
        int i, j;
        for(i = 0; i < total; ++i){
            float xmin = dets[i].bbox.x - dets[i].bbox.w/2.;
            float xmax = dets[i].bbox.x + dets[i].bbox.w/2.;
            float ymin = dets[i].bbox.y - dets[i].bbox.h/2.;
            float ymax = dets[i].bbox.y + dets[i].bbox.h/2.;

            if (xmin < 0) xmin = 0;
            if (ymin < 0) ymin = 0;
            if (xmax > w) xmax = w;
            if (ymax > h) ymax = h;

            for(j = 0; j < classes; ++j){
                int class = j;
                if (dets[i].prob[class]) fprintf(fp, "%d %d %f %f %f %f %f\n", id, j+1, dets[i].prob[class],
                        xmin, ymin, xmax, ymax);
            }
        }
    }

    void validate_detector_flip(char *datacfg, char *cfgfile, char *weightfile, char *outfile)
    {
        int j;
        list *options = read_data_cfg(datacfg);
        char *valid_images = option_find_str(options, "valid", "data/train.list");
        char *name_list = option_find_str(options, "names", "data/names.list");
        char *prefix = option_find_str(options, "results", "results");
        char **names = get_labels(name_list);
        char *mapf = option_find_str(options, "map", 0);
        int *map = 0;
        if (mapf) map = read_map(mapf);

        network *net = load_network(cfgfile, weightfile, 0);
        set_batch_network(net, 2);
        fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);
        srand(time(0));

        list *plist = get_paths(valid_images);
        char **paths = (char **)list_to_array(plist);

        layer l = net->layers[net->n-1];
        int classes = l.classes;

        char buff[1024];
        char *type = option_find_str(options, "eval", "voc");
        FILE *fp = 0;
        FILE **fps = 0;
        int coco = 0;
        int imagenet = 0;
        if(0==strcmp(type, "coco")){
            if(!outfile) outfile = "coco_results";
            snprintf(buff, 1024, "%s/%s.json", prefix, outfile);
            fp = fopen(buff, "w");
            fprintf(fp, "[\n");
            coco = 1;
        } else if(0==strcmp(type, "imagenet")){
            if(!outfile) outfile = "imagenet-detection";
            snprintf(buff, 1024, "%s/%s.txt", prefix, outfile);
            fp = fopen(buff, "w");
            imagenet = 1;
            classes = 200;
        } else {
            if(!outfile) outfile = "comp4_det_test_";
            fps = calloc(classes, sizeof(FILE *));
            for(j = 0; j < classes; ++j){
                snprintf(buff, 1024, "%s/%s%s.txt", prefix, outfile, names[j]);
                fps[j] = fopen(buff, "w");
            }
        }

        int m = plist->size;
        int i=0;
        int t;

        float thresh = .005;
        float nms = .45;

        int nthreads = 4;
        image *val = calloc(nthreads, sizeof(image));
        image *val_resized = calloc(nthreads, sizeof(image));
        image *buf = calloc(nthreads, sizeof(image));
        image *buf_resized = calloc(nthreads, sizeof(image));
        pthread_t *thr = calloc(nthreads, sizeof(pthread_t));

        image input = make_image(net->w, net->h, net->c*2);

        load_args args = {0};
        args.w = net->w;
        args.h = net->h;
        //args.type = IMAGE_DATA;
        args.type = LETTERBOX_DATA;

        for(t = 0; t < nthreads; ++t){
            args.path = paths[i+t];
            args.im = &buf[t];
            args.resized = &buf_resized[t];
            thr[t] = load_data_in_thread(args);
        }
        double start = what_time_is_it_now();
        for(i = nthreads; i < m+nthreads; i += nthreads){
            fprintf(stderr, "%d\n", i);
            for(t = 0; t < nthreads && i+t-nthreads < m; ++t){
                pthread_join(thr[t], 0);
                val[t] = buf[t];
                val_resized[t] = buf_resized[t];
            }
            for(t = 0; t < nthreads && i+t < m; ++t){
                args.path = paths[i+t];
                args.im = &buf[t];
                args.resized = &buf_resized[t];
                thr[t] = load_data_in_thread(args);
            }
            for(t = 0; t < nthreads && i+t-nthreads < m; ++t){
                char *path = paths[i+t-nthreads];
                char *id = basecfg(path);
                copy_cpu(net->w*net->h*net->c, val_resized[t].data, 1, input.data, 1);
                flip_image(val_resized[t]);
                copy_cpu(net->w*net->h*net->c, val_resized[t].data, 1, input.data + net->w*net->h*net->c, 1);

                network_predict(net, input.data);
                int w = val[t].w;
                int h = val[t].h;
                int num = 0;
                detection *dets = get_network_boxes(net, w, h, thresh, .5, map, 0, &num);
                if (nms) do_nms_sort(dets, num, classes, nms);
                if (coco){
                    print_cocos(fp, path, dets, num, classes, w, h);
                } else if (imagenet){
                    print_imagenet_detections(fp, i+t-nthreads+1, dets, num, classes, w, h);
                } else {
                    print_detector_detections(fps, id, dets, num, classes, w, h);
                }
                free_detections(dets, num);
                free(id);
                free_image(val[t]);
                free_image(val_resized[t]);
            }
        }
        for(j = 0; j < classes; ++j){
            if(fps) fclose(fps[j]);
        }
        if(coco){
            fseek(fp, -2, SEEK_CUR);
            fprintf(fp, "\n]\n");
            fclose(fp);
        }
        fprintf(stderr, "Total Detection Time: %f Seconds\n", what_time_is_it_now() - start);
    }

    void validate_detector(char *datacfg, char *cfgfile, char *weightfile, char *outfile)
    {
        int j;
        list *options = read_data_cfg(datacfg);
        char *valid_images = option_find_str(options, "valid", "data/train.list");
        char *name_list = option_find_str(options, "names", "data/names.list");
        char *prefix = option_find_str(options, "results", "results");
        char **names = get_labels(name_list);
        char *mapf = option_find_str(options, "map", 0);
        int *map = 0;
        if (mapf) map = read_map(mapf);

        network *net = load_network(cfgfile, weightfile, 0);
        set_batch_network(net, 1);
        fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);
        srand(time(0));

        list *plist = get_paths(valid_images);
        char **paths = (char **)list_to_array(plist);

        layer l = net->layers[net->n-1];
        int classes = l.classes;

        char buff[1024];
        char *type = option_find_str(options, "eval", "voc");
        FILE *fp = 0;
        FILE **fps = 0;
        int coco = 0;
        int imagenet = 0;
        if(0==strcmp(type, "coco")){
            if(!outfile) outfile = "coco_results";
            snprintf(buff, 1024, "%s/%s.json", prefix, outfile);
            fp = fopen(buff, "w");
            fprintf(fp, "[\n");
            coco = 1;
        } else if(0==strcmp(type, "imagenet")){
            if(!outfile) outfile = "imagenet-detection";
            snprintf(buff, 1024, "%s/%s.txt", prefix, outfile);
            fp = fopen(buff, "w");
            imagenet = 1;
            classes = 200;
        } else {
            if(!outfile) outfile = "comp4_det_test_";
            fps = calloc(classes, sizeof(FILE *));
            for(j = 0; j < classes; ++j){
                snprintf(buff, 1024, "%s/%s%s.txt", prefix, outfile, names[j]);
                fps[j] = fopen(buff, "w");
            }
        }


        int m = plist->size;
        int i=0;
        int t;

        float thresh = .005;
        float nms = .45;

        int nthreads = 4;
        image *val = calloc(nthreads, sizeof(image));
        image *val_resized = calloc(nthreads, sizeof(image));
        image *buf = calloc(nthreads, sizeof(image));
        image *buf_resized = calloc(nthreads, sizeof(image));
        pthread_t *thr = calloc(nthreads, sizeof(pthread_t));

        load_args args = {0};
        args.w = net->w;
        args.h = net->h;
        //args.type = IMAGE_DATA;
        args.type = LETTERBOX_DATA;

        for(t = 0; t < nthreads; ++t){
            args.path = paths[i+t];
            args.im = &buf[t];
            args.resized = &buf_resized[t];
            thr[t] = load_data_in_thread(args);
        }
        double start = what_time_is_it_now();
        for(i = nthreads; i < m+nthreads; i += nthreads){
            fprintf(stderr, "%d\n", i);
            for(t = 0; t < nthreads && i+t-nthreads < m; ++t){
                pthread_join(thr[t], 0);
                val[t] = buf[t];
                val_resized[t] = buf_resized[t];
            }
            for(t = 0; t < nthreads && i+t < m; ++t){
                args.path = paths[i+t];
                args.im = &buf[t];
                args.resized = &buf_resized[t];
                thr[t] = load_data_in_thread(args);
            }
            for(t = 0; t < nthreads && i+t-nthreads < m; ++t){
                char *path = paths[i+t-nthreads];
                char *id = basecfg(path);
                float *X = val_resized[t].data;
                network_predict(net, X);
                int w = val[t].w;
                int h = val[t].h;
                int nboxes = 0;
                detection *dets = get_network_boxes(net, w, h, thresh, .5, map, 0, &nboxes);
                if (nms) do_nms_sort(dets, nboxes, classes, nms);
                if (coco){
                    print_cocos(fp, path, dets, nboxes, classes, w, h);
                } else if (imagenet){
                    print_imagenet_detections(fp, i+t-nthreads+1, dets, nboxes, classes, w, h);
                } else {
                    print_detector_detections(fps, id, dets, nboxes, classes, w, h);
                }
                free_detections(dets, nboxes);
                free(id);
                free_image(val[t]);
                free_image(val_resized[t]);
            }
        }
        for(j = 0; j < classes; ++j){
            if(fps) fclose(fps[j]);
        }
        if(coco){
            fseek(fp, -2, SEEK_CUR);
            fprintf(fp, "\n]\n");
            fclose(fp);
        }
        fprintf(stderr, "Total Detection Time: %f Seconds\n", what_time_is_it_now() - start);
    }

    void validate_detector_recall(char *datacfg, char *cfgfile, char *weightfile)
    {
        network *net = load_network(cfgfile, weightfile, 0);
        set_batch_network(net, 1);
        fprintf(stderr, "Learning Rate: %g, Momentum: %g, Decay: %g\n", net->learning_rate, net->momentum, net->decay);
        srand(time(0));

        list *options = read_data_cfg(datacfg);
        char *valid_images = option_find_str(options, "valid", "data/train.list");
        list *plist = get_paths(valid_images);
        char **paths = (char **)list_to_array(plist);

        layer l = net->layers[net->n-1];

        int j, k;

        int m = plist->size;
        int i=0;

        float thresh = .001;
        float iou_thresh = .5;
        float nms = .4;

        int total = 0;
        int correct = 0;
        int proposals = 0;
        float avg_iou = 0;

        for(i = 0; i < m; ++i){
            char *path = paths[i];
            image orig = load_image_color(path, 0, 0);
            image sized = resize_image(orig, net->w, net->h);
            char *id = basecfg(path);
            network_predict(net, sized.data);
            int nboxes = 0;
            detection *dets = get_network_boxes(net, sized.w, sized.h, thresh, .5, 0, 1, &nboxes);
            if (nms) do_nms_obj(dets, nboxes, 1, nms);

            char labelpath[4096];
            find_replace(path, "images", "labels", labelpath);
            find_replace(labelpath, "JPEGImages", "labels", labelpath);
            find_replace(labelpath, ".jpg", ".txt", labelpath);
            find_replace(labelpath, ".JPEG", ".txt", labelpath);

            int num_labels = 0;
            box_label *truth = read_boxes(labelpath, &num_labels);
            for(k = 0; k < nboxes; ++k){
                if(dets[k].objectness > thresh){
                    ++proposals;
                }
            }
            for (j = 0; j < num_labels; ++j) {
                ++total;
                box t = {truth[j].x, truth[j].y, truth[j].w, truth[j].h};
                float best_iou = 0;
                // dets holds nboxes entries, so iterate over nboxes
                // (the pasted code looped to l.w*l.h*l.n, which can read past the array)
                for(k = 0; k < nboxes; ++k){
                    float iou = box_iou(dets[k].bbox, t);
                    if(dets[k].objectness > thresh && iou > best_iou){
                        best_iou = iou;
                    }
                }
                avg_iou += best_iou;
                if(best_iou > iou_thresh){
                    ++correct;
                }
            }

            fprintf(stderr, "%5d %5d %5d\tRPs/Img: %.2f\tIOU: %.2f%%\tRecall:%.2f%%\n", i, correct, total, (float)proposals/(i+1), avg_iou*100/total, 100.*correct/total);
            free(id);
            free_image(orig);
            free_image(sized);
        }
    }

    void test_detector(char *datacfg, char *cfgfile, char *weightfile, char *filename, float thresh, float hier_thresh, char *outfile, int fullscreen)
    {
        list *options = read_data_cfg(datacfg);
        char *name_list = option_find_str(options, "names", "data/names.list");
        char **names = get_labels(name_list);

        image **alphabet = load_alphabet();
        network *net = load_network(cfgfile, weightfile, 0);
        set_batch_network(net, 1);
        srand(2222222);
        double time;
        char buff[256];
        char *input = buff;
        float nms=.45;
        int i=0;
        while(1){
            if(filename){
                strncpy(input, filename, 256);
                image im = load_image_color(input, 0, 0);
                image sized = letterbox_image(im, net->w, net->h);
                //image sized = resize_image(im, net->w, net->h);
                //image sized2 = resize_max(im, net->w);
                //image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);
                //resize_network(net, sized.w, sized.h);
                layer l = net->layers[net->n-1];


                float *X = sized.data;
                time=what_time_is_it_now();
                network_predict(net, X);
                printf("%s: Predicted in %f seconds.\n", input, what_time_is_it_now()-time);
                int nboxes = 0;
                detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);
                //printf("%d\n", nboxes);
                //if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);
                if (nms) do_nms_sort(dets, nboxes, l.classes, nms);
                draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);
                free_detections(dets, nboxes);
                if(outfile)
                {
                    save_image(im, outfile);
                }
                else{
                    //save_image(im, "predictions");
                    // Save under the original file name; ./data/predict must already exist.
                    char out_path[2048];
                    sprintf(out_path, "./data/predict/%s", GetFilename(filename));
                    save_image(im, out_path);
                    printf("predict %s successfully!\n", GetFilename(filename));
    #ifdef OPENCV
                    cvNamedWindow("predictions", CV_WINDOW_NORMAL);
                    if(fullscreen){
                        cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);
                    }
                    show_image(im, "predictions");
                    cvWaitKey(0);
                    cvDestroyAllWindows();
    #endif
                }
                free_image(im);
                free_image(sized);
                if (filename) break;
            }
            else {
                printf("Enter Image Path: ");
                fflush(stdout);
                input = fgets(input, 256, stdin);
                if(!input) return;
                strtok(input, "\n");

                list *plist = get_paths(input);
                char **paths = (char **)list_to_array(plist);
                printf("Start Testing!\n");
                int m = plist->size;
                // Change "./data/out" to your own path, e.g. under /home/FENGsl/darknet/data
                if(access("./data/out", 0) == -1)
                {
                    if (mkdir("./data/out", 0777))
                    {
                        printf("Failed to create the output directory ./data/out\n");
                    }
                }
                for(i = 0; i < m; ++i){
                    char *path = paths[i];
                    image im = load_image_color(path, 0, 0);
                    image sized = letterbox_image(im, net->w, net->h);
                    //image sized = resize_image(im, net->w, net->h);
                    //image sized2 = resize_max(im, net->w);
                    //image sized = crop_image(sized2, -((net->w - sized2.w)/2), -((net->h - sized2.h)/2), net->w, net->h);
                    //resize_network(net, sized.w, sized.h);
                    layer l = net->layers[net->n-1];


                    float *X = sized.data;
                    time=what_time_is_it_now();
                    network_predict(net, X);
                    printf("Try Very Hard:");
                    printf("%s: Predicted in %f seconds.\n", path, what_time_is_it_now()-time);
                    int nboxes = 0;
                    detection *dets = get_network_boxes(net, im.w, im.h, thresh, hier_thresh, 0, 1, &nboxes);
                    //printf("%d\n", nboxes);
                    //if (nms) do_nms_obj(boxes, probs, l.w*l.h*l.n, l.classes, nms);
                    if (nms) do_nms_sort(dets, nboxes, l.classes, nms);
                    draw_detections(im, dets, nboxes, thresh, names, alphabet, l.classes);
                    free_detections(dets, nboxes);
                    if(outfile){
                        save_image(im, outfile);
                    }
                    else{

                        // Change "./data/out" to your own path here as well
                        char b[2048];
                        sprintf(b, "./data/out/%s", GetFilename(path));

                        save_image(im, b);
                        printf("save %s successfully!\n", GetFilename(path));
    #ifdef OPENCV
                        cvNamedWindow("predictions", CV_WINDOW_NORMAL);
                        if(fullscreen){
                            cvSetWindowProperty("predictions", CV_WND_PROP_FULLSCREEN, CV_WINDOW_FULLSCREEN);
                        }
                        show_image(im, "predictions");
                        cvWaitKey(0);
                        cvDestroyAllWindows();
    #endif
                    }

                    free_image(im);
                    free_image(sized);
                    if (filename) break;
                }
            }
        }
    }

    void run_detector(int argc, char **argv)
    {
        char *prefix = find_char_arg(argc, argv, "-prefix", 0);
        float thresh = find_float_arg(argc, argv, "-thresh", .5);
        float hier_thresh = find_float_arg(argc, argv, "-hier", .5);
        int cam_index = find_int_arg(argc, argv, "-c", 0);
        int frame_skip = find_int_arg(argc, argv, "-s", 0);
        int avg = find_int_arg(argc, argv, "-avg", 3);
        if(argc < 4){
            fprintf(stderr, "usage: %s %s [train/test/valid] [cfg] [weights (optional)]\n", argv[0], argv[1]);
            return;
        }
        char *gpu_list = find_char_arg(argc, argv, "-gpus", 0);
        char *outfile = find_char_arg(argc, argv, "-out", 0);
        int *gpus = 0;
        int gpu = 0;
        int ngpus = 0;
        if(gpu_list){
            printf("%s\n", gpu_list);
            int len = strlen(gpu_list);
            ngpus = 1;
            int i;
            for(i = 0; i < len; ++i){
                if (gpu_list[i] == ',') ++ngpus;
            }
            gpus = calloc(ngpus, sizeof(int));
            for(i = 0; i < ngpus; ++i){
                gpus[i] = atoi(gpu_list);
                gpu_list = strchr(gpu_list, ',')+1;
            }
        } else {
            gpu = gpu_index;
            gpus = &gpu;
            ngpus = 1;
        }

        int clear = find_arg(argc, argv, "-clear");
        int fullscreen = find_arg(argc, argv, "-fullscreen");
        int width = find_int_arg(argc, argv, "-w", 0);
        int height = find_int_arg(argc, argv, "-h", 0);
        int fps = find_int_arg(argc, argv, "-fps", 0);
        //int class = find_int_arg(argc, argv, "-class", 0);

        char *datacfg = argv[3];
        char *cfg = argv[4];
        char *weights = (argc > 5) ? argv[5] : 0;
        char *filename = (argc > 6) ? argv[6]: 0;
        if(0==strcmp(argv[2], "test")) test_detector(datacfg, cfg, weights, filename, thresh, hier_thresh, outfile, fullscreen);
        else if(0==strcmp(argv[2], "train")) train_detector(datacfg, cfg, weights, gpus, ngpus, clear);
        else if(0==strcmp(argv[2], "valid")) validate_detector(datacfg, cfg, weights, outfile);
        else if(0==strcmp(argv[2], "valid2")) validate_detector_flip(datacfg, cfg, weights, outfile);
        else if(0==strcmp(argv[2], "recall")) validate_detector_recall(datacfg,cfg, weights);
        else if(0==strcmp(argv[2], "demo")) {
            list *options = read_data_cfg(datacfg);
            int classes = option_find_int(options, "classes", 20);
            char *name_list = option_find_str(options, "names", "data/names.list");
            char **names = get_labels(name_list);
            demo(cfg, weights, thresh, cam_index, filename, names, classes, frame_skip, prefix, avg, hier_thresh, width, height, fps, fullscreen);
        }
        //else if(0==strcmp(argv[2], "extract")) extract_detector(datacfg, cfg, weights, cam_index, filename, class, thresh, frame_skip);
        //else if(0==strcmp(argv[2], "censor")) censor_detector(datacfg, cfg, weights, cam_index, filename, class, thresh, frame_skip);
    }

After changing the source and rebuilding darknet, run the test command without an image argument:

    ./darknet detector test cfg/voc.data cfg/yolov3-voc.cfg backup/yolov3-voc_final.weights
    layer     filters    size              input                output
        0 conv     32  3 x 3 / 1   416 x 416 x   3   ->   416 x 416 x  32  0.299 BFLOPs
        1 conv     64  3 x 3 / 2   416 x 416 x  32   ->   208 x 208 x  64  1.595 BFLOPs
        .......
      104 conv    256  3 x 3 / 1    52 x  52 x 128   ->    52 x  52 x 256  1.595 BFLOPs
      105 conv    255  1 x 1 / 1    52 x  52 x 256   ->    52 x  52 x 255  0.353 BFLOPs
      106 detection
    Loading weights from yolov3.weights...Done!
    Enter Image Path:

At the "Enter Image Path:" prompt, enter the path of a txt file that lists image paths. Here I entered the 2007_test.txt generated earlier.
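If the images to test are not part of a VOC-style split, you need to build such a txt file yourself. The following is a minimal sketch, assuming the list format is simply one image path per line (as in the 2007_test.txt written by voc_label.py); the function `write_image_list` and the demo file names are hypothetical.

```python
import os
import tempfile

IMAGE_EXTS = (".jpg", ".jpeg")


def write_image_list(image_dir, list_path):
    """Write the absolute path of every JPEG in image_dir to list_path,
    one path per line, and return how many paths were written."""
    root = os.path.abspath(image_dir)
    names = sorted(n for n in os.listdir(root) if n.lower().endswith(IMAGE_EXTS))
    with open(list_path, "w") as f:
        for name in names:
            f.write(os.path.join(root, name) + "\n")
    return len(names)


# Demo on a throwaway directory with two fake images and one unrelated file.
demo_dir = tempfile.mkdtemp()
for name in ("b.jpg", "a.JPG", "notes.txt"):
    open(os.path.join(demo_dir, name), "w").close()
list_path = os.path.join(demo_dir, "my_test.txt")
count = write_image_list(demo_dir, list_path)
print(count)  # 2
```

Absolute paths are used so the list keeps working no matter which directory darknet is started from.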

5. Python接口

darknet提供了python接口,直接使用python即可调用程序得到检测结果,python接口在`./darknet/python`文件夹中,调用的是编译时生成的libdarknet.so文件,不同的机器平台编译生成的文件不一样,如果换机器或使用cpu或gpu运行时,请重新编译一下。

该文件有两个文件夹:darknet.py 与provertbot.py,目前的版本支持python2.7,适当修改代码使其支持python3.x,个人做好的api已上传到github上,方便使用。

python darknet.py

输出结果形式为: res.append((meta.names[i], dets[j].prob[i], (b.x, b.y, b.w, b.h)))

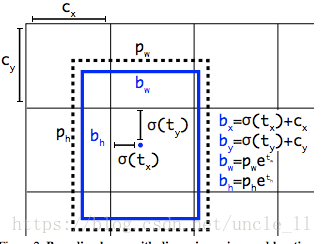

依次为检测出物体的名称,概率,检测框大小范围(在原图中所处的位置),其中x,y表示方框中心,w和h分别表示中心到两边的宽度和高度,如下图所示:

本人的api对其进行了更改,输出的是方框的横纵坐标的范围(x1,x2,y1,y2),且个人只分三类,区分服装上衣,下衣及全身装。读者有需要的话只需修改你训练的model位置及配置文件cfg即可。
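The change from (x, y, w, h) to a coordinate range can be sketched as follows. This is only an illustration of the arithmetic, not the code from the repository linked here; it assumes w and h are the full box width and height, and the function name `center_to_corners` and the optional clamping to the image size are my own additions.

```python
def center_to_corners(x, y, w, h, img_w=None, img_h=None):
    """Convert a center-format box into (x1, x2, y1, y2).

    If img_w and img_h are given, the result is clamped to the image."""
    x1, x2 = x - w / 2.0, x + w / 2.0
    y1, y2 = y - h / 2.0, y + h / 2.0
    if img_w is not None:
        x1, x2 = max(0.0, x1), min(float(img_w), x2)
    if img_h is not None:
        y1, y2 = max(0.0, y1), min(float(img_h), y2)
    return (x1, x2, y1, y2)


print(center_to_corners(200, 150, 100, 60))          # (150.0, 250.0, 120.0, 180.0)
print(center_to_corners(20, 30, 100, 100, 640, 480)) # (0.0, 70.0, 0.0, 80.0)
```

Clamping matters for boxes near the border, because a predicted box can extend slightly outside the image.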

https://github.com/UncleLLD/img-detect-yolov3

6. Computing mAP and recall

1. Generate the detection result files

    ./darknet detector valid cfg/car.data cfg/car.cfg backup/car_final.weights -out car.txt -gpu 0 -thresh .5

2. Compute mAP from car.txt with voc_eval from Faster R-CNN

    # /home/sam/src/caffeup2date_pyfasterrcnn/lib/datasets/compute_mAP.py
    from voc_eval import voc_eval
    print(voc_eval('/home/sam/src/darknet/results/{}.txt', '/home/sam/datasets/car2/VOC2007/Annotations/{}.xml', '/home/sam/datasets/car2/VOC2007/ImageSets/Main/test.txt', 'car', '.'))

The third value in the returned result is the AP.

To compute mAP only over detections with a confidence of at least 0.3, change the following code in voc_eval.py:

    sorted_ind = np.argsort(-confidence)
    sorted_ind1 = np.where(confidence[sorted_ind] >= .3)[0]  #np.argsort(-confidence<=-.3)
    sorted_ind = sorted_ind[sorted_ind1]
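The same confidence filter can also be applied to the result file before it reaches voc_eval. The sketch below is plain Python without NumPy and assumes each line has the form written by `print_detector_detections` in the darknet source, that is `image_id confidence xmin ymin xmax ymax`; the function name and the sample lines are made up for illustration.

```python
def filter_detections(lines, min_conf=0.3):
    """Keep detections with confidence >= min_conf, highest confidence first."""
    kept = []
    for line in lines:
        parts = line.split()
        if len(parts) != 6:
            continue  # skip blank or malformed lines
        image_id, conf = parts[0], float(parts[1])
        box = tuple(float(v) for v in parts[2:])
        if conf >= min_conf:
            kept.append((image_id, conf, box))
    kept.sort(key=lambda d: -d[1])
    return kept


# Hypothetical lines from a results file.
sample = [
    "000001 0.912000 48.0 30.0 210.0 180.0",
    "000001 0.120000 5.0 5.0 40.0 60.0",
    "000002 0.450000 100.0 80.0 300.0 240.0",
]
kept = filter_detections(sample)
print([d[0] for d in kept])  # ['000001', '000002']
```

Filtering removes the low-confidence tail of the precision/recall curve, so the AP computed afterwards is generally not comparable with an AP computed over all detections.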

3. Compute recall

    ./darknet detector recall cfg/car.data cfg/car.cfg backup/car_final.weights -out car.txt -gpu 0 -thresh .5
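To see roughly what the recall command measures, here is a simplified sketch of the logic in `validate_detector_recall`: a ground-truth box counts as found when some detection overlaps it with an IoU above 0.5. Boxes are (x_center, y_center, width, height); the function names and sample boxes are illustrative, and the objectness threshold applied in the C code is left out.

```python
def box_iou(a, b):
    """Intersection over union of two (x_center, y_center, w, h) boxes."""
    def overlap(c1, s1, c2, s2):
        left = max(c1 - s1 / 2.0, c2 - s2 / 2.0)
        right = min(c1 + s1 / 2.0, c2 + s2 / 2.0)
        return right - left

    w = overlap(a[0], a[2], b[0], b[2])
    h = overlap(a[1], a[3], b[1], b[3])
    if w <= 0 or h <= 0:
        return 0.0
    inter = w * h
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union


def recall(truths, detections, iou_thresh=0.5):
    """Fraction of ground-truth boxes matched by at least one detection."""
    if not truths:
        return 0.0
    correct = 0
    for t in truths:
        best = max((box_iou(d, t) for d in detections), default=0.0)
        if best > iou_thresh:
            correct += 1
    return correct / float(len(truths))


truths = [(0.5, 0.5, 0.2, 0.2), (0.2, 0.2, 0.1, 0.1)]
detections = [(0.5, 0.5, 0.2, 0.2), (0.8, 0.8, 0.1, 0.1)]
print(recall(truths, detections))  # 0.5
```

Only the first ground-truth box is matched in this example, so the recall is one out of two.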

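7. References

YOLO V3

References:

* YOLOv3: batch-testing images and saving them to a custom folder (batch testing)

Note: regarding the names of the saved images, the GetFilename(char *p) function limits the file name length; change it as needed.

* YOLOv3: Ubuntu setup and training on your own VOC-format dataset (setup and training)

* YOLOv3: training on your own data (mainly training; also useful for some testing issues)

* What the YOLO terminal output parameters mean

English: https://timebutt.github.io/static/understanding-yolov2-training-output/

Chinese: https://blog.csdn.net/dcrmg/article/details/78565440

* YOLO official documentation: https://pjreddie.com/darknet/yolo/

YOLO V2

References:

* Notes on training YOLOv2 on your own dataset, VOC format (visualization)

* Training YOLOv2 on your own dataset

* Training on your own data with YOLO v2

To be continued...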