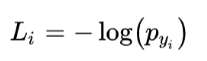

首先,对softmax loss 有一个简单的理解

Softmax loss =

![]()

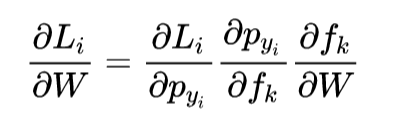

对参数W求导

至于推导过程,李弘毅老师的课程中在讲 logistic regression 的时候大概讲过类似的推导过程:

下面是代码部分:

- 数据加载及预处理

import random

import numpy as np

from cs231n.data_utils import load_CIFAR10

import matplotlib.pyplot as plt

def get_CIFAR10_data(num_training=49000, num_validation=1000, num_test=1000, num_dev=500):

"""

Load the CIFAR-10 dataset from disk and perform preprocessing to prepare

it for the linear classifier. These are the same steps as we used for the

SVM, but condensed to a single function.

"""

# Load the raw CIFAR-10 data

cifar10_dir = 'F:\pycharmFile\KNN\cifar-10-batches-py'

X_train, y_train, X_test, y_test = load_CIFAR10(cifar10_dir)

# subsample the data

mask = list(range(num_training, num_training + num_validation))

X_val = X_train[mask]

y_val = y_train[mask]

mask = list(range(num_training))

X_train = X_train[mask]

y_train = y_train[mask]

mask = list(range(num_test))

X_test = X_test[mask]

y_test = y_test[mask]

mask = np.random.choice(num_training, num_dev, replace=False)

X_dev = X_train[mask]

y_dev = y_train[mask]

# Preprocessing: reshape the image data into rows

X_train = np.reshape(X_train, (X_train.shape[0], -1))

X_val = np.reshape(X_val, (X_val.shape[0], -1))

X_test = np.reshape(X_test, (X_test.shape[0], -1))

X_dev = np.reshape(X_dev, (X_dev.shape[0], -1))

# Normalize the data: subtract the mean image

mean_image = np.mean(X_train, axis=0)

X_train -= mean_image

X_val -= mean_image

X_test -= mean_image

X_dev -= mean_image

# add bias dimension and transform into columns

X_train = np.hstack([X_train, np.ones((X_train.shape[0], 1))])

X_val = np.hstack([X_val, np.ones((X_val.shape[0], 1))])

X_test = np.hstack([X_test, np.ones((X_test.shape[0], 1))])

X_dev = np.hstack([X_dev, np.ones((X_dev.shape[0], 1))])

return X_train, y_train, X_val, y_val, X_test, y_test, X_dev, y_dev

# Invoke the above function to get our data.

X_train, y_train, X_val, y_val, X_test, y_test, X_dev, y_dev = get_CIFAR10_data()

# print('Train data shape: ', X_train.shape)

# print('Train labels shape: ', y_train.shape)

# print('Validation data shape: ', X_val.shape)

# print('Validation labels shape: ', y_val.shape)

# print('Test data shape: ', X_test.shape)

# print('Test labels shape: ', y_test.shape)

# print('dev data shape: ', X_dev.shape)

# print('dev labels shape: ', y_dev.shape)- 使用循环方式实现softmax loss

from cs231n.classifiers.softmax import softmax_loss_naive

import time

# Generate a random softmax weight matrix and use it to compute the loss.

W = np.random.randn(3073, 10) * 0.0001

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 0.0)

# As a rough sanity check, our loss should be something close to -log(0.1).

print('loss: %f' % loss)

print('sanity check: %f' % (-np.log(0.1)))实现过程 softmax_loss_naive 在文件 softmax.py 中

def softmax_loss_naive(W, X, y, reg):

# Initialize the loss and gradient to zero.

loss = 0.0

dW = np.zeros_like(W)

#############################################################################

# TODO: Compute the softmax loss and its gradient using explicit loops. #

# Store the loss in loss and the gradient in dW. If you are not careful #

# here, it is easy to run into numeric instability. Don't forget the #

# regularization! #

#############################################################################

num_train = X.shape[0]

num_classes = W.shape[1]

for i in range(num_train):

# 算出score

f_i = X[i].dot(W)

# 为避免数据不稳定问题,每个分值向量都减去向量中的最大值

f_i -= np.max(f_i)

# 计算Loss

sum_j = np.sum(np.exp(f_i))

p = lambda k : np.exp(f_i[k]) / sum_j

loss += -np.log(p(y[i]))

# 计算梯度

for k in range(num_classes):

p_k = p(k)

dW[:,k] += (p_k - (k == y[i])) * X[i]

loss /= num_train

# 加上正则项

loss += 0.5 * reg * np.sum(W * W)

dW /= num_train

dW += reg * W

#############################################################################

# END OF YOUR CODE #

#############################################################################

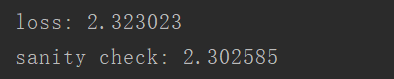

return loss, dW结果如图所示:

这里为什么要用 -np.log(0.1)) 来验证结果呢?

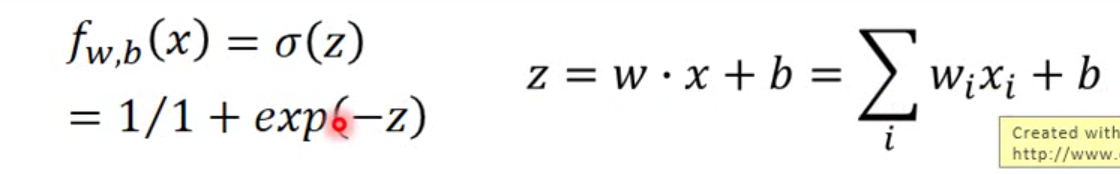

我们假设要分成C类,分类(W*X)后所得分数都近似等于0,那么:

再由

可得在初始第一次的loss为 -np.log(0.1)) (C = 10时)

- 使用数值梯度来验证

# 使用数值梯度来检查验证

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 0.0)

# As we did for the SVM, use numeric gradient checking as a debugging tool.

# The numeric gradient should be close to the analytic gradient.

from cs231n.gradient_check import grad_check_sparse

f = lambda w: softmax_loss_naive(w, X_dev, y_dev, 0.0)[0]

grad_numerical = grad_check_sparse(f, W, grad, 10)

# similar to SVM case, do another gradient check with regularization

loss, grad = softmax_loss_naive(W, X_dev, y_dev, 5e1)

f = lambda w: softmax_loss_naive(w, X_dev, y_dev, 5e1)[0]

grad_numerical = grad_check_sparse(f, W, grad, 10)- 实现全向量的loss和gradient

# 实现向量版的 softmax

tic = time.time()

loss_naive, grad_naive = softmax_loss_naive(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('naive loss: %e computed in %fs' % (loss_naive, toc - tic))

from cs231n.classifiers.softmax import softmax_loss_vectorized

tic = time.time()

loss_vectorized, grad_vectorized = softmax_loss_vectorized(W, X_dev, y_dev, 0.000005)

toc = time.time()

print('vectorized loss: %e computed in %fs' % (loss_vectorized, toc - tic))

# As we did for the SVM, we use the Frobenius norm to compare the two versions

# of the gradient.

grad_difference = np.linalg.norm(grad_naive - grad_vectorized, ord='fro')

print('Loss difference: %f' % np.abs(loss_naive - loss_vectorized))

print('Gradient difference: %f' % grad_difference)

softmax_loss_vectorized 在 softmax.py中实现

def softmax_loss_vectorized(W, X, y, reg):

# Initialize the loss and gradient to zero.

loss = 0.0

dW = np.zeros_like(W)

#############################################################################

# TODO: Compute the softmax loss and its gradient using no explicit loops. #

# Store the loss in loss and the gradient in dW. If you are not careful #

# here, it is easy to run into numeric instability. Don't forget the #

# regularization! #

#############################################################################

num_train = X.shape[0]

f = X.dot(W)

f -= np.max(f, axis=1, keepdims=True)

sum_f = np.sum(np.exp(f), axis=1, keepdims=True)

p = np.exp(f) / sum_f

loss = np.sum(-np.log(p[np.arange(num_train), y]))

ind = np.zeros_like(p)

ind[np.arange(num_train), y] = 1

dW = X.T.dot(p - ind)

loss /= num_train

loss += 0.5 * reg * np.sum(W * W)

dW /= num_train

dW += reg * W

#############################################################################

# END OF YOUR CODE #

#############################################################################

return loss, dW

- 对超参数learing rate 和 regulization 调优

# 超参数调优

from cs231n.classifiers import Softmax

results = {}

best_val = -1

best_softmax = None

learning_rates = [7e-7, 9e-7, 1.1e-6, 1.3e-6, 1.5e-6]

regularization_strengths = [2.5e4, 5e4, 7e4]

iters = 100

for lr in learning_rates:

for rs in regularization_strengths:

softmax = Softmax()

loss_ = softmax.train(X_train, y_train, learning_rate=lr, reg=rs, num_iters=iters)

y_train_pred = softmax.predict(X_train)

acc_train = np.mean(y_train == y_train_pred)

y_val_pred = softmax.predict(X_val)

acc_val = np.mean(y_val == y_val_pred)

results[(lr, rs)] = (acc_train, acc_val)

if best_val < acc_val:

best_val = acc_val

best_softmax = softmax

best_lr = lr

best_rs = rs

# 查看acc_train曲线

softmax = Softmax()

loss_, acc_ = softmax.train(X_train, y_train, learning_rate=best_lr, reg=best_rs, num_iters=iters, batch_size=200, acc_train_his=True)

plt.plot(acc_)

plt.xlabel('Iteration number')

plt.ylabel('Acc value')

plt.show()

# 画出loss曲线

plt.plot(loss_hist)

plt.xlabel('Iteration number')

plt.ylabel('Loss value')

plt.show()

# Print out results.

for lr, reg in sorted(results):

train_accuracy, val_accuracy = results[(lr, reg)]

print('lr %e reg %e train accuracy: %f val accuracy: %f' % (

lr, reg, train_accuracy, val_accuracy))

print('best validation accuracy achieved during cross-validation: %f' % best_val)画出最优超参的loss曲线

画出在training data 中每个batch后acc的变化,对acc的变化有一个直观的认识

softmax.train 和 softmax.predect 在 linear_classifier.py 中

from __future__ import print_function

import numpy as np

from cs231n.classifiers.linear_svm import *

from cs231n.classifiers.softmax import *

class LinearClassifier(object):

def __init__(self):

self.W = None

def train(self, X, y, learning_rate=1e-3, reg=1e-5, num_iters=100,

batch_size=200, verbose=False, acc_train_his=False):

num_train, dim = X.shape

num_classes = np.max(y) + 1 # assume y takes values 0...K-1 where K is number of classes

if self.W is None:

# lazily initialize W

self.W = 0.001 * np.random.randn(dim, num_classes)

# Run stochastic gradient descent to optimize W

loss_history = []

acc_his = []

for it in range(num_iters):

X_batch = None

y_batch = None

#对每个batch

if acc_train_his:

y_train_pred = self.predict(X)

acc_train = np.mean(y == y_train_pred)

acc_his.append(acc_train)

batch_inx = np.random.choice(num_train, batch_size)

X_batch = X[batch_inx,:]

y_batch = y[batch_inx]

#########################################################################

# END OF YOUR CODE #

#########################################################################

# evaluate loss and gradient

loss, grad = self.loss(X_batch, y_batch, reg)

loss_history.append(loss)

# perform parameter update

#########################################################################

# TODO: #

# Update the weights using the gradient and the learning rate. #

#########################################################################

self.W = self.W - learning_rate * grad

#########################################################################

# END OF YOUR CODE #

#########################################################################

if verbose and it % 10 == 0:

print('iteration %d / %d: loss %f' % (it, num_iters, loss))

return loss_history, acc_his

def predict(self, X):

"""

Use the trained weights of this linear classifier to predict labels for

data points.

Inputs:

- X: A numpy array of shape (N, D) containing training data; there are N

training samples each of dimension D.

Returns:

- y_pred: Predicted labels for the data in X. y_pred is a 1-dimensional

array of length N, and each element is an integer giving the predicted

class.

"""

y_pred = np.zeros(X.shape[0])

###########################################################################

# TODO: #

# Implement this method. Store the predicted labels in y_pred. #

###########################################################################

pred = np.dot(X, self.W)

y_pred = np.argmax(pred,axis=1)

###########################################################################

# END OF YOUR CODE #

###########################################################################

return y_pred在testing data上进行预测

# 预测

y_test_pred = best_softmax.predict(X_test)

test_accuracy = np.mean(y_test == y_test_pred)

print('softmax on raw pixels final test set accuracy: %f' % (test_accuracy, ))![]()

正确率约为 35%

- 画出 Weight 模板内容

# Visualize the learned weights for each class

w = best_softmax.W[:-1, :] # strip out the bias

w = w.reshape(32, 32, 3, 10)

w_min, w_max = np.min(w), np.max(w)

classes = ['plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck']

for i in range(10):

plt.subplot(2, 5, i + 1)

# Rescale the weights to be between 0 and 255

wimg = 255.0 * (w[:, :, :, i].squeeze() - w_min) / (w_max - w_min)

plt.imshow(wimg.astype('uint8'))

plt.axis('off')

plt.title(classes[i])

plt.show()