系列连载目录

- 请查看博客 《Paper》 4.1 小节 【Keras】Classification in CIFAR-10 系列连载

学习借鉴

- github:BIGBALLON/cifar-10-cnn

- 知乎专栏:写给妹子的深度学习教程

- Inception v3 Caffe 代码:https://github.com/soeaver/caffe-model/blob/master/cls/inception/deploy_inception-v3.prototxt

- Inception v4 keras 代码:https://github.com/titu1994/Inception-v4/blob/master/inception_v4.py (1×3,3×1)

- GoogleNet网络详解与keras实现:https://blog.csdn.net/qq_25491201/article/details/78367696

参考

代码下载

链接:https://pan.baidu.com/s/1ZiYlTnA4EsUeWAqj5zyKWw

提取码:vr59

硬件

- TITAN XP

1 理论基础

参考【BN】《Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift》

2 代码实现

[D] Why aren’t Inception-style networks successful on CIFAR-10/100?

2.1 Inception_v3

1)导入库,设置好超参数

import os

os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

os.environ["CUDA_VISIBLE_DEVICES"]="1"

import keras

import numpy as np

import math

from keras.datasets import cifar10

from keras.layers import Conv2D, MaxPooling2D, AveragePooling2D, ZeroPadding2D, GlobalAveragePooling2D

from keras.layers import Flatten, Dense, Dropout,BatchNormalization,Activation, Convolution2D

from keras.models import Model

from keras.layers import Input, concatenate

from keras import optimizers, regularizers

from keras.preprocessing.image import ImageDataGenerator

from keras.initializers import he_normal

from keras.callbacks import LearningRateScheduler, TensorBoard, ModelCheckpoint

num_classes = 10

batch_size = 64 # 64 or 32 or other

epochs = 300

iterations = 782

USE_BN=True

LRN2D_NORM = True

DROPOUT=0.2

CONCAT_AXIS=3

weight_decay=1e-4

DATA_FORMAT='channels_last' # Theano:'channels_first' Tensorflow:'channels_last'

log_filepath = './inception_v3'

2)数据预处理并设置 learning schedule

def color_preprocessing(x_train,x_test):

x_train = x_train.astype('float32')

x_test = x_test.astype('float32')

mean = [125.307, 122.95, 113.865]

std = [62.9932, 62.0887, 66.7048]

for i in range(3):

x_train[:,:,:,i] = (x_train[:,:,:,i] - mean[i]) / std[i]

x_test[:,:,:,i] = (x_test[:,:,:,i] - mean[i]) / std[i]

return x_train, x_test

def scheduler(epoch):

if epoch < 100:

return 0.01

if epoch < 200:

return 0.001

return 0.0001

# load data

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

y_train = keras.utils.to_categorical(y_train, num_classes)

y_test = keras.utils.to_categorical(y_test, num_classes)

x_train, x_test = color_preprocessing(x_train, x_test)

3)定义网络结构

- 3×3 → 3×1 + 1×3

def conv_block(x, nb_filter, nb_row, nb_col, border_mode='same', subsample=(1,1), bias=False):

x = Convolution2D(nb_filter, nb_row, nb_col, subsample=subsample, border_mode=border_mode, bias=bias,

init="he_normal",dim_ordering='tf',W_regularizer=regularizers.l2(weight_decay))(x)

x = BatchNormalization(momentum=0.9, epsilon=1e-5)(x)

x = Activation('relu')(x)

return x

- Inception module 1

average pooling

def inception_module1(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,lrn2d_norm=LRN2D_NORM,weight_decay=weight_decay):

(branch1,branch2,branch3,branch4)=params

if weight_decay:

kernel_regularizer=regularizers.l2(weight_decay)

bias_regularizer=regularizers.l2(weight_decay)

else:

kernel_regularizer=None

bias_regularizer=None

#1x1

pathway1=Conv2D(filters=branch1[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

#1x1->3x3

pathway2=Conv2D(filters=branch2[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2=Conv2D(filters=branch2[1],kernel_size=(3,3),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway2)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

#1x1->3x3+3x3

pathway3=Conv2D(filters=branch3[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

pathway3=Conv2D(filters=branch3[1],kernel_size=(3,3),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway3)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

pathway3=Conv2D(filters=branch3[1],kernel_size=(3,3),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway3)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

#3x3->1x1

pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)

pathway4=Conv2D(filters=branch4[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway4)

pathway4 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway4))

return concatenate([pathway1,pathway2,pathway3,pathway4],axis=concat_axis)

- inception reduce 1

max pooling

figure 10

去掉 pathway 1 中的 1×1

def inception_reduce1(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,lrn2d_norm=LRN2D_NORM,weight_decay=weight_decay):

(branch1,branch2)=params

if weight_decay:

kernel_regularizer=regularizers.l2(weight_decay)

bias_regularizer=regularizers.l2(weight_decay)

else:

kernel_regularizer=None

bias_regularizer=None

#1x1

pathway1 = Conv2D(filters=branch1[0],kernel_size=(3,3),strides=2,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

#1x1->3x3+3x3

pathway2 = Conv2D(filters=branch2[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2 = Conv2D(filters=branch2[1],kernel_size=(3,3),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway2)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2 = Conv2D(filters=branch2[1],kernel_size=(3,3),strides=2,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway2)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

#3x3->1x1

pathway3 = MaxPooling2D(pool_size=(3,3),strides=2,padding=padding,data_format=DATA_FORMAT)(x)

return concatenate([pathway1,pathway2,pathway3],axis=concat_axis)

- Inception module 2

average pooling

def inception_module2(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,lrn2d_norm=LRN2D_NORM,weight_decay=weight_decay):

(branch1,branch2,branch3,branch4)=params

if weight_decay:

kernel_regularizer=regularizers.l2(weight_decay)

bias_regularizer=regularizers.l2(weight_decay)

else:

kernel_regularizer=None

bias_regularizer=None

#1x1

pathway1=Conv2D(filters=branch1[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

#1x1->1x7->7x1

pathway2=Conv2D(filters=branch2[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2 = conv_block(pathway2,branch2[1],1,7)

pathway2 = conv_block(pathway2,branch2[2],7,1)

#1x1->7x1->1x7->7x1->1x7

pathway3=Conv2D(filters=branch3[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

pathway3 = conv_block(pathway3,branch3[1],7,1)

pathway3 = conv_block(pathway3,branch3[2],1,7)

pathway3 = conv_block(pathway3,branch3[3],7,1)

pathway3 = conv_block(pathway3,branch3[4],1,7)

#3x3->1x1

pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)

pathway4=Conv2D(filters=branch4[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway4)

pathway4 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway4))

return concatenate([pathway1,pathway2,pathway3,pathway4],axis=concat_axis)

- inception reduce 2

max pooling

pathway 1:1×1(1)→3×3(2)

pathway 2:1×1(1)→1×7(1)→7×1(1)→3×3(2)

pathway 3:max pooling 3×3(2)

def inception_reduce2(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,lrn2d_norm=LRN2D_NORM,weight_decay=weight_decay):

(branch1,branch2)=params

if weight_decay:

kernel_regularizer=regularizers.l2(weight_decay)

bias_regularizer=regularizers.l2(weight_decay)

else:

kernel_regularizer=None

bias_regularizer=None

#1x1->3x3

pathway1 = Conv2D(filters=branch1[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

pathway1 = Conv2D(filters=branch1[1],kernel_size=(3,3),strides=2,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway1)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

#1x1->1x7->7x1->3x3

pathway2 = Conv2D(filters=branch2[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2 = conv_block(pathway2,branch2[1],1,7)

pathway2 = conv_block(pathway2,branch2[2],7,1)

pathway2 = Conv2D(filters=branch2[3],kernel_size=(3,3),strides=2,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway2)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

#3x3->1x1

pathway3 = MaxPooling2D(pool_size=(3,3),strides=2,padding=padding,data_format=DATA_FORMAT)(x)

return concatenate([pathway1,pathway2,pathway3],axis=concat_axis)

- Inception module 3

average pooling

figure 7

def inception_module3(x,params,concat_axis,padding='same',data_format=DATA_FORMAT,use_bias=True,kernel_initializer="he_normal",bias_initializer='zeros',kernel_regularizer=None,bias_regularizer=None,activity_regularizer=None,kernel_constraint=None,bias_constraint=None,lrn2d_norm=LRN2D_NORM,weight_decay=weight_decay):

(branch1,branch2,branch3,branch4)=params

if weight_decay:

kernel_regularizer=regularizers.l2(weight_decay)

bias_regularizer=regularizers.l2(weight_decay)

else:

kernel_regularizer=None

bias_regularizer=None

#1x1

pathway1=Conv2D(filters=branch1[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway1 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway1))

#1x1->1x3+3x1

pathway2=Conv2D(filters=branch2[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway2 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway2))

pathway2_1 = conv_block(pathway2,branch2[1],1,3)

pathway2_2 = conv_block(pathway2,branch2[2],3,1)

#1x1->3x3->1x3+3x1

pathway3=Conv2D(filters=branch3[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(x)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

pathway3=Conv2D(filters=branch3[1],kernel_size=(3,3),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway3)

pathway3 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway3))

pathway3_1 = conv_block(pathway3,branch3[2],1,3)

pathway3_2 = conv_block(pathway3,branch3[3],3,1)

#3x3->1x1

pathway4=AveragePooling2D(pool_size=(3,3),strides=1,padding=padding,data_format=DATA_FORMAT)(x)

pathway4=Conv2D(filters=branch4[0],kernel_size=(1,1),strides=1,padding=padding,data_format=data_format,use_bias=use_bias,kernel_initializer=kernel_initializer,bias_initializer=bias_initializer,kernel_regularizer=kernel_regularizer,bias_regularizer=bias_regularizer,activity_regularizer=activity_regularizer,kernel_constraint=kernel_constraint,bias_constraint=bias_constraint)(pathway4)

pathway4 = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(pathway4))

return concatenate([pathway1,pathway2_1,pathway2_2,pathway3_1,pathway3_2,pathway4],axis=concat_axis)

4)搭建网络

valid 都用的 same

def create_model(img_input):

x = Conv2D(32,kernel_size=(3,3),strides=(2,2),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)

x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))

x = Conv2D(32,kernel_size=(3,3),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)

x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))

x = Conv2D(64,kernel_size=(3,3),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)

x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))

x=MaxPooling2D(pool_size=(3,3),strides=2,padding='same',data_format=DATA_FORMAT)(x)

x = Conv2D(80,kernel_size=(1,1),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)

x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))

x = Conv2D(192,kernel_size=(3,3),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(x)

x = Activation('relu')(BatchNormalization(momentum=0.9, epsilon=1e-5)(x))

x=MaxPooling2D(pool_size=(3,3),strides=2,padding='same',data_format=DATA_FORMAT)(x)

x=inception_module1(x,params=[(64,),(48,64),(64,96),(32,)],concat_axis=CONCAT_AXIS) #3a 256

x=inception_module1(x,params=[(64,),(48,64),(64,96),(64,)],concat_axis=CONCAT_AXIS) #3b 288

x=inception_module1(x,params=[(64,),(48,64),(64,96),(64,)],concat_axis=CONCAT_AXIS) #3c 288

x=inception_reduce1(x,params=[(384,),(64,96)],concat_axis=CONCAT_AXIS) # 768

x=inception_module2(x,params=[(192,),(128,128,192),(128,128,128,128,192),(192,)],concat_axis=CONCAT_AXIS) #4a 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4b 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4c 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4d 768

x=inception_module2(x,params=[(192,),(192,192,192),(192,192,192,192,192),(192,)],concat_axis=CONCAT_AXIS) #4e 768

x=inception_reduce2(x,params=[(192,320),(192,192,192,192)],concat_axis=CONCAT_AXIS) # 1280

x=inception_module3(x,params=[(320,),(384,384,384),(448,384,384,384),(192,)],concat_axis=CONCAT_AXIS) #4e 2048

x=inception_module3(x,params=[(320,),(384,384,384),(448,384,384,384),(192,)],concat_axis=CONCAT_AXIS) #4e 2048

x=GlobalAveragePooling2D()(x)

x=Dropout(DROPOUT)(x)

x = Dense(num_classes,activation='softmax',kernel_initializer="he_normal",

kernel_regularizer=regularizers.l2(weight_decay))(x)

return x

5)生成模型

img_input=Input(shape=(32,32,3))

output = create_model(img_input)

model=Model(img_input,output)

model.summary()

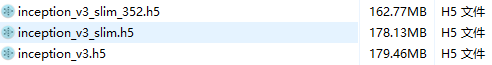

inception v3 参数量

Total params: 23,397,866

Trainable params: 23,359,978

Non-trainable params: 37,888

v1:Total params: 5,984,936

v2:Total params: 10,210,090

6)开始训练

# set optimizer

sgd = optimizers.SGD(lr=.1, momentum=0.9, nesterov=True)

model.compile(loss='categorical_crossentropy', optimizer=sgd, metrics=['accuracy'])

# set callback

tb_cb = TensorBoard(log_dir=log_filepath, histogram_freq=0)

change_lr = LearningRateScheduler(scheduler)

cbks = [change_lr,tb_cb]

# set data augmentation

datagen = ImageDataGenerator(horizontal_flip=True,

width_shift_range=0.125,

height_shift_range=0.125,

fill_mode='constant',cval=0.)

datagen.fit(x_train)

# start training

model.fit_generator(datagen.flow(x_train, y_train,batch_size=batch_size),

steps_per_epoch=iterations,

epochs=epochs,

callbacks=cbks,

validation_data=(x_test, y_test))

model.save('inception_v3.h5')

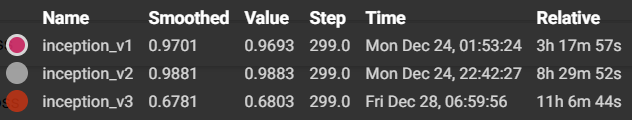

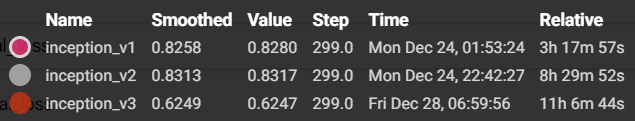

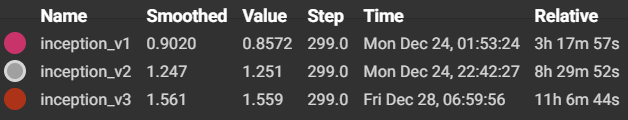

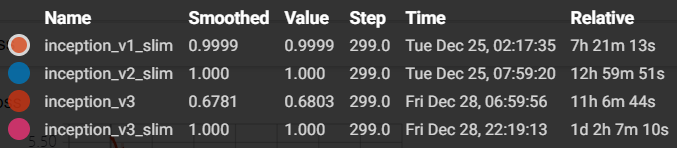

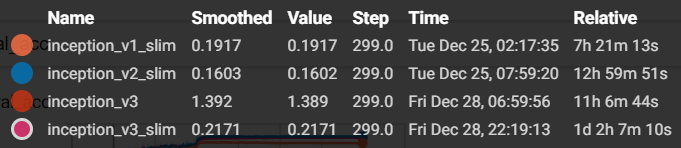

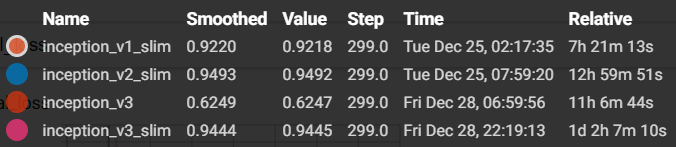

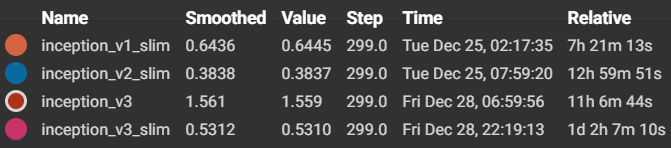

7)结果分析

training accuracy 和 training loss

- accuracy

- loss

因为前面的 stem 把 feature map 的分辨率降到了 4,后面 inception module 中 1x7 与 7x1 的效果可想而知,都是在处理 padding, 差,相当的差。

test accuracy 和 test loss

- accuracy

- loss

…………

结果意料之中

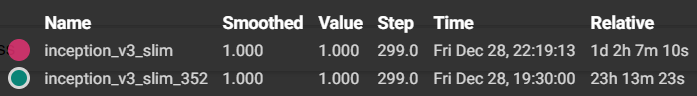

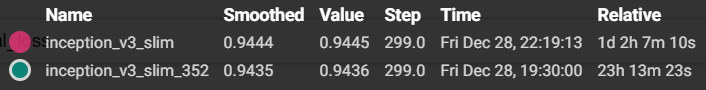

2.2 Inception_v3_slim

把 Inception_v3 中 stern 结构直接替换成一个卷积,inception 结构不变,因为stern结果会把原图降到1/8的分辨率,对于 ImageNet 还行,CIFRA-10的话有些吃不消了

- 调整网络结构

def create_model(img_input):

x = Conv2D(192,kernel_size=(3,3),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)

x=inception_module1(x,params=[(64,),(48,64),(64,96),(32,)],concat_axis=CONCAT_AXIS) #3a 256

x=inception_module1(x,params=[(64,),(48,64),(64,96),(64,)],concat_axis=CONCAT_AXIS) #3b 288

x=inception_module1(x,params=[(64,),(48,64),(64,96),(64,)],concat_axis=CONCAT_AXIS) #3c 288

x=inception_reduce1(x,params=[(384,),(64,96)],concat_axis=CONCAT_AXIS) # 768

x=inception_module2(x,params=[(192,),(128,128,192),(128,128,128,128,192),(192,)],concat_axis=CONCAT_AXIS) #4a 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4b 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4c 768

x=inception_module2(x,params=[(192,),(160,160,192),(160,160,160,160,192),(192,)],concat_axis=CONCAT_AXIS) #4d 768

x=inception_module2(x,params=[(192,),(192,192,192),(192,192,192,192,192),(192,)],concat_axis=CONCAT_AXIS) #4e 768

x=inception_reduce2(x,params=[(192,320),(192,192,192,192)],concat_axis=CONCAT_AXIS) # 1280

x=inception_module3(x,params=[(320,),(384,384,384),(448,384,384,384),(192,)],concat_axis=CONCAT_AXIS) #4e 2048

x=inception_module3(x,params=[(320,),(384,384,384),(448,384,384,384),(192,)],concat_axis=CONCAT_AXIS) #4e 2048

x=GlobalAveragePooling2D()(x)

x=Dropout(DROPOUT)(x)

x = Dense(num_classes,activation='softmax',kernel_initializer="he_normal",

kernel_regularizer=regularizers.l2(weight_decay))(x)

return x

参数量如下:

Total params: 23,229,370

Trainable params: 23,192,282

Non-trainable params: 37,088

v1:Total params: 5,984,936

v2:Total params: 10,210,090

v3:Total params: 23,397,866

结果分析如下:

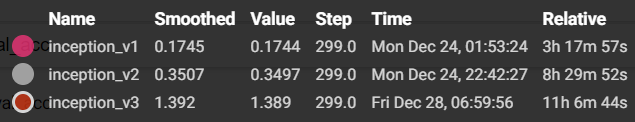

training accuracy 和 training loss

-

accuracy

-

loss

test accuracy 和 test loss

- accuracy

- loss

OK,精度 94%+,还行,hyper parameters 么有怎么调整

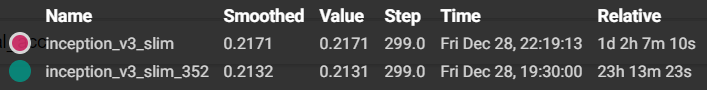

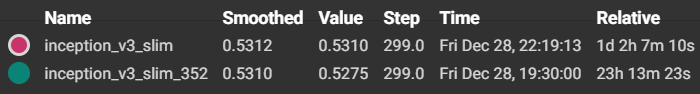

2.3 Inception_v3_slim_352

2.2 小节中的 inception module 1,2,3 内部重复的模块还有些细微的参数变动,现在将他们 inception module 重复的模块参数统一起来

修改如下

def create_model(img_input):

x = Conv2D(192,kernel_size=(3,3),strides=(1,1),padding='same',

kernel_initializer="he_normal",kernel_regularizer=regularizers.l2(weight_decay))(img_input)

# inception 3a-3c 288

for _ in range(3):

x=inception_module1(x,params=[(64,),(48,64),(64,96),(64,)],concat_axis=CONCAT_AXIS)

x=inception_reduce1(x,params=[(384,),(64,96)],concat_axis=CONCAT_AXIS) # 768

# inception 4a-4e 768

for _ in range(5):

x=inception_module2(x,params=[(192,),(128,128,192),(128,128,128,128,192),(192,)],concat_axis=CONCAT_AXIS)

x=inception_reduce2(x,params=[(192,320),(192,192,192,192)],concat_axis=CONCAT_AXIS) # 1280

# inception 5a-5b 2048

for _ in range(2):

x=inception_module3(x,params=[(320,),(384,384,384),(448,384,384,384),(192,)],concat_axis=CONCAT_AXIS)

x=GlobalAveragePooling2D()(x)

x=Dropout(DROPOUT)(x)

x = Dense(num_classes,activation='softmax',kernel_initializer="he_normal",

kernel_regularizer=regularizers.l2(weight_decay))(x)

return x

参数量:

Total params: 21,215,770

Trainable params: 21,180,538

Non-trainable params: 35,232

v1:Total params: 5,984,936

v2:Total params: 10,210,090

v3:Total params: 23,397,866

v3_slim:Total params: 23,229,370

结果分析如下:

training accuracy 和 training loss

- accuracy

- loss

test accuracy 和 test loss

- accuracy

- loss

差别不大,inception module 内部参数递增效果要比都一样的好一点点

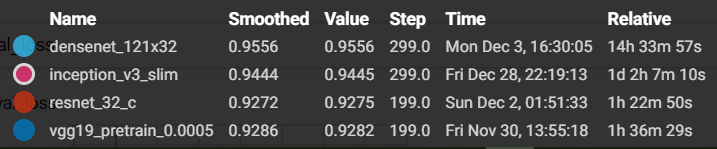

2.4 纵向对比

3 总结

模型大小