xxx\doubanmovie\doubanmovie\items

# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://doc.scrapy.org/en/latest/topics/items.html

# import scrapy
from scrapy import Item, Field


class DoubanmovieItem(Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    title = Field()
    movieInfo = Field()
    star = Field()
    quote = Field()

# items.py defines the data to be scraped and post-processed; an Item is a dict-like container.
# settings.py configures Scrapy: the user agent, the crawl delay, proxies, the various middlewares, and so on.
# pipelines.py holds the post-processing logic, so that scraping and processing stay separate.

xxx\doubanmovie\doubanmovie\settings

# -*- coding: utf-8 -*-

# Scrapy settings for doubanmovie project
#
# For simplicity, this file contains only settings considered important or
# commonly used. You can find more settings consulting the documentation:
#
#     https://doc.scrapy.org/en/latest/topics/settings.html
#     https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#     https://doc.scrapy.org/en/latest/topics/spider-middleware.html

BOT_NAME = 'doubanmovie'

SPIDER_MODULES = ['doubanmovie.spiders']
NEWSPIDER_MODULE = 'doubanmovie.spiders'

USER_AGENT = 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/65.0.3325.181 Safari/537.36'

# Save the scraped data as a CSV file at the location below
FEED_URI = u'file:///F:/xxx/doubanmovie/douban.csv'
FEED_FORMAT = 'csv'

# Crawl responsibly by identifying yourself (and your website) on the user-agent
# USER_AGENT = 'doubanmovie (+http://www.yourdomain.com)'

# Obey robots.txt rules
ROBOTSTXT_OBEY = True

# Configure maximum concurrent requests performed by Scrapy (default: 16)
#CONCURRENT_REQUESTS = 32

# Configure a delay for requests for the same website (default: 0)
# See https://doc.scrapy.org/en/latest/topics/settings.html#download-delay
# See also autothrottle settings and docs
#DOWNLOAD_DELAY = 3
# The download delay setting will honor only one of:
#CONCURRENT_REQUESTS_PER_DOMAIN = 16
#CONCURRENT_REQUESTS_PER_IP = 16

# Disable cookies (enabled by default)
#COOKIES_ENABLED = False

# Disable Telnet Console (enabled by default)
#TELNETCONSOLE_ENABLED = False

# Override the default request headers:
#DEFAULT_REQUEST_HEADERS = {
#   'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
#   'Accept-Language': 'en',
#}

# Enable or disable spider middlewares
# See https://doc.scrapy.org/en/latest/topics/spider-middleware.html
#SPIDER_MIDDLEWARES = {
#    'doubanmovie.middlewares.DoubanmovieSpiderMiddleware': 543,
#}

# Enable or disable downloader middlewares
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html
#DOWNLOADER_MIDDLEWARES = {
#    'doubanmovie.middlewares.DoubanmovieDownloaderMiddleware': 543,
#}

# Enable or disable extensions
# See https://doc.scrapy.org/en/latest/topics/extensions.html
#EXTENSIONS = {
#    'scrapy.extensions.telnet.TelnetConsole': None,
#}

# Configure item pipelines
# See https://doc.scrapy.org/en/latest/topics/item-pipeline.html
#ITEM_PIPELINES = {
#    'doubanmovie.pipelines.DoubanmoviePipeline': 300,
#}

# Enable and configure the AutoThrottle extension (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/autothrottle.html
#AUTOTHROTTLE_ENABLED = True
# The initial download delay
#AUTOTHROTTLE_START_DELAY = 5
# The maximum download delay to be set in case of high latencies
#AUTOTHROTTLE_MAX_DELAY = 60
# The average number of requests Scrapy should be sending in parallel to
# each remote server
#AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0
# Enable showing throttling stats for every response received:
#AUTOTHROTTLE_DEBUG = False

# Enable and configure HTTP caching (disabled by default)
# See https://doc.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings
#HTTPCACHE_ENABLED = True
#HTTPCACHE_EXPIRATION_SECS = 0
#HTTPCACHE_DIR = 'httpcache'
#HTTPCACHE_IGNORE_HTTP_CODES = []
#HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'
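The template above leaves the throttling options commented out. As a minimal sketch of what enabling them might look like, the fragment below could be appended to settings.py. The values are illustrative assumptions rather than the author's configuration, and the last line relies on the assumption that Scrapy's CSV exporter honors the utf-8-sig codec through FEED_EXPORT_ENCODING.

```python
# Hypothetical additions to settings.py; all values are illustrative assumptions.

# Wait roughly 3 seconds between requests to the same site.
DOWNLOAD_DELAY = 3

# Let Scrapy adapt the delay to how quickly the server responds.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5   # initial delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60    # upper bound when latency is high

# Export the feed as UTF-8 with a byte-order mark so that Excel is more
# likely to detect the encoding of the Chinese text (assumption, see lead-in).
FEED_EXPORT_ENCODING = 'utf-8-sig'
```

A fixed delay is the simplest way to crawl politely; AutoThrottle is worth trying when the server's response time varies.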

xxx\doubanmovie\doubanmovie\spiders\doubanspider

# -*- coding: utf-8 -*-
# Note: the User-Agent has to be set in settings.py.
# Generate a project with Scrapy first, then crawl the pages.

from scrapy.spiders import Spider
from scrapy.http import Request
from scrapy.selector import Selector
from doubanmovie.items import DoubanmovieItem


# A plain Spider is used instead of CrawlSpider: no rules are defined here,
# and CrawlSpider relies on parse() internally for its own rule handling.
class Douban(Spider):
    name = "doubanTest"
    start_urls = ['https://movie.douban.com/top250']
    url = 'https://movie.douban.com/top250'

    def parse(self, response):
        # Wrap the response in a Selector; response is an object with many attributes.
        selector = Selector(response)
        # Grab the outer block of every movie first.
        Movies = selector.xpath('//div[@class="info"]')
        for eachMovie in Movies:
            # Instantiate a fresh item for each movie.
            item = DoubanmovieItem()
            # Scrapy's XPath differs slightly from lxml's: .extract() is needed to get the text.
            title = eachMovie.xpath('div[@class="hd"]/a/span/text()').extract()
            # The title is split across several <span> elements; join them.
            fullTitle = ''.join(title)
            movieInfo = eachMovie.xpath('div[@class="bd"]/p/text()').extract()
            star = eachMovie.xpath('div[@class="bd"]/div[@class="star"]/span[@class="rating_num"]/text()').extract()
            quote = eachMovie.xpath('div[@class="bd"]/p/span/text()').extract()
            # quote may be missing: take the first element if present, else an empty string.
            quote = quote[0] if quote else ''
            item['title'] = fullTitle
            item['movieInfo'] = ';'.join(movieInfo)
            item['star'] = star
            item['quote'] = quote
            yield item  # yielded items are written to the CSV file configured in settings.py

        nextLink = selector.xpath('//span[@class="next"]/link/@href').extract()
        # The tenth page is the last one and has no next-page link.
        if nextLink:
            nextLink = nextLink[0]
            print(nextLink)
            # Use parse itself as the callback, so each following page is handled the same way.
            yield Request(self.url + nextLink, callback=self.parse)
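The spider builds the next-page URL by concatenating the base URL with the relative link found on the page. The sketch below shows the same step with the standard library's urljoin, plus a way to list every page URL up front. It is written for Python 3. The helper names, the example href value '?start=25&filter=', and the figure of 25 movies per page are assumptions for illustration, not taken from the original code.

```python
from urllib.parse import urljoin

BASE_URL = 'https://movie.douban.com/top250'


def next_page_url(base_url, next_links):
    """Return the absolute URL of the next page, or None on the last page.

    next_links is the list returned by .extract(); it is empty when the
    page has no next-page link.
    """
    if not next_links:
        return None
    # urljoin resolves a query-only reference against the base URL's path.
    return urljoin(base_url, next_links[0])


def all_page_urls(base_url, total=250, per_page=25):
    """Build every listing URL directly (assumes 25 movies per page)."""
    return ['{}?start={}&filter='.format(base_url, start)
            for start in range(0, total, per_page)]


if __name__ == '__main__':
    print(next_page_url(BASE_URL, ['?start=25&filter=']))
    print(len(all_page_urls(BASE_URL)))
```

Following the next-page link, as the spider does, keeps working if the page size changes. Generating the URLs up front is simpler but hard-codes that assumption.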

xxx\doubanmovie\main

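# -*- coding: utf-8 -*-
from scrapy import cmdline

# cmdline.execute runs a Scrapy command from Python. Here it runs
# "scrapy crawl doubanTest", the command that starts the spider.
# This differs from an ordinary Python program, which is started with
# "python" followed by the script name.
# The equivalent is to open cmd in the project folder and type: scrapy crawl doubanTest
cmdline.execute("scrapy crawl doubanTest".split())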

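Notes:

Import error:

The line "from doubanmovie.items import DoubanmovieItem" failed with:

ImportError: No module named doubanmovie.items

Fix: do not keep the project under PycharmProjects; doubanmovie1 has to be opened as a standalone project. Move doubanmovie1 out of PycharmProjects to the desktop or any other convenient directory, then in PyCharm choose File -> Open, open doubanmovie1, and select "New Window".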

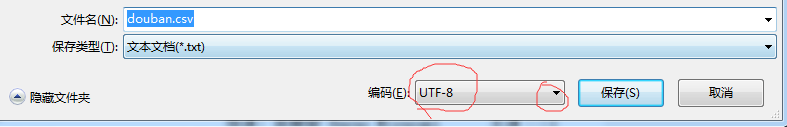
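The ImportError means Python could not find the doubanmovie package on its import path. As a rough sketch of a different workaround (not the PyCharm fix described above), a launcher script could locate the project root itself and add it to sys.path. This assumes the default Scrapy layout, where the directory holding scrapy.cfg also contains the doubanmovie package. The helper names are hypothetical.

```python
import os
import sys


def find_project_root(start_dir, marker='scrapy.cfg'):
    """Walk upward from start_dir until a directory containing marker is found."""
    current = os.path.abspath(start_dir)
    while True:
        if os.path.isfile(os.path.join(current, marker)):
            return current
        parent = os.path.dirname(current)
        if parent == current:  # reached the filesystem root without a match
            return None
        current = parent


def ensure_importable(start_dir):
    """Put the project root on sys.path so the project package can be imported."""
    root = find_project_root(start_dir)
    if root is not None and root not in sys.path:
        sys.path.insert(0, root)
    return root
```

Calling ensure_importable(os.path.dirname(os.path.abspath(__file__))) at the top of a launcher script, before importing doubanmovie.items, would be one way to use it.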

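Fixing garbled Chinese text when the CSV file is opened in Excel:

1. Open the CSV file in Notepad.

2. Use Save As and choose UTF-8 as the encoding.

3. Save the file. When it is opened again in Excel, the Chinese text is no longer garbled.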
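The Notepad steps above can also be scripted. The sketch below assumes the exported file is already UTF-8 encoded and that Excel misreads it only because the file lacks a byte-order mark (BOM). The function name and the file names in the usage note are hypothetical.

```python
import codecs


def add_utf8_bom(src_path, dst_path):
    """Copy a UTF-8 file, prepending a byte-order mark if it lacks one."""
    with open(src_path, 'rb') as src:
        data = src.read()
    # Only add the BOM once, so running the function again is harmless.
    if not data.startswith(codecs.BOM_UTF8):
        data = codecs.BOM_UTF8 + data
    with open(dst_path, 'wb') as dst:
        dst.write(data)
```

For example, add_utf8_bom('douban.csv', 'douban_excel.csv') would leave the original export untouched and write a copy intended for Excel.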