Data analysis

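The Titanic dataset (train.csv: 891 passenger rows, 12 columns)

PassengerId and Name clearly have nothing to do with survival, so they are dropped. The code below uses PassengerId as the row index and also drops Ticket.

Cabin: the cabin number. It has some relationship to survival, but most values are missing and there is no additional data for grouping the cabins, so it is dropped too.

Embarked: the port where the passenger boarded. The values S/C/Q must be converted to numbers!

Sex: likewise has to be converted to the numbers 0 and 1.

Missing values are filled in.

Finally, the Survived column must be extracted as the labels.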

Processing the table with Pandas

A feature with only two values (sex) can be turned into 0/1 with a boolean comparison. Another method, LabelEncoder(), appears later in this post.
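A minimal sketch of the boolean trick on a toy Series (the values are made up, not taken from train.csv): comparing against 'male' yields True/False, and astype('int') turns that into 1/0.

```python
import pandas as pd

# Toy stand-in for the Sex column (hypothetical values)
sex = pd.Series(['male', 'female', 'female', 'male'])

# The comparison gives a boolean Series; astype('int') maps True -> 1, False -> 0
sex_code = (sex == 'male').astype('int')
print(sex_code.tolist())  # [1, 0, 0, 1]
```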

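To convert a feature with several values into numeric codes 0, 1, 2, etc., the code uses:

- unique(): return the unique values of a Series, in order of first appearance
- tolist(): return the values as a Python list
- apply(): call a function on each value of a Series (DataFrame.apply applies a function along an axis)
- list.index(): return the position of the first occurrence of a value in a Python list (not to be confused with the pandas Index class, an immutable, ordered, sliceable set of labels)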
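The four calls combine as follows on a toy Embarked column (hypothetical values). Each port is replaced by its position in the list of unique values, so the code a port receives depends on where it first appears.

```python
import pandas as pd

# Toy stand-in for the Embarked column (hypothetical values)
embarked = pd.Series(['S', 'C', 'S', 'Q', 'C'])

labels = embarked.unique().tolist()               # order of first appearance
codes = embarked.apply(lambda n: labels.index(n))  # position of each value in labels
print(labels, codes.tolist())  # ['S', 'C', 'Q'] [0, 1, 0, 2, 1]
```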

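```python
import matplotlib.pyplot as plt
import numpy as np
import pandas as pd


def read_dataset(fname):
    # Use the first column (PassengerId) as the row index
    data = pd.read_csv(fname, index_col=0)
    # Drop columns that are not used as features
    data.drop(['Name', 'Ticket', 'Cabin'], axis=1, inplace=True)
    # Encode sex: male -> 1, female -> 0
    data['Sex'] = (data['Sex'] == 'male').astype('int')
    # Encode the port of embarkation as 0, 1, 2, ...
    # Note: unique() lists values in order of first appearance, so the codes
    # depend on the row order of the file being read
    labels = data['Embarked'].unique().tolist()
    data['Embarked'] = data['Embarked'].apply(lambda n: labels.index(n))
    # Fill the remaining missing values (e.g. Age) with 0
    data = data.fillna(0)
    return data


train = read_dataset(r'C:\Users\Qiuyi\Desktop\titanic\train.csv')
train.head()
```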
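Because read_dataset() builds the Embarked codes from whatever order the ports appear in the file, train.csv and test.csv can end up with different codes for the same port. A sketch of one way around this is a fixed mapping shared by both files. The dictionary EMBARKED_CODES, the helper encode_embarked and the choice to fill unknown ports with 0 are our own assumptions, not part of the original post.

```python
import pandas as pd

# Hypothetical fixed mapping (our own choice), shared by train and test
EMBARKED_CODES = {'S': 0, 'C': 1, 'Q': 2}


def encode_embarked(col):
    # map() turns missing or unknown ports into NaN; fill those with 0 ('S')
    return col.map(EMBARKED_CODES).fillna(0).astype('int')


train_col = pd.Series(['S', 'C', 'Q', None])
test_col = pd.Series(['Q', 'S', 'C'])
print(encode_embarked(train_col).tolist())  # [0, 1, 2, 0]
print(encode_embarked(test_col).tolist())   # [2, 0, 1]
```

Inside read_dataset() the two Embarked lines would then become data['Embarked'] = encode_embarked(data['Embarked']).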

Extract the Survived labels and split the data into a training set and a test set:

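```python
from sklearn.model_selection import train_test_split

# Labels: the Survived column; features: every other column
y = train['Survived'].values
X = train.drop(['Survived'], axis=1).values

# Hold out 20% as a test set (no random_state, so the split changes on every run)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)

print('train dataset: {0}; test dataset: {1}'.format(
    X_train.shape, X_test.shape))
```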

train dataset: (712, 7); test dataset: (179, 7)
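Since the split above has no random_state, the scores reported in this post change from run to run. A sketch on made-up labels (60 zeros, 40 ones) showing that random_state fixes the shuffle and stratify=y keeps the class ratio identical in both parts:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical labels: 60 zeros and 40 ones, plus one dummy feature column
y = np.array([0] * 60 + [1] * 40)
X = np.arange(100).reshape(-1, 1)

# random_state makes the split reproducible; stratify keeps 40% ones in each part
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=0.2, random_state=0, stratify=y)
print(X_tr.shape, X_te.shape, y_te.mean())  # (80, 1) (20, 1) 0.4
```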

Training a decision tree

```python
from sklearn.tree import DecisionTreeClassifier

clf = DecisionTreeClassifier()
clf.fit(X_train, y_train)
train_score = clf.score(X_train, y_train)
test_score = clf.score(X_test, y_test)
print('train score: {0}; test score: {1}'.format(train_score, test_score))
```

train score: 0.9859550561797753; test score: 0.7932960893854749

Overfitting! The tree scores about 0.99 on the training data but only about 0.79 on the test data.

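The remedy is pruning. Older versions of scikit-learn did not support post-pruning (minimal cost-complexity pruning through the ccp_alpha parameter arrived in version 0.22), so here we pre-prune with parameters such as max_depth: once the tree reaches the given depth it stops splitting. This reduces overfitting to some extent.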
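For comparison, a sketch of post-pruning with ccp_alpha (requires scikit-learn 0.22 or later). It uses synthetic data from make_classification as a stand-in for the Titanic features, which is an assumption so the snippet runs without the CSV files. cost_complexity_pruning_path() returns the effective alphas at which subtrees get pruned; larger alphas give smaller trees.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (assumption)
X, y = make_classification(n_samples=500, n_features=7, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=0)

# Effective alphas of the cost-complexity pruning path of a fully grown tree
path = DecisionTreeClassifier(random_state=0).cost_complexity_pruning_path(X_train, y_train)

results = []
for alpha in path.ccp_alphas:
    clf = DecisionTreeClassifier(random_state=0, ccp_alpha=alpha).fit(X_train, y_train)
    results.append((alpha, clf.get_n_leaves(), clf.score(X_test, y_test)))

# Print every fifth alpha: the leaf count shrinks as alpha grows
for alpha, leaves, score in results[::5]:
    print(f'alpha={alpha:.4f} leaves={leaves} test score={score:.3f}')
```

The best alpha could then be chosen with the same "score every candidate" loop used below for max_depth.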

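DRY (Don't Repeat Yourself): instead of trying parameter values one by one, define a range of values, score a model for each one, and pick the value with the highest score. Note that the "cross-validation score" below is really the score on the single held-out test split, not a true cross-validation.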

np.argmax(): returns the indices of the maximum values along an axis.
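One detail matters when several parameter values tie: np.argmax returns the index of the first maximum, so the loop below will report the earliest (smallest) parameter among equally good ones. The scores here are made up.

```python
import numpy as np

scores = [0.79, 0.84, 0.82, 0.84]  # hypothetical scores with a tie
best = np.argmax(scores)           # first occurrence of the maximum wins
print(best, scores[best])  # 1 0.84
```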

```python
def cv_score(d):
    # Train a tree of maximum depth d; return (training score, held-out score)
    clf = DecisionTreeClassifier(max_depth=d)
    clf.fit(X_train, y_train)
    tr_score = clf.score(X_train, y_train)
    val_score = clf.score(X_test, y_test)
    return (tr_score, val_score)


depths = range(2, 15)
scores = [cv_score(d) for d in depths]
tr_scores = [s[0] for s in scores]
cv_scores = [s[1] for s in scores]

# Pick the depth with the highest held-out score
best_score_index = np.argmax(cv_scores)
best_score = cv_scores[best_score_index]
best_param = depths[best_score_index]
print('best param: {0}; best score: {1}'.format(best_param, best_score))

plt.figure(figsize=(10, 6), dpi=144)
plt.grid()
plt.xlabel('max depth of decision tree')
plt.ylabel('score')
plt.plot(depths, cv_scores, '.g-', label='cross-validation score')
plt.plot(depths, tr_scores, '.r--', label='training score')
plt.legend()
plt.show()
```

best param: 5; best score: 0.8435754189944135


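Use the same approach to examine min_impurity_decrease (the older min_impurity_split parameter is deprecated). This is a threshold on the impurity (entropy or Gini impurity): a node is split only if the split reduces the impurity, weighted by the fraction of samples reaching the node, by at least this value; otherwise the node stays a leaf.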
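According to the scikit-learn documentation, the weighted decrease compared against the threshold is N_t / N * (impurity - N_t_R / N_t * right_impurity - N_t_L / N_t * left_impurity), where N is the total number of samples and N_t, N_t_L, N_t_R are the counts at the node and its children. A sketch on synthetic data (an assumption, standing in for the Titanic features) showing that raising the threshold yields smaller trees:

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (assumption)
X, y = make_classification(n_samples=500, n_features=7, random_state=0)

leaves = {}
for val in [0.0, 0.001, 0.005, 0.02]:
    clf = DecisionTreeClassifier(min_impurity_decrease=val, random_state=0).fit(X, y)
    leaves[val] = clf.get_n_leaves()
    print(f'min_impurity_decrease={val}: {leaves[val]} leaves, depth {clf.get_depth()}')
```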

```python
# Train a model and compute its scores
def cv_score(val):
    # Switching criterion='gini' to 'entropy' here gives similar results
    clf = DecisionTreeClassifier(criterion='gini', min_impurity_decrease=val)
    clf.fit(X_train, y_train)
    tr_score = clf.score(X_train, y_train)
    val_score = clf.score(X_test, y_test)
    return (tr_score, val_score)


# Define the parameter range, train a model for each value and score it
values = np.linspace(0, 0.005, 50)
scores = [cv_score(v) for v in values]
tr_scores = [s[0] for s in scores]
cv_scores = [s[1] for s in scores]

# Find the parameter value with the highest score
best_score_index = np.argmax(cv_scores)
best_score = cv_scores[best_score_index]
best_param = values[best_score_index]
print('best param: {0}; best score: {1}'.format(best_param, best_score))

# Plot the scores against the parameter value
plt.figure(figsize=(10, 6), dpi=144)
plt.grid()
plt.xlabel('min_impurity_decrease (gini)')
plt.ylabel('score')
plt.plot(values, cv_scores, '.g-', label='cross-validation score')
plt.plot(values, tr_scores, '.r--', label='training score')
plt.legend()
plt.show()
```

best param: 0.0012244897959183673; best score: 0.8212290502793296


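sklearn.model_selection.GridSearchCV: exhaustive search over specified parameter values for an estimator. With cv=5 it scores every candidate by 5-fold cross-validation on the full X, y rather than on a single held-out split as above, so its best scores are not directly comparable with the earlier ones.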
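What GridSearchCV automates is essentially the DRY loop from before, with a cross-validated score per candidate. A sketch on synthetic data (an assumption) that does the loop by hand with cross_val_score and checks it against GridSearchCV; a fixed random_state makes both build identical trees.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, cross_val_score
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (assumption)
X, y = make_classification(n_samples=300, n_features=7, random_state=0)
thresholds = np.linspace(0, 0.005, 11)

# Manual version: mean 5-fold CV accuracy for every candidate, then argmax
manual = [cross_val_score(DecisionTreeClassifier(min_impurity_decrease=t, random_state=0),
                          X, y, cv=5).mean()
          for t in thresholds]
best_idx = int(np.argmax(manual))

# GridSearchCV runs the same loop (and then refits the best model on all of X, y)
gs = GridSearchCV(DecisionTreeClassifier(random_state=0),
                  {'min_impurity_decrease': thresholds}, cv=5)
gs.fit(X, y)
print(thresholds[best_idx], manual[best_idx])
print(gs.best_params_, gs.best_score_)
```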

```python
def plot_curve(param_values, cv_results, xlabel):
    # Mean and standard deviation of the training and cross-validation scores
    train_scores_mean = cv_results['mean_train_score']
    train_scores_std = cv_results['std_train_score']
    test_scores_mean = cv_results['mean_test_score']
    test_scores_std = cv_results['std_test_score']
    plt.figure(figsize=(10, 6), dpi=144)
    plt.title('parameter tuning')
    plt.grid()
    plt.xlabel(xlabel)
    plt.ylabel('score')
    # Shaded bands: mean +/- one standard deviation across the folds
    plt.fill_between(param_values,
                     train_scores_mean - train_scores_std,
                     train_scores_mean + train_scores_std,
                     alpha=0.1, color="r")
    plt.fill_between(param_values,
                     test_scores_mean - test_scores_std,
                     test_scores_mean + test_scores_std,
                     alpha=0.1, color="g")
    plt.plot(param_values, train_scores_mean, '.--', color="r",
             label="Training score")
    plt.plot(param_values, test_scores_mean, '.-', color="g",
             label="Cross-validation score")
    plt.legend(loc="best")
    plt.show()


from sklearn.model_selection import GridSearchCV

thresholds = np.linspace(0, 0.005, 50)
# Set the parameters by cross-validation
param_grid = {'min_impurity_decrease': thresholds}
clf = GridSearchCV(DecisionTreeClassifier(), param_grid, cv=5, return_train_score=True)
clf.fit(X, y)
print("best param: {0}\nbest score: {1}".format(clf.best_params_,
                                                clf.best_score_))
plot_curve(thresholds, clf.cv_results_, xlabel='gini thresholds')
```

best param: {'min_impurity_decrease': 0.0012244897959183673}

best score: 0.8114478114478114

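Next, put max_depth, min_impurity_decrease (for both the entropy and Gini criteria) and min_samples_split into a single GridSearchCV call to look for the best parameters.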

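sklearn.tree.DecisionTreeClassifier, parameter min_samples_split: int or float, optional (default=2)

The minimum number of samples required to split an internal node: if int, that number is the minimum; if float, it is a fraction, and ceil(min_samples_split * n_samples) is the minimum.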
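A sketch that checks the float form against a fitted tree. It uses 712 synthetic samples (an assumption, chosen to mirror the size of the training split) and inspects the fitted tree_ arrays: every internal node, i.e. every node with children, should hold at least ceil(0.05 * 712) = 36 samples.

```python
import math

from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the 712-row training split (assumption)
X, y = make_classification(n_samples=712, n_features=7, random_state=0)
clf = DecisionTreeClassifier(min_samples_split=0.05, random_state=0).fit(X, y)

min_split = math.ceil(0.05 * len(X))  # fractional setting -> absolute minimum
tree = clf.tree_
internal = tree.children_left != -1   # leaves have children_left == -1
print(min_split, tree.n_node_samples[internal].min())
```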

```python
from sklearn.model_selection import GridSearchCV

entropy_thresholds = np.linspace(0, 0.01, 50)
gini_thresholds = np.linspace(0, 0.005, 50)

# Set the parameters by cross-validation
# Note: a list of dicts means each dict is searched as a separate grid
param_grid = [{'max_depth': range(2, 10)},
              {'criterion': ['entropy'], 'min_impurity_decrease': entropy_thresholds},
              {'criterion': ['gini'], 'min_impurity_decrease': gini_thresholds},
              {'min_samples_split': range(2, 30, 2)}]
clf = GridSearchCV(DecisionTreeClassifier(), param_grid, cv=5, return_train_score=True)
clf.fit(X, y)
print("best param: {0}\nbest score: {1}".format(clf.best_params_,
                                                clf.best_score_))
```

best param: {'criterion': 'entropy', 'min_impurity_decrease': 0.002857142857142857}

best score: 0.8226711560044894

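Why do max_depth and min_samples_split not show up in the best parameters? Because param_grid is a list of dicts, GridSearchCV searches each dict as a separate grid and never combines parameters from different dicts. best_params_ holds only the parameters of the single best candidate, which here came from the entropy grid; for that candidate max_depth and min_samples_split keep their defaults (None and 2).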
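To actually search combinations, the parameters go into one dict: GridSearchCV then tries the full Cartesian product. A sketch on synthetic data (an assumption); the value ranges are illustrative and much coarser than the ones above to keep the run short.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the Titanic features (assumption)
X, y = make_classification(n_samples=300, n_features=7, random_state=0)

# One dict = one grid: every combination is tried (8 depths x 6 thresholds = 48)
param_grid = {
    'max_depth': list(range(2, 10)),
    'min_impurity_decrease': np.linspace(0, 0.005, 6),
}
clf = GridSearchCV(DecisionTreeClassifier(random_state=0), param_grid, cv=5)
clf.fit(X, y)
print(len(clf.cv_results_['params']))  # 48
print(clf.best_params_)  # has both max_depth and min_impurity_decrease
```

The number of fits grows multiplicatively with every parameter added, so the Titanic-sized ranges above (for example 8 depths x 50 thresholds x 14 split sizes) would take noticeably longer.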

```python
# Retrain on the training split with the best parameters found above
clf = DecisionTreeClassifier(criterion='entropy', min_impurity_decrease=0.002857142857142857)
clf.fit(X_train, y_train)
train_score = clf.score(X_train, y_train)
test_score = clf.score(X_test, y_test)
print('train score: {0}; test score: {1}'.format(train_score, test_score))
```

train score: 0.8890449438202247; test score: 0.8100558659217877

Export the tree to titanic.dot:

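```python
from sklearn.tree import export_graphviz

# Write the trained tree in Graphviz .dot format; feature_names labels the
# nodes with column names (e.g. Sex) instead of X[1], X[2], ...
with open("titanic.dot", 'w') as f:
    export_graphviz(clf, out_file=f,
                    feature_names=train.drop(['Survived'], axis=1).columns.tolist())

# 1. Install Graphviz on the computer
# 2. Run `dot -Tpng titanic.dot -o titanic.png`
# 3. Open the generated tree image titanic.png in the current directory
```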
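If installing Graphviz is a hassle, scikit-learn (0.21 or later) can also print a tree as plain text with export_text, with no external programs required. A sketch on a small synthetic tree; the data and the feature names f0, f1, f2 are hypothetical.

```python
from sklearn.datasets import make_classification
from sklearn.tree import DecisionTreeClassifier, export_text

# Small synthetic problem (assumption) with three features
X, y = make_classification(n_samples=200, n_features=3, n_informative=2,
                           n_redundant=0, random_state=0)
clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(X, y)

# Hypothetical feature names, one per column
report = export_text(clf, feature_names=['f0', 'f1', 'f2'])
print(report)
```

For the Titanic tree, passing train.drop(['Survived'], axis=1).columns.tolist() as feature_names would label the splits with the real column names.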

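Troubleshooting: running dot -Tpng titanic.dot -o titanic.png failed.

```
>>>cd C:\Users\Qiuyi\Desktop\titanic
>>>python3
>>>import graphviz
>>>dot -Tpng titanic.dot -o titanic.png
SyntaxError: invalid syntax
```

Running it in the Windows command prompt gave:

```
'dot' is not recognized as an internal or external command, operable program or batch file.
```

dot is a Graphviz command-line program, not Python code, so it must be run in the command prompt rather than at the >>> prompt, and the command prompt can only find it once Graphviz is installed and on PATH.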

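Solution:

First install Graphviz itself. pip3 install graphviz only installs the Python bindings, which still need the Graphviz programs. Instead, download and run the graphviz-2.38.msi installer, then add the bin folder inside the installation directory to PATH:

Control Panel → System and Security → System → Advanced system settings → Advanced → Environment Variables → under System variables, select Path → Edit → New → paste the path → OK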
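To check from Python whether the dot program is now reachable, shutil.which searches PATH the same way the command prompt does. Keep in mind that a PATH change only applies to command prompts (and Python sessions) started after the change. A minimal sketch:

```python
import shutil

# Full path of the dot executable if its folder is on PATH, otherwise None
dot_path = shutil.which('dot')
print(dot_path or "dot not found: add Graphviz's bin folder to PATH and open a new terminal")
```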

Analyzing the decision tree:

Reference (Zhihu): Python决策树模型做 Titanic数据集预测并可视化 ("Titanic prediction and visualization with a Python decision tree model")

The root node splits on Sex with the condition Sex <= 0.5. Since Sex only takes the values 0 (female) and 1 (male), True means female and False means male.

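In each node, value holds two numbers: the left one is the number of passengers in that node who died, the right one the number who survived. Reading the tree this way, the first split on sex separates 253 women (value: 62 died, 191 survived) from 459 men (371 died, 88 survived). It seems the men on the Titanic really did put the women first when facing life and death~ The 712 passengers in total are the training split taken from train.csv.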
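To see why Sex wins the root split, the counts quoted above can be plugged into the impurity-decrease formula. A sketch assuming the entropy criterion (base-2 logarithm), which matches the criterion='entropy' model exported above; the counts are those read off the tree, and the helper entropy() is our own.

```python
import math


def entropy(counts):
    # Shannon entropy in bits of a list of class counts
    n = sum(counts)
    return -sum(c / n * math.log2(c / n) for c in counts if c > 0)


# [died, survived] counts from the tree
female = [62, 191]                   # Sex <= 0.5
male = [371, 88]                     # Sex > 0.5
root = [62 + 371, 191 + 88]          # all 712 training passengers

n, n_l, n_r = sum(root), sum(female), sum(male)
# At the root N_t / N = 1, so the weighted decrease is just the information gain
decrease = entropy(root) - n_l / n * entropy(female) - n_r / n * entropy(male)
print(f'entropy root={entropy(root):.3f}, female={entropy(female):.3f}, male={entropy(male):.3f}')
print(f'impurity decrease of the Sex split: {decrease:.3f}')
```

The decrease is far above the min_impurity_decrease threshold of about 0.0029, so this split is kept; the threshold only starts to bite deeper in the tree, where nodes are small and N_t / N shrinks.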

LabelEncoder()

```python
from sklearn.preprocessing import LabelEncoder

df = pd.read_csv(r'C:\Users\Qiuyi\Desktop\titanic\train.csv')
le = LabelEncoder()
le.fit(df['Sex'])
# Replace the string labels with integer codes (classes are sorted: female -> 0, male -> 1)
df['Sex'] = le.transform(df['Sex'])
print(df.Sex)
```

```
0      1
1      0
2      0
…
889    1
890    1
Name: Sex, Length: 891, dtype: int64
```
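LabelEncoder stores the sorted class names in classes_ and can map codes back with inverse_transform, which is handy when reading predictions. A small sketch on made-up values:

```python
from sklearn.preprocessing import LabelEncoder

le = LabelEncoder()
codes = le.fit_transform(['male', 'female', 'female', 'male'])  # hypothetical values

print(le.classes_)                             # ['female' 'male'] (sorted)
print(codes.tolist())                          # [1, 0, 0, 1]
print(le.inverse_transform([0, 1]).tolist())   # ['female', 'male']
```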

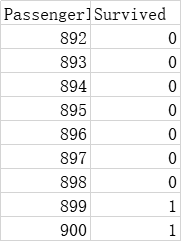

Predicting the test set and submitting for scoring

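```python
# Preprocess test.csv the same way as the training data
# (a new name, so the X_test split from earlier is not overwritten)
test_data = read_dataset(r'C:\Users\Qiuyi\Desktop\titanic\test.csv')
test_data.head()
y_predict = clf.predict(test_data.values)

# Kaggle expects two columns: PassengerId (the index here) and Survived
final = pd.DataFrame({'Survived': y_predict}, index=test_data.index)
final.to_csv(r'C:\Users\Qiuyi\Desktop\titanic\PredictTitanic.csv', index=True)
```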

Submitting this directly scored only 0.73205, haha.