Paper title: "U-Net: Convolutional Networks for Biomedical Image Segmentation"

Paper: https://arxiv.org/pdf/1505.04597v1.pdf

Code: https://github.com/jakeret/tf_unet

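Motivation

First, most earlier deep learning models were classifiers, but many vision tasks, medical image processing in particular, need semantic segmentation: a class label for every single pixel.

Second, many tasks have no large-scale dataset comparable to ImageNet, and collecting one is very expensive.

Finally, earlier methods were too slow, and they faced a trade-off between localization accuracy and the use of context in the image. Many recent methods address this by combining features from multiple layers, and this paper does the same.

Overview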

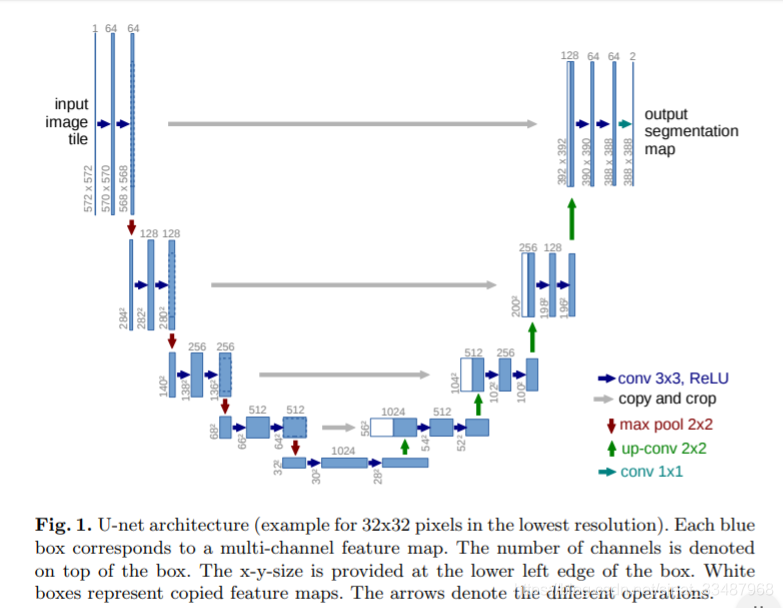

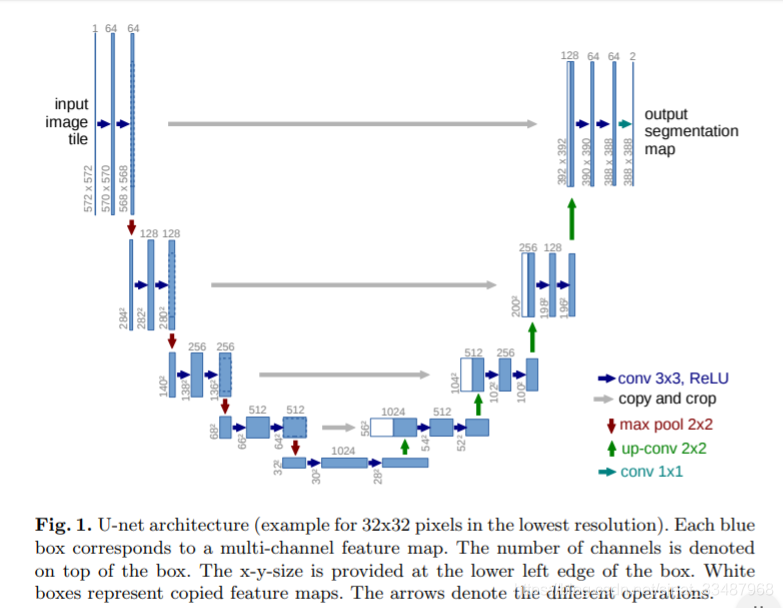

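UNet builds on the fully convolutional network (FCN); see the earlier post "[Deep Learning] Semantic Segmentation: FCN (1)". The main idea is to append successive layers to a conventional convolutional network, and the purpose of these layers is upsampling. Upsampling raises the resolution of the output, and to localize more precisely the upsampled output is combined with the higher-resolution features from earlier in the network. One important modification in UNet is that the upsampling part still keeps a large number of feature channels, which lets the network propagate context information to the higher-resolution layers. As a result the whole network looks like the letter "U". As in FCN, there are no fully connected layers, only convolutional layers.

The paper had to deal with a few challenges, including very little training data and the separation of touching objects. For the first, the authors augment the data with elastic deformations, which lets the network learn invariance to such deformations. For the second, they propose a weighted loss in which the background labels that separate touching cells receive a large weight in the loss function.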
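To make the weighting idea concrete, here is a minimal NumPy sketch of the border term. It assumes the form given in the paper, w0 * exp(-(d1 + d2)^2 / (2 * sigma^2)), where d1 and d2 are the distances from a pixel to the nearest and second-nearest cell, with w0 = 10 and sigma of about 5 pixels. The function name `border_weight_map`, the instance-label input format, and the brute-force distance computation are illustrative assumptions, and the class-balancing term of the full weight map is left out.

```python
import numpy as np


def border_weight_map(instances, w0=10.0, sigma=5.0):
    """Border term of the weight map for an instance-label image.

    instances: 2D int array, 0 = background, 1..K = individual cells.
    Returns a float array of the same shape that is large where
    background pixels sit in a narrow gap between two cells.
    """
    ids = [i for i in np.unique(instances) if i != 0]
    if len(ids) < 2:
        # No pair of cells, so there is no separating border to emphasize.
        return np.zeros(instances.shape, dtype=float)

    # Coordinates of every pixel in row-major order, shape (H*W, 2).
    grid = np.indices(instances.shape).reshape(2, -1).T

    # Brute-force distance from every pixel to each cell (fine for small images).
    dists = []
    for i in ids:
        cell = np.argwhere(instances == i)            # (N_i, 2)
        diff = grid[:, None, :] - cell[None, :, :]    # (H*W, N_i, 2)
        d = np.sqrt((diff ** 2).sum(axis=2)).min(axis=1)
        dists.append(d.reshape(instances.shape))

    dists = np.sort(np.stack(dists), axis=0)
    d1, d2 = dists[0], dists[1]  # nearest and second-nearest cell

    weights = w0 * np.exp(-((d1 + d2) ** 2) / (2.0 * sigma ** 2))
    weights[instances != 0] = 0.0  # only background pixels get the border weight
    return weights


# Two 3x3 cells separated by a one-pixel background gap (hypothetical toy data).
labels = np.zeros((7, 12), dtype=int)
labels[2:5, 1:4] = 1
labels[2:5, 5:8] = 2
w = border_weight_map(labels)
```

In this toy example the gap pixel `w[3, 4]` gets a weight close to `w0`, while background pixels far from both cells get a weight near zero. In training, such a map would multiply the per-pixel cross-entropy before summing.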

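Network architecture

The architecture is shown in the figure above. It consists of a contracting path (left side) and an expansive path (right side). The contracting path follows the typical architecture of a convolutional network: the repeated application of two 3x3 convolutions (unpadded), each followed by a rectified linear unit (ReLU), and then a 2x2 max pooling operation with stride 2 for downsampling. At each downsampling step the number of feature channels is doubled. Every step in the expansive path consists of an upsampling of the feature map followed by a 2x2 convolution ("up-convolution") that halves the number of feature channels, a concatenation with the correspondingly cropped feature map from the contracting path, and two 3x3 convolutions, each followed by a ReLU. The cropping is necessary because border pixels are lost in every convolution. At the final layer, a 1x1 convolution maps each 64-component feature vector to the desired number of classes. In total the network has 23 convolutional layers.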
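The size bookkeeping in this description can be checked with a few lines of plain Python. The sketch below traces the side length of the feature maps through the unpadded network and counts the convolutional layers. The helper name `trace_unet_sizes` is hypothetical, and the 572x572 input is the tile size shown in the paper's architecture figure.

```python
def trace_unet_sizes(size, depth=4):
    """Trace feature-map side lengths through the unpadded U-Net.

    Returns (output_size, crops, num_convs), where crops lists the
    (skip_size, upsampled_size) pair at each concatenation.
    """
    skips = []
    num_convs = 0

    # Contracting path: two unpadded 3x3 convs (each loses 2 pixels),
    # then a 2x2 max pooling with stride 2 (halves the size).
    for _ in range(depth):
        size -= 4
        num_convs += 2
        skips.append(size)
        if size % 2:
            raise ValueError("max pooling needs an even size, got %d" % size)
        size //= 2

    # Bottom of the "U": two more 3x3 convs.
    size -= 4
    num_convs += 2

    # Expansive path: upsampling with 2x2 up-conv (doubles the size),
    # crop-and-concatenate, then two 3x3 convs.
    crops = []
    for skip in reversed(skips):
        size *= 2
        num_convs += 1  # the 2x2 up-convolution
        crops.append((skip, size))
        size -= 4
        num_convs += 2

    num_convs += 1  # final 1x1 convolution
    return size, crops, num_convs


out_size, crops, num_convs = trace_unet_sizes(572)
print(out_size)   # 388
print(crops)      # [(64, 56), (136, 104), (280, 200), (568, 392)]
print(num_convs)  # 23
```

Each pair in `crops` shows how much of the contracting-path feature map has to be cut away before concatenation, for example from 64x64 down to 56x56 at the deepest level.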

Experimental results

Code walkthrough

GitHub: https://github.com/zhixuhao/unet. The code is based on Keras.

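Looking at the UNet architecture figure again, the legend in the lower-right corner explains what each arrow means.

The blue horizontal arrows stand for a 3x3 conv + ReLU. The first block, for example, is defined as:

    conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)
    conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)

The red downward arrows stand for a 2x2 max pooling:

    pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)

The green upward arrows stand for a 2x2 up-conv:

    up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(drop5))

The gray horizontal arrows stand for concatenation with the feature map from the contracting path. Note that this is concatenation, not addition:

    merge6 = concatenate([drop4, up6], axis=3)

Finally, the arrow in the upper-right corner is an ordinary 1x1 conv, here followed by a sigmoid:

    conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)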
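The difference between concatenation and addition, and the role of cropping, can be illustrated on plain arrays. The NumPy sketch below is only a shape-level illustration: the helpers `upsample2x`, `center_crop`, and `skip_concat` are hypothetical names, nearest-neighbour upsampling is an assumed stand-in for the upsampling step, and no convolution is actually applied.

```python
import numpy as np


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (batch, H, W, C) array."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def center_crop(x, height, width):
    """Crop a (batch, H, W, C) array around its centre to (height, width)."""
    top = (x.shape[1] - height) // 2
    left = (x.shape[2] - width) // 2
    return x[:, top:top + height, left:left + width, :]


def skip_concat(skip, up):
    """Crop `skip` to the spatial size of `up`, then concatenate channels."""
    skip = center_crop(skip, up.shape[1], up.shape[2])
    return np.concatenate([skip, up], axis=3)


# With padding='same' (as in the Keras snippets) the sizes already match,
# so the crop is a no-op and only the channel count changes.
drop4 = np.zeros((1, 32, 32, 512), dtype=np.float32)
up6 = np.zeros((1, 32, 32, 512), dtype=np.float32)
merge6 = skip_concat(drop4, up6)
print(merge6.shape)  # (1, 32, 32, 1024)

# With unpadded convolutions (as in the paper) the skip feature map is
# larger than the upsampled one and has to be cropped first.
skip = np.zeros((1, 64, 64, 512), dtype=np.float32)
up = upsample2x(np.zeros((1, 28, 28, 512), dtype=np.float32))
merged = skip_concat(skip, up)
print(merged.shape)  # (1, 56, 56, 1024)
```

Concatenation doubles the channel count from 512 to 1024, whereas an element-wise addition would leave it at 512. This is why the first 3x3 conv after each merge takes twice as many input channels as it produces.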

The complete UNet definition:

    import os

    import numpy as np
    import skimage.io as io
    import skimage.transform as trans
    from keras import backend as keras
    from keras.callbacks import ModelCheckpoint, LearningRateScheduler
    from keras.layers import (Input, Conv2D, MaxPooling2D, Dropout,
                              UpSampling2D, concatenate)
    from keras.models import Model
    from keras.optimizers import Adam


    def unet(pretrained_weights=None, input_size=(256, 256, 1)):
        inputs = Input(input_size)

        # Contracting path: two 3x3 conv + ReLU, then 2x2 max pooling.
        # The number of channels doubles at every level.
        conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(inputs)
        conv1 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv1)
        pool1 = MaxPooling2D(pool_size=(2, 2))(conv1)
        conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool1)
        conv2 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv2)
        pool2 = MaxPooling2D(pool_size=(2, 2))(conv2)
        conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool2)
        conv3 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv3)
        pool3 = MaxPooling2D(pool_size=(2, 2))(conv3)
        conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool3)
        conv4 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv4)
        drop4 = Dropout(0.5)(conv4)
        pool4 = MaxPooling2D(pool_size=(2, 2))(drop4)

        # Bottom of the "U".
        conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(pool4)
        conv5 = Conv2D(1024, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv5)
        drop5 = Dropout(0.5)(conv5)

        # Expansive path: upsample + 2x2 conv, concatenate with the matching
        # contracting-path feature map, then two 3x3 conv + ReLU.
        up6 = Conv2D(512, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(drop5))
        merge6 = concatenate([drop4, up6], axis=3)
        conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge6)
        conv6 = Conv2D(512, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv6)

        up7 = Conv2D(256, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv6))
        merge7 = concatenate([conv3, up7], axis=3)
        conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge7)
        conv7 = Conv2D(256, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv7)

        up8 = Conv2D(128, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv7))
        merge8 = concatenate([conv2, up8], axis=3)
        conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge8)
        conv8 = Conv2D(128, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv8)

        up9 = Conv2D(64, 2, activation='relu', padding='same', kernel_initializer='he_normal')(UpSampling2D(size=(2, 2))(conv8))
        merge9 = concatenate([conv1, up9], axis=3)
        conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(merge9)
        conv9 = Conv2D(64, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)
        conv9 = Conv2D(2, 3, activation='relu', padding='same', kernel_initializer='he_normal')(conv9)

        # Output head: 1x1 conv + sigmoid gives a per-pixel foreground probability.
        conv10 = Conv2D(1, 1, activation='sigmoid')(conv9)

        # Current Keras uses `inputs`/`outputs` and `learning_rate`
        # (older releases used `input`/`output` and `lr`).
        model = Model(inputs=inputs, outputs=conv10)
        model.compile(optimizer=Adam(learning_rate=1e-4), loss='binary_crossentropy', metrics=['accuracy'])
        # model.summary()

        if pretrained_weights:
            model.load_weights(pretrained_weights)

        return model
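As a sanity check on this definition, the number of trainable parameters can be worked out by hand, since only the Conv2D layers carry weights (pooling, dropout, upsampling, and concatenation have none). The sketch below lists the kernel size and channel counts of each Conv2D layer as read from the code, assuming the default `input_size=(256, 256, 1)`. The helper `conv_params` is a hypothetical name, and the result should agree with what `model.summary()` reports.

```python
def conv_params(kernel, in_ch, out_ch):
    """Weights plus biases of a Conv2D layer with a square kernel."""
    return (kernel * kernel * in_ch + 1) * out_ch


# (kernel size, input channels, output channels) for every Conv2D in unet(),
# in the order the layers are created.
layers = [
    (3, 1, 64), (3, 64, 64),                         # conv1
    (3, 64, 128), (3, 128, 128),                     # conv2
    (3, 128, 256), (3, 256, 256),                    # conv3
    (3, 256, 512), (3, 512, 512),                    # conv4
    (3, 512, 1024), (3, 1024, 1024),                 # conv5
    (2, 1024, 512), (3, 1024, 512), (3, 512, 512),   # up6, conv6
    (2, 512, 256), (3, 512, 256), (3, 256, 256),     # up7, conv7
    (2, 256, 128), (3, 256, 128), (3, 128, 128),     # up8, conv8
    (2, 128, 64), (3, 128, 64), (3, 64, 64),         # up9, conv9
    (3, 64, 2),                                      # extra 3x3 conv before the head
    (1, 2, 1),                                       # conv10: 1x1 conv + sigmoid
]

total = sum(conv_params(k, cin, cout) for k, cin, cout in layers)
print(len(layers))  # 24
print(total)        # 31031685
```

The count of 24 convolutional layers is one more than the 23 in the paper, because this implementation inserts an extra 3x3 conv with 2 output channels before the final 1x1 conv.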