TBN: Convolutional Neural Network with Ternary Inputs and Binary Weights

ECCV 2018 paper

TBN replaces the arithmetic operations in standard CNNs with efficient XOR, AND and bitcount operations, and thus provides an optimal tradeoff between memory, efficiency and performance.

It provides ∼32× memory savings and 40× faster convolutional operations.
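The ∼32× memory figure is simple arithmetic: a real-valued weight is stored as a 32-bit float, while a binary weight needs a single bit. The minimal Python sketch below packs ±1 weights into bits and compares the storage; the helper names are hypothetical, not from the paper. Ternary inputs need more than one bit per value (two in a simple bit-plane encoding), so the 32× figure refers to the weights.

```python
import struct

def pack_binary_weights(weights):
    """Pack +1/-1 weights into bytes, 1 bit per weight (bit set for +1)."""
    packed = bytearray((len(weights) + 7) // 8)
    for i, w in enumerate(weights):
        if w > 0:
            packed[i // 8] |= 1 << (i % 8)
    return bytes(packed)

def unpack_binary_weights(packed, n):
    """Recover the first n +1/-1 weights from the packed bytes."""
    return [1 if (packed[i // 8] >> (i % 8)) & 1 else -1 for i in range(n)]

weights = [1, -1, -1, 1] * 256                        # 1024 binarized weights
float32_bytes = struct.calcsize("f") * len(weights)   # 4 bytes per float32
packed = pack_binary_weights(weights)
print(float32_bytes, len(packed), float32_bytes // len(packed))  # 4096 128 32
```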

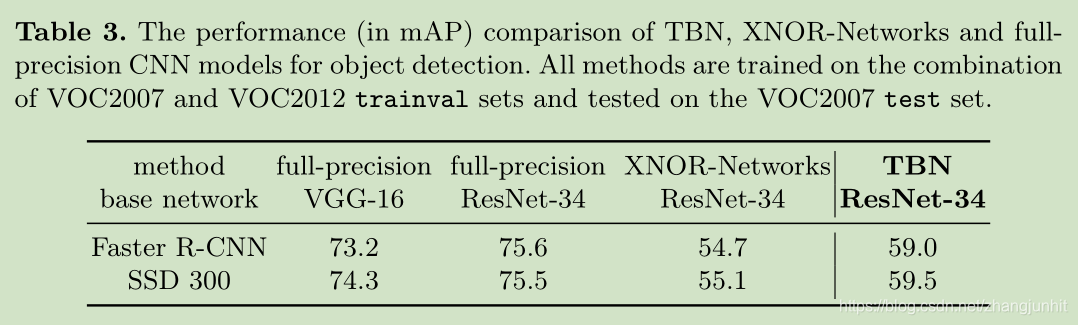

TBN's accuracy is about 5 percentage points higher than that of XNOR-Network.

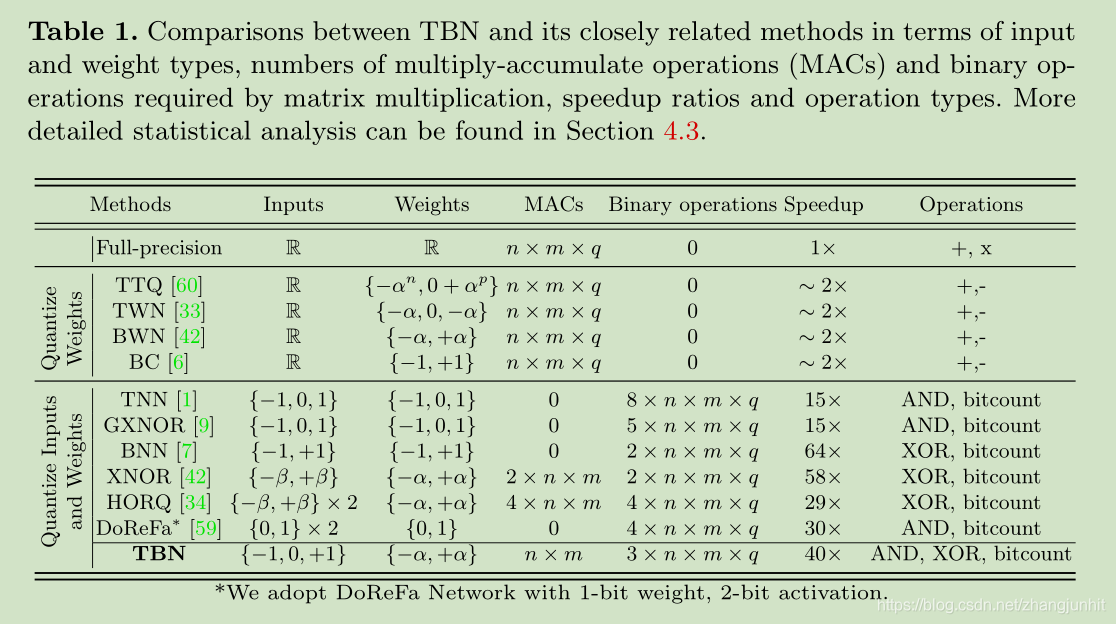

Binarizing only the network weights directly yields ∼32× memory savings over the real-valued counterparts, and brings ∼2× computational efficiency by avoiding the multiplication operations in convolutions.
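To see why binary weights remove the multiplications, approximate each weight as α·sign(w). The sketch below assumes the XNOR-Net binary-weight scaling α = mean(|w|); TBN's own weight scaling may differ, and the function names are hypothetical. The dot product then needs only additions and subtractions, plus one final multiplication by α.

```python
def binarize_weights(weights):
    """Approximate real-valued weights as alpha * sign(w).

    Assumption: alpha = mean(|w|) (XNOR-Net style); zero maps to +1.
    """
    alpha = sum(abs(w) for w in weights) / len(weights)
    signs = [1 if w >= 0 else -1 for w in weights]
    return alpha, signs

def binary_weight_dot(inputs, alpha, signs):
    """Dot product with binary weights: each input is added or subtracted,
    and alpha is applied once at the end."""
    acc = 0.0
    for x, s in zip(inputs, signs):
        acc = acc + x if s > 0 else acc - x
    return alpha * acc

weights = [0.5, -0.25, 0.75, -0.5]
inputs = [1.0, 2.0, 3.0, 4.0]
alpha, signs = binarize_weights(weights)
approx = binary_weight_dot(inputs, alpha, signs)
print(alpha, signs, approx)  # 0.5 [1, -1, 1, -1] -1.0
```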

On the other hand, simultaneously binarizing both the weights and the layer inputs can yield 58× computational efficiency by replacing the arithmetic operations in convolutions with XNOR and bitcount operations. The drawback is a large drop in accuracy.
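When both operands are ±1, each product is +1 if the signs match and −1 otherwise, so a length-n dot product equals 2·bitcount(XNOR(a, b)) − n. A minimal sketch using Python integers as bit vectors; encoding +1 as bit 1 and the helper names are assumptions of this example.

```python
def to_bits(vec):
    """Encode a +1/-1 vector as an int: bit i is 1 where vec[i] == +1."""
    bits = 0
    for i, v in enumerate(vec):
        if v > 0:
            bits |= 1 << i
    return bits

def popcount(x):
    """Number of set bits (bitcount)."""
    return bin(x).count("1")

def xnor_dot(a_bits, b_bits, n):
    """Dot product of two +1/-1 vectors of length n via XNOR and bitcount."""
    mask = (1 << n) - 1                      # keep only the n valid bit positions
    matches = popcount(~(a_bits ^ b_bits) & mask)
    return 2 * matches - n                   # matches - mismatches

a = [1, -1, 1, 1, -1, -1, 1, -1]
b = [1, 1, -1, 1, -1, 1, 1, -1]
print(xnor_dot(to_bits(a), to_bits(b), len(a)))  # 2
```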

To address this, we propose ternarizing the layer inputs to improve accuracy: ternary inputs constrain input signal values to −1, 0, and 1.
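Ternarization needs a threshold Δ: values above Δ map to +1, values below −Δ map to −1, and everything in between maps to 0. The sketch below assumes Δ = 0.7·mean(|x|), the heuristic from Ternary Weight Networks; the parameter name `delta_ratio` is hypothetical, and TBN's actual threshold may be chosen differently.

```python
def ternarize(values, delta_ratio=0.7):
    """Map real-valued inputs to {-1, 0, +1} with a symmetric threshold.

    Assumption: threshold = delta_ratio * mean(|x|) (TWN-style heuristic).
    """
    threshold = delta_ratio * sum(abs(v) for v in values) / len(values)
    return [1 if v > threshold else -1 if v < -threshold else 0 for v in values]

x = [0.9, -0.1, 0.05, -1.2, 0.4, 0.0]
print(ternarize(x))  # [1, 0, 0, -1, 1, 0]
```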

Ternary-Binary Network (TBN) = binarized network weights + ternarized layer inputs

By incorporating ternary layer-wise inputs with binary network weights, we propose a Ternary-Binary Network (TBN) that provides an optimal tradeoff between performance and computational efficiency.
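Putting the two quantizers together, a real-valued dot product I·W can be approximated as αβ·(T·B), where B is the binary weight vector and T the ternary input vector. In the sketch below, α = mean(|W|) and β = mean magnitude of the inputs that survive the threshold are TWN-style assumptions for illustration, not necessarily the paper's exact scaling factors; `tbn_dot` is a hypothetical name.

```python
def tbn_dot(inputs, weights, delta_ratio=0.7):
    """Approximate dot(inputs, weights) as alpha * beta * dot(T, B).

    Assumptions (hypothetical, for illustration):
      B = sign(weights), alpha = mean(|weights|)
      T = ternarize(inputs) with threshold delta_ratio * mean(|inputs|)
      beta = mean(|x|) over the inputs whose T entry is nonzero
    """
    alpha = sum(abs(w) for w in weights) / len(weights)
    b = [1 if w >= 0 else -1 for w in weights]
    thr = delta_ratio * sum(abs(x) for x in inputs) / len(inputs)
    t = [1 if x > thr else -1 if x < -thr else 0 for x in inputs]
    kept = [abs(x) for x, ti in zip(inputs, t) if ti != 0]
    beta = sum(kept) / len(kept) if kept else 0.0
    # T.B needs no multiplications: each term is +b_i, -b_i, or skipped.
    tb = sum(bi if ti > 0 else -bi for ti, bi in zip(t, b) if ti != 0)
    return alpha * beta * tb

inputs = [0.9, -0.1, 0.05, -1.2, 0.4, 0.0]
weights = [0.5, 0.3, -0.2, -0.6, 0.4, -0.1]
print(round(tbn_dot(inputs, weights), 6))  # 0.875
```

Here T = [1, 0, 0, −1, 1, 0] and B = [1, 1, −1, −1, 1, −1], so T·B = 3, α = 0.35 and β = 2.5/3.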

TBN can provide ∼32× memory savings and a 40× speedup over its real-valued CNN counterparts.

The figure below shows the speedup achieved by different quantization strategies.

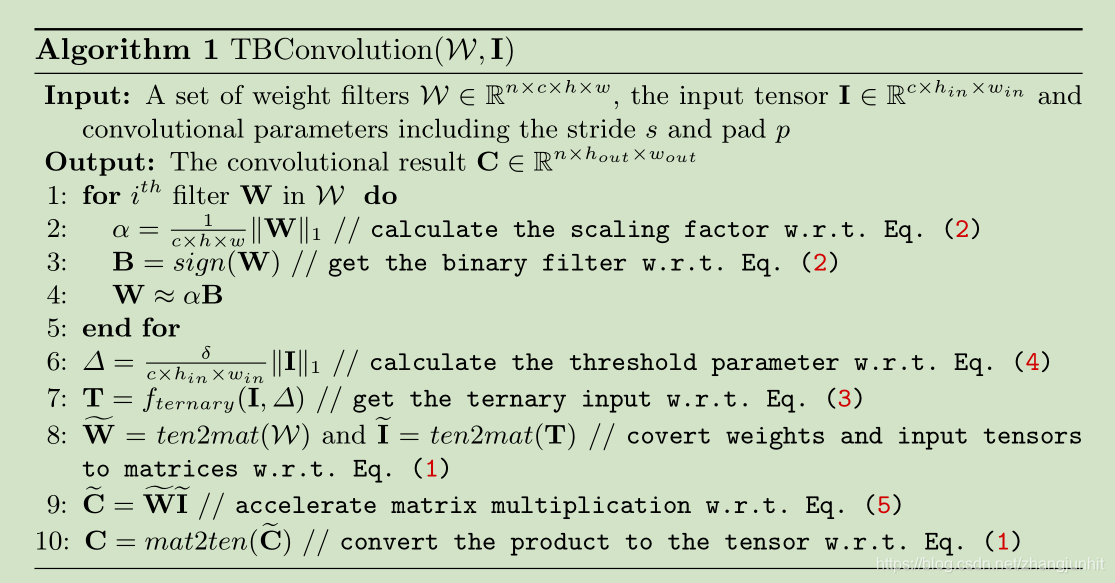

Quantized convolution process

Acceleration strategy:

AND, XOR and bitcount operations
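One way to evaluate T·B with only AND, XOR and bitcount is to store the ternary input as two bitmasks (a nonzero mask m and a sign mask s) and the binary weights as a single sign mask b. On the nonzero positions a term is +1 when the signs agree and −1 when they differ, so T·B = bitcount(m) − 2·bitcount((s XOR b) AND m). This encoding and the helper names are assumptions of the sketch; the paper's exact bit layout may differ.

```python
def popcount(x):
    """Number of set bits (bitcount)."""
    return bin(x).count("1")

def encode_ternary(vec):
    """Encode a {-1, 0, +1} vector as (nonzero mask, sign mask)."""
    nonzero = sign = 0
    for i, v in enumerate(vec):
        if v != 0:
            nonzero |= 1 << i   # position carries a value
        if v > 0:
            sign |= 1 << i      # value is +1
    return nonzero, sign

def encode_binary(vec):
    """Encode a +1/-1 vector as a sign mask (bit set for +1)."""
    bits = 0
    for i, v in enumerate(vec):
        if v > 0:
            bits |= 1 << i
    return bits

def ternary_binary_dot(nonzero, sign, w_bits):
    """sum_i t_i * b_i using XOR, AND and bitcount only."""
    disagree = (sign ^ w_bits) & nonzero     # nonzero positions with opposite signs
    return popcount(nonzero) - 2 * popcount(disagree)

t = [1, 0, 0, -1, 1, 0, -1, 1]
b = [1, 1, -1, -1, -1, 1, 1, 1]
nonzero, sign = encode_ternary(t)
w_bits = encode_binary(b)
print(ternary_binary_dot(nonzero, sign, w_bits))  # 1
```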

Training process

Classification performance comparison

Detection performance comparison
