pytorch实现简单的pointer networks

部分代码参照该GitHub以及该博客。纯属个人模仿实验。

- python3

- pytorch 0.4.0

Pointer Networks

Our model solves the problem of variable size output dictionaries using a recently proposed mechanism of neural attention. It differs from the previous attention attempts in that, instead of using attention to blend hidden units of an encoder to a context vector at each decoder step, it uses attention as a pointer to select a member of the input sequence as the output.—— [ Pointer Networks ]

个人理解Pointer Networks是attention的变体,attention是把encoder的所有输出加权求和然后映射到输出字典每个词的概率,但这样无法处理变长输入的情况,最好我们希望将decoder某个时间步的输出以及encoder所有输出共同映射到输入序列长度的概率分布,这样我们不光考虑了上下文(encoder所有输出,类似attention),最主要的是我们得到了输入序列相关位置的概率,即该模型充分考虑了输入序列的位置信息。确实,不同于句子,有些问题的输入中每个元素之间可能是不相关的,传统的seq2seq模型可能无法很好的解决。

数据格式

这里我仿照写了一段pointer networks的seq2seq模型,主要用来判断一个序列数值大小起伏的边界。边界值有两个,左边一段元素都在1~5之间,中间一段元素值都在6~10之间,右边一段元素值都在1~5之间,每段长度都在5~10之间,即两个边界点是不固定的。最大序列长度为30,不足用0填充,例如:

| input | target |

|---|---|

| [1,1,5,4,1,6,9,10,8,6,3,2,1] | [5, 9] |

| [2,3,4,1,4,3,7,8,6,7,9,10,6,2,5,4,2,4,1] | [6, 12] |

def generate_single_seq(length=30, min_len=5, max_len=10):

seq_before = [(random.randint(1, 5)) for x in range(random.randint(min_len, max_len))]

seq_during = [(random.randint(6, 10)) for x in range(random.randint(min_len, max_len))]

seq_after = [random.randint(1, 5) for x in range(random.randint(min_len, max_len))]

seq = seq_before + seq_during + seq_after

seq = seq + ([0] * (length - len(seq)))

return seq, len(seq_before), len(seq_before) + len(seq_during) - 1seq2seq模型

这里我将encoder和decoder写在了一起,decoder采用GRUCell循环计算目标序列长度次,训练时每次用target作为decoder的输入,测试时则用预测值作为输入。注意每次计算的output被映射到了输入序列长的概率(B, L)。

class PtrNet(nn.Module):

def __init__(self, input_dim, output_dim, embedding_dim, hidden_dim):

super().__init__()

self.input_dim = input_dim

self.output_dim = output_dim

self.embedding_dim = embedding_dim

self.hidden_dim = hidden_dim

self.encoder_embedding = nn.Embedding(input_dim, embedding_dim)

self.decoder_embedding = nn.Embedding(output_dim, embedding_dim)

self.encoder = nn.GRU(embedding_dim, hidden_dim)

self.decoder = nn.GRUCell(embedding_dim, hidden_dim)

self.W1 = nn.Linear(hidden_dim, hidden_dim, bias=False)

self.W2 = nn.Linear(hidden_dim, hidden_dim, bias=False)

self.v = nn.Linear(hidden_dim, 1, bias=False)

def forward(self, inputs, targets):

batch_size = inputs.size(1)

max_len = targets.size(0)

# (L, B)

embedded = self.encoder_embedding(inputs)

targets = self.decoder_embedding(targets)

# (L, B, E)

encoder_outputs, hidden = self.encoder(embedded)

# (L, B, H), (1, B, H)

# initialize

decoder_outputs = torch.zeros((max_len, batch_size, self.output_dim)).to(device)

decoder_input = torch.zeros((batch_size, self.embedding_dim)).to(device)

hidden = hidden.squeeze(0) # (B, H)

for i in range(max_len):

hidden = self.decoder(decoder_input, hidden)

# (B, H)

projection1 = self.W1(encoder_outputs)

# (L, B, H)

projection2 = self.W2(hidden)

# (B, H)

output = F.log_softmax(self.v(F.relu(projection1 + projection2)).squeeze(-1).transpose(0, 1), -1)

# (B, L)

decoder_outputs[i] = output

decoder_input = targets[i]

return decoder_outputs

def predict(self, inputs, max_trg_len):

batch_size = inputs.size(1)

# (L, B)

embedded = self.encoder_embedding(inputs)

# (L, B, E)

encoder_outputs, hidden = self.encoder(embedded)

# (L, B, H), (1, B, H)

# initialize

decoder_outputs = torch.zeros(max_trg_len, batch_size, self.output_dim).to(device)

decoder_input = torch.zeros((batch_size, self.embedding_dim)).to(device)

hidden = hidden.squeeze(0) # (B, H)

for i in range(max_trg_len):

hidden = self.decoder(decoder_input, hidden)

# (B, H)

projection1 = self.W1(encoder_outputs)

# (L, B, H)

projection2 = self.W2(hidden)

# (B, H)

a = self.v(F.relu(projection1 + projection2))

output = F.log_softmax(self.v(F.relu(projection1 + projection2)).squeeze(-1).transpose(0, 1), -1)

decoder_outputs[i] = output

_, indices = torch.max(output, 1)

decoder_input = self.decoder_embedding(indices)

return decoder_outputs测试结果

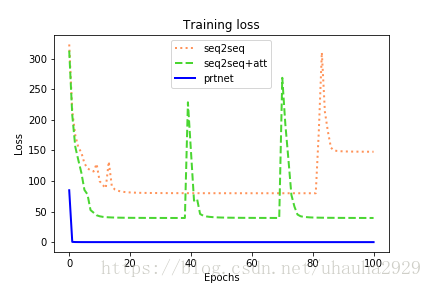

我用的训练集9000,测试集1000。我同时比较了基本的seq2seq加不加attention的效果,发现基本的seq2seq难以收敛,甚至要迭代100~300个epoch才能到达较高的准确率。而pointer networks能够迅速收敛,loss甚至能降为0,只要迭代20个epoch,准确率可以达到100%。loss结果如下,可以看出在第一个epoch,loss迅速下降,这是最明显的不同。

epoch: 0 | total loss: 86.9745

epoch: 1 | total loss: 0.3416

epoch: 2 | total loss: 0.0915

epoch: 3 | total loss: 0.0412

epoch: 4 | total loss: 0.0231

epoch: 5 | total loss: 0.0147

epoch: 6 | total loss: 0.0101

epoch: 7 | total loss: 0.0073

epoch: 8 | total loss: 0.0055

epoch: 9 | total loss: 0.0043

epoch: 10 | total loss: 0.0034

epoch: 11 | total loss: 0.0028

epoch: 12 | total loss: 0.0023

epoch: 13 | total loss: 0.0019

epoch: 14 | total loss: 0.0016

epoch: 15 | total loss: 0.0014

epoch: 16 | total loss: 0.0012

epoch: 17 | total loss: 0.0010

epoch: 18 | total loss: 0.0009

epoch: 19 | total loss: 0.0008

epoch: 20 | total loss: 0.0007Acc: 100.00% (1000/1000)最后是3中模型loss在100个epoch的比较结果:

完整代码见这里。