Download the MNIST dataset (28×28 images, input dimension 784)

import tensorflow as tf

# Load MNIST with the TensorFlow 1.x tutorial helper; each 28*28 image is flattened to a 784-dimensional input vector

from tensorflow.examples.tutorials.mnist import input_data

mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

1. Define the weight variable, bias variable, convolution layer, and pooling layer

# Define a weight variable: takes a shape and returns a tf.Variable of that shape
# Random initialization: tf.truncated_normal(shape, mean=0.0, stddev=1.0, dtype=tf.float32, seed=None, name=None), where stddev is the standard deviation
def weight_variable(shape):
    init = tf.truncated_normal(shape, stddev=0.1)
    return tf.Variable(init)
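To make the initializer more concrete, here is a minimal pure-Python sketch of what a truncated normal draw does: any value more than two standard deviations from the mean is discarded and redrawn. The helper name `truncated_normal_sample` is hypothetical and only illustrates the idea; it is not TensorFlow's implementation.

```python
import random

def truncated_normal_sample(n, mean=0.0, stddev=1.0, seed=None):
    """Draw n values from a normal distribution, redrawing any value
    that falls more than 2 standard deviations from the mean."""
    rng = random.Random(seed)
    samples = []
    while len(samples) < n:
        value = rng.gauss(mean, stddev)
        if abs(value - mean) <= 2 * stddev:
            samples.append(value)
    return samples

# Same element count as a [5, 5, 1, 32] filter bank, with stddev=0.1
weights = truncated_normal_sample(5 * 5 * 1 * 32, stddev=0.1, seed=0)
print(len(weights), max(abs(w) for w in weights) <= 0.2)
```

With stddev=0.1, every initial weight therefore lies within [-0.2, 0.2], which keeps the starting values small.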

# Define a bias variable: takes a shape and returns a tf.Variable of that shape
# Initialized to a small nonzero constant (0.1)
def weight_biase(shape):
    init = tf.constant(0.1, shape=shape)
    return tf.Variable(init)

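# Define the convolution: takes the input x and the filter W
# tf.nn.conv2d(input, filter, strides, padding, use_cudnn_on_gpu=None, name=None)
def conv2d(x, W):
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')
# strides is ordered [batch, height, width, channel]: the first and fourth entries are 1, and the second and third are the step sizes along the two spatial axes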
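With padding='SAME' and stride 1, the convolution keeps the spatial size of its input. The following is a minimal single-channel sketch in pure Python, assuming zero padding and the sliding-window (cross-correlation) form; the function name `conv2d_same` is hypothetical and the code is for illustration only, not a replacement for tf.nn.conv2d.

```python
def conv2d_same(image, kernel):
    """Stride-1 sliding-window sum over a 2-D list with zero padding,
    so the output has the same height and width as the input."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    pad_top, pad_left = (kh - 1) // 2, (kw - 1) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    r, c = i + di - pad_top, j + dj - pad_left
                    # Positions outside the image count as zeros (the padding)
                    if 0 <= r < h and 0 <= c < w:
                        total += image[r][c] * kernel[di][dj]
            out[i][j] = total
    return out

image = [[1.0] * 3 for _ in range(3)]
kernel = [[1.0] * 3 for _ in range(3)]
result = conv2d_same(image, kernel)
print(len(result), len(result[0]))
print(result)
```

On an all-ones 3*3 image, the corner outputs are smaller than the center output because part of the window falls on the zero padding.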

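# Define the pooling: takes x; a pooling layer has no trainable parameters
# tf.nn.max_pool(value, ksize, strides, padding, name=None)
def max_poo_2x2(x):
    return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')
# ksize is the size of the pooling window, here 2*2; its first and fourth entries are 1
# strides: the first and fourth entries are 1, and the second and third are the step sizes along the two spatial axes (2 here)

# Define a function that computes accuracy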

def compute_accuracy(v_xs, v_ys):
    global prediction
    # keep_prob is 1 so that dropout is disabled during evaluation
    y_pre = sess.run(prediction, feed_dict={xs: v_xs, keep_prob: 1})
    correct_prediction = tf.equal(tf.argmax(y_pre, 1), tf.argmax(v_ys, 1))
    accuracy = tf.reduce_mean(tf.cast(correct_prediction, tf.float32))
    result = sess.run(accuracy, feed_dict={xs: v_xs, ys: v_ys, keep_prob: 1})
    return result

# Define the input placeholders

xs = tf.placeholder(tf.float32, [None, 784])  # each image is 28*28 = 784 pixels
ys = tf.placeholder(tf.float32, [None, 10])
keep_prob = tf.placeholder(tf.float32)
# Reshape xs to [-1, 28, 28, 1]: -1 lets the number of samples be inferred, 1 is the number of channels, so each image is 28*28*1
x_image = tf.reshape(xs, [-1, 28, 28, 1])
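The -1 in the reshape means "work this dimension out from the total number of elements". A small pure-Python sketch of that inference follows; the helper `infer_shape` is a hypothetical name used only for illustration.

```python
def infer_shape(total_elements, shape):
    """Replace the single -1 in shape with the size that makes the
    element count match total_elements."""
    known = 1
    for dim in shape:
        if dim != -1:
            known *= dim
    if shape.count(-1) != 1 or total_elements % known != 0:
        raise ValueError("shape is not compatible with the element count")
    return [total_elements // known if dim == -1 else dim for dim in shape]

# A batch of 100 flattened images holds 100 * 784 values
batch_shape = infer_shape(100 * 784, [-1, 28, 28, 1])
print(batch_shape)
```

Because the batch dimension is inferred, the same graph accepts a training batch of 100 images and the full test set alike.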

# Layer 1: convolutional layer 1 + ReLU + max pooling
W_conv1 = weight_variable([5, 5, 1, 32])  # 5*5 filters, 1 input channel, 32 filters
b_conv1 = weight_biase([32])
# Note: the input to conv1 is x_image, the reshaped 28*28*1 image
h_conv1 = tf.nn.relu(conv2d(x_image, W_conv1) + b_conv1)
h_pool1 = max_poo_2x2(h_conv1)

# Layer 2: convolutional layer 2 + ReLU + max pooling
W_conv2 = weight_variable([5, 5, 32, 64])  # 5*5 filters, 32 input channels, 64 filters
b_conv2 = weight_biase([64])
# Note: the input to conv2 is h_pool1, the 14*14*32 output of layer 1
h_conv2 = tf.nn.relu(conv2d(h_pool1, W_conv2) + b_conv2)
h_pool2 = max_poo_2x2(h_conv2)
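The feature-map sizes after the two convolution and pooling stages can be checked by hand. With 'SAME' padding, the output height and width are ceil(input / stride), so each stride-1 convolution keeps the size and each stride-2 pooling halves it. The sketch below traces the shapes in pure Python; the helper name `same_output` is hypothetical.

```python
import math

def same_output(size, stride):
    """Spatial output size of a layer that uses 'SAME' padding."""
    return math.ceil(size / stride)

size, channels = 28, 1
stages = [("conv1", 1, 32), ("pool1", 2, 32), ("conv2", 1, 64), ("pool2", 2, 64)]
shapes = {}
for name, stride, out_channels in stages:
    size = same_output(size, stride)
    channels = out_channels
    shapes[name] = (size, size, channels)
    print(name, shapes[name])

flat = size * size * channels
print("flattened length:", flat)
```

The final 7*7*64 feature map is what the fully connected layer receives once it is flattened.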

# Layer 3: fully connected layer 1 + ReLU + dropout
# Note: flatten the 7*7*64 output into a one-dimensional vector per sample
h_pool2 = tf.reshape(h_pool2, [-1, 7*7*64])
W_fc1 = weight_variable([7*7*64, 1024])
b_fc1 = weight_biase([1024])
h_fc1 = tf.nn.relu(tf.matmul(h_pool2, W_fc1) + b_fc1)
# Add dropout to reduce overfitting
h_fc1_drop = tf.nn.dropout(h_fc1, keep_prob)
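Dropout keeps each activation with probability keep_prob and sets the rest to zero; the kept values are scaled by 1 / keep_prob so that the expected sum stays the same. The following pure-Python sketch illustrates that behavior under those assumptions; the function `dropout` here is a stand-in written for illustration, not the TensorFlow op.

```python
import random

def dropout(values, keep_prob, seed=None):
    """Keep each value with probability keep_prob, scaled by 1 / keep_prob;
    drop the others to 0.0."""
    rng = random.Random(seed)
    return [v / keep_prob if rng.random() < keep_prob else 0.0 for v in values]

activations = [1.0, 2.0, 3.0, 4.0]
dropped = dropout(activations, keep_prob=0.5, seed=0)   # training-style call
unchanged = dropout(activations, keep_prob=1.0)         # evaluation-style call
print(dropped)
print(unchanged)
```

This is why the script feeds keep_prob: 0.5 while training and keep_prob: 1 when computing accuracy: with keep_prob equal to 1 nothing is dropped and nothing is rescaled.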

# Layer 4: fully connected layer 2 + softmax, producing the class prediction

W_fc2 = weight_variable([1024,10])

b_fc2 = weight_biase([10])

prediction = tf.nn.softmax(tf.matmul(h_fc1_drop,W_fc2)+b_fc2)

# Loss function (cross-entropy) and optimizer
cross_entropy = tf.reduce_mean(-tf.reduce_sum(ys * tf.log(prediction),
                                              reduction_indices=[1]))
train_step = tf.train.AdamOptimizer(1e-4).minimize(cross_entropy)
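One caveat with taking the log of a softmax output directly: if a probability underflows to 0, the log is -inf and the loss can turn into NaN. TensorFlow 1.x also offers tf.nn.softmax_cross_entropy_with_logits, which works on the pre-softmax values. The sketch below shows the underlying idea in pure Python, computing the same per-sample quantity, -sum(y * log(softmax(z))), through the log-sum-exp form; the function names are hypothetical and the code is illustrative only.

```python
import math

def softmax(logits):
    """Softmax with the maximum subtracted first to avoid overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(one_hot, logits):
    """-sum(y * log(softmax(logits))) computed from the logits, so that
    log(0) never appears."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return -sum(y * (z - log_sum_exp) for y, z in zip(one_hot, logits))

uniform_loss = cross_entropy([0, 1, 0], [0.0, 0.0, 0.0])
extreme_loss = cross_entropy([0, 1, 0], [1000.0, 0.0, 0.0])
print(softmax([0.0, 0.0, 0.0]))
print(uniform_loss, extreme_loss)
```

In the second call the softmax probability of the true class underflows to exactly 0, yet the loss computed from the logits stays finite.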

# Session control: create the session and initialize all variables

sess = tf.Session()

sess.run(tf.global_variables_initializer())

#训练1000次,每训练50次输出测试数据的训练精度

for i in range(1000):

#开始训练,训练集中每次取100个数据(batch_xs, batch_ys)

batch_xs, batch_ys = mnist.train.next_batch(100)

sess.run(train_step, feed_dict={xs: batch_xs, ys: batch_ys, keep_prob: 0.5})#placeholder和feed_dict同时出现

if i%50 == 0:

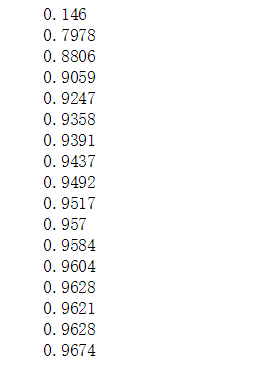

print(compute_accuracy( mnist.test.images, mnist.test.labels))结果:(跑的时间太长,提前暂停了,可以看到第二个epoch精确度就达到了0.79)
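The number printed during training is the fraction of test images whose most probable predicted class matches the one-hot label. A minimal pure-Python sketch of that calculation follows; the sample predictions and labels are made up for illustration, and `argmax` and `accuracy` are hypothetical helper names.

```python
def argmax(row):
    """Index of the largest entry in a list."""
    return max(range(len(row)), key=lambda i: row[i])

def accuracy(predictions, labels):
    """Fraction of rows where the predicted class equals the labeled class."""
    correct = sum(1 for p, y in zip(predictions, labels) if argmax(p) == argmax(y))
    return correct / len(labels)

# Made-up softmax outputs and one-hot labels for four samples, three classes
predictions = [[0.1, 0.7, 0.2], [0.8, 0.1, 0.1], [0.3, 0.3, 0.4], [0.2, 0.5, 0.3]]
labels = [[0, 1, 0], [1, 0, 0], [0, 1, 0], [0, 0, 1]]
score = accuracy(predictions, labels)
print(score)
```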