论文链接:Going deeper with convolutions

代码下载:

- Abstract

We propose a deep convolutional neural network architecture codenamed Inception that achieves the new state of the art for classification and detection in the ImageNet Large-Scale Visual Recognition Challenge 2014(ILSVRC14). The main hallmark of this architecture is the improved utilization of the computing resources inside the network. #我们提出一种称为Inception的深度卷积神经网络框架,并且在ImageNet大规模视觉识别挑战赛2014(ILSVRC14)分类和检测任务中实现了新的state-of-the-art.这个结构的主要特点是增加对网络内部计算资源的利用.

By a carefully crafted design, we increased the depth and width of the network while keeping the computational budget constant. To optimize quality, the architectural decisions were based on the Hebbian principle and the intuition of multi-scale processing. One particular incarnation used in our submission for ILSVRC14 is called GoogLeNet, a 22 layers deep network, the quality of which is assessed in the context of classification and detection

#通过精心地手动设计,我们在确保不增加计算量的情况下扩展网络的深度和宽度.为了优化性能,网络设计基于Hebbian准则以及多尺度处理机制.我们在ILSVRC14上提交的作品中最典型的被称为GoogLeNet,一个22层的深度神经网络.它的性能通过文本检测和目标检测进行评估.

- Introduction

In the last three years, our object classification and detection capabilities have dramatically improved due to advances in deep learning and convolutional networks [10].One encouraging news is that most of this progress is not just the result of more powerful hardware, larger datasets and bigger models, but mainly a consequence of new ideas,algorithms and improved network architectures. #在过去的三年里,由于深度学习和神经网络[10]的进步,我们在目标分类和检测上取得了很大的进步。一个令人鼓舞的消息是大多数进步都不只是更加强大的硬件,更广泛的数据以及更大的模型堆砌的结果,而是新思路,算法和改进的网络架构的综合结果. No new data sources were used, for example, by the top entries in the ILSVRC 2014 competition besides the classification dataset of the same competition for detection purposes. Our GoogLeNet submission to ILSVRC 2014 actually uses 12 times fewer parameters than the winning architecture of Krizhevsky et al [9] from two years ago, while being significantly more accurate. #例如,截止到ILSVRC14中的顶级作品,除了比赛中用于检测的分类数据集,没有使用新的数据.事实上我们在ILSVRC14挑战赛中提交的作品中所使用的模型参数少于两年前Krizhevsky et al[9]使用的获胜模型的1/12,却在准确率上有了显著的提升. On the object detection front, the biggest gains have not come from naive application of bigger and bigger deep networks, but from the synergy of deep architectures and classical computer vision, like the R-CNN algorithm by Girshick et al [6].

#在目标检测前沿,最大的进步并非来源于简单使用越来越大的网络,而是来自深度模型和经典计算机视觉的融合,例如Girshick et al[6]提出的R-CNN算法.

Another notable factor is that with the ongoing traction of mobile and embedded computing, the efficiency of our algorithms – especially their power and memory use – gains importance. It is noteworthy that the considerations leading to the design of the deep architecture presented in this paper included this factor rather than having a sheer fixation on accuracy numbers. #另一个显著的因素是随着计算机设备不断嵌入式化,我们算法的高效性————尤其是运算能力和内存的使用上————变得重要.值得注意的是本文提出的深度框架设计思路包含这个因素,而不是偏执于准确率的数值. For most of the experiments, the models were designed to keep a computational budget of 1.5 billion multiply-adds at inference time, so that the they do not end up to be a purely academic curiosity, but could be put to real world use, even on large datasets, at a reasonable cost.

#对于大多数实验,模型在设计时都为了保证推理阶段的计算量在15亿次乘加指令以内,因此它们不会单纯以学术兴趣收尾,而是可以应用到现实世界,即使在大型数据集上的表现也是可以接收的.

In this paper, we will focus on an efficient deep neural network architecture for computer vision, codenamed Inception, which derives its name from the Network in network paper by Lin et al [12] in conjunction with the famous “we need to go deeper” internet meme [1]. In our case, the word “deep” is used in two different meanings: first of all,in the sense that we introduce a new level of organization in the form of the “Inception module” and also in the more direct sense of increased network depth. #在这篇文章中,我们将侧重于构建一个适用于计算机视觉的高效深度神经网络框架,称为Inception,取自Lin et al[12]的Network in Network卢文,与著名的互联网基因"we need to go deeper"[1]相呼应.在我们的例子中,"deep"包含了两层含义:首先在于我们以"Inception module"的形式引入了一个新组织,更直接地在于增加网络的深度. In general, one can view the Inception model as a logical culmination of [12] while taking inspiration and guidance from the theoretical work by Arora et al [2]. The benefits of the architecture are experimentally verified on the ILSVRC 2014 classification and detection challenges, where it significantly outperforms the current state of the art.

#通常,人们谈论起Arora et al理论作品中的inspiration and guidance,便会把Inception模块当作是逻辑的顶峰.框架的优点在ILSVRC 2014分类和检测挑战赛中得到了验证,并且超越了当时的state-of-the-art.

- Related Work

Starting with LeNet-5[10], convolutional neural networks (CNN) have typically had a standard structure stacked convolutional layers (optionally followed by contrast normalization and max-pooling) are followed by one or more fully-connected layers. Variants of this basic design are prevalent in the image classification literature and have yielded the best results to-date on MNIST, CIFAR and most notably on the ImageNet classification challenge [9, 21]. #自LeNet-5[10]以来,卷积神经网络(CNN)用了一个典型的由卷积层堆积(后面选择性的跟随了对比度归一化和最大池化)的基本结构,并且后面跟随了一层或更多的全连接层.这个基本设计的变种在图像分类作品中开始变得流形,并且超越了MINST,CIFAR上至今为止最好的表现以及ImageNet分类挑战赛[9,21]中最显耀的作品.

For larger datasets such as Imagenet, the recent trend has been to increase the number of layers [12] and layer size [21, 14], while using dropout [7] to address the problem of overfitting.

#对于类似ImageNet的大型数据集,现在的趋势是增加网络的数量[12]和尺寸[21,14],同时使用dropout[7]来处理过拟合的问题.

Despite concerns that max-pooling layers result in loss of accurate spatial information, the same convolutional network architecture as [9] has also been successfully employed for localization [9, 14], object detection [6, 14, 18, 5] and human pose estimation [19].

#除了关心最大池化层可能导致空间精确信息的丢失,相同的卷积网络例如[9]已经成功应用在目标定位[9,14],目标检测[6,14,18,5]和人体姿态估算[19].

Inspired by a neuroscience model of the primate visual cortex, Serre et al. [15] used a series of fixed Gabor filters of different sizes to handle multiple scales. We use a similar strategy here.

#受灵长类动物视觉拼成的神经学模型启发,Serre et al[15]使用了一系列不同固定尺寸的Gavor滤波器来处理多尺度.这里我们使用了一种近似的策略.

However, contrary to the fixed 2-layer deep model of [15], all filters in the Inception architecture are learned. Furthermore, Inception layers are repeated many times, leading to a 22-layer deep model in the case of the GoogLeNet model.

#不过,相比于[15]中固定两层深度模型,Inception结构中所有的滤波器都是学习得来的.进一步说,Inception层重复了许多次,最终形成一个拥有22层深度的GoogLeNet模型.

Network-in-Network is an approach proposed by Lin et al. [12] in order to increase the representational power of neural networks. In their model, additional 1 × 1 convolutional layers are added to the network, increasing its depth.We use this approach heavily in our architecture. #Network-in-Network是Lin et al.[12]为了增加神经网络的表达能力提出的一种方法.在他们的模型中,在网络上额外增加一层1*1的卷积层,可以增加它的深度。我们的框架中大量应用这种方式.

However,in our setting, 1 × 1 convolutions have dual purpose: most critically, they are used mainly as dimension reduction modules to remove computational bottlenecks, that would otherwise limit the size of our networks. This allows for not just increasing the depth, but also the width of our networks without a significant performance penalty.

#但是,我们的设置里,1*1卷积层主要有两层目的:最重要的是它们主要充当降维模块以避免计算瓶颈,否则这将限制网络的尺寸.这样不仅能增加网络的深度,也可以在不影响网络的性能情况下增加网络的宽度.

Finally, the current state of the art for object detection is the Regions with Convolutional Neural Networks (R-CNN) method by Girshick et al. [6].R-CNN decomposes the over all detection problem into two subproblems: utilizing low level cues such as color and texture in order to generate object location proposals in a category-agnostic fashion and using CNN classifiers to identify object categories at those locations. #最终,当前目标检测的state-of-the-art是Girshick et al.[6]提出的基于区域的卷积神经网络(R-CNN).R-CNN将检测问题总体上分为两个子问题:利用颜色和纹理等低层次特征,以跨类别的方式产生目标候选区域,随后使用CNN分类器来区分这些位置上的物体类别. Such a two stage approach leverages the accuracy of bounding box segmentation with low-level cues, as well as the highly powerful classification power of state-of-the-art CNNs. We adopted a similar pipeline in our detection submissions, but have explored enhancements in both stages, such as multi-box [5] prediction for higher object bounding box recall, and ensemble approaches for better categorization of bounding box proposals.

#这样一个分为两个阶段的方法利用了低层次特征分割边框的准确性,并利用了state-of-the-art CNNs强大的分类能力.我们在检测作品中引入了类似的框架,但是在这个阶段都作了拓展和改善,例如用于高目标边框recall的multi-box[5]预测以及融合更加准确的边框候选分类方法.

- Motivation and High Level Considerations

The most straightforward way of improving the performance of deep neural networks is by increasing their size.This includes both increasing the depth – the number of network levels – as well as its width: the number of units at each level. This is an easy and safe way of training higher quality models, especially given the availability of a large amount of labeled training data. However, this simple solution comes with two major drawbacks.

#改善深度神经网络性能最直接的方法是增加网络的尺寸,这包括增加深度————工作的网络层数————以及它的宽度:每个层的党员数量.这是训练高质量模型的一个简单而安全的方法,尤其是在提供了大量的带有标签的训练数据.但是,这个简单的解决方案有两个主要的缺点.

Figure 1: Two distinct classes from the 1000 classes of the ILSVRC 2014 classification challenge. Domain knowledge is required to distinguish between these classes.

Bigger size typically means a larger number of parameters, which makes the enlarged network more prone to overfitting, especially if the number of labeled examples in the training set is limited. This is a major bottleneck as strongly labeled datasets are laborious and expensive to obtain, often requiring expert human raters to distinguish between various fine-grained visual categories such as those in ImageNet(even in the 1000-class ILSVRC subset) as shown in Figure 1.

#更大的尺寸通常意味着大量的参数,这使得扩大后的网络更容易过拟合,尤其是训练过程中带标签样本是有限的.这是一个主要的瓶颈,因为带有强标注数据集的获取是费力并且昂贵的,经常需要专业人员来评分区分那些例如图1所示具有不同细密纹理的ImageNet视觉目录.

The other drawback of uniformly increased network size is the dramatically increased use of computational resources. For example, in a deep vision network, if two convolutional layers are chained, any uniform increase in the number of their filters results in a quadratic increase of computation. #网络尺寸增加的另外一个缺点是计算资源消耗的大幅增长.举个例子,在一个深度视觉网络中,如果两个卷积层是相连的.它们中间任何滤波器数量的增长都会引起计算量以该数量的二次方增长. If the added capacity is used inefficiently (for example, if most weights end up to be close to zero), then much of the computation is wasted. As the computational budget is always finite, an efficient distribution of computing resources is preferred to an indiscriminate increase of size, even when the main objective is to increase the quality of performance.

#如果增加上的网络容量没有被有效利用(例如,如果大多数权值都是接近零),那么大多数计算都是浪费的.既然计算资源通常是有限的,计算资源的合理有效分布是需要优先考虑的,以适应尺寸的任意增长,即使最主要的目标是提高网络的性能.

A fundamental way of solving both of these issues would be to introduce sparsity and replace the fully connected layers by the sparse ones, even inside the convolutions. Besides mimicking biological systems, this would also have the advantage of firmer theoretical underpinnings due to the groundbreaking work of Arora et al. [2]. #解决上述问题的一个基础方案是引入稀疏,使用稀疏层替换全连接层,即使在卷积层内部.除了模仿生物系统,这个方案还有更加坚实的理论基础.由于Arora et al[2]的开创性工作. Their main result states that if the probability distribution of the dataset is representable by a large, very sparse deep neural network,then the optimal network topology can be constructed layer after layer by analyzing the correlation statistics of the preceding layer activations and clustering neurons with highly correlated outputs. Although the strict mathematical proof requires very strong conditions, the fact that this statement resonates with the well known Hebbian principle–neurons that fire together, wire together–suggests that the underlying idea is applicable even under less strict conditions, in practice.

#他们主要的结果说明如果数据集的概率分布可以有一个大的非常稀疏的神经网络表示,那么最优的网络拓扑可以通过前一层激活与输出高度相关的神经元聚类的相关性.虽然严格的数学证明需要非常眼科的条件,事实上这个论述与著名的Hebbian准则(一起放电的神经元串联在一起)有着异曲同工之妙,意味着这个想法在实践中即使条件没有那么严苛也是适用的.

Unfortunately, today’s computing infrastructures are very inefficient when it comes to numerical calculation on non-uniform sparse data structures. Even if the number of arithmetic operations is reduced by 100×, the overhead of lookups and cache misses would dominate: switching to sparse matrices might not pay off. #遗憾的是,今天的计算机架构在处理非均匀分布稀疏数据结构的数值计算时是非常低效的,即使数值运算量舰队到1/100,查找开销和缓存丢失会占据主导地位:切换到稀疏矩阵可能没有回报. The gap is widened yet further by the use of steadily improving and highly tuned numerical libraries that allow for extremely fast dense matrix multiplication, exploiting the minute details of the underlying CPU or GPU hardware [16, 9]. Also, non-uniform sparse models require more sophisticated engineering and computing infrastructure. #即使引入温补改进和高度优化的数值计算库,可以极为快速地完成稠密矩阵乘法,并且利用底层CPU和GPU[16,9]硬件上的微小细节,差距仍然在增大.同样的,不均匀稀疏模型需要更加复杂的工程和计算基础设施. Most current vision oriented machine learning systems utilize sparsity in the spatial domain just by the virtue of employing convolutions. However, convolutions are implemented as collections of dense connections to the patches in the earlier layer. #大多数当前的定位于视觉的机器学习系统仅仅通过引入卷积实现空间上的稀疏性.但是,早前的卷积是以一系列层间的紧密连接实现的. ConvNets have traditionally used random and sparse connection tables in the feature dimensions since [11] in order to break the symmetry and improve learning, yet the trend changed back to full connections with [9] in order to further optimize parallel computation. Current state-of-the-art architectures for computer vision have uniform structure. The large number of filters and greater batch size allows for the efficient use of dense computation.

#自从[11]以来,卷积神经网络传统上在特征维度上使用随机稀疏连接表以破坏对称性并改善学习,然而又趋向于使用[9]中的全连接层以实现并行计算的进一步优化.当前使用于计算机视觉的state-of-the-art结构拥有均匀的结构,大量的滤波器和更大的batch size实现稠密运算的高效利用.

This raises the question of whether there is any hope for a next, intermediate step: an architecture that makes use of filter-level sparsity, as suggested by the theory, but exploits our current hardware by utilizing computations on dense matrices. The vast literature on sparse matrix computations (e.g. [3]) suggests that clustering sparse matrices into relatively dense submatrices tends to give competitive performance for sparse matrix multiplication. It does not seem far-fetched to think that similar methods would be utilized for the automated construction of non-uniform deep-learning architectures in the near future.

#这引发一个问题:是否存在一种中间步骤:一个使用滤波器级别稀疏性的结构,如理论建议一样,但是通过利用稠密矩阵上的计算来拓展我们当前的计算机硬件.大量关于稀疏矩阵计算的作品(e.g.[3])建议将稀疏矩阵聚类成相对稠密的子矩阵趋向于为稀疏矩阵乘法带来具有竞争性的表现.这看起来并没有不着边际,不久的将来相似的方法将会被用于不均匀深度学习框架的自动化构建.

The Inception architecture started out as a case study for assessing the hypothetical output of a sophisticated network topology construction algorithm that tries to approximate a sparse structure implied by [2] for vision networks and covering the hypothesized outcome by dense, readily available components. Despite being a highly speculative undertaking, modest gains were observed early on when compared with reference networks based on [12]. #Inception结构开始是为了验证复杂网络拓扑构造算法输出假设的一个案例学习,这个算法尝试接近[2]隐含用于计算机视觉网络的稀疏结构并包含稠密的现有模块假设输出.尽管这是一个高度投机的事业,早期在对比基于[12]的参考网络有一定的收获。 With a bit of tuning the gap widened and Inception proved to be especially useful in the context of localization and object detection as the base network for [6] and [5]. Interestingly, while most of the original architectural choices have been questioned and tested thoroughly in separation, they turned out to be close to optimal locally. #随着差异在一定程度上的调整变大,Inception被证明是尤其作为语义定位[6]和目标定位[5]基础网络是非常有效的.有趣的是,绝大多数原始框架选择被怀疑并且完全分离测试,它们表现出来接近局部最优。 One must be cautious though: although the Inception architecture has become a success for computer vision, it is still questionable whether this can be attributed to the guiding principles that have lead to its construction. Making sure of this would require a much more thorough analysis and verification.

#但是仍然需要保持谨慎:尽管Inception框架在计算机视觉获得成功,仍然需要怀疑这是否可以被归纳于构建网络的指导性原则。确定这一点需要更多完整的分析和验证。

- Architectural Details

The main idea of the Inception architecture is to consider how an optimal local sparse structure of a convolutional vision network can be approximated and covered by readily available dense components. Note that assuming translation invariance means that our network will be built from convolutional building blocks. #Inception框架的主要实现是考虑一个局部优化稀疏结构的卷积视觉网络如何利用现有稠密组件来近似和覆盖。注意假设平移不变性意味着我们的网络由卷积积木构成。 All we need is to find the optimal local construction and to repeat it spatially. Arora et al. [2] suggests a layer-by layer construction where one should analyze the correlation statistics of the last layer and cluster them into groups of units with high correlation. #我们所要做的便是寻找到局部最优的构建方式并在空间上重复。Arora et al.[2]提出一种分析前层的统计学相关信息,并将它们聚类成高度相关的逐层构建方式。

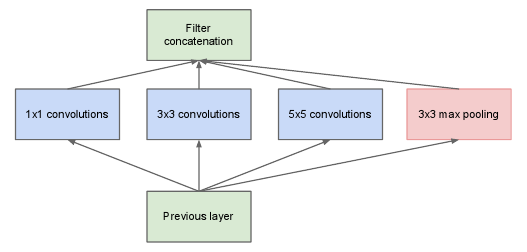

These clusters form the units of the next layer and are connected to the units in the previous layer. We assume that each unit from an earlier layer corresponds to some region of the input image and these units are grouped into filter banks. In the lower layers (the ones close to the input) correlated units would concentrate in local regions. Thus, we would end up with a lot of clusters concentrated in a single region and they can be covered by a layer of 1×1 convolutions in the next layer, as suggested in [12]. However, one can also expect that there will be a smaller number of more spatially spread out clusters that can be covered by convolutions over larger patches, and there will be a decreasing number of patches over larger and larger regions. In order to avoid patch-alignment issues, current incarnations of the Inception architecture are restricted to filter sizes 1×1, 3×3 and 5×5; this decision was based more on convenience rather than necessity. It also means that the suggested architecture is a combination of all those layers with their output filter banks concatenated into a single output vector forming the input of the next stage. Additionally, since pooling operations have been essential for the success of current convolutional networks, it suggests that adding an alternative parallel pooling path in each such stage should have additional beneficial effect, too (see Figure 2(a)).

#这些聚类形成下一层的单元并与上一层相连。我们假设前一层中每个单元与输入图像的某个区域相关,这些单元被分到滤波器组。低层级卷积层(接近输入层)相互关联的单元将会聚集在局部区域。因此,最终我们将会形成一个很多聚类的局部区域,同时他们可以被下一层的11个卷积所包含,如[12]所述,但是人们也会期待.

As these “Inception modules” are stacked on top of each other, their output correlation statistics are bound to vary: as features of higher abstraction are captured by higher layers, their spatial concentration is expected to decrease. This suggests that the ratio of 3×3 and 5×5 convolutions should increase as we move to higher layers.

#由于这些"Inception"模块在彼此的顶部堆叠,它们的输出相关统计必然会有变化:由于较高层将捕捉较高层的抽象特征,其空间聚集度预计会减少。这表明随着转移到较高层,3X3和5X5卷积的比例将会增加。

(a) Inception module, naı̈ve version

One big problem with the above modules, at least in this naı̈ve form, is that even a modest number of 5×5 convolutions can be prohibitively expensive on top of a convolutional layer with a large number of filters. This problem becomes even more pronounced once pooling units are added to the mix: the number of output filters equals to the number of filters in the previous stage. #上述模块的一个大问题在于,至少在原始形式上,即使适量的5X5卷积堆积在具有大量滤波器卷积层上面也是极为昂贵的。一旦混合中加入池化单元,这个问题甚至变得特别突出:输出滤波器数目等于上一阶段滤波器数量。 The merging of output of the pooling layer with outputs of the convolutional layers would lead to an inevitable increase in the number of outputs from stage to stage. While this architecture might cover the optimal sparse structure, it would do it very inefficiently, leading to a computational blow up within a few stages.

#池化层输出与卷积层输出的融合将会不可避免地导致这一阶段到下一阶段输出数量的增长。虽然这个框架可以覆盖最优的稀疏结构,过程可能非常低效,若干阶段后可能会造成计算爆炸。

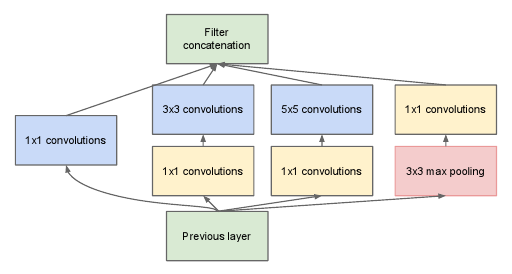

This leads to the second idea of the Inception architecture: judiciously reducing dimension wherever the computational requirements would increase too much otherwise.This is based on the success of embeddings: even low dimensional embeddings might contain a lot of information about a relatively large image patch. However, embeddings represent information in a dense, compressed form and compressed information is harder to process. The representation should be kept sparse at most places (as required by the conditions of [2]) and compress the signals only whenever they have to be aggregated en masse. #这促使Inception框架的第二个想法:明智地较少维度,否则可能会造成计算需求的极大增长。这是基于嵌入的成功:即使低维度的嵌入可能包含相对较大图像集的大量信息。但是,嵌入以稠密和压缩形式表达信息,而压缩信息更难处理。这种表达应该在大多地方保持稀疏(如条件2所要求的)并且在需要整体聚集的时候才进行信号的压缩。 That is,1×1 convolutions are used to compute reductions before the expensive 3×3 and 5×5 convolutions. Besides being used as reductions, they also include the use of rectified linear activation making them dual-purpose. The final result is depicted in Figure 2(b).

#就是在3X3和5X5卷积层之前使用1X1卷积来减少计算量。除了用作减少运算量,他们也包含rectified linear激活,使他具有双重作用。最终的结果呈现在表2(b)。

In general, an Inception network is a network consisting of modules of the above type stacked upon each other,with occasional max-pooling layers with stride 2 to halve the resolution of the grid. For technical reasons (memory efficiency during training), it seemed beneficial to start using Inception modules only at higher layers while keeping the lower layers in traditional convolutional fashion. This is not strictly necessary, simply reflecting some infrastructural inefficiencies in our current implementation.

#通常来说,一个Inception网络是一个包含上述彼此堆叠的模块,以及偶尔使用步长为2的最大池化层来减半网络分辨率。由于技术原因(训练过程过程的内存效率),看似来只在较高层使用Inception模块而保持较低层为传统卷积结构是有效的。这不是严格必要的,只是反应我们当前实现中一些基础设施中的抵消部分。

(b) Inception module with dimensionality reduction

A useful aspect of this architecture is that it allows for increasing the number of units at each stage significantly without an uncontrolled blow-up in computational complexity at later stages. This is achieved by the ubiquitous use of dimensionality reduction prior to expensive convolutions with larger patch sizes. #这个结构中有用的地方在于它允许增加每个阶段显著地增加单元数而不会造成较后的卷积层中计算量失控地爆炸式增长。这是通过普遍存在的昂贵的较大尺寸卷积层前降维的使用。 Furthermore, the design follows the practical intuition that visual information should be processed at various scales and then aggregated so that the next stage can abstract features from the different scales simultaneously.

#进一步说,这个设计遵循一个实践指南,视觉信息需要被处理成不同尺度,然后聚集在一起,因而下一个阶段可以立即抽取不同尺度的特征

The improved use of computational resources allows for increasing both the width of each stage as well as the number of stages without getting into computational difficulties. One can utilize the Inception architecture to create slightly inferior, but computationally cheaper versions of it. #计算资源的改善利用允许增加各个阶段的网络宽度以及在不造成计算瓶颈的情况下增加阶段数量。人们可以使用Inception模块来创建稍微低级的,但是计算相对便宜的计算机视觉版本。 We have found that all the available knobs and levers allow for a controlled balancing of computational resources resulting in networks that are 3 − 10× faster than similarly performing networks with non-Inception architecture, however this requires careful manual design at this point.

#我们发现有助于计算资源均衡的可用旋钮和杠杠使用网络速度相对于未使用Inception模块的网络有了3到10倍的提升,但是这一点上需要仔细的手动设计。

- GoogLeNet

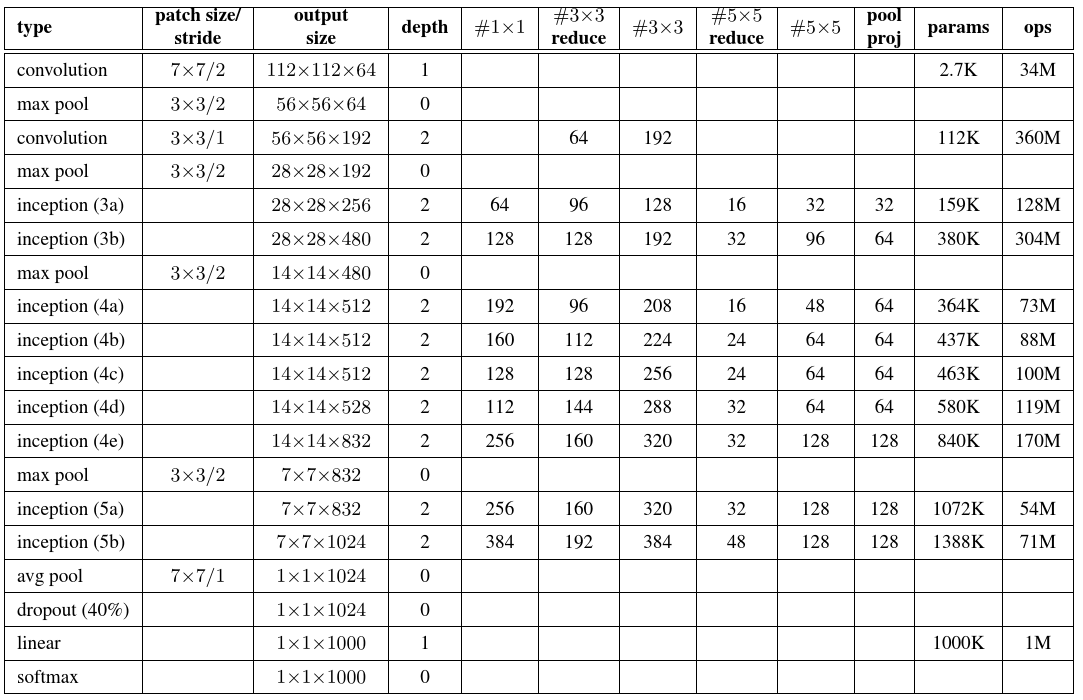

By the “GoogLeNet” name we refer to the particular incarnation of the Inception architecture used in our submission for the ILSVRC 2014 competition. We also used one deeper and wider Inception network with slightly superior quality, but adding it to the ensemble seemed to improve the results only marginally. #通过GoogLeNet这个名字我们指的是ILSVRC 2014挑战赛上提交作品中的Inception框架的特定体现。我们也用了另一个更深更宽性能稍微改善的Inception网络,但是把它加进融合中似乎只改善边缘的结果 We omit the details of that network,as empirical evidence suggests that the influence of the exact architectural parameters is relatively minor. Table 1 illustrates the most common instance of Inception used in the competition. This network (trained with different image patch sampling methods) was used for 6 out of the 7 models in our ensemble.

#我们省略了网络的细节,由于经验告诉我们准确架构参数的影响是相对较小的。表1阐述了比赛中使用多数常用的Inception实例。我们的融合中使用了7个这些网络(使用不同图像分块采样方式训练)的6个

Table 1: GoogLeNet incarnation of the Inception architecture

All the convolutions, including those inside the Inception modules, use rectified linear activation. The size of the receptive field in our network is 224×224 in the RGB color space with zero mean. “#3×3 reduce” and “#5×5 reduce” stands for the number of 1×1 filters in the reduction layer used before the 3×3 and 5×5 convolutions. One can see the number of 1×1 filters in the projection layer after the built-in max-pooling in the pool proj column. All these reduction/projection layers use rectified linear activation as well.

#所有卷积,包括那些位于Inception内部的,使用ReLU激活函数。我们的网络中RGB零均值颜色空间的感受野尺寸是224X224。 “#3×3 reduce” 和 “#5×5 reduce”代表3×3以及5×5卷积层前降维层的1X1滤波器数量。可以在pool proj这列看到最大池化层后的预测层中1X1滤波器数量。所有这些降维层/预测层也都使用ReLU激活函数。

The network was designed with computational efficiency and practicality in mind, so that inference can be run on individual devices including even those with limited computational resources, especially with low-memory footprint.The network is 22 layers deep when counting only layers with parameters (or 27 layers if we also count pooling). The overall number of layers (independent building blocks) used for the construction of the network is about 100. The exact number depends on how layers are counted by the machine learning infrastructure. #网络设计之初就考虑了计算效率和实践性,所以接口可以在包括有限计算资源尤其是小内存的个人设备上运行。统计时只考虑参数层,网络具有22层(或者把池化层也计算在内则为27层)。构建网络总共使用了100层(独立的构建模块)。额外的数目取决于如何计算机器学习基础框架 The use of average pooling before the classifier is based on [12], although our implementation has an additional linear layer. The linear layer enables us to easily adapt our networks to other label sets, however it is used mostly for convenience and we do not expect it to have a major effect. We found that a move from fully connected layers to average pooling improved the top-1 accuracy by about 0.6%, however the use of dropout remained essential even after removing the fully connected layers.

#分类器前的平均池化层是基于文献[12],尽管我们的实现中有一层额外的线性层。线性层使我们的网络模型更容易适用于其他含标签数据集,但多数情况是处于便利性,并没有期待它会起到主要的作用。我们发现将全连接层替换为平均池化层将top-1准确率提升了0.6个百分点,但即使移除了全连接层dropout仍然是必要的

Given relatively large depth of the network, the ability to propagate gradients back through all the layers in an effective manner was a concern. The strong performance of shallower networks on this task suggests that the features produced by the layers in the middle of the network should be very discriminative. By adding auxiliary classifiers connected to these intermediate layers, discrimination in the lower stages in the classifier was expected. #给定了相对大的深度网络,有效将梯度通过所有网络层进行传播的能力值得担忧。在这个任务上更浅的网络强大的性能说明网络中间层产生的特征应该是非常有判别力的。期待通过增加额外的分类器连接到这些中间层使较低阶段的分类器具有判别力 This was thought to combat the vanishing gradient problem while providing regularization. These classifiers take the form of smaller convolutional networks put on top of the output of the Inception (4a) and (4d) modules. During training, their loss gets added to the total loss of the network with a discount weight (the losses of the auxiliary classifiers were weighted by 0.3). #这被认为是提供正则化来抵抗梯度弥散问题。这些分类器通过在Inception(4a)和Inception(4d)输出的顶层放置较小卷积网络的形式。在训练阶段,它们的损失按照一定的比例权重加到网络的总体损失上(辅助分类器的权重为0.3。 At inference time, these auxiliary networks are discarded. Later control experiments have shown that the effect of the auxiliary networks is relatively minor (around 0.5%) and that it required only one of them to achieve the same effect.

#在推理阶段,这些辅助网络被抛弃。后续的受控实验证明了辅助网络的作用是相对较小的(约0.5%),并且只需要使用其中的一种即可实现相同的效果。

The exact structure of the extra network on the side, including the auxiliary classifier, is as follows: # 边上包含辅助分类器的额外网络准确结构如下所示: 1. An average pooling layer with 5×5 filter size and stride 3, resulting in an 4×4×512 output for the (4a),and 4×4×528 for the (4d) stage.

# 1.使用卷积尺寸为5*5,stride为3的平均池化层,产生了(4a)阶段中的4x4x512输出以及(4d)阶段中的4x4x528输出 2. A 1×1 convolution with 128 filters for dimension reduction and rectified linear activation.

# 2.一个拥有128个滤波器用于降维的1x1卷积层和RLU激活层 3. A fully connected layer with 1024 units and rectified linear activation.

# 3.一个拥有1024个单元的全连接层和RLU激活层 4. A dropout layer with 70% ratio of dropped outputs.

# 4.一个丢弃率为70%的dropout层 5. A linear layer with softmax loss as the classifier (predicting the same 1000 classes as the main classifier, but removed at inference time). # 5.一个使用softmax损失函数的线性层作为分类器(作为主分类器预测相同的1000种类物体,但是在推理阶段移除)

A schematic view of the resulting network is depicted in Figure 3

#最终网络的图标展现在表3中

- Training Methodology

GoogLeNet networks were trained using the DistBelief [4] distributed machine learning system using modest amount of model and data-parallelism. Although we used a CPU based implementation only, a rough estimate suggests that the GoogLeNet network could be trained to convergence using few high-end GPUs within a week, the main limitation being the memory usage. #GoogLeNet使用DistBelief[4]分布式机器学习系统以及数量模型和并行数据进行训练。尽管我们只是用CPU实现,粗略估计如果使用少量高性能GPUs,模型可以在一周内收敛,主要的限制是内存的使用量。 Our training used asynchronous stochastic gradient descent with 0.9 momentum [17], fixed learning rate schedule (decreasing the learning rate by 4% every 8 epochs). Polyak averaging [13] was used to create the final model used at inference time.

#我们的训练使用异步随机梯度下降法,其中momentum[17]为0.9,固定的学习率机制(每8个epochs将学习率降低4%)。Polyak平均[13]用于创建推理阶段最终使用的模型。

Image sampling methods have changed substantially over the months leading to the competition, and already converged models were trained on with other options, sometimes in conjunction with changed hyperparameters, such as dropout and the learning rate. Therefore, it is hard to give a definitive guidance to the most effective single way to train these networks. To complicate matters further, some of the models were mainly trained on smaller relative crops, others on larger ones, inspired by [8]. #图像采样方法在竞赛这个月潜意识中被改变了,已经覆盖使用其他方式训练的模型,有时候与可变超参数一起,例如dropout以及学习率。因此,对于大多数网络训练很难给出一个确切唯一的高效指导。对于后续更复杂的事情,一些模型主要在集中在更小的关联数据训练,有一些模型集中在更大数据集上训练。 Still, one prescription that was verified to work very well after the competition, includes sampling of various sized patches of the image whose size is distributed evenly between 8% and 100% of the image area with aspect ratio constrained to the interval [ 34 , 3 4 ]. Also, we found that the photometric distortionsof Andrew Howard [8] were useful to combat overfitting to the imaging conditions of training data.

#竞赛结束后仍然验证了一个方法的有效性,包括采样不同尺寸的图像块,甚至图像面积分布在8%到100%并保持宽高比在3/4到4/3之间。我们同时发现Andrew Howard[8]的亮度失真在处理训练过程中的过拟合非常有效。

- ILSVRC 2014 Classification Challenge Setup and Results

The ILSVRC 2014 classification challenge involves the task of classifying the image into one of 1000 leaf-node categories in the Imagenet hierarchy. There are about 1.2 million images for training, 50,000 for validation and 100,000 images for testing. Each image is associated with one ground truth category, and performance is measured based on the highest scoring classifier predictions. #ILSVRC 2014分类挑战赛包含将图像分成ImageNet分级中1000个之类中的其中一类。训练中使用了120万张图像,验证中使用了5万张图像,测试中使用了10万张图像。每张图像都与一个真是类别相关,性能都是基于最高得分分类器预测结果进行衡量的。 Two numbers are usually reported: the top-1 accuracy rate, which compares the ground truth against the first predicted class,and the top-5 error rate, which compares the ground truth against the first 5 predicted classes: an image is deemed Figure correctly classified if the ground truth is among the top-5,regardless of its rank in them. The challenge uses the top-5 error rate for ranking purposes,

#报告中通常会体现两个数值:将第一预测序列与实际结果进行对比的top-1准确率,以及将前五个预测序列与实际结果进行对比的top-5准确率:如果实际结果在top-5之中这判定图像被正确分类,而不管它们中的排名.比赛使用top-5错误率进行排名.

We participated in the challenge with no external data used for training. In addition to the training techniques aforementioned in this paper, we adopted a set of techniques during testing to obtain a higher performance, which we describe next. # 我们在比赛中没有使用多余的数据进行训练。除了本文中提到的训练技巧外,我们在测试阶段引入了一系列技巧来获取更高的性能,将在下文中阐述。 1.We independently trained 7 versions of the same GoogLeNet model (including one wider version), and performed ensemble prediction with them.These models were trained with the same initialization (even with the same initial weights, due to an oversight) and learning rate policies. They differed only in sampling methodologies and the randomized input image order. # 1.我们独立训练了7个版本相同的GoogLeNet模型(包括一个相对更宽的版本),并将它们的预测结果进行融合。这些模型使用相同的初始化进行训练(甚至是相同的初始化权值,由于一个疏忽)以及学习率策略。它们只在采样方法和输入图像随机顺序上有差异。 2.During testing, we adopted a more aggressive cropping approach than that of Krizhevsky et al. [9]. Specifically, we resized the image to 4 scales where the shorter dimension (height or width) is 256, 288, 320 and 352 respectively, take the left, center and right square of these resized images (in the case of portrait images, we take the top, center and bottom squares).For each square, we then take the 4 corners and the center 224×224 crop as well as the square resized to 224×224, and their mirrored versions. # 2.在测试阶段,我们引入了一个比Krizhevsky et al.[9]更加激进的裁剪方法.特别是,我们将图像分别放大了4个比例,其中具有代表性的短边(高度或宽度)分别为256,288,320以及352,取缩放后图像的左边,中间和右边(在人像例子中,我们取了上边,中间和底边)。对于每条边,我们随后取4个角以及中间224*224图像块以及缩放到224*224的方块图像和它的镜像。 This leads to 4×3×6×2 = 144 crops per image. A similar approach was used by Andrew Howard [8] in the previous year’s entry, which we empirically verified to perform slightly worse than the proposed scheme. We note that such aggressive cropping may not be necessary in real applications, as the benefit of more crops becomes marginal after a reasonable number of crops are present (as we will show later on) # 这使得每张图片中有4x3x6x2 = 144 个图片块。Andrew Howard[8]在去年挑战赛中使用了类似的方法,后来被证明稍微逊于我们所提出的机制。我们注意到这种激进的分块操作在实际应用中并非必要,在达到合理数量的图像块后(我们稍后会说明),使用更多分块所带来的边际效应微乎其微。 3.The softmax probabilities are averaged over multiple crops and over all the individual classifiers to obtain the final prediction. In our experiments we analyzed alternative approaches on the validation data, such as max pooling over crops and averaging over classifiers,but they lead to inferior performance than the simple averaging。

# 3.softmax概率是通过在各种图像块以及所有独立分类器上取平均以获取最终的预测结果。在我们的实验中,我们分析了验证集上的可选方法,例如在分块上的最大池化操作以及分类器上取平均,但是它们带来的效果并没有单一平均来得好。

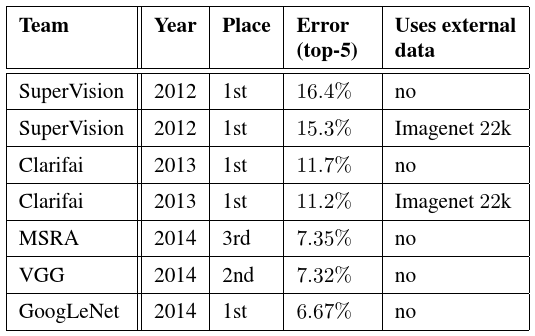

In the remainder of this paper, we analyze the multiple factors that contribute to the overall performance of the final submission. Our final submission to the challenge obtains a top-5 error of 6.67% on both the validation and testing data, ranking the first among other participants. This is a 56.5% relative reduction compared to the SuperVision approach in 2012,and about 40% relative reduction compared to the previous year’s best approach (Clarifai), both of which used external data for training the classifiers. Table 2 shows the statistics of some of the top-performing approaches over the past 3 years.

Table 2: Classification performance.

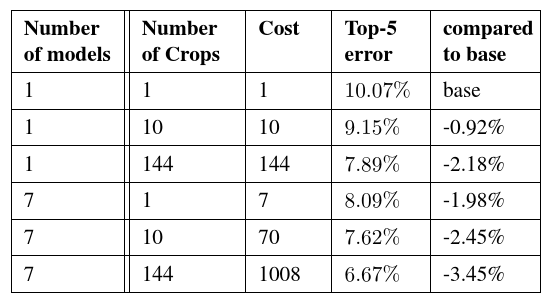

Table 3: GoogLeNet classification performance break down.

We also analyze and report the performance of multiple testing choices, by varying the number of models and the number of crops used when predicting an image in Table 3.When we use one model, we chose the one with the lowest top-1 error rate on the validation data. All numbers are reported on the validation dataset in order to not overfit to the testing data statistics.

- ILSVRC 2014 Detection Challenge Setup and Results

The ILSVRC detection task is to produce bounding boxes around objects in images among 200 possible classes.Detected objects count as correct if they match the class of the groundtruth and their bounding boxes overlap by at least 50% (using the Jaccard index). Extraneous detections count as false positives and are penalized. Contrary to the classification task, each image may contain many objects or none, and their scale may vary. Results are reported using the mean average precision (mAP). #ILSVRC检测任务要求在图像内的200种可能的物体周边产生边界框.被测物体如果与真实类别匹配且他们的边界框与实际位置相交超过50%(使用Jaccard index)则认定为正确.无关的检测则被认定为错误的并且给予惩罚.相对于分类任务,每个图像可能包含很多物体或者不包含,而且他们的尺寸可能变化比较大.结构使用平均准确率(mAP)进行汇报. The approach taken by GoogLeNet for detection is similar to the R-CNN by [6], but is augmented with the Inception model as the region classifier. Additionally, the region proposal step is improved by combining the selective search [20] approach with multibox [5] predictions for higher object bounding box recall.In order to reduce the number of false positives, the superpixel size was increased by 2×. This halves the proposals coming from the selective search algorithm. #GoogLeNet在检测任务中使用的方法与[6]所述R-CNN类似,但使用Inception模块作为区域分类器进行扩展.额外地,区域检测步骤混合使用selective search [20]方法和multibox [5]预测方法进行改善,为了达到更高的边界框recall.为了减少错误分类数量,超分辨率尺寸增大为原来的2倍.这将selective search算法中的建议减半. We added back 200 region proposals coming from multi-box [5] resulting, in total, in about 60% of the proposals used by [6], while increasing the coverage from 92% to 93%. The overall effect of cutting the number of proposals with increased coverage is a 1% improvement of the mean average precision for the single model case. Finally, we use an ensemble of 6 GoogLeNets when classifying each region. This leads to an increase in accuracy from 40% to 43.9%. Note that contrary to R-CNN, we did not use bounding box regression due to lack of time.

#我们将multi-box[5]算法结果中200个区域建议加回去,总体上,[6]使用了60%的边框建议,这将准确率从92%提高到93%.对于这例单一模型,总体上减少边框建议带来的准确率增长是1%.最后,我们在对每个区域进行分类的时候使用了6个GoogLeNets的融合.这将准确率从40%提高到43.9%.注意相对于R-CNN,由于时间关系我们没有使用边框回归.

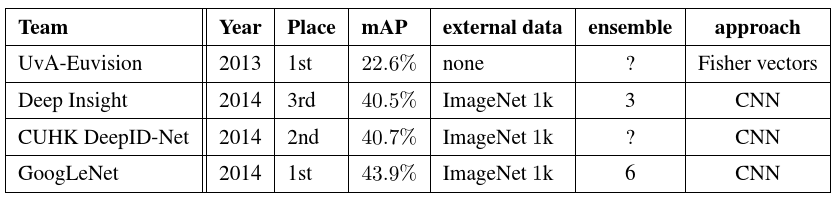

We first report the top detection results and show the progress since the first edition of the detection task. Compared to the 2013 result, the accuracy has almost doubled.The top performing teams all use convolutional networks.We report the official scores in Table 4 and common strategies for each team: the use of external data, ensemble models or contextual models.

#我们首先报告了顶尖的检测结果并展示了第一版检测任务后的进步.相比与2013的结果,准确率几乎翻倍了.成绩最好的队伍都是用卷积网络.我们在表4中汇总了各团队的官方成绩以及他们使用的策略:使用额外的数据,模型融合或语境模型.

Table 4: Comparison of detection performances. Unreported values are noted with question marks

The external data is typically the ILSVRC12 classification data for pre-training a model that is later refined on the detection data. Some teams also mention the use of the localization data. Since a good portion of the localization task bounding boxes are not included in the detection dataset, one can pre-train a general bounding box regressor with this data the same way classification is used for pre-training. The GoogLeNet entry did not use the localization data for pretraining.

#额外的数据是典型用于模型预训练的ILSVRC12分类数据集,这个模型随后在检测数据集上进行提炼.有一些队伍也提到定位数据的使用.由于一个好的定位任务边界框部分并没有包含在检测数据集上,很难使用该数据集进行通用边界框回归器的预训练.GoogLeNet作品中并没有使用定位数据进行预训练.

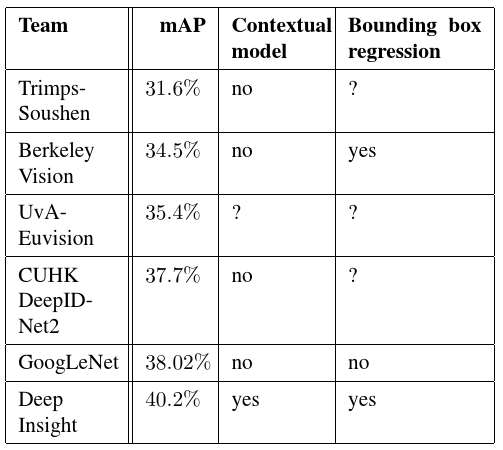

In Table 5, we compare results using a single model only.The top performing model is by Deep Insight and surprisingly only improves by 0.3 points with an ensemble of 3 models while the GoogLeNet obtains significantly stronger results with the ensemble.

#在表5中,我们只将结果与一个模型进行比较.表现最好的模型是Deep Insight实现的,令人惊讶的是它使用3个模型的融合仅提高了0.3个百分点,而GoogLeNet使用融合后取得明显更强的结果.

Table 5: Single model performance for detection.

- Conclusions

Our results yield a solid evidence that approximating the expected optimal sparse structure by readily available dense building blocks is a viable method for improving neural networks for computer vision. The main advantage of this method is a significant quality gain at a modest increase of computational requirements compared to shallower and narrower architectures.

#我们的结果拥有坚实的证据说明使用现有稠密模块近似期望最优稀疏结构是改善计算机视觉的一个切实可行的方法.这个方法的主要优点是相比于更浅和更窄的框架在计算需求的少量增长下实现显著的质量提升.

Our object detection work was competitive despite not utilizing context nor performing bounding box regression,suggesting yet further evidence of the strengths of the Inception architecture.

#我们的目标检测作品具有竞争力的,即使没有利用上下文信息也没有展开边框回归,这说明Inception框架未来会有发掘出更多优势.

For both classification and detection, it is expected that similar quality of result can be achieved by much more expensive non-Inception-type networks of similar depth and width.Still, our approach yields solid evidence that moving to sparser architectures is feasible and useful idea in general. This suggest future work towards creating sparser and more refined structures in automated ways on the basis of [2], as well as on applying the insights of the Inception architecture to other domains

#对于分类和检测任务,可以预见获得相似质量的结果通过相似深度和宽度的非Inception类型网络来实现会昂贵的多.同时,我们的方法拥有坚定的证据说明通常使用稀疏结构是可行有效的.这建议未来的工作朝着基于[2]实现自动建立稀疏和简洁的结构,并将Inception框架应用到其他领域.