Reposted from jack-Cui's blog: http://blog.csdn.net/c406495762

Platform: Windows

Python version: Python 3.x

IDE: Sublime Text 3

I. Introduction to Beautiful Soup

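Put simply, Beautiful Soup is a Python library whose main job is extracting data from web pages. The official description reads:

- Beautiful Soup provides a few simple, Pythonic functions for navigating, searching, and modifying a parse tree. It is a toolkit that parses a document and hands you the data you want to extract. Because it is so simple, a complete application takes very little code.

- Beautiful Soup automatically converts incoming documents to Unicode and outgoing documents to UTF-8. You don't need to think about encodings unless the document doesn't specify one and Beautiful Soup can't detect it. In that case, you only have to state the original encoding.

- Beautiful Soup sits on top of popular Python parsers such as lxml and html5lib, letting you try out different parsing strategies or trade speed for flexibility.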
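The novel site used later serves GBK-encoded pages, so the "state the original encoding" case is worth seeing in code. Here is a minimal sketch: pass the raw bytes together with `from_encoding`, then check `original_encoding`. The sample markup is made up for illustration. The built-in `html.parser` is used so the snippet doesn't depend on lxml.

```python
from bs4 import BeautifulSoup

# Made-up page, encoded as GBK bytes the way a server might send it
raw = '<html><head><title>一念永恒</title></head><body><p>第一章</p></body></html>'.encode('gbk')

# Tell Beautiful Soup the original encoding instead of letting it guess
soup = BeautifulSoup(raw, 'html.parser', from_encoding='gbk')

print(soup.original_encoding)  # the encoding used to decode the bytes
print(soup.title.string)       # the text is now an ordinary Python (Unicode) str
```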

Enough talk, let's get hands-on!

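II. Hands-on Practice

1. Background

Novel site: 笔趣看 (Biqukan)

URL:http://www.biqukan.com/

Biqukan is a pirate novel site that hosts many novels from Qidian (起点中文网). Its updates lag slightly behind the legitimate releases on Qidian, and it only supports reading online, with no way to download a whole novel. This exercise therefore crawls the novel 《一念永恒》, a fantasy novel that 耳根 (Er Gen) was still serializing at the time, from this site and saves it to a file. PS: This example is for learning and exchange only. Please support Er Gen by subscribing on Qidian.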

2. Installing Beautiful Soup

We can install it with pip3 or easy_install. The commands to run in a cmd window are:

a) Installing with pip3

```
pip3 install beautifulsoup4
```

b) Installing with easy_install (deprecated; prefer pip3)

```
easy_install beautifulsoup4
```

The examples below pass 'lxml' as the parser name, which requires the separate lxml package: `pip3 install lxml`.

3. Prerequisites

For more detail, see the official documentation:

URL:http://beautifulsoup.readthedocs.io/zh_CN/latest/

a) Creating a Beautiful Soup object

<span style="color:#000000"><code>from bs4 import BeautifulSoup

#html为解析的页面获得html信息,为方便讲解,自己定义了一个html文件

html = """

<span style="color:#006666"><<span style="color:#4f4f4f">html</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">head</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">title</span>></span>Jack_Cui<span style="color:#006666"></<span style="color:#4f4f4f">title</span>></span>

<span style="color:#006666"></<span style="color:#4f4f4f">head</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">body</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">p</span> <span style="color:#4f4f4f">class</span>=<span style="color:#009900">"title"</span> <span style="color:#4f4f4f">name</span>=<span style="color:#009900">"blog"</span>></span><span style="color:#006666"><<span style="color:#4f4f4f">b</span>></span>My Blog<span style="color:#006666"></<span style="color:#4f4f4f">b</span>></span><span style="color:#006666"></<span style="color:#4f4f4f">p</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">li</span>></span><span style="color:#880000"><!--注释--></span><span style="color:#006666"></<span style="color:#4f4f4f">li</span>></span>

<span style="color:#006666"><<span style="color:#4f4f4f">a</span> <span style="color:#4f4f4f">href</span>=<span style="color:#009900">"http://blog.csdn.net/c406495762/article/details/58716886"</span> <span style="color:#4f4f4f">class</span>=<span style="color:#009900">"sister"</span> <span style="color:#4f4f4f">id</span>=<span style="color:#009900">"link1"</span>></span>Python3网络爬虫(一):利用urllib进行简单的网页抓取<span style="color:#006666"></<span style="color:#4f4f4f">a</span>></span><span style="color:#006666"><<span style="color:#4f4f4f">br</span>/></span>

<span style="color:#006666"><<span style="color:#4f4f4f">a</span> <span style="color:#4f4f4f">href</span>=<span style="color:#009900">"http://blog.csdn.net/c406495762/article/details/59095864"</span> <span style="color:#4f4f4f">class</span>=<span style="color:#009900">"sister"</span> <span style="color:#4f4f4f">id</span>=<span style="color:#009900">"link2"</span>></span>Python3网络爬虫(二):利用urllib.urlopen发送数据<span style="color:#006666"></<span style="color:#4f4f4f">a</span>></span><span style="color:#006666"><<span style="color:#4f4f4f">br</span>/></span>

<span style="color:#006666"><<span style="color:#4f4f4f">a</span> <span style="color:#4f4f4f">href</span>=<span style="color:#009900">"http://blog.csdn.net/c406495762/article/details/59488464"</span> <span style="color:#4f4f4f">class</span>=<span style="color:#009900">"sister"</span> <span style="color:#4f4f4f">id</span>=<span style="color:#009900">"link3"</span>></span>Python3网络爬虫(三):urllib.error异常<span style="color:#006666"></<span style="color:#4f4f4f">a</span>></span><span style="color:#006666"><<span style="color:#4f4f4f">br</span>/></span>

<span style="color:#006666"></<span style="color:#4f4f4f">body</span>></span>

<span style="color:#006666"></<span style="color:#4f4f4f">html</span>></span>

"""

#创建Beautiful Soup对象

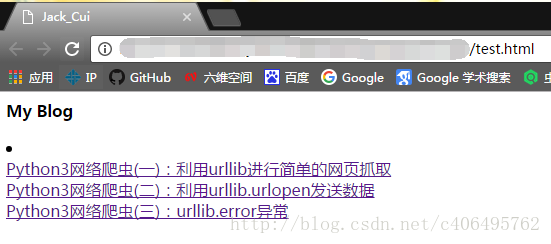

soup = BeautifulSoup(html,'lxml')</code></span>如果将上述的html的信息写入一个html文件,打开效果是这样的(<!–注释–>为注释内容,不会显示):

Likewise, we can create the object from a local HTML file:

```python
with open('test.html', encoding='utf-8') as f:
    soup = BeautifulSoup(f, 'lxml')
```
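A runnable variant of the local-file case, written as a sketch. It first writes a hypothetical `test.html` (GBK-encoded, with a `<meta charset>` declaration) to a temporary directory. It then opens the file in binary mode so Beautiful Soup can read the declared encoding itself, instead of relying on a guessed text-mode encoding. `html.parser` is used to avoid the lxml dependency.

```python
import os
import tempfile

from bs4 import BeautifulSoup

page = '<html><head><meta charset="gbk"><title>Jack_Cui</title></head><body><p>一念永恒</p></body></html>'

with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, 'test.html')  # hypothetical file name
    # Save the page as GBK bytes, as a Chinese site might serve it
    with open(path, 'wb') as f:
        f.write(page.encode('gbk'))

    # Binary mode: Beautiful Soup picks up the <meta charset> declaration itself
    with open(path, 'rb') as f:
        soup = BeautifulSoup(f, 'html.parser')

print(soup.original_encoding)
print(soup.p.string)
```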

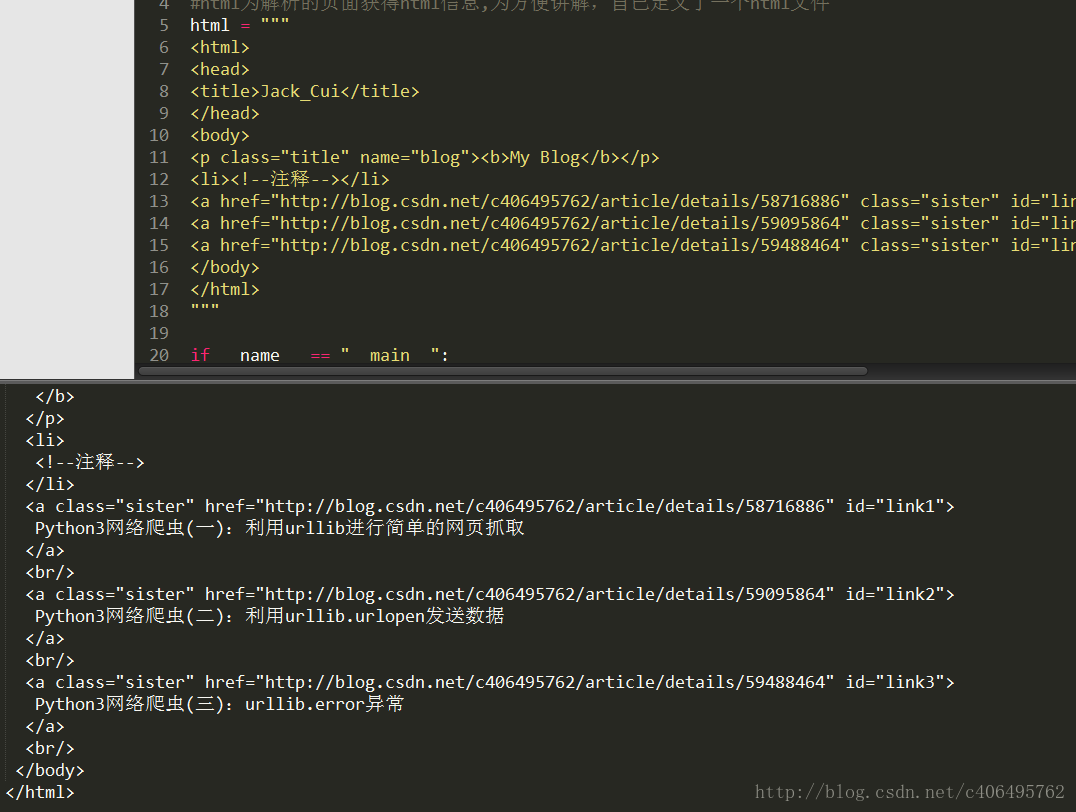

Pretty-print the parsed document with:

```python
print(soup.prettify())
```

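b) The four kinds of Beautiful Soup objects

Beautiful Soup turns a complex HTML document into a complex tree in which every node is a Python object. All of these objects fall into four kinds: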

- Tag

- NavigableString

- BeautifulSoup

- Comment
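To see the four kinds side by side, here is a minimal sketch on a made-up one-line document, parsed with the built-in `html.parser` so lxml isn't needed. Each print shows the class of one node. BeautifulSoup is itself a subclass of Tag, which is why the soup object behaves like a tag.

```python
from bs4 import BeautifulSoup
from bs4.element import Comment, NavigableString, Tag

soup = BeautifulSoup('<p><b>My Blog</b><!--注释--></p>', 'html.parser')

print(type(soup))                # the document as a whole: BeautifulSoup
print(type(soup.b))              # an HTML tag: Tag
print(type(soup.b.string))       # the text inside a tag: NavigableString
print(type(soup.p.contents[1]))  # an HTML comment: Comment
```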

(1) Tag

Put plainly, a Tag is one of the individual tags in the HTML, for example:

```html
<title>Jack_Cui</title>
```

Here `title` is the HTML tag, and the tag together with everything it contains is a Tag. Let's see how easily Beautiful Soup gets at Tags.

In each snippet below, the commented lines show the output:

```python
print(soup.title)
#<title>Jack_Cui</title>

print(soup.head)
#<head> <title>Jack_Cui</title></head>

print(soup.a)
#<a class="sister" href="http://blog.csdn.net/c406495762/article/details/58716886" id="link1">Python3网络爬虫(一):利用urllib进行简单的网页抓取</a>

print(soup.p)
#<p class="title" name="blog"><b>My Blog</b></p>
```

With soup plus a tag name, we can easily get the content of these tags. Isn't that much more convenient than regular expressions? One caveat: this returns only the first matching tag in the whole document. Querying all matching tags is covered later.
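Under the hood, `soup.a` is shorthand for `soup.find('a')`: both return the first match in document order. The sketch below checks that and previews `find_all()`, which is covered later. It uses a trimmed copy of the sample links with made-up link texts, parsed with `html.parser`.

```python
from bs4 import BeautifulSoup

html = '''
<a href="http://blog.csdn.net/c406495762/article/details/58716886" class="sister" id="link1">one</a>
<a href="http://blog.csdn.net/c406495762/article/details/59095864" class="sister" id="link2">two</a>
'''
soup = BeautifulSoup(html, 'html.parser')

# Attribute-style access returns only the first <a>
first = soup.a
print(first['id'])

# It is the very same object that find() returns
print(first is soup.find('a'))

# find_all() returns every match as a list
print([a['id'] for a in soup.find_all('a')])
```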

We can also check the type of these objects:

```python
print(type(soup.title))
#<class 'bs4.element.Tag'>
```

A Tag has two important attributes: name and attrs.

name:

```python
print(soup.name)
print(soup.title.name)
#[document]
#title
```

The soup object itself is special: its name is [document]. For any other tag, name is simply the tag's own name.

attrs:

```python
print(soup.a.attrs)
#{'class': ['sister'], 'href': 'http://blog.csdn.net/c406495762/article/details/58716886', 'id': 'link1'}
```

Here we print all attributes of the a tag, and the result is a dictionary.
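Because `attrs` is a plain dictionary, ordinary dict operations work on it. Note that `class` comes back as a list: Beautiful Soup treats it as a multi-valued attribute, since an element can carry several classes. A sketch with a made-up tag:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<a href="/1_1094/" class="sister first" id="link1">x</a>', 'html.parser')
attrs = soup.a.attrs

# Iterate over the attributes like any dict
for key, value in attrs.items():
    print(key, '->', value)

# class is split into a list of class names; other attributes stay plain strings
print(attrs['class'])
print(attrs['id'])
```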

To get a single attribute, for example the class of the a tag, either of these two forms works. They differ only for a missing attribute: `tag['x']` raises a KeyError, while `tag.get('x')` returns None.

```python
print(soup.a['class'])
print(soup.a.get('class'))
#['sister']
#['sister']
```

(2) NavigableString

Now that we can get a tag, how do we get the text inside it? Simple: use .string, for example:

```python
print(soup.title.string)
#Jack_Cui
```

(3) BeautifulSoup

The BeautifulSoup object represents the entire content of a document. Most of the time you can treat it as a Tag object, a special Tag, so we can get its type, name, and attributes:

```python
print(type(soup))
print(soup.name)
print(soup.attrs)
#<class 'bs4.BeautifulSoup'>
#[document]
#{}
```

(4) Comment

A Comment object is a special kind of NavigableString. When printed, its content does not include the comment markers, so if you don't handle it carefully it can cause unexpected trouble in text processing.

```python
print(soup.li)
print(soup.li.string)
print(type(soup.li.string))
#<li><!--注释--></li>
#注释
#<class 'bs4.element.Comment'>
```

The content of the li tag is actually a comment. When we output it with .string, however, the comment markers are stripped, which can cause unnecessary trouble.

Printing its type shows that it is a Comment, so it is best to check the type before using it:

```python
from bs4 import element

if isinstance(soup.li.string, element.Comment):
    print(soup.li.string)
```

The code first checks whether the string is a Comment and only then does something with it, such as printing it.
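In practice you usually want to find comments so you can skip or remove them. Here is a sketch on a small made-up document. `find_all(string=...)` accepts a function, so an `isinstance` check collects every Comment, and `extract()` removes each one from the tree. (`string=` is the newer name of the text argument described later. Beautiful Soup versions before 4.4.0 only accept `text=`.)

```python
from bs4 import BeautifulSoup, Comment

soup = BeautifulSoup('<body><p>My Blog</p><li><!--注释--></li><p>text<!--hidden--></p></body>', 'html.parser')

# Collect every comment node in the document
comments = soup.find_all(string=lambda s: isinstance(s, Comment))
print(comments)

# Remove them from the tree so they can't leak into extracted text
for c in comments:
    c.extract()

print(soup.get_text())
```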

c) Traversing the document tree

(1) Direct children (excluding grandchildren)

contents:

A tag's .contents attribute returns the tag's children as a list:

```python
print(soup.body.contents)
#['\n', <p class="title" name="blog"><b>My Blog</b></p>, '\n', <li><!--注释--></li>, '\n', <a class="sister" href="http://blog.csdn.net/c406495762/article/details/58716886" id="link1">Python3网络爬虫(一):利用urllib进行简单的网页抓取</a>, <br/>, '\n', <a class="sister" href="http://blog.csdn.net/c406495762/article/details/59095864" id="link2">Python3网络爬虫(二):利用urllib.urlopen发送数据</a>, <br/>, '\n', <a class="sister" href="http://blog.csdn.net/c406495762/article/details/59488464" id="link3">Python3网络爬虫(三):urllib.error异常</a>, <br/>, '\n']
```

The result is a list, so we can use a list index to get a single element:

<span style="color:#000000"><code>print(soup.body.contents[1])

<span style="color:#006666"><<span style="color:#4f4f4f">p</span> <span style="color:#4f4f4f">class</span>=<span style="color:#009900">"title"</span> <span style="color:#4f4f4f">name</span>=<span style="color:#009900">"blog"</span>></span><span style="color:#006666"><<span style="color:#4f4f4f">b</span>></span>My Blog<span style="color:#006666"></<span style="color:#4f4f4f">b</span>></span><span style="color:#006666"></<span style="color:#4f4f4f">p</span>></span></code></span>children:

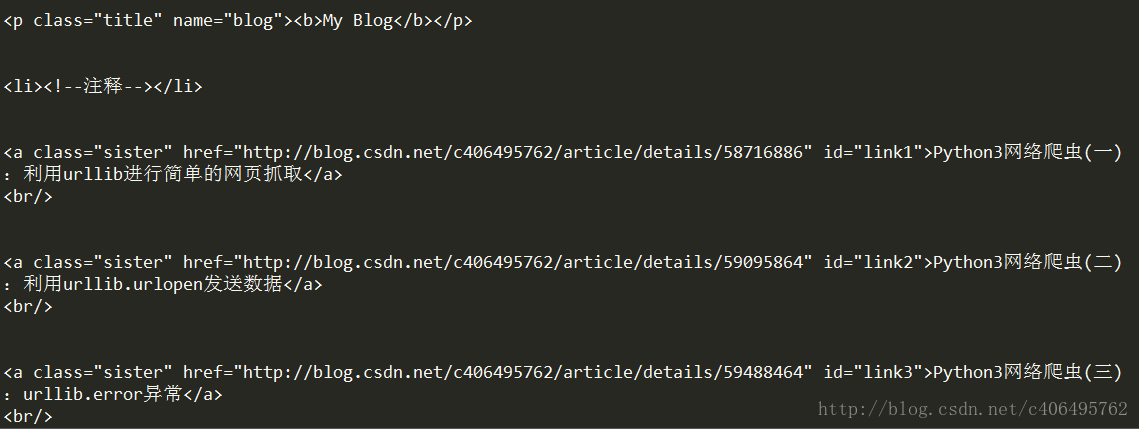

它返回的不是一个 list,不过我们可以通过遍历获取所有子节点,它是一个 list 生成器对象:

<span style="color:#000000"><code><span style="color:#000088">for</span> child <span style="color:#000088">in</span> soup.body.children:

<span style="color:#4f4f4f">print</span>(child)</code></span>结果如下图所示:
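.children yields direct children only, as the heading above stresses. For comparison, here is a sketch using `.descendants`, which also walks into grandchildren and text nodes. It runs on a made-up snippet.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

soup = BeautifulSoup('<body><p class="title"><b>My Blog</b></p></body>', 'html.parser')

# Direct children of <body>: just the <p>
direct = [child.name for child in soup.body.children]
print(direct)

# All descendants: <p>, then <b> inside it, then the text inside <b>
everything = [node.name if isinstance(node, Tag) else str(node) for node in soup.body.descendants]
print(everything)
```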

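(2) Searching the document tree

find_all(name, attrs, recursive, text, limit, **kwargs):

find_all() searches all tag descendants of the current tag and checks whether each one matches the filters.

1) The name argument:

The name argument finds all tags whose name is name. String nodes are ignored automatically.

Passing a string:

The simplest filter is a string. Pass a string to a search method and Beautiful Soup looks for an exact match against it. The example below finds all `<a>` tags in the document: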

```python
print(soup.find_all('a'))
#[<a class="sister" href="http://blog.csdn.net/c406495762/article/details/58716886" id="link1">Python3网络爬虫(一):利用urllib进行简单的网页抓取</a>, <a class="sister" href="http://blog.csdn.net/c406495762/article/details/59095864" id="link2">Python3网络爬虫(二):利用urllib.urlopen发送数据</a>, <a class="sister" href="http://blog.csdn.net/c406495762/article/details/59488464" id="link3">Python3网络爬虫(三):urllib.error异常</a>]
```

Passing a regular expression:

If you pass a regular expression, Beautiful Soup filters against it using the regex's search() method (with a `^` anchor, as here, this behaves like matching at the start). The example below finds all tags whose names start with b. Both `<body>` and `<b>` should be found, along with the `<br>` tags in this document:

```python
import re

for tag in soup.find_all(re.compile("^b")):
    print(tag.name)
#body
#b
#br
#br
#br
```

Passing a list:

如果传入列表参数,Beautiful Soup会将与列表中任一元素匹配的内容返回,下面代码找到文档中所有<title>标签和<b>标签:

<span style="color:#000000"><code>print(soup.find_all([<span style="color:#009900">'title'</span>,<span style="color:#009900">'b'</span>]))

#<span style="color:#008800">[<title>Jack_Cui</title>, <b>My Blog</b>]</span></code></span>传递True:

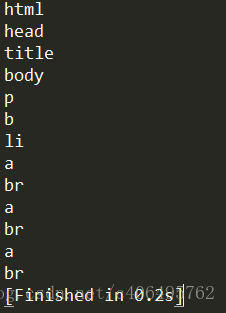

True 可以匹配任何值,下面代码查找到所有的tag,但是不会返回字符串节点:

<span style="color:#000000"><code><span style="color:#000088">for</span> tag <span style="color:#000088">in</span> soup.find_all(<span style="color:#000088">True</span>):

print(tag.name)</code></span>运行结果:

2) The attrs argument

Pass a dictionary as the attrs argument of find_all() to search for tags that have particular attributes.
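attrs matters most when an attribute's name clashes with one of find_all()'s own parameters. In the sample document the `<p>` tag has `name="blog"`, but `find_all(name='blog')` would look for tags *called* blog. A sketch with a trimmed copy of that tag:

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<p class="title" name="blog"><b>My Blog</b></p>', 'html.parser')

# name= is interpreted as the tag name, so this finds no <blog> tags
by_tag_name = soup.find_all(name='blog')

# attrs= matches the HTML attribute that happens to be called "name"
by_attribute = soup.find_all(attrs={'name': 'blog'})

print(by_tag_name)
print(by_attribute)
```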

```python
print(soup.find_all(attrs={"class": "title"}))
#[<p class="title" name="blog"><b>My Blog</b></p>]
```

3) The recursive argument

When you call a tag's find_all(), Beautiful Soup searches all of that tag's descendants. To search only its direct children, pass recursive=False.
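A sketch of the difference, using the sample document's `<html>`/`<head>`/`<title>` nesting, trimmed and parsed with `html.parser`. `<title>` is a grandchild of `<html>`, so it drops out of the results once recursive=False.

```python
from bs4 import BeautifulSoup

soup = BeautifulSoup('<html><head><title>Jack_Cui</title></head><body></body></html>', 'html.parser')

# Default: all descendants of <html> are searched
print(soup.html.find_all('title'))

# recursive=False: only direct children of <html> are considered
print(soup.html.find_all('title', recursive=False))
print([tag.name for tag in soup.html.find_all(True, recursive=False)])
```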

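4) The text argument

The text argument searches the document's strings instead of its tags. Like name, it accepts a string, a regular expression, a list, or True. (From Beautiful Soup 4.4.0 on, the same argument is also available as string.)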

```python
print(soup.find_all(text="Python3网络爬虫(三):urllib.error异常"))
#['Python3网络爬虫(三):urllib.error异常']
```

5) The limit argument

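find_all() returns every result it finds, which can be slow on a large document tree. If you don't need all the results, use the limit argument to cap how many are returned. It works like the LIMIT keyword in SQL: once the number of results reaches limit, the search stops and returns.

The document contains 3 matching tags, but only 2 are returned because we limited the count: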

```python
print(soup.find_all("a", limit=2))
#[<a class="sister" href="http://blog.csdn.net/c406495762/article/details/58716886" id="link1">Python3网络爬虫(一):利用urllib进行简单的网页抓取</a>, <a class="sister" href="http://blog.csdn.net/c406495762/article/details/59095864" id="link2">Python3网络爬虫(二):利用urllib.urlopen发送数据</a>]
```

6) The **kwargs arguments

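Any keyword argument that isn't one of find_all()'s own parameters is treated as an attribute filter. Because class is a reserved word in Python, the class attribute is passed as class_. The call below finds every tag whose class attribute is title. Like the other filters, keyword arguments accept strings and regular expressions, as well as lists and True.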
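A sketch of the other filter types used as keyword arguments, on two links shaped like the sample document's (link texts made up): a regular expression on href, True meaning "has this attribute at all", and several keywords combined.

```python
import re

from bs4 import BeautifulSoup

html = '''
<a href="http://blog.csdn.net/c406495762/article/details/58716886" class="sister" id="link1">one</a>
<a href="http://blog.csdn.net/c406495762/article/details/59095864" class="sister">two</a>
'''
soup = BeautifulSoup(html, 'html.parser')

# Regular-expression filter on an attribute value (matched with search())
print(soup.find_all(href=re.compile(r'59095864$')))

# True: any tag that has an id attribute, whatever its value
print(soup.find_all(id=True))

# Several keyword filters at once: all of them must match
print(soup.find_all('a', class_='sister', id='link1'))
```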

```python
print(soup.find_all(class_="title"))
#[<p class="title" name="blog"><b>My Blog</b></p>]
```

4. Crawling the novel's content

With the material above, we're ready for this hands-on exercise.

a) Crawling a single chapter

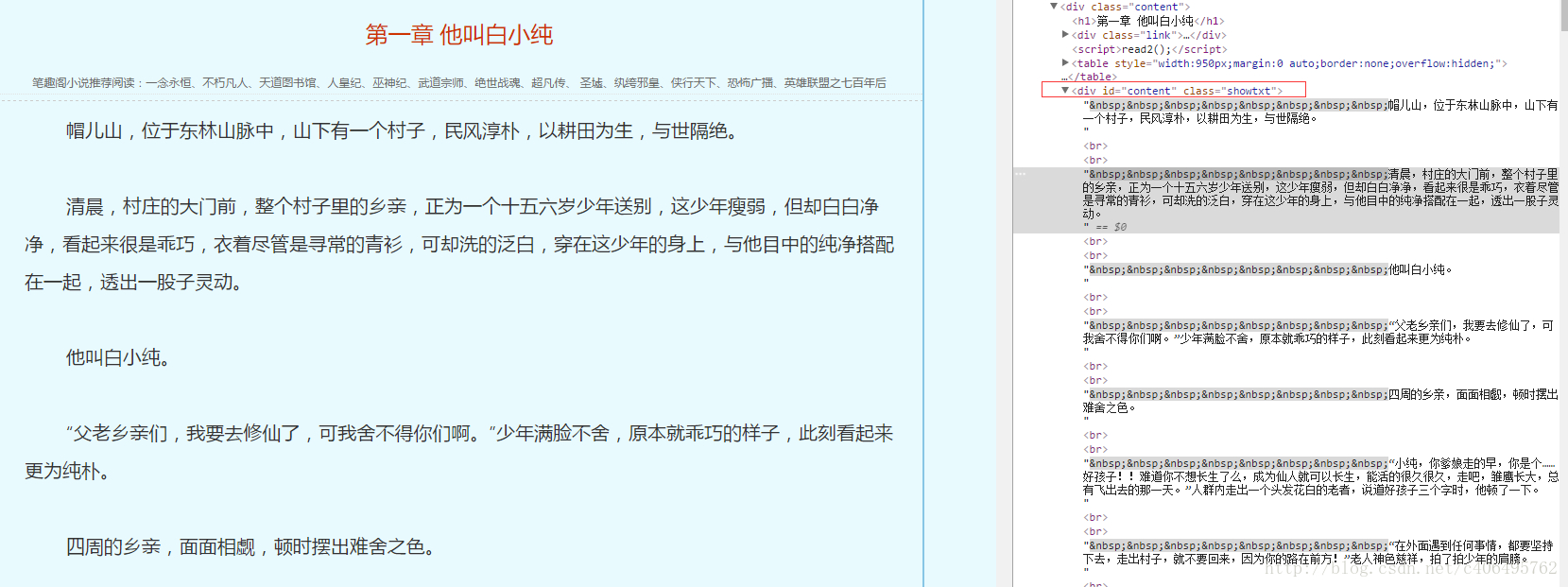

Open the first chapter of 《一念永恒》 and inspect its elements in the browser.

URL:http://www.biqukan.com/1_1094/5403177.html

The inspection shows that the chapter text lives in a div tag with id content and class showtxt:

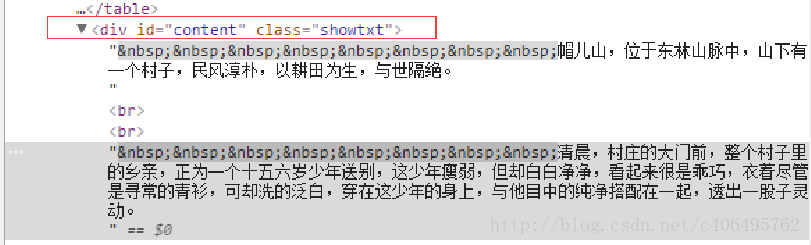

Close-up:

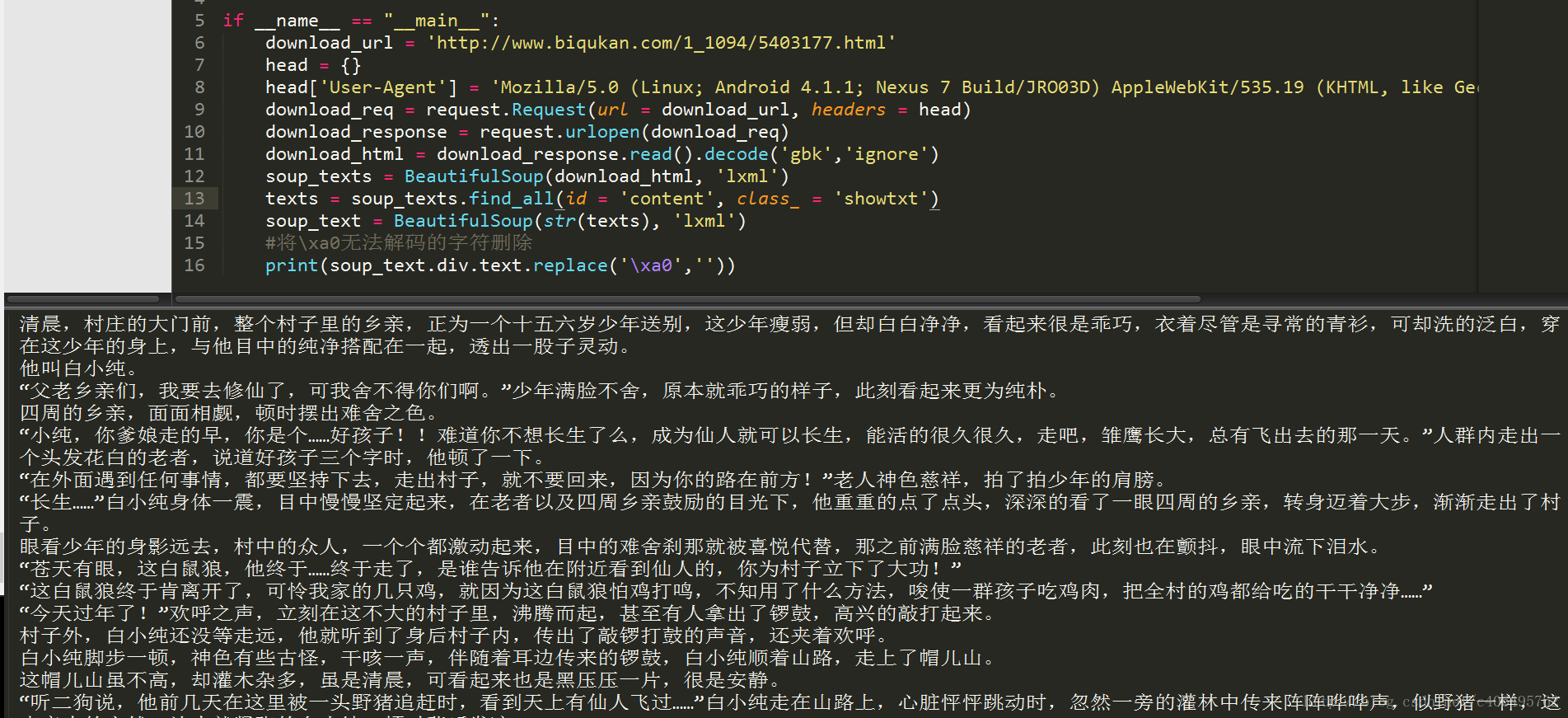

So we can crawl this chapter's content as follows:

<span style="color:#000000"><code><span style="color:#880000"># -*- coding:UTF-8 -*-</span>

<span style="color:#4f4f4f">from</span> urllib import request

<span style="color:#4f4f4f">from</span> bs4 import BeautifulSoup

<span style="color:#000088">if</span> __name__ == <span style="color:#009900">"__main__"</span>:

download_url = <span style="color:#009900">'http://www.biqukan.com/1_1094/5403177.html'</span>

head = {}

head[<span style="color:#009900">'User-Agent'</span>] = <span style="color:#009900">'Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19'</span>

download_req = request.Request(url = download_url, headers = head)

download_response = request.urlopen(download_req)

download_html = download_response.<span style="color:#4f4f4f">read</span>().decode(<span style="color:#009900">'gbk'</span>,<span style="color:#009900">'ignore'</span>)

soup_texts = BeautifulSoup(download_html, <span style="color:#009900">'lxml'</span>)

texts = soup_texts.find_all(id = <span style="color:#009900">'content'</span>, class_ = <span style="color:#009900">'showtxt'</span>)

soup_text = BeautifulSoup(str(texts), <span style="color:#009900">'lxml'</span>)

<span style="color:#880000">#将\xa0无法解码的字符删除</span>

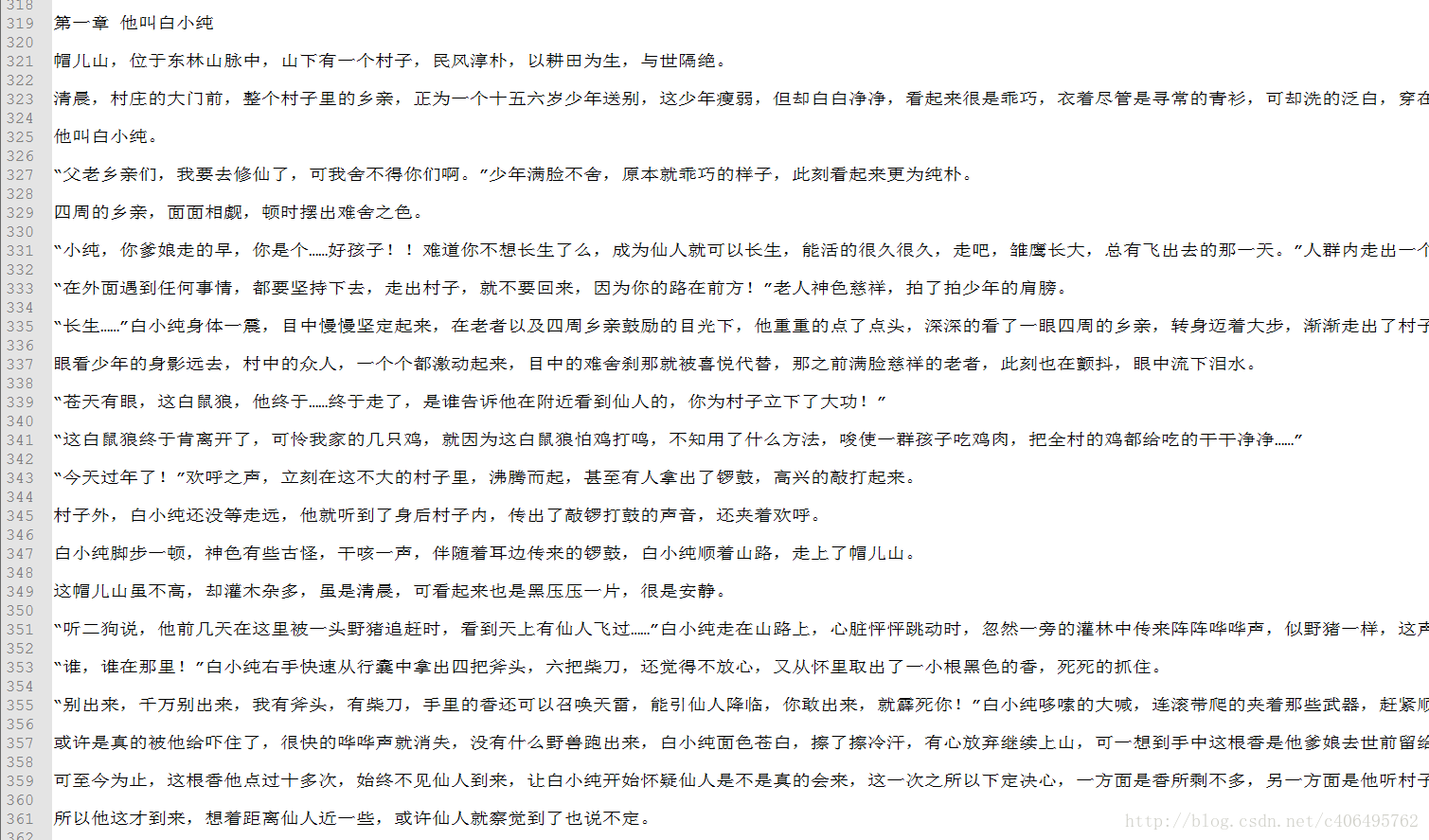

print(soup_text.div.<span style="color:#000088">text</span>.<span style="color:#4f4f4f">replace</span>(<span style="color:#009900">'\xa0'</span>,<span style="color:#009900">''</span>))</code></span>运行结果:
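The page above may change or go offline, so the extraction step can also be tried on a local string. This sketch assumes a made-up snippet that mimics the structure found during inspection: a div with id content and class showtxt, with paragraphs indented by `&nbsp;`. It uses find() to get the div directly instead of re-parsing `str(texts)`, and `html.parser` instead of lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical chapter page shaped like the one inspected above
page = '''
<html><body>
<div id="content" class="showtxt">&nbsp;&nbsp;&nbsp;&nbsp;第一段<br /><br />&nbsp;&nbsp;&nbsp;&nbsp;第二段</div>
</body></html>
'''

soup = BeautifulSoup(page, 'html.parser')

# find() returns the first matching Tag (or None), so no second parse is needed
content_div = soup.find('div', id='content', class_='showtxt')

# &nbsp; becomes '\xa0' after parsing; get_text('\n') puts each text node on its own line
chapter_text = content_div.get_text('\n').replace('\xa0', '')
print(chapter_text)
```

Joining with '\n' keeps paragraphs apart, which the original `.text` call does not do on its own.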

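As you can see, we've successfully crawled the first chapter. Next comes crawling every chapter, and for that we first need each chapter's address. So we need to inspect the table-of-contents page of 《一念永恒》.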

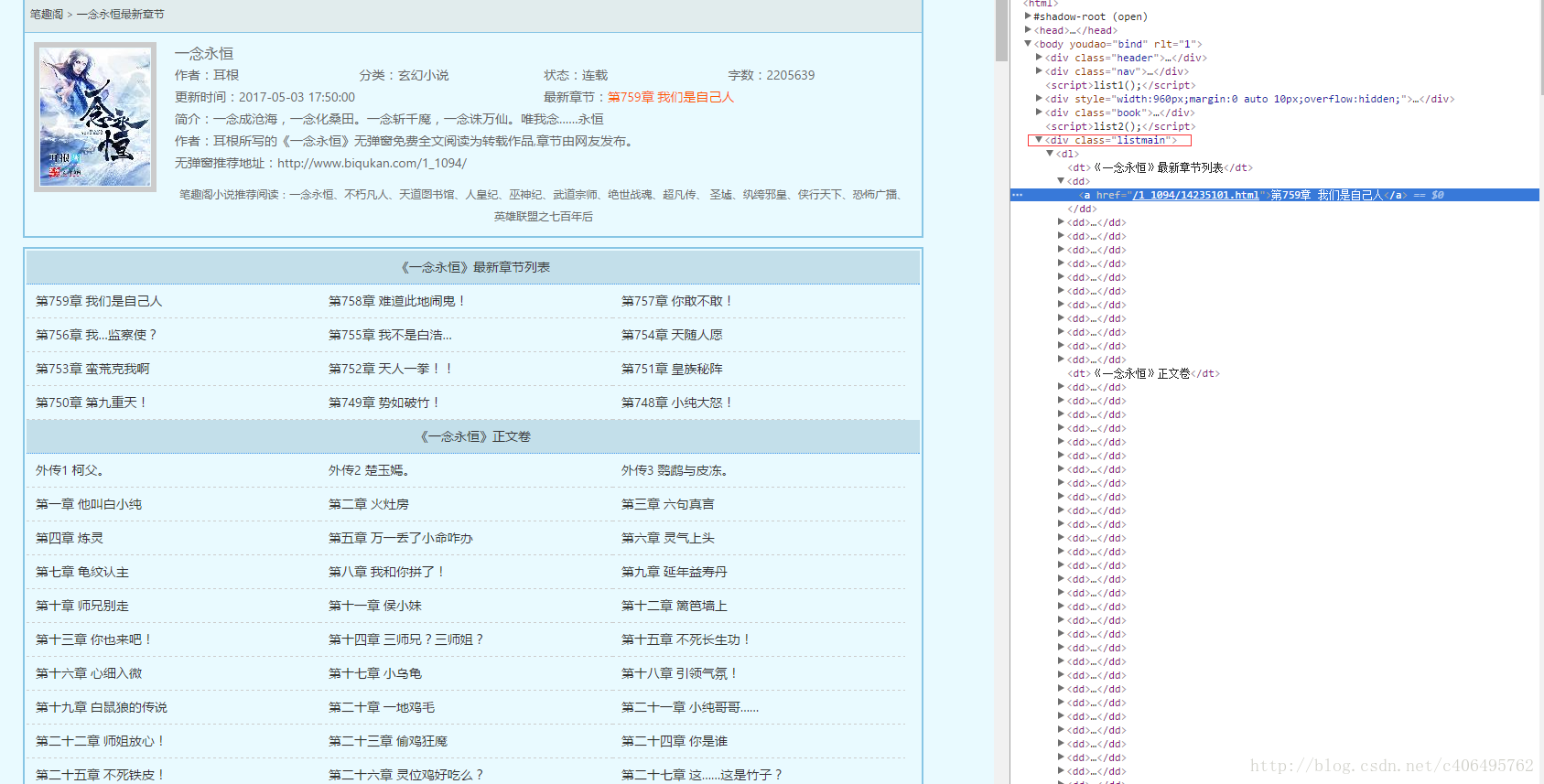

b)各章小说链接爬取

URL:http://www.biqukan.com/1_1094/

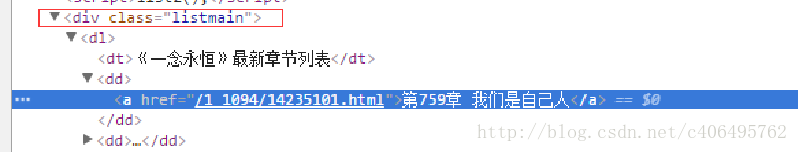

由审查结果可知,小说每章的链接放在了class为listmain的div标签中。链接具体位置放在html->body->div->dd->dl->a的href属性中,例如下图的第759章的href属性为/1_1094/14235101.html,那么该章节的地址为:http://www.biqukan.com/1_1094/14235101.html
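A sketch of walking that structure offline. It assumes a made-up snippet in which a `<dt>` heading introduces the main-text volume, as the crawling code below expects, with chapter names chosen for illustration. It uses `urllib.parse.urljoin` instead of string concatenation to turn each relative href into a full address.

```python
from urllib.parse import urljoin

from bs4 import BeautifulSoup

BASE_URL = 'http://www.biqukan.com/1_1094/'

# Hypothetical table-of-contents markup: a "latest chapters" list, then the main volume
toc = '''
<div class="listmain"><dl>
<dt>《一念永恒》最新章节列表</dt>
<dd><a href="/1_1094/14235101.html">第759章</a></dd>
<dt>《一念永恒》正文卷</dt>
<dd><a href="/1_1094/5403177.html">第一章</a></dd>
<dd><a href="/1_1094/14235101.html">第759章</a></dd>
</dl></div>
'''

soup = BeautifulSoup(toc, 'html.parser')
dl = soup.find('div', class_='listmain').dl

chapters = []
in_main_volume = False
for child in dl.find_all(['dt', 'dd']):
    if child.name == 'dt':
        # Collect only the entries under the main-text volume heading
        in_main_volume = child.get_text() == '《一念永恒》正文卷'
    elif in_main_volume and child.a is not None:
        chapters.append((child.a.get_text(), urljoin(BASE_URL, child.a['href'])))

for name, url in chapters:
    print(name, ':', url)
```

Iterating over `find_all(['dt', 'dd'])` skips the '\n' text nodes automatically, so no separate newline filter is needed.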

Close-up:

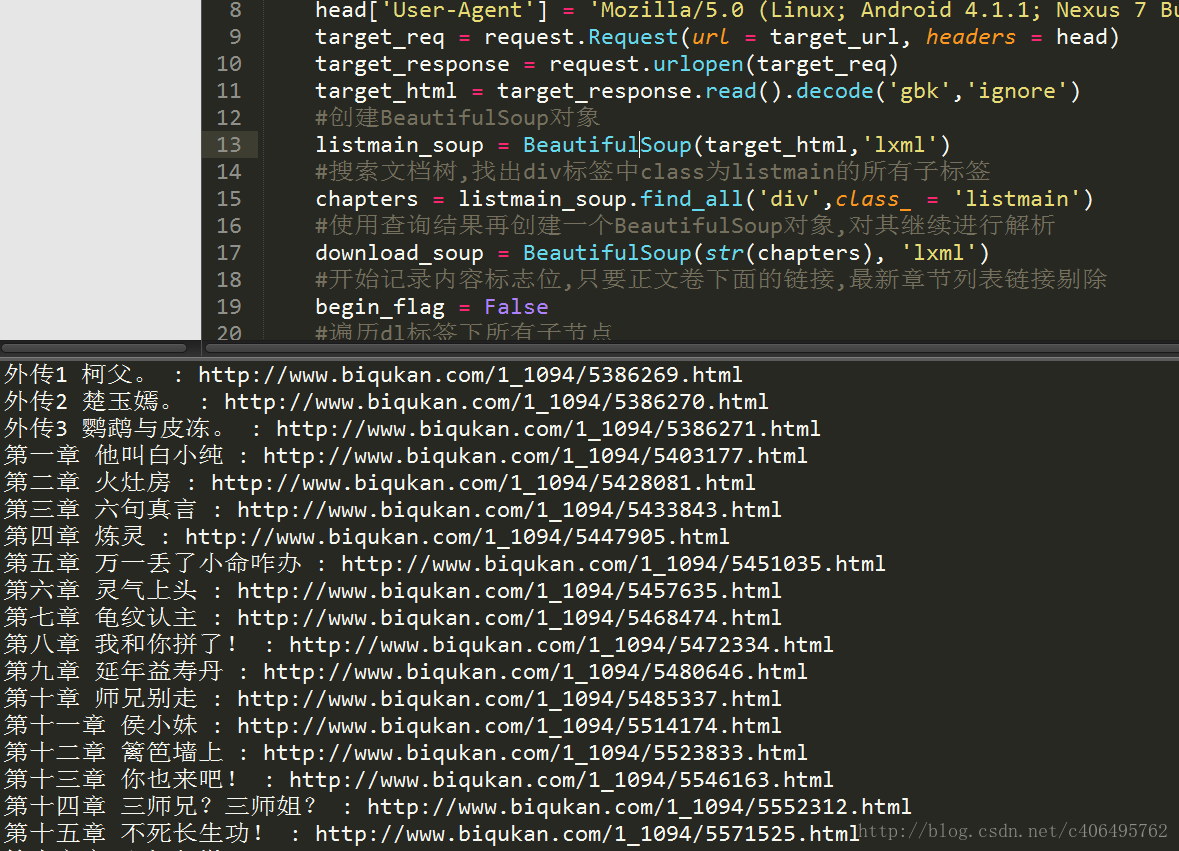

So we can get the addresses of all main-text chapters as follows:

```python
# -*- coding:UTF-8 -*-
from urllib import request

from bs4 import BeautifulSoup

if __name__ == "__main__":
    target_url = 'http://www.biqukan.com/1_1094/'
    head = {}
    head['User-Agent'] = 'Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19'
    target_req = request.Request(url=target_url, headers=head)
    target_response = request.urlopen(target_req)
    target_html = target_response.read().decode('gbk', 'ignore')
    # Create the BeautifulSoup object
    listmain_soup = BeautifulSoup(target_html, 'lxml')
    # Search the tree for div tags whose class is listmain
    chapters = listmain_soup.find_all('div', class_='listmain')
    # Build a second BeautifulSoup object from the result and keep parsing it
    download_soup = BeautifulSoup(str(chapters), 'lxml')
    # Flag: keep only links under the main-text volume, skipping the "latest chapters" list
    begin_flag = False
    # Walk all children of the dl tag
    for child in download_soup.dl.children:
        # Skip newline text nodes
        if child != '\n':
            # Found the 《一念永恒》 main-text volume heading: start collecting
            if child.string == "《一念永恒》正文卷":
                begin_flag = True
            # Collect the chapter link
            if begin_flag and child.a is not None:
                download_url = "http://www.biqukan.com" + child.a.get('href')
                download_name = child.string
                print(download_name + " : " + download_url)
```

Output:

c) Crawling all chapters and saving them to a file

Combining the code above with some extra processing gives:

```python
# -*- coding:UTF-8 -*-
import sys
from urllib import request

from bs4 import BeautifulSoup

if __name__ == "__main__":
    # Table-of-contents URL of 《一念永恒》
    target_url = 'http://www.biqukan.com/1_1094/'
    # User-Agent
    head = {}
    head['User-Agent'] = 'Mozilla/5.0 (Linux; Android 4.1.1; Nexus 7 Build/JRO03D) AppleWebKit/535.19 (KHTML, like Gecko) Chrome/18.0.1025.166 Safari/535.19'
    target_req = request.Request(url=target_url, headers=head)
    target_response = request.urlopen(target_req)
    target_html = target_response.read().decode('gbk', 'ignore')
    # Create the BeautifulSoup object
    listmain_soup = BeautifulSoup(target_html, 'lxml')
    # Search the tree for div tags whose class is listmain
    chapters = listmain_soup.find_all('div', class_='listmain')
    # Build a second BeautifulSoup object from the result and keep parsing it
    download_soup = BeautifulSoup(str(chapters), 'lxml')
    # Chapter count: dl's children alternate with '\n' nodes;
    # the 8 is a site-specific offset for entries that are not main-text chapters
    numbers = (len(download_soup.dl.contents) - 1) / 2 - 8
    index = 1
    # Flag: keep only links under the main-text volume, skipping the "latest chapters" list
    begin_flag = False
    # Create the txt file; "with" closes it even if a request fails
    with open('一念永恒.txt', 'w', encoding='utf-8') as file:
        # Walk all children of the dl tag
        for child in download_soup.dl.children:
            # Skip newline text nodes
            if child != '\n':
                # Found the 《一念永恒》 main-text volume heading: start collecting
                if child.string == "《一念永恒》正文卷":
                    begin_flag = True
                # Follow each chapter link and download its content
                if begin_flag and child.a is not None:
                    download_url = "http://www.biqukan.com" + child.a.get('href')
                    download_req = request.Request(url=download_url, headers=head)
                    download_response = request.urlopen(download_req)
                    download_html = download_response.read().decode('gbk', 'ignore')
                    download_name = child.string
                    soup_texts = BeautifulSoup(download_html, 'lxml')
                    texts = soup_texts.find_all(id='content', class_='showtxt')
                    soup_text = BeautifulSoup(str(texts), 'lxml')
                    write_flag = True
                    file.write(download_name + '\n\n')
                    # Write the chapter text to the file character by character
                    for each in soup_text.div.text.replace('\xa0', ''):
                        # Stop at the first 'h' (presumably where a trailing "http..." link begins)
                        if each == 'h':
                            write_flag = False
                        # Skip spaces
                        if write_flag and each != ' ':
                            file.write(each)
                        # After each '\r', also write a newline
                        if write_flag and each == '\r':
                            file.write('\n')
                    file.write('\n\n')
                    # Print download progress as a percentage
                    sys.stdout.write("Downloaded: %.3f%%" % (100 * index / numbers) + '\r')
                    sys.stdout.flush()
                    index += 1
```

The code is a bit rough and not very efficient, and there is plenty of room for improvement. Here it is running:

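The final txt file is shown below:

The generated txt file can be copied straight to a phone and read there, since phone reading apps can handle txt files laid out this way.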
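One easy improvement to the rough code above is to stop a single failed request from killing the whole download. Below is a sketch of a hypothetical helper, `fetch_html` (not part of the original script), that wraps the same `urllib.request` calls with a timeout and a few retries. The retry count, delay, and timeout are illustrative defaults, not values taken from the site.

```python
import time
from urllib import request


def fetch_html(url, headers=None, retries=3, delay=1.0, encoding='gbk'):
    """Download url and decode it, retrying on network errors (hypothetical helper)."""
    for attempt in range(1, retries + 1):
        try:
            req = request.Request(url=url, headers=headers or {})
            with request.urlopen(req, timeout=10) as response:
                return response.read().decode(encoding, 'ignore')
        except OSError:
            # URLError, HTTPError and socket timeouts are all OSError subclasses
            if attempt == retries:
                raise
            # Wait a little longer after each failure before retrying
            time.sleep(delay * attempt)
```

In the full script, `download_html = fetch_html(download_url, headers=head)` could replace the three request lines inside the loop. The pause between retries also keeps the crawler gentler on the site.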

PS: If you found this article helpful, feel free to follow, comment, and like. Thanks!

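Reference:

URL:http://cuiqingcai.com/1319.html

Update, May 6, 2017:

The code has been changed: it now handles faulty chapters and strips out the parts that aren't main text. It supports downloading most novels on 《笔趣看》.

View the code:

GitHub code link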