饭店来客数据

CSV数据源:链接:https://pan.baidu.com/s/1mLZBNv1SszQEnRoBGOYX7w 密码:mmrf

import pandas as pd

air_visit = pd.read_csv('air_visit_data.csv')

air_visit.head()

air_visit.index = pd.to_datetime(air_visit['visit_date'])

air_visit.head()

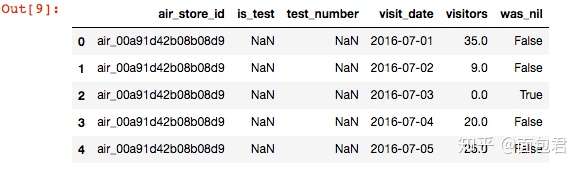

按天来算

air_visit = air_visit.groupby('air_store_id').apply(lambda g: g['visitors'].resample('1d').sum()).reset_index()

air_visit.head()

缺失值填0

air_visit['visit_date'] = air_visit['visit_date'].dt.strftime('%Y-%m-%d')

air_visit['was_nil'] = air_visit['visitors'].isnull()

air_visit['visitors'].fillna(0, inplace=True)

air_visit.head()

日历数据

date_info = pd.read_csv('date_info.csv')

date_info.head()

shift()操作对数据进行移动,可以观察前一天和后天是不是节假日。

date_info.rename(columns={'holiday_flg': 'is_holiday', 'calendar_date': 'visit_date'}, inplace=True)

date_info['prev_day_is_holiday'] = date_info['is_holiday'].shift().fillna(0)

date_info['next_day_is_holiday'] = date_info['is_holiday'].shift(-1).fillna(0)

date_info.head()

地区数据

air_store_info = pd.read_csv('air_store_info.csv')

air_store_info.head()

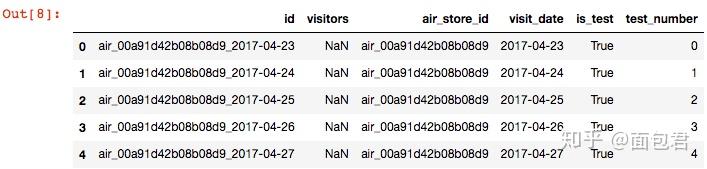

测试集

import numpy as np

submission = pd.read_csv('sample_submission.csv')

submission['air_store_id'] = submission['id'].str.slice(0, 20)

submission['visit_date'] = submission['id'].str.slice(21)

submission['is_test'] = True

submission['visitors'] = np.nan

submission['test_number'] = range(len(submission))

submission.head()

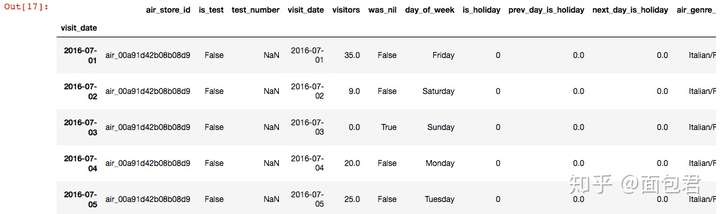

所有数据信息汇总

data = pd.concat((air_visit, submission.drop('id', axis='columns')))

data.head()

data['is_test'].fillna(False, inplace=True)

data = pd.merge(left=data, right=date_info, on='visit_date', how='left')

data = pd.merge(left=data, right=air_store_info, on='air_store_id', how='left')

data['visitors'] = data['visitors'].astype(float)

data.head()

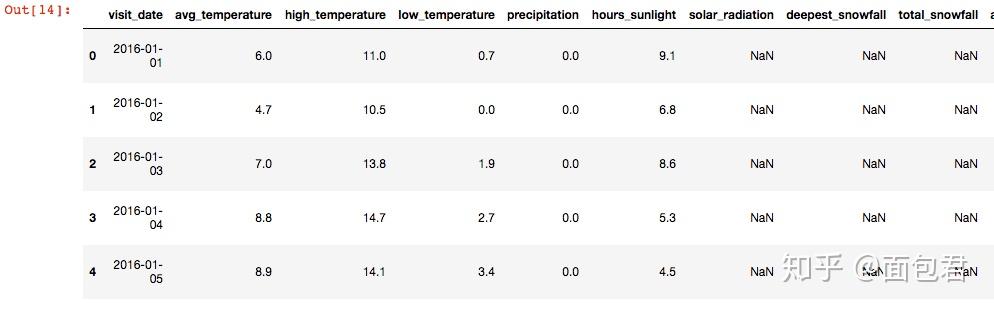

拿到天气数据

import glob

weather_dfs = []

for path in glob.glob('./1-1-16_5-31-17_Weather/*.csv'):

weather_df = pd.read_csv(path)

weather_df['station_id'] = path.split('\\')[-1].rstrip('.csv')

weather_dfs.append(weather_df)

weather = pd.concat(weather_dfs, axis='rows')

weather.rename(columns={'calendar_date': 'visit_date'}, inplace=True)

weather.head()

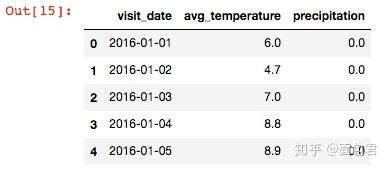

用各个小地方数据求出平均气温

means = weather.groupby('visit_date')[['avg_temperature', 'precipitation']].mean().reset_index()

means.rename(columns={'avg_temperature': 'global_avg_temperature', 'precipitation': 'global_precipitation'}, inplace=True)

weather = pd.merge(left=weather, right=means, on='visit_date', how='left')

weather['avg_temperature'].fillna(weather['global_avg_temperature'], inplace=True)

weather['precipitation'].fillna(weather['global_precipitation'], inplace=True)

weather[['visit_date', 'avg_temperature', 'precipitation']].head()

信息数据

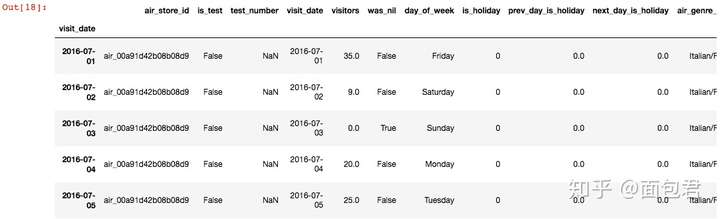

data['visit_date'] = pd.to_datetime(data['visit_date'])

data.index = data['visit_date']

data.sort_values(['air_store_id', 'visit_date'], inplace=True)

data.head()

异常点问题,数据中存在部分异常点,以正太分布为出发点,认为95%的是正常的,所以选择了1.96这个值。对异常点来规范,让特别大的点等于正常中最大的。

def find_outliers(series):

return (series - series.mean()) > 1.96 * series.std()

def cap_values(series):

outliers = find_outliers(series)

max_val = series[~outliers].max()

series[outliers] = max_val

return series

stores = data.groupby('air_store_id')

data['is_outlier'] = stores.apply(lambda g: find_outliers(g['visitors'])).values

data['visitors_capped'] = stores.apply(lambda g: cap_values(g['visitors'])).values

data['visitors_capped_log1p'] = np.log1p(data['visitors_capped'])

data.head()

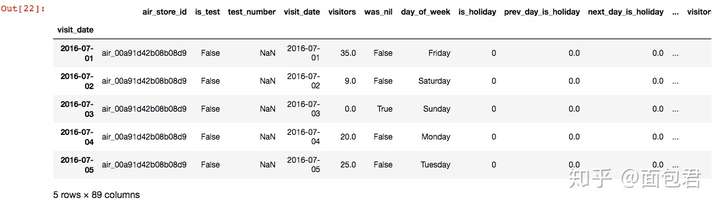

日期特征

data['is_weekend'] = data['day_of_week'].isin(['Saturday', 'Sunday']).astype(int)

data['day_of_month'] = data['visit_date'].dt.day

data.head()

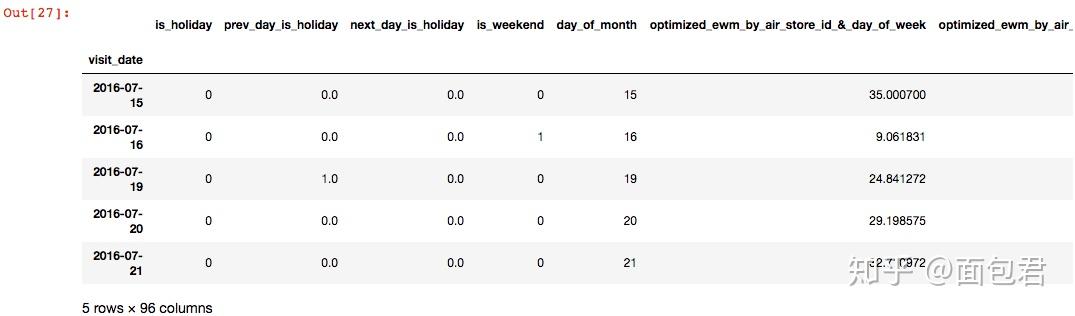

指数加权移动平均(Exponential Weighted Moving Average),反应时间序列变换趋势,需要我们给定alpha值,这里我们来优化求一个最合适的。

from scipy import optimize

def calc_shifted_ewm(series, alpha, adjust=True):

return series.shift().ewm(alpha=alpha, adjust=adjust).mean()

def find_best_signal(series, adjust=False, eps=10e-5):

def f(alpha):

shifted_ewm = calc_shifted_ewm(series=series, alpha=min(max(alpha, 0), 1), adjust=adjust)

corr = np.mean(np.power(series - shifted_ewm, 2))

return corr

res = optimize.differential_evolution(func=f, bounds=[(0 + eps, 1 - eps)])

return calc_shifted_ewm(series=series, alpha=res['x'][0], adjust=adjust)

roll = data.groupby(['air_store_id', 'day_of_week']).apply(lambda g: find_best_signal(g['visitors_capped']))

data['optimized_ewm_by_air_store_id_&_day_of_week'] = roll.sort_index(level=['air_store_id', 'visit_date']).values

roll = data.groupby(['air_store_id', 'is_weekend']).apply(lambda g: find_best_signal(g['visitors_capped']))

data['optimized_ewm_by_air_store_id_&_is_weekend'] = roll.sort_index(level=['air_store_id', 'visit_date']).values

roll = data.groupby(['air_store_id', 'day_of_week']).apply(lambda g: find_best_signal(g['visitors_capped_log1p']))

data['optimized_ewm_log1p_by_air_store_id_&_day_of_week'] = roll.sort_index(level=['air_store_id', 'visit_date']).values

roll = data.groupby(['air_store_id', 'is_weekend']).apply(lambda g: find_best_signal(g['visitors_capped_log1p']))

data['optimized_ewm_log1p_by_air_store_id_&_is_weekend'] = roll.sort_index(level=['air_store_id', 'visit_date']).values

data.head()

尽可能多的提取时间序列信息

def extract_precedent_statistics(df, on, group_by):

df.sort_values(group_by + ['visit_date'], inplace=True)

groups = df.groupby(group_by, sort=False)

stats = {

'mean': [],

'median': [],

'std': [],

'count': [],

'max': [],

'min': []

}

exp_alphas = [0.1, 0.25, 0.3, 0.5, 0.75]

stats.update({'exp_{}_mean'.format(alpha): [] for alpha in exp_alphas})

for _, group in groups:

shift = group[on].shift()

roll = shift.rolling(window=len(group), min_periods=1)

stats['mean'].extend(roll.mean())

stats['median'].extend(roll.median())

stats['std'].extend(roll.std())

stats['count'].extend(roll.count())

stats['max'].extend(roll.max())

stats['min'].extend(roll.min())

for alpha in exp_alphas:

exp = shift.ewm(alpha=alpha, adjust=False)

stats['exp_{}_mean'.format(alpha)].extend(exp.mean())

suffix = '_&_'.join(group_by)

for stat_name, values in stats.items():

df['{}_{}_by_{}'.format(on, stat_name, suffix)] = values

extract_precedent_statistics(

df=data,

on='visitors_capped',

group_by=['air_store_id', 'day_of_week']

)

extract_precedent_statistics(

df=data,

on='visitors_capped',

group_by=['air_store_id', 'is_weekend']

)

extract_precedent_statistics(

df=data,

on='visitors_capped',

group_by=['air_store_id']

)

extract_precedent_statistics(

df=data,

on='visitors_capped_log1p',

group_by=['air_store_id', 'day_of_week']

)

extract_precedent_statistics(

df=data,

on='visitors_capped_log1p',

group_by=['air_store_id', 'is_weekend']

)

extract_precedent_statistics(

df=data,

on='visitors_capped_log1p',

group_by=['air_store_id']

)

data.sort_values(['air_store_id', 'visit_date']).head()

data = pd.get_dummies(data, columns=['day_of_week', 'air_genre_name'])

data.head()

数据集划分

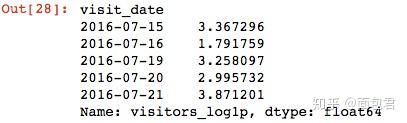

data['visitors_log1p'] = np.log1p(data['visitors'])

train = data[(data['is_test'] == False) & (data['is_outlier'] == False) & (data['was_nil'] == False)]

test = data[data['is_test']].sort_values('test_number')

to_drop = ['air_store_id', 'is_test', 'test_number', 'visit_date', 'was_nil',

'is_outlier', 'visitors_capped', 'visitors',

'air_area_name', 'latitude', 'longitude', 'visitors_capped_log1p']

train = train.drop(to_drop, axis='columns')

train = train.dropna()

test = test.drop(to_drop, axis='columns')

X_train = train.drop('visitors_log1p', axis='columns')

X_test = test.drop('visitors_log1p', axis='columns')

y_train = train['visitors_log1p']

X_train.head()

y_train.head()

看一看是不是哪还有问题

assert X_train.isnull().sum().sum() == 0

assert y_train.isnull().sum() == 0

assert len(X_train) == len(y_train)

assert X_test.isnull().sum().sum() == 0

assert len(X_test) == 32019

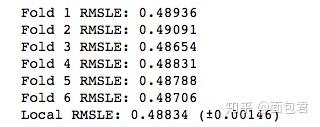

lightgbm建模

import lightgbm as lgbm

from sklearn import metrics

from sklearn import model_selection

np.random.seed(42)

model = lgbm.LGBMRegressor(

objective='regression',

max_depth=5,

num_leaves=25,

learning_rate=0.007,

n_estimators=1000,

min_child_samples=80,

subsample=0.8,

colsample_bytree=1,

reg_alpha=0,

reg_lambda=0,

random_state=np.random.randint(10e6)

)

n_splits = 6

cv = model_selection.KFold(n_splits=n_splits, shuffle=True, random_state=42)

val_scores = [0] * n_splits

sub = submission['id'].to_frame()

sub['visitors'] = 0

feature_importances = pd.DataFrame(index=X_train.columns)

for i, (fit_idx, val_idx) in enumerate(cv.split(X_train, y_train)):

X_fit = X_train.iloc[fit_idx]

y_fit = y_train.iloc[fit_idx]

X_val = X_train.iloc[val_idx]

y_val = y_train.iloc[val_idx]

model.fit(

X_fit,

y_fit,

eval_set=[(X_fit, y_fit), (X_val, y_val)],

eval_names=('fit', 'val'),

eval_metric='l2',

early_stopping_rounds=200,

feature_name=X_fit.columns.tolist(),

verbose=False

)

val_scores[i] = np.sqrt(model.best_score_['val']['l2'])

sub['visitors'] += model.predict(X_test, num_iteration=model.best_iteration_)

feature_importances[i] = model.feature_importances_

print('Fold {} RMSLE: {:.5f}'.format(i+1, val_scores[i]))

sub['visitors'] /= n_splits

sub['visitors'] = np.expm1(sub['visitors'])

val_mean = np.mean(val_scores)

val_std = np.std(val_scores)

print('Local RMSLE: {:.5f} (±{:.5f})'.format(val_mean, val_std))

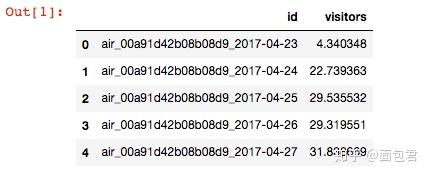

输出结果

sub.to_csv('result.csv', index=False)

import pandas as pd

df = pd.read_csv('result.csv')

df.head()

—END—