- Bottlenecks of current AI:

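Looking at current AI, and especially at the booming field of Deep Learning, the most hyped star models are essentially MLPs, CNNs, and RNNs. These models lean toward representation learning of the real world, and they have two major problems: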

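- Combinatorial Generalization (CG): the ability to construct new inferences and predictions from known building blocks, i.e., the fundamental difficulty of learning unlimited possibilities from a finite set of samples. The questions are how to achieve 'infinite use of finite means', and how to solve the over-fitting problem at its root;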

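- Relational Reasoning: learning the relations and connections between entities, rather than merely extracting features from an input in order to describe it with another representation.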

- Why these bottlenecks arise:

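Start with the data that current DL models can handle. Take CNNs as an example: their input is usually an image, and an image (like a sentence in NLP processed by an RNN) lives in a Euclidean domain: it has a grid structure and is regular and symmetric. This is why the regular, symmetric filters of a CNN work so well on it. However, this kind of data is 'monotonous': it cannot describe the varied relations between entities. This fundamentally limits how well such models can describe complex problems.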

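The other form of data in the world lives in a Non-Euclidean Domain. Examples include social networks, protein structures, and traffic networks, all of which exhibit a network structure in which individuals are connected to one another. Such data has no regular internal grid; instead it exhibits a varied, dynamic topology. This structure represents the relations between individuals well, which makes it well suited to relational reasoning.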
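To make the Euclidean vs. Non-Euclidean contrast concrete, here is a small sketch that treats an image as a graph of pixels and compares it with an irregular graph. The 4-neighbour grid is a standard way to view an image as a graph; the "social" edge list is made-up toy data. The point is only that every interior pixel has the same neighbourhood shape, while neighbourhood sizes in the irregular graph vary from node to node, so no single fixed-shape filter fits it.

```python
from collections import Counter


def grid_edges(height, width):
    """Undirected 4-neighbour edges of an image grid; nodes are (row, col) pixels."""
    edges = []
    for r in range(height):
        for c in range(width):
            if r + 1 < height:
                edges.append(((r, c), (r + 1, c)))
            if c + 1 < width:
                edges.append(((r, c), (r, c + 1)))
    return edges


def degrees(edges):
    """Count how many edges touch each node."""
    deg = Counter()
    for a, b in edges:
        deg[a] += 1
        deg[b] += 1
    return deg


# Euclidean: every interior pixel of a 5x5 image has exactly 4 neighbours.
grid_deg = degrees(grid_edges(5, 5))
interior = [d for (r, c), d in grid_deg.items() if 0 < r < 4 and 0 < c < 4]
print(set(interior))  # {4}

# Non-Euclidean: a toy (hypothetical) friendship graph with varying degrees.
social = [("ann", "bob"), ("ann", "cat"), ("ann", "dan"), ("bob", "cat"), ("dan", "eve")]
print(sorted(degrees(social).items()))
# [('ann', 3), ('bob', 2), ('cat', 2), ('dan', 2), ('eve', 1)]
```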

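Next, consider the structure of the learning model (network). A deep learning model (network) is usually built by connecting multiple deep learning building blocks in some way. For a CNN, the basic building blocks are Conv layers (plus pooling layers, etc.). A model's CG ability depends on two things: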

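- Structured representation & computation of the building blocks: by 'structured' I understand that the block itself has some internal topology, which makes it more manipulable and more learnable. A Conv filter is a square grid whose spatial structure is fixed; the only learnable quantities are its weights. Intuitively, evolving a fixed pedestrian bounding-box (BBox) model into a part-based pictorial structure model is an example of making a model structured and thereby obtaining a stronger RIB (defined in the next point). A pictorial structure model is modeled on springs: it consists of nodes (parts) and the edges connecting them. These edges are the best carriers of relations and the source of the model's configurability.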

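- Relational Inductive Bias (RIB) available in the model: an RIB can be understood as assumptions or known properties about the learning process, which impose constraints on relationships and interactions among entities in the learning process. In other words, an RIB is a known pattern or rule built into the model's blocks or structure, so that the learning model is not completely random or unstructured but involves a degree of human design. For example, in Bayesian learning the prior is an inductive bias; in a CNN's conv layer, locality is an RIB; in an RNN, the sequentiality (Markovian property) between cells is an RIB; and in an MLP, adding a regularization term is also a form of inductive bias.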
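The pictorial structure example in the first point above can be made concrete with a toy energy function: one appearance term per part (node) plus one spring term per connected pair of parts (edge). This is a minimal sketch, assuming the classic quadratic spring penalty; the part names, rest offsets, stiffness values, and fixed per-part appearance costs are all hypothetical (a real model would evaluate a filter response at each candidate location).

```python
def spring_cost(loc_i, loc_j, rest_offset, stiffness):
    """Quadratic deformation cost of the spring (edge) between parts i and j."""
    dx = (loc_j[0] - loc_i[0]) - rest_offset[0]
    dy = (loc_j[1] - loc_i[1]) - rest_offset[1]
    return stiffness * (dx * dx + dy * dy)


def configuration_energy(locations, match_cost, springs):
    """Sum of per-part appearance costs plus per-edge spring costs (lower is better)."""
    unary = sum(match_cost[part] for part in locations)
    pairwise = sum(
        spring_cost(locations[i], locations[j], offset, k)
        for (i, j), (offset, k) in springs.items()
    )
    return unary + pairwise


# Hypothetical 3-part pedestrian model: head - torso - legs, (x, y) coordinates.
springs = {
    ("head", "torso"): ((0, 2), 1.0),  # torso expected 2 units below the head
    ("torso", "legs"): ((0, 3), 1.0),  # legs expected 3 units below the torso
}
match_cost = {"head": 0.5, "torso": 0.5, "legs": 0.5}

upright = {"head": (0, 0), "torso": (0, 2), "legs": (0, 5)}
shifted = {"head": (0, 0), "torso": (0, 2), "legs": (1, 5)}
print(configuration_energy(upright, match_cost, springs))  # 1.5
print(configuration_energy(shifted, match_cost, springs))  # 2.5
```

The edges (springs) are where the relational assumption lives: the model "knows" in advance which parts constrain each other and how.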

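Learning is the process of repeatedly adjusting the values of the learnable parameters, gradually narrowing the solution space until the optimal solution is found. An RIB narrows the solution space directly, by adding known constraints that humans can control.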
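One rough way to see how an RIB shrinks the solution space is to count free parameters. The sketch below compares a fully connected layer with a 1-D conv layer mapping 1000 inputs to 1000 outputs, and shows that the conv layer is just a dense layer whose weight matrix is forced into a banded (Toeplitz) form, so its hypothesis space is a subset of the dense layer's. Parameter count is only a crude proxy for the size of the solution space; the function names are illustrative.

```python
def dense_params(n_in, n_out):
    """Fully connected layer: every output may depend on every input, plus biases."""
    return n_in * n_out + n_out


def conv1d_params(kernel_size, in_channels=1, out_channels=1):
    """1-D conv layer: locality (kernel_size taps) and weights shared across positions."""
    return kernel_size * in_channels * out_channels + out_channels


def conv_as_matrix(kernel, n):
    """The n x n banded matrix applied by a zero-padded 'same' 1-D correlation.

    Assumes an odd-length kernel. A conv layer is a dense layer constrained to
    this form, which is exactly the locality + weight-sharing bias.
    """
    half = len(kernel) // 2
    return [
        [kernel[j - i + half] if 0 <= j - i + half < len(kernel) else 0 for j in range(n)]
        for i in range(n)
    ]


n = 1000
print(dense_params(n, n))  # 1001000 free parameters
print(conv1d_params(3))    # 4 free parameters
for row in conv_as_matrix([1, 2, 3], 4):
    print(row)
```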

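Therefore, a model's RIBs directly determine its learning ability and its generalization ability: the more regular the model itself is, the more stable it is with respect to variations in the training data.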

Hence, the key to breaking through the bottleneck of deep learning is to design models (blocks) that have structured representations and strong internal RIBs, and that can adapt generally and uniformly to both Euclidean and Non-Euclidean data.

- Graph Networks:

- Definition:

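A graph network is a network built by connecting many graph network (GN) blocks. The GN block is the core of everything, and it is the concentrated embodiment of the structured representations and strong RIBs described above. The data flowing through a GNN are usually graphs, as opposed to the vectors and tensors that flow through CNNs and RNNs.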

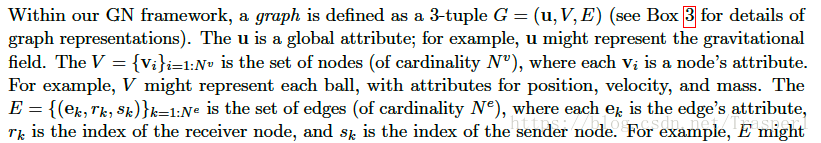

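As the name suggests, a GN is designed around graphs, and a graph consists of nodes, edges, and a global attribute: each node, each edge, and the graph as a whole carries its own attributes, which can be vectors or tensors. A GN block is defined as follows: input a graph, do some computations on it, then output a graph with the same topology.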
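A minimal sketch of such a graph as a data structure, assuming the usual GN formulation G = (u, V, E) in which each edge stores the indices of its sender and receiver nodes. The class and field names are my own, chosen for illustration, and attributes are plain lists of floats instead of tensors.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Graph:
    """A GN-style graph G = (u, V, E); attributes are plain lists of floats."""

    globals_: List[float]     # u: the graph-level (global) attribute
    nodes: List[List[float]]  # V: one attribute vector per node
    edges: List[List[float]]  # E: one attribute vector per edge
    senders: List[int]        # s_k: index of the node edge k starts from
    receivers: List[int]      # r_k: index of the node edge k points to

    def __post_init__(self):
        # Every edge needs exactly one sender and one receiver ...
        if not (len(self.edges) == len(self.senders) == len(self.receivers)):
            raise ValueError("edges, senders and receivers must have the same length")
        # ... and both must refer to existing nodes.
        n = len(self.nodes)
        if any(not 0 <= i < n for i in self.senders + self.receivers):
            raise ValueError("sender/receiver index out of range")


# A directed path 0 -> 1 -> 2 with 1-D attributes everywhere.
example = Graph(
    globals_=[0.0],
    nodes=[[1.0], [2.0], [3.0]],
    edges=[[0.5], [0.5]],
    senders=[0, 1],
    receivers=[1, 2],
)
```

Storing topology as sender/receiver index lists (rather than a fixed grid) is what lets the same structure hold any number of nodes and edges.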

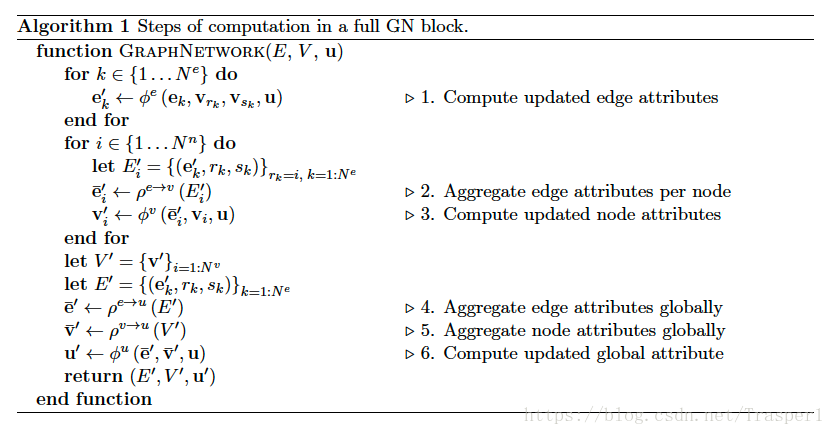

Each pass through a GN block is governed by 3 update functions and 3 aggregate functions (by analogy, a CNN's update function is simply a weighted sum).
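For reference, in the notation of the original graph networks paper (Battaglia et al., 2018), a GN block maps a graph $G = (\mathbf{u}, V, E)$ with $E = \{(\mathbf{e}_k, r_k, s_k)\}$ (edge attribute, receiver index, sender index) to $G' = (\mathbf{u}', V', E')$. The three update functions $\phi$ and three aggregate functions $\rho$ are:

$$
\begin{aligned}
\mathbf{e}'_k &= \phi^e\left(\mathbf{e}_k, \mathbf{v}_{r_k}, \mathbf{v}_{s_k}, \mathbf{u}\right) & \bar{\mathbf{e}}'_i &= \rho^{e \to v}\left(E'_i\right) \\
\mathbf{v}'_i &= \phi^v\left(\bar{\mathbf{e}}'_i, \mathbf{v}_i, \mathbf{u}\right) & \bar{\mathbf{e}}' &= \rho^{e \to u}\left(E'\right) \\
\mathbf{u}' &= \phi^u\left(\bar{\mathbf{e}}', \bar{\mathbf{v}}', \mathbf{u}\right) & \bar{\mathbf{v}}' &= \rho^{v \to u}\left(V'\right)
\end{aligned}
$$

where $E'_i = \{(\mathbf{e}'_k, r_k, s_k)\}_{r_k = i}$ is the set of updated edges pointing into node $i$, $V' = \{\mathbf{v}'_i\}$, and $E' = \bigcup_i E'_i$ is the set of all updated edges.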

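A GN's update and aggregate functions are reused across the whole graph, much like a conv filter is reused across the whole image. This gives the model a degree of locality and replicability, and thus a degree of CG, since CG can be understood as the ability to replicate and reconfigure components, which reflects the model's adaptability to different data. The update functions are typically CNNs, RNNs, or MLPs, while the aggregate functions are sum, mean, max, or min. In other words, the learnable part of a GNN is its update functions.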
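The aggregate functions listed above have no learnable parameters and accept any number of inputs, which is what lets the same block handle nodes with different numbers of neighbours. A minimal sketch of elementwise sum/mean/max/min over a set of vectors; returning zeros for an empty set (a node with no incoming edges) is a convention I assume here, not a rule of the GN framework.

```python
from statistics import mean


def aggregate(name, vectors, dim):
    """Elementwise sum/mean/max/min over a set of vectors of length `dim`.

    An empty set (e.g. a node with no incoming edges) returns zeros, a common
    convention rather than a rule.
    """
    if not vectors:
        return [0.0] * dim
    reducers = {"sum": sum, "mean": mean, "max": max, "min": min}
    reduce = reducers[name]
    return [float(reduce(column)) for column in zip(*vectors)]


messages = [[1.0, 5.0], [3.0, -1.0], [2.0, 2.0]]
for name in ("sum", "mean", "max", "min"):
    print(name, aggregate(name, messages, dim=2))
# sum [6.0, 6.0] / mean [2.0, 2.0] / max [3.0, 5.0] / min [1.0, -1.0]
```

Listing the messages in a different order gives the same results, which is the property the ordering-invariance point below relies on.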

Each GN update follows a fixed order: first the edges, then the nodes, and finally the global attributes, with an aggregation step at each transition.
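The edge → node → global order can be sketched as a single function that takes the six functions as plug-ins. This is a minimal illustration, assuming scalar attributes for brevity and hand-written stand-ins for the update functions (in a real GN they would be learned MLPs or similar); the inner loop over edges is O(|V|·|E|) and is written for clarity, not speed.

```python
def gn_block(u, V, E, senders, receivers, phi_e, phi_v, phi_u, rho_ev, rho_eu, rho_vu):
    """One GN block pass (edges -> nodes -> global); topology is returned unchanged."""
    # 1. Edge update: every edge sees its attribute, receiver, sender and the global.
    E_new = [phi_e(E[k], V[receivers[k]], V[senders[k]], u) for k in range(len(E))]

    # 2. Node update: aggregate the updated edges pointing INTO node i, then update it.
    V_new = []
    for i in range(len(V)):
        incoming = [E_new[k] for k in range(len(E_new)) if receivers[k] == i]
        V_new.append(phi_v(rho_ev(incoming), V[i], u))

    # 3. Global update: aggregate all updated edges and all updated nodes.
    u_new = phi_u(rho_eu(E_new), rho_vu(V_new), u)
    return u_new, V_new, E_new, senders, receivers


# Toy, hand-written stand-ins for what would normally be learned functions.
def phi_e(e, v_r, v_s, u):
    return e + v_s  # message = edge attribute + sender state


def phi_v(e_bar, v, u):
    return v + e_bar  # node adds up its incoming messages


def phi_u(e_bar, v_bar, u):
    return u + e_bar + v_bar


u, V, E = 0.0, [1.0, 2.0, 3.0], [0.5, 0.5]
out = gn_block(u, V, E, [0, 1], [1, 2], phi_e, phi_v, phi_u, sum, sum, sum)
print(out)  # (14.0, [1.0, 3.5, 5.5], [1.5, 2.5], [0, 1], [1, 2])
```

Here the built-in `sum` plays all three aggregation roles; swapping in mean or max, or different `phi_*` functions, reconfigures the block without touching its control flow.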

- Characteristics and design principles:

Flexibility; configurability; composable multi-block architecture.

The greatest strength of the GN block is its strong RIBs, and RIBs are what guarantee CG!

- Flexibility: GNs can support arbitrary relationships among entities, which means they can hold whatever structure the input graph presents;

- Ordering Invariance: entities (nodes) and their relations (edges) are represented as sets, and the aggregate functions are invariant to permutations, so a GN does not depend on the order in which its elements are listed (per-node and per-edge outputs are simply permuted along with the inputs);

- CG with high configurability: a GN's per-edge and per-node functions are reused across all edges and nodes. This "copy & paste" reuse supports a form of CG, because a GN block with its 3 update and 3 aggregate functions can be applied to an input graph with any number of nodes and edges and any topology.
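The reuse and ordering-invariance points above can be checked on a stripped-down example: one shared per-edge function, a sum aggregation per receiver, and a global sum readout. This sketch omits edge and global attributes, and `edge_fn` is a hypothetical stand-in for a learned function. The same code runs on a 3-node cycle and a 5-node path, and relabelling the nodes (plus reversing the edge list) permutes the node outputs while leaving the global readout unchanged.

```python
def edge_fn(v_sender, v_receiver):
    """Shared per-edge function (a hypothetical stand-in for a learned MLP)."""
    return v_sender * v_receiver


def node_update(nodes, senders, receivers):
    """Apply edge_fn on every edge, sum messages per receiver, add to node state."""
    agg = [0.0] * len(nodes)
    for s, r in zip(senders, receivers):
        agg[r] += edge_fn(nodes[s], nodes[r])
    return [v + a for v, a in zip(nodes, agg)]


def readout(nodes):
    """Global sum aggregation: does not depend on node order."""
    return sum(nodes)


def relabel(nodes, senders, receivers, perm):
    """Move old node i to new index perm[i] and list the edges in reverse order."""
    new_nodes = [0.0] * len(nodes)
    for old, new in enumerate(perm):
        new_nodes[new] = nodes[old]
    new_senders = [perm[s] for s in reversed(senders)]
    new_receivers = [perm[r] for r in reversed(receivers)]
    return new_nodes, new_senders, new_receivers


# The same functions run unchanged on a 3-node cycle ...
cycle = ([1.0, 2.0, 3.0], [0, 1, 2], [1, 2, 0])
# ... and on a 5-node path with a different topology.
path = ([1.0] * 5, [0, 1, 2, 3], [1, 2, 3, 4])

out_cycle = node_update(*cycle)
out_path = node_update(*path)

# Relabel the cycle's nodes and shuffle its edge order.
perm = [2, 0, 1]
out_perm = node_update(*relabel(*cycle, perm))
print(out_cycle, out_perm)  # [4.0, 4.0, 9.0] [4.0, 9.0, 4.0]
print(readout(out_cycle) == readout(out_perm))  # True
```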

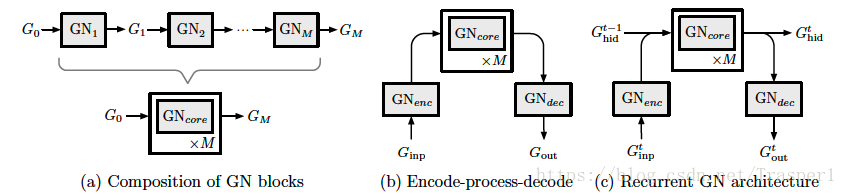

A GNN is built by connecting many GN blocks in some way. This multi-block connection (cascading) is similar to how Conv layers are composed in a CNN.
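Because a GN block outputs a graph with the same topology, blocks can be cascaded, just like conv layers. A minimal sketch of why this matters: if each block only passes information one hop along the edges, then stacking M blocks lets information travel M hops. The block here is a deliberately simplified, hypothetical node-only update (max over in-neighbours) and ignores the global attribute, which in a full GN can carry information across the whole graph in a single step.

```python
def propagate(nodes, senders, receivers):
    """One simplified block: each node keeps the max of itself and its in-neighbours."""
    new = list(nodes)
    for s, r in zip(senders, receivers):
        new[r] = max(new[r], nodes[s])  # read the OLD values: a synchronous update
    return new


def stack(nodes, senders, receivers, num_blocks):
    """Cascade blocks: the output graph of one block is the input of the next."""
    for _ in range(num_blocks):
        nodes = propagate(nodes, senders, receivers)
    return nodes


# Undirected path 0 - 1 - 2 - 3, stored as edges in both directions.
senders = [0, 1, 1, 2, 2, 3]
receivers = [1, 0, 2, 1, 3, 2]
signal = [1, 0, 0, 0]  # only node 0 starts "informed"
for m in range(4):
    print(m, stack(signal, senders, receivers, m))
# 0 [1, 0, 0, 0] / 1 [1, 1, 0, 0] / 2 [1, 1, 1, 0] / 3 [1, 1, 1, 1]
```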

GNN configurability:

- GN block configurable: update function & aggregate function

- GNN structure configurable: MPNN, NLNN
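In the GN framework, models such as MPNN and NLNN can be seen as particular choices of the block's functions. The sketch below keeps one node-update routine fixed and swaps only the edge-to-node aggregation: an MPNN-style sum of messages versus an NLNN-style attention-weighted average (softmax over scores). It is heavily simplified, assuming scalar node states, raw sender values as messages, and the product `v_receiver * v_sender` as the attention score; real MPNNs and NLNNs use learned message and embedding functions.

```python
import math


def sum_aggregate(v_receiver, sender_values):
    """MPNN-style: messages (here just the raw sender values) are summed."""
    return sum(sender_values)


def attention_aggregate(v_receiver, sender_values):
    """NLNN-style: softmax-weighted average, with scores v_receiver * v_sender."""
    if not sender_values:
        return 0.0
    scores = [v_receiver * v_s for v_s in sender_values]
    top = max(scores)  # subtract the max score for numerical stability
    weights = [math.exp(x - top) for x in scores]
    return sum(w * v_s for w, v_s in zip(weights, sender_values)) / sum(weights)


def node_step(nodes, senders, receivers, aggregate):
    """Same GN-style node update; only the plugged-in aggregation differs."""
    out = []
    for i, v in enumerate(nodes):
        incoming = [nodes[s] for s, r in zip(senders, receivers) if r == i]
        out.append(v + aggregate(v, incoming))
    return out


nodes, senders, receivers = [1.0, 2.0, 3.0], [0, 1], [2, 2]
print(node_step(nodes, senders, receivers, sum_aggregate))  # [1.0, 2.0, 6.0]
print(node_step(nodes, senders, receivers, attention_aggregate))
```

The attention weights sum to 1, so the NLNN-style aggregate always lies between the smallest and largest incoming value, whereas the sum grows with the number of neighbours.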

- Discussion

MLPs, CNNs, and RNNs all have RIBs, but they cannot handle more structured representations such as sets or graphs. GNs can build stronger RIBs into DL by performing computations over graph-structured data.

GNs naturally support CG because they do not perform computations strictly at the system level, but also apply shared computations across entities and across relations. This allows never-before-seen systems to be reasoned about, because they are built from familiar components.