python爬豆瓣影评&根据词频生成词云

通过爬取豆瓣上正在上映的电影影评信息,并根据评论词频生成词云。

一、需要的包

import warnings # 防止出现future warning

warnings.filterwarnings("ignore")

from urllib import request # 用于爬取网页

from bs4 import BeautifulSoup as bs # 用于解析网页

import re

import pandas as pd

import numpy as np

import jieba # 用于切词

from wordcloud import WordCloud # 用于生成词云

import matplotlib.pyplot as plt

import matplotlib二、获取电影列表

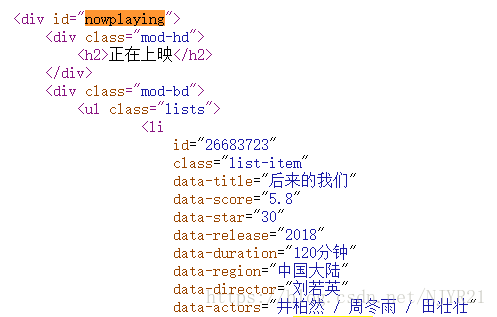

打开豆瓣上海(https://movie.douban.com/cinema/nowplaying/shanghai/),观察源代码内需要爬取内容的特征。

1、获取nowplaying电影,并将每一个电影的内容都存在list内。

'''get url'''

url = 'https://movie.douban.com/nowplaying/shanghai/'

resp = request.urlopen(url)

html_data = resp.read().decode('utf-8') # 防止乱码

soup = bs(html_data, 'html.parser') # 解析

nowplaying = soup.find_all('div', id='nowplaying') # 网页中id为nowplaying是现在正在上映的电影。

nowplaying_list = nowplaying[0].find_all('li', class_='list-item') # 寻找所有上映电影相关信息2、提取电影名称和id

'''get movie list'''

movie_list = [] # 获取电影id和电影名

for item in nowplaying_list:

movie_dic = {}

movie_dic['id'] = item['id']

movie_dic['name'] = item['data-title']

movie_list.append(movie_dic)当前nowplaying电影列表

[{'id': '26683723', 'name': '后来的我们'},

{'id': '26420932', 'name': '巴霍巴利王2:终结'},

{'id': '26774033', 'name': '幕后玩家'},

{'id': '26430636', 'name': '狂暴巨兽'},

{'id': '4920389', 'name': '头号玩家'},

{'id': '26935777', 'name': '玛丽与魔女之花'},

{'id': '26924141', 'name': '低压槽:欲望之城'},

{'id': '26640371', 'name': '犬之岛'},

{'id': '25881611', 'name': '战神纪'},

{'id': '26769474', 'name': '香港大营救'},

{'id': '5330387', 'name': '青年马克思'},

{'id': '26691361', 'name': '21克拉'},

{'id': '26588783', 'name': '冰雪女王3:火与冰'},

{'id': '30183489', 'name': '小公主艾薇拉与神秘王国'},

{'id': '26868408', 'name': '黄金花'},

{'id': '26942631', 'name': '起跑线'},

{'id': '26384741', 'name': '湮灭'},

{'id': '30187395', 'name': '午夜十二点'},

{'id': '26647117', 'name': '暴裂无声'},

{'id': '30152451', 'name': '厉害了,我的国'},

{'id': '27075280', 'name': '青年马克思'},

{'id': '26661189', 'name': '脱单告急'},

{'id': '27077266', 'name': '米花之味'},

{'id': '26603666', 'name': '妈妈咪鸭'},

{'id': '26967920', 'name': '遇见你真好'},

{'id': '30162172', 'name': '出山记'},

{'id': '20435622', 'name': '环太平洋:雷霆再起'}]三、获取《后来的我们》影评

《最好的我们》位于第一个,索引为0。根据影评地址爬取第一页20条影评,并找到评论所在位置。

扫描二维码关注公众号,回复:

2871653 查看本文章

1、获取影评所在div块儿。

'''first is 'zuihaodewomen', get comments'''

url_comment = 'https://movie.douban.com/subject/' + movie_list[0]['id'] + '/comments?start=' + '0' + '&limit=20'

resp = request.urlopen(url_comment)

html_comment = resp.read().decode('utf-8')

soup_comment = bs(html_comment, 'html.parser')

comment_list = soup_comment.find_all('div', class_='comment')2、获取每个影评的内容

'''get comment list'''

comments = []

for item in comment_list:

comment = item.find_all('p')[0].string

comments.append(comment)四、清洗影评

前面步骤得到的影评为list,为了能够利用jieba包进行切词,需要将其转化为字符,并且去除所有标点。

'''clean comments'''

allComment = ''

for item in comments:

allComment = allComment + item.strip()

# 至少匹配一个汉字,两个unicode值正好是Unicode表中的汉字的头和尾。

pattern = re.compile(r'[\u4e00-\u9fa5]+')

finalComment = ''.join(re.findall(pattern, allComment))

segment = jieba.lcut(finalComment)

words_df = pd.DataFrame({'segment': segment})五、去除无关字符

利用stopwords文件(百度即可下载)去除一些无用的词组(如我,你,的.....)。

'''remove useless words'''

stopwords = pd.read_csv(".../chineseStopwords.txt", index_col=False, quoting=3, sep="\t",

names=['stopword'], encoding='GBK')

words_df = words_df[~words_df.segment.isin(stopwords.stopword)]

'''get words frequency'''

words_fre = words_df.groupby(by='segment')['segment'].agg({'count': np.size})

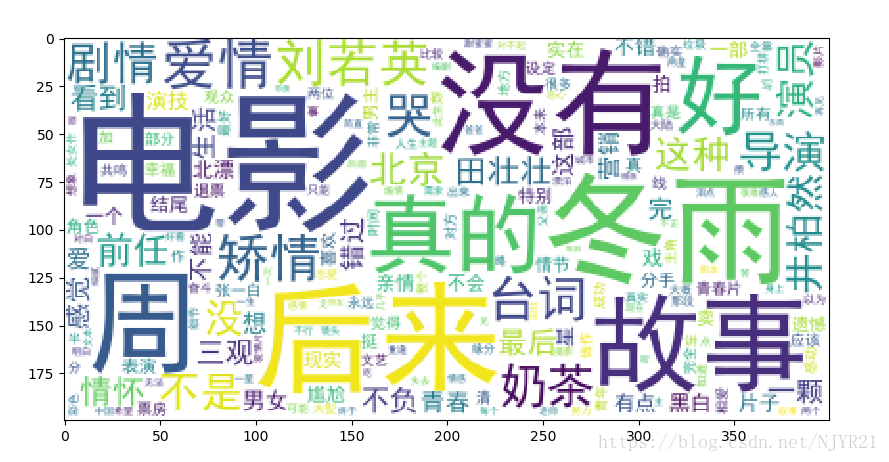

words_fre = words_fre.reset_index().sort_values(by='count', ascending=False)六、画出影评词云图

'''use wordcloud'''

matplotlib.rcParams['figure.figsize'] = [10.0, 5.0]

wordcloud = WordCloud(font_path='simhei.ttf', background_color='white', max_font_size=80)

word_fre_dic = {x[0]: x[1] for x in words_fre.values}

wordcloud = wordcloud.fit_words(word_fre_dic)

plt.imshow(wordcloud)

plt.show()七、完整版代码

import warnings # 防止出现future warning

warnings.filterwarnings("ignore")

from urllib import request # 用于爬取网页

from bs4 import BeautifulSoup as bs # 用于解析网页

import re

import pandas as pd

import numpy as np

import jieba # 用于切词

from wordcloud import WordCloud # 用于生成词云

import matplotlib.pyplot as plt

import matplotlib

def getMovieList(url, headers, pattern1='div', id1='nowplaying', pattern2='li', class_='list-item'):

resp = request.urlopen(url)

html = resp.read().decode('utf-8')

soup = bs(html, 'html.parser')

nowplaying = soup.find_all(pattern1, id=id1)

nowplaying_list = nowplaying[0].find_all(pattern2, class_=class_)

movie_list = []

for item in nowplaying_list:

movie_dic = {}

movie_dic['id'] = item['id']

movie_dic['name'] = item['data-title']

movie_list.append(movie_dic)

return movie_list

def getCommentList(id2, headers, pages=10, pattern='div', class_='comment'):

assert pages > 0

all_comments = []

for i in range(pages):

start = (i) * 20

url = 'https://movie.douban.com/subject/' + id2 + '/comments' +'?' +'start=' + str(start) + '&limit=20'

resp = request.urlopen(url)

html = resp.read().decode('utf-8')

soup = bs(html, 'html.parser')

comment = soup.find_all(pattern, class_=class_)

comments = []

for item in comment:

comment = item.find_all('p')[0].string

comments.append(comment)

all_comments.append(comments)

allComment = ''

for i in range(len(all_comments)):

allComment = allComment + (str(all_comments[i])).strip()

wordpattern = re.compile(r'[\u4e00-\u9fa5]+')

finalComment = ''.join(re.findall(wordpattern, allComment))

return finalComment

def cleanComment(finalComment, path):

segment = jieba.lcut(finalComment)

comment = pd.DataFrame({'segment': segment})

stopwords = pd.read_csv(path, quoting=3, sep='\t', names=['stopword'], encoding='GBK', index_col=False)

comment = comment[~comment.segment.isin(stopwords.stopword)]

comment_fre = comment.groupby(by='segment')['segment'].agg({'count': np.size})

comment_fre = comment_fre.reset_index().sort_values(by='count', ascending=False)

return comment_fre

def wordcloud(comment_fre):

matplotlib.rcParams['figure.figsize'] = [10.0, 5.0]

wordcloud = WordCloud(font_path='simhei.ttf', background_color='white', max_font_size=80)

comment_fre_dic = {x[0]: x[1] for x in comment_fre.head(1000).values}

wordcloud = wordcloud.fit_words(comment_fre_dic)

plt.imshow(wordcloud)

plt.show

def printMoveName(movie_list, id2):

for item in movie_list:

if item['id'] == id2:

print(item['name'])

def main(url, headers, j, pages, path):

movie_list = getMovieList(url, headers, 'div', 'nowplaying', 'li', 'list-item')

comment_list = getCommentList(movie_list[j]['id'], headers, pages, 'div', 'comment')

comment_fre = cleanComment(comment_list, path)

printMoveName(movie_list, movie_list[j]['id'])

return wordcloud(comment_fre)url = 'https://movie.douban.com/nowplaying/shanghai/'

path = ".../chineseStopwords.txt"

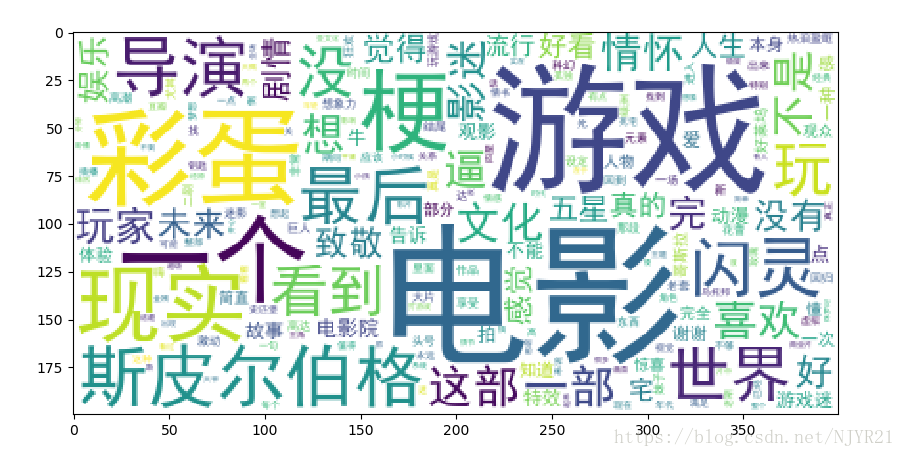

main(url, headers, 0, 10, path)test2:获取前十页《头号玩家》影评

八、参考

https://mp.weixin.qq.com/s/D5Q4Q6YcQDTOOlfwIytFJw

https://www.cnblogs.com/GuoYaxiang/p/6232831.html