批量爬取豆瓣短评并批量制作为词云

我分为两步实现获取短评和制作词云

1.批量爬取豆瓣短评

from bs4 import BeautifulSoup

import requests

import threading

# 获取网页信息

def moviesInfo():

# 1). 获取页面信息

url = "https://movie.douban.com/cinema/nowplaying/xian/"

response = requests.get(url)

content = response.text

# print(content)

# 2). 分析页面,获取id和电影名

soup = BeautifulSoup(content,'lxml')

# 先找到所有的电影信息

nowplaying_movie_list = soup.find_all('li',class_ = 'list-item')

movies_info = []

for item in nowplaying_movie_list:

print(item)

nowplaying_movie_dict = {}

nowplaying_movie_dict['title'] = item['data-title']

nowplaying_movie_dict['id'] = item['data-subject']

movies_info.append(nowplaying_movie_dict)

return movies_info

# 获得指定电影的影评

def getOnePageComment(id,pageNum):

start = (pageNum-1)*20

url='https://movie.douban.com/subject/%s/comments?start=%s&limit=20&sort=new_score&status=P' %(id,start)

# 2).爬取评论信息的网页内容

content = requests.get(url).text

# 3).通过bs4分析网页

soup = BeautifulSoup(content,'lxml')

commentList = soup.find_all('span',class_='short')

pageComments = ""

for commentTag in commentList:

pageComments += commentTag.text

print("%s page" %(pageNum))

print(pageComments)

with open('./doc/%s.txt' %(id),'a') as f:

f.write(pageComments)

threads = []

movies_info = moviesInfo()

for movie in movies_info:

for pageNum in range(100):

pageNum = pageNum + 1

t = threading.Thread(target=getOnePageComment, args=(movie['id'],pageNum))

threads.append(t)

t.start()

_ = [thread.join() for thread in threads]

print("执行结束")

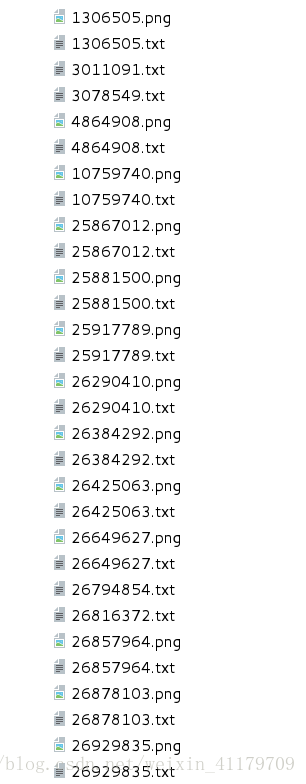

.txt文件即位所爬取电影的豆瓣短评(.png文件为生成的词云)

选取部分短评进行展示

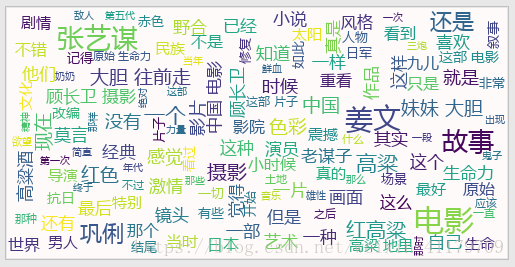

《红高粱》电影短评

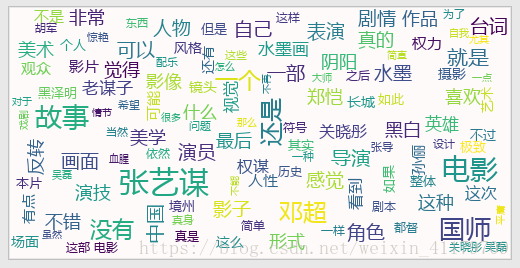

《无双》电影短评

2.批量生成电影词云

import re

import requests

import wordcloud

import jieba

from PIL import Image

import numpy as np

from bs4 import BeautifulSoup

def moviesInfo():

# 1). 获取页面信息

url = "https://movie.douban.com/cinema/nowplaying/xian/"

response = requests.get(url)

content = response.text

# print(content)

# 2). 分析页面,获取id和电影名

soup = BeautifulSoup(content,'lxml')

# 先找到所有的电影信息

nowplaying_movie_list = soup.find_all('li',class_ = 'list-item')

movies_info = []

for item in nowplaying_movie_list:

nowplaying_movie_dict = {}

nowplaying_movie_dict['title'] = item['data-title']

nowplaying_movie_dict['id'] = item['data-subject']

movies_info.append(nowplaying_movie_dict)

return movies_info

movies_info = moviesInfo()

for i in movies_info:

# 1. 对于爬取的评论信息进行数据清洗(删除不必要的逗号,问好,句号,表情,只留下中文或者英文)

with open('./doc/%s.txt' %(i['id'])) as f:

comments = f.read()

if comments != '':

# 通过正则表达式实现

paatern = re.compile(r'([\u4e00-\u9fa5]+|[a-zA-Z]+)')

deal_comments = re.findall(paatern,comments)

newsComments = ''

for item in deal_comments:

newsComments += item

# 1).切割中文,lcut返回一个列表,cut返回一个生成器

result = jieba.lcut(newsComments)

# 3).绘制词云

wc = wordcloud.WordCloud(

background_color='snow',

font_path='./font/msyh.ttf',

min_font_size=10,

max_font_size=30,

width=500,

height=250,

)

wc.generate(','.join(result))

wc.to_file('./doc/%s.png' %(i['id']))

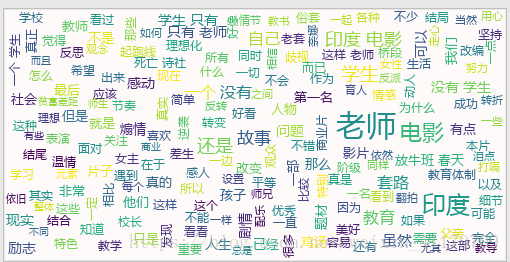

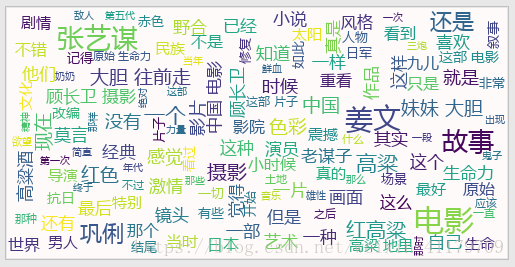

《红高粱》电影词云

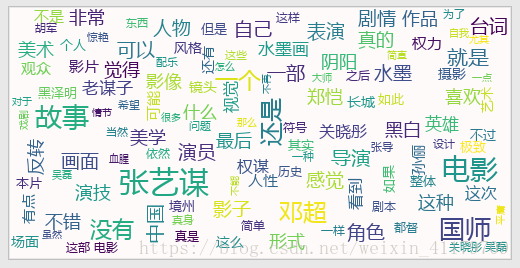

《影》电影词云

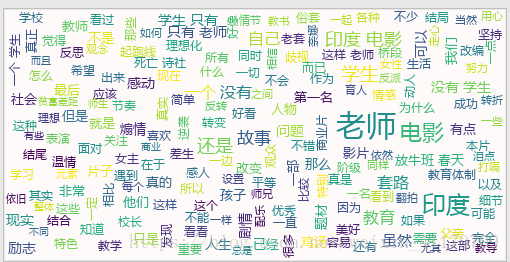

《嗝嗝老师》电影词云