Copyright notice: feedback is welcome. https://blog.csdn.net/qq_42776455/article/details/81213567

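Overview:

This project scrapes all the short comments for a Douban movie, book, or album (your choice), then picks the 100 most frequent words and renders them as a word cloud. One caveat: while I was testing with a proxy IP, my Douban account was permanently banned, so movie comments can no longer be fetched in full. Books and music are unaffected. The full code is on GitHub.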

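Details:

Code structure:

GetID_Douban.py:

Takes two arguments: the name of the work to scrape and its category (one of movie, music, or book). It returns the work's Douban ID, which the next step uses to assemble the full comments URL. The script is listed below.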
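To make the ID lookup concrete, here is a minimal sketch of its two string operations: percent-encoding the name into the search URL, and pulling the digits after `sid: ` out of the result link's `onclick` attribute. The helper names `build_search_url` and `extract_sid`, the sample `onclick` string, and the ID 1234567 are made up for illustration; the real attribute on Douban's search page may look different.

```python
import re
import urllib.parse

# Search URL prefixes per category, as used in GetID_Douban.py.
SEARCH_URLS = {
    'movie': 'https://www.douban.com/search?cat=1002&q=',
    'book': 'https://www.douban.com/search?cat=1001&q=',
    'music': 'https://www.douban.com/search?cat=1003&q=',
}

SID_PATTERN = re.compile(r'sid: (\d+)')


def build_search_url(name, sort='movie'):
    """Percent-encode the name and append it to the category's search URL."""
    if sort not in SEARCH_URLS:
        raise ValueError("sort must be 'movie', 'book' or 'music'")
    return SEARCH_URLS[sort] + urllib.parse.quote(name)


def extract_sid(onclick):
    """Return the digits after 'sid: ' as a string, or None if absent."""
    match = SID_PATTERN.search(onclick)
    return match.group(1) if match else None


# Hypothetical attribute value (assumption); not copied from a real page.
sample_onclick = "moreurl(this,{i: '0', from: 'dou_search_movie', sid: 1234567, qcat: '1002'})"

print(build_search_url('我不是药神'))
print(extract_sid(sample_onclick))  # 1234567
```

Returning `None` when nothing matches, rather than indexing `[0]` directly, lets the caller handle a search with no results without hitting an `IndexError`.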

import re
import sys
import random
import urllib.parse
import urllib.request

from lxml import etree

# Search URL prefix for each category; the quoted name is appended as the query.
subFilmURL = 'https://www.douban.com/search?cat=1002&q='
subBookURL = 'https://www.douban.com/search?cat=1001&q='
subMusicURL = 'https://www.douban.com/search?cat=1003&q='


class Douban_id():
    '''
    Get a Douban ID from the film, music, or book name that you provide.
    '''

    def __init__(self, name, sort='movie'):
        '''
        :param name: film name, music name, or book name
        :param sort: optional category, one of ['movie', 'book', 'music']
        '''
        self.name = name
        self.sort = sort
        self.url_1 = subFilmURL
        self.url_2 = subBookURL
        self.url_3 = subMusicURL

    def getID(self):
        if self.sort == 'book':
            url = self.url_2 + urllib.parse.quote(self.name)
        elif self.sort == 'movie':
            url = self.url_1 + urllib.parse.quote(self.name)
        elif self.sort == 'music':
            url = self.url_3 + urllib.parse.quote(self.name)
        else:
            print("error: wrong category name; choose from 'movie', 'music' or 'book'")
            # Exit with a non-zero status on an invalid category.
            sys.exit(1)

        # Pick one of several User-Agent headers at random for the request.
        headers = [{"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:60.0) Gecko/20100101 Firefox/60.0"},
                   {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Safari/537.36"},
                   {"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/64.0.3282.140 Safari/537.36 Edge/17.17134"}]
        header = random.choice(headers)
        request = urllib.request.Request(url, headers=header)
        response = urllib.request.urlopen(request)
        html = response.read().decode('utf-8')
        selector = etree.HTML(html)
        # The first search result's link carries the ID in its onclick attribute.
        a_tag = selector.xpath('//*[@id="content"]/div/div[1]/div[3]/div[2]/div[1]/div[2]/div/h3/a/@onclick')[0]
        #print(a_tag)
        pattern = re.compile(r'sid: (\d+)', re.S)
        filmID = pattern.findall(a_tag)[0]
        return filmID

getCommen.py:

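Combines the ID returned by Douban_id() with the sub-URL to build the full short-comment URL, fetches the data, and saves it locally. The return value is the path of the saved file, without the .txt extension.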
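As a small illustration of how the two URL shapes are paged (separate from the full script), the sketch below builds the comments URL for a given page. The helper name `comment_url` and the ID `1234567` are placeholders, and the URL patterns are simply the ones that appear in getCommen.py; Douban may have changed them since.

```python
MOVIE_PAGE_SIZE = 20  # movie pages are addressed by a comment offset, 20 per page


def comment_url(subject_id, sort, page_index):
    """Build the short-comment URL for a zero-based page index."""
    if sort == 'movie':
        # Movies use ?start=<offset>: 0, 20, 40, ...
        start = page_index * MOVIE_PAGE_SIZE
        return ('https://movie.douban.com/subject/' + subject_id
                + '/comments?start=' + str(start)
                + '&limit=20&sort=new_score&status=P')
    # The other categories use a one-based page number: ?p=1, 2, 3, ...
    return ('https://book.douban.com/subject/' + subject_id
            + '/comments/hot?p=' + str(page_index + 1))


# '1234567' is a placeholder ID, not a real work.
for index in range(3):
    print(comment_url('1234567', 'movie', index))
print(comment_url('1234567', 'book', 0))
```

Keeping the URL logic in a pure function like this makes the paging arithmetic easy to check without sending any requests.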

import os
import random
import time

import requests
from bs4 import BeautifulSoup as bs
# faker package: generates random fake headers
from faker import Faker

uA = Faker()

# Global variables: page counter and output file name
pageIndex = 0
filename = None


# Used only to steer control flow; it never surfaces as a real error
class EmptyError(Exception):
    pass


def fetchComments(url, headers, sort, proxies=None):
    '''Request one page and return the list of comment <p> tags found on it.'''
    response = requests.get(url=url, headers=headers, proxies=proxies)
    soup = bs(response.text, 'lxml')
    if sort == "movie":
        # Movie pages: class-less <p> tags, minus the trailing six matches.
        return soup.find_all('p', attrs={'class': ""})[:-6]
    return soup.find_all('p', attrs={'class': "comment-content"})


# Main function for scraping comments
def getComments(name, id, sort='movie'):
    '''
    :param name: name of the work to scrape
    :param id: the work's Douban ID
    :param sort: category (movie, book, or music)
    :return: path of the saved comments file, without the ".txt" extension
    '''
    global pageIndex, filename
    path = './comments_infor/' + sort + "/" + name
    # Create the output folder before the first page is written.
    os.makedirs(path, exist_ok=True)
    filename = path + "/" + name + "_comments.txt"

    while True:
        page = pageIndex * 20
        pageIndex += 1
        headers = {'User-Agent': uA.user_agent()}
        # Proxy IP from Xici; I don't know whether it still works, but it performed well at the time.
        proxies = {"http": "http://14.118.254.221:6666"}
        # Movies were probably Douban's first product; their URLs look nothing like the other categories'.
        if sort == 'movie':
            url = 'https://movie.douban.com/subject/' + id + '/comments?start=' + str(page) + '&limit=20&sort=new_score&status=P'
        else:
            url = 'https://book.douban.com/subject/' + id + '/comments/hot?p=' + str(pageIndex)
        print(url)
        try:
            # Two cases raise here: (1) the request fails; (2) no comments come back
            # (every page after the last one is empty). Either way, retry without the proxy.
            print("with proxy")
            comm_tag = fetchComments(url, headers, sort, proxies)
            if not comm_tag:
                raise EmptyError("response is empty!")
        except Exception:
            time.sleep(3)
            print("without proxy")
            comm_tag = fetchComments(url, headers, sort)

        if not comm_tag:
            print('page %d is empty' % page)
            break
        for i in comm_tag:
            # Collapse all whitespace inside the comment.
            comment = ''.join(i.get_text().split())
            print(comment)
            try:
                with open(filename, 'a+') as f:
                    f.write(comment + "\n\n")
            except UnicodeEncodeError:
                # The comment contains emoji that the default encoding cannot write; skip it.
                pass
        if sort == 'movie':
            print('finished page %d' % page)
        else:
            print('finished page %d' % pageIndex)
        time.sleep(random.randint(3, 6))

    print("All have written into files!")
    return path + "/" + name + "_comments"

"""
login() is the login function. The first pages of movie short comments (up to page=200) need no login; pages after that do.
The other categories have no login restriction.
I originally meant to implement login, but my account was permanently banned during testing, so be careful.
"""
# def login():
#     login_url = 'https://www.douban.com/login'
#     captcha_Json_url = 'https://www.douban.com/j/misc/captcha'
#     headers = {'User-Agent': uA.user_agent()}
#
#     captchaInfor = requests.get(captcha_Json_url,headers=headers)
#     imgUrl ='https://' + captchaInfor.json()['url'][2:]
#     token = captchaInfor.json()['token']
#
#     img = requests.get(imgUrl,headers=headers).content
#     with open('captcha img.png','wb') as f:
#         f.write(img)
#     text = input('Enter the captcha: ')
#
#     data = {
#         "form_email": username,
#         "form_password": password,
#         "captcha-solution": text,
#     }
#
#     session = requests.Session()
#     data = session.post(login_url,data,headers=headers)
#     cookies = requests.utils.dict_from_cookiejar(session.cookies)
#     print(cookies)
#     return cookies

Keywords.py:

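Cleans the comments saved in the file and generates a word cloud from the resulting keywords. It uses ChineseStopWords.txt from the project folder to strip out Chinese function words; you can build this list yourself or download one. simhei.ttf is the font used for the word cloud.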
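The cleaning steps described above can be sketched with the standard library alone. This is only an illustration, not the script itself: `collections.Counter` stands in for the pandas grouping, the token list is hand-written where the script would call `jieba.lcut`, and the helper names `keep_chinese` and `top_words`, the sample text, and the tiny stop-word set are all invented for the example.

```python
import re
from collections import Counter

# Same character range the script uses to keep only Chinese text.
CHINESE_RUN = re.compile(r'[\u4e00-\u9fa5]+')


def keep_chinese(text):
    """Drop everything except runs of Chinese characters."""
    return ''.join(CHINESE_RUN.findall(text))


def top_words(tokens, stopwords, n=100):
    """Count tokens that are not stop words and keep the n most frequent."""
    counts = Counter(t for t in tokens if t not in stopwords)
    return dict(counts.most_common(n))


raw = "很好看!!! 10/10, 演员的演技很好 :)"
print(keep_chinese(raw))  # 很好看演员的演技很好

# Hand-made tokens (assumption); the script gets these from jieba.lcut.
tokens = ["很", "好看", "演员", "的", "演技", "很", "好", "好看"]
stopwords = {"很", "的"}
print(top_words(tokens, stopwords, n=2))
```

The resulting dictionary maps each word to its count, which is the shape `WordCloud.fit_words` accepts.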

import re

import jieba
import pandas
import matplotlib
import matplotlib.pyplot as plt
from wordcloud import WordCloud

matplotlib.rcParams['figure.figsize'] = (10.0, 5.0)


def keywords(subpath):
    """
    Clean the comments saved in the file and generate a word cloud from the resulting keywords.

    :param subpath: path of the comments file, without the ".txt" extension
    """
    commentFile = subpath + ".txt"
    WC_img = subpath + "_WC.png"
    with open(commentFile, 'r') as f:
        # Drop all whitespace, including the blank lines between comments.
        comment = ''.join(f.read().split())
    # Keep only the Chinese characters.
    pattern = re.compile(r'[\u4e00-\u9fa5]+')
    filterdata = re.findall(pattern, comment)
    cleaned_comments = ''.join(filterdata)
    print(cleaned_comments)

    # Segment the text into words and remove the stop words.
    segment = jieba.lcut(cleaned_comments)
    words_df = pandas.DataFrame({'segment': segment})
    stopwords = pandas.read_csv("ChineseStopWords.txt", index_col=False, quoting=3, sep='\t', names=['stopword'], encoding='GB2312')
    words_df = words_df[~words_df.segment.isin(stopwords.stopword)]

    # Count each word and sort by frequency, highest first.
    words_stat = words_df.groupby('segment').size().reset_index(name='count')
    words_stat = words_stat.sort_values(by='count', ascending=False)

    # Display the result as a word cloud
    wordcloud = WordCloud(font_path="simhei.ttf", background_color="white", max_font_size=80)
    top = words_stat.head(1000)
    word_frequence = dict(zip(top['segment'], top['count']))
    #print(word_frequence)
    wordcloud = wordcloud.fit_words(word_frequence)
    plt.imshow(wordcloud)
    plt.axis("off")
    plt.show()
    wordcloud.to_file(WC_img)

comments_infor folder:

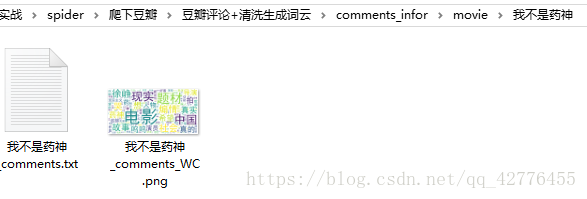

This folder stores the scraped data and the generated word cloud. The example below uses 《我不是药神》 to show the output.

Comment data (excerpt):

Word cloud: