I. Getting to Know RHCS

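RHCS (Red Hat Cluster Suite) is an integrated set of cluster software components developed by Red Hat. It supports three cluster architectures: high-availability clusters, load-balancing clusters, and storage clusters. By choosing different configurations at deployment time, you can use it to meet requirements for high availability, load balancing, scalability, file sharing, and cost savings.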

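II. Lab Environment

server1 172.25.7.1 (Nginx, ricci and luci)

server2 172.25.7.2 (Apache)

server3 172.25.7.3 (Apache)

server4 172.25.7.4 (Nginx and ricci)

server1 and server4 are configured with the High Availability yum repository (not shown). The physical host, foundation7, serves as the test client and runs fence_virtd.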

III. Deploying Nginx

[root@server1 ~]# tar zxf /mnt/nginx-1.10.1.tar.gz

[root@server1 ~]# cd nginx-1.10.1

[root@server1 nginx-1.10.1]# vim src/core/nginx.h # hide the version number

#define nginx_version 1010001

#define NGINX_VERSION "1.10.1"

#define NGINX_VER "nginx" /* originally: "nginx/" NGINX_VERSION */

[root@server1 nginx-1.10.1]# vim auto/cc/gcc # disable the debug build (-g)

# debug

#CFLAGS="$CFLAGS -g"

[root@server1 nginx-1.10.1]# yum install -y pcre-devel gcc openssl-devel

[root@server1 nginx-1.10.1]# ./configure --prefix=/usr/local/nginx --with-http_ssl_module --with-http_stub_status_module --with-threads --with-file-aio

[root@server1 nginx-1.10.1]# make && make install

[root@server1 ~]# ln -s /usr/local/nginx/sbin/nginx /usr/local/sbin/

[root@server1 ~]# useradd -M -d /usr/local/nginx/ nginx

Edit the nginx configuration file to set up load balancing:

[root@server1 ~]# vim /usr/local/nginx/conf/nginx.conf

# inside the http { } block

upstream westos {

server 172.25.7.2:80;

server 172.25.7.3:80;

}

server {

listen 80;

server_name www.westos.org;

location / {

proxy_pass http://westos;

}

}
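With no weights or balancing method set, nginx hands requests to the servers of an upstream group in round-robin order. The pure-shell sketch below only models that default rotation for the two backends in westos; rr_pick is a hypothetical helper, not part of nginx. In a real nginx with several worker processes and no zone directive, each worker keeps its own rotation, so the observed order may not alternate strictly.

```shell
#!/usr/bin/env bash
# Hypothetical helper modelling nginx's default round-robin rotation.
# Usage: rr_pick <request_index> <backend>...
# Request number i (0-based) goes to backend number i mod N.
rr_pick() {
    local i=$1
    shift
    # skip (i mod N) backends, then print the one now in first position
    shift $(( i % $# ))
    echo "$1"
}

# Four consecutive requests against the two Apache backends of "westos"
for i in 0 1 2 3; do
    rr_pick "$i" 172.25.7.2:80 172.25.7.3:80
done
```

The loop prints the two backends alternately, which is what the client test later in this post shows with a browser.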

Write an nginx init script, save it as /etc/init.d/nginx, and make it executable with chmod +x /etc/init.d/nginx:

#!/bin/sh

#

# nginx - this script starts and stops the nginx daemon

#

# chkconfig: - 85 15

# description: Nginx is an HTTP(S) server, HTTP(S) reverse \

# proxy and IMAP/POP3 proxy server

# processname: nginx

# config: /usr/local/nginx/conf/nginx.conf

# pidfile: /usr/local/nginx/logs/nginx.pid

# Source function library.

. /etc/rc.d/init.d/functions

# Source networking configuration.

. /etc/sysconfig/network

# Check that networking is up.

[ "$NETWORKING" = "no" ] && exit 0

nginx="/usr/local/nginx/sbin/nginx"

prog=$(basename $nginx)

lockfile="/var/lock/subsys/nginx"

pidfile="/usr/local/nginx/logs/${prog}.pid"

NGINX_CONF_FILE="/usr/local/nginx/conf/nginx.conf"

start() {

[ -x $nginx ] || exit 5

[ -f $NGINX_CONF_FILE ] || exit 6

echo -n $"Starting $prog: "

daemon $nginx -c $NGINX_CONF_FILE

retval=$?

echo

[ $retval -eq 0 ] && touch $lockfile

return $retval

}

stop() {

echo -n $"Stopping $prog: "

killproc -p $pidfile $prog

retval=$?

echo

[ $retval -eq 0 ] && rm -f $lockfile

return $retval

}

restart() {

configtest_q || return 6

stop

start

}

reload() {

configtest_q || return 6

echo -n $"Reloading $prog: "

killproc -p $pidfile $prog -HUP

echo

}

configtest() {

$nginx -t -c $NGINX_CONF_FILE

}

configtest_q() {

$nginx -t -q -c $NGINX_CONF_FILE

}

rh_status() {

status $prog

}

rh_status_q() {

rh_status >/dev/null 2>&1

}

# Upgrade the binary with no downtime.

upgrade() {

local oldbin_pidfile="${pidfile}.oldbin"

configtest_q || return 6

echo -n $"Upgrading $prog: "

killproc -p $pidfile $prog -USR2

retval=$?

sleep 1

if [[ -f ${oldbin_pidfile} && -f ${pidfile} ]]; then

killproc -p $oldbin_pidfile $prog -QUIT

success $"$prog online upgrade"

echo

return 0

else

failure $"$prog online upgrade"

echo

return 1

fi

}

# Tell nginx to reopen logs

reopen_logs() {

configtest_q || return 6

echo -n $"Reopening $prog logs: "

killproc -p $pidfile $prog -USR1

retval=$?

echo

return $retval

}

case "$1" in

start)

rh_status_q && exit 0

$1

;;

stop)

rh_status_q || exit 0

$1

;;

restart|configtest|reopen_logs)

$1

;;

force-reload|upgrade)

rh_status_q || exit 7

upgrade

;;

reload)

rh_status_q || exit 7

$1

;;

status|status_q)

rh_$1

;;

condrestart|try-restart)

rh_status_q || exit 7

restart

;;

*)

echo $"Usage: $0 {start|stop|reload|configtest|status|force-reload|upgrade|restart|reopen_logs}"

exit 2

esac
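The script follows the LSB init-script exit-code convention: 5 if the nginx binary is missing, 6 if the configuration is missing or fails the syntax test, 7 if reload/upgrade is requested while nginx is not running, and 2 on bad usage. rgmanager's script resource calls the script with start, stop and status and checks whether they return 0, so these codes matter for the cluster. The hypothetical lookup below spells the codes out so you can interpret echo $? after a manual run.

```shell
#!/usr/bin/env bash
# Hypothetical helper: explain the LSB exit codes that /etc/init.d/nginx
# can return for actions other than "status".
lsb_exit_meaning() {
    case "$1" in
        0) echo "success" ;;
        1) echo "generic or unspecified error" ;;
        2) echo "invalid or excess arguments" ;;
        3) echo "unimplemented feature" ;;
        4) echo "user had insufficient privilege" ;;
        5) echo "program is not installed" ;;
        6) echo "program is not configured" ;;
        7) echo "program is not running" ;;
        *) echo "unknown exit code: $1" ;;
    esac
}

# Example:
#   /etc/init.d/nginx reload; lsb_exit_meaning $?
```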

Repeat the same configuration on server4 (not shown).

IV. Configuring the High-Availability Cluster

1. Install the packages

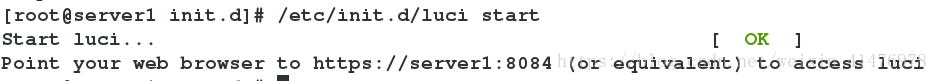

server1: install ricci and luci, start both services, enable them at boot, and set a password for the ricci user:

[root@server1 ~]# yum install ricci luci -y

[root@server1 ~]# /etc/init.d/luci start

[root@server1 ~]# /etc/init.d/ricci start

[root@server1 ~]# chkconfig luci on

[root@server1 ~]# chkconfig ricci on

[root@server1 ~]# passwd ricci

server4: install ricci, start it, set the ricci password, and enable it at boot:

[root@server4 ~]# yum install ricci -y

[root@server4 ~]# /etc/init.d/ricci start

[root@server4 ~]# passwd ricci

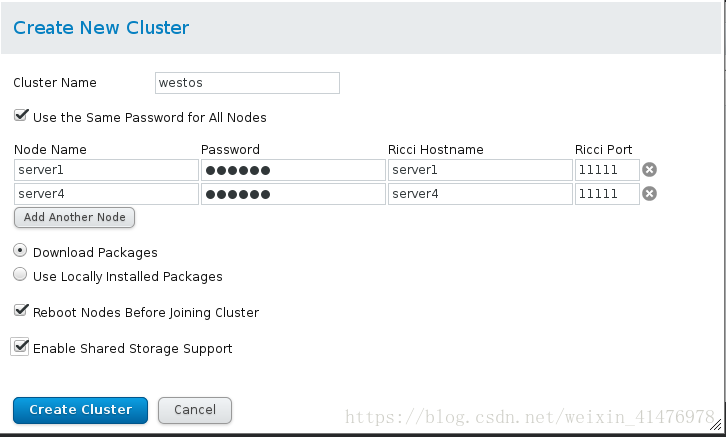

[root@server4 ~]# chkconfig ricci on

2. Create the cluster

Open https://172.25.7.1:8084 in a browser:

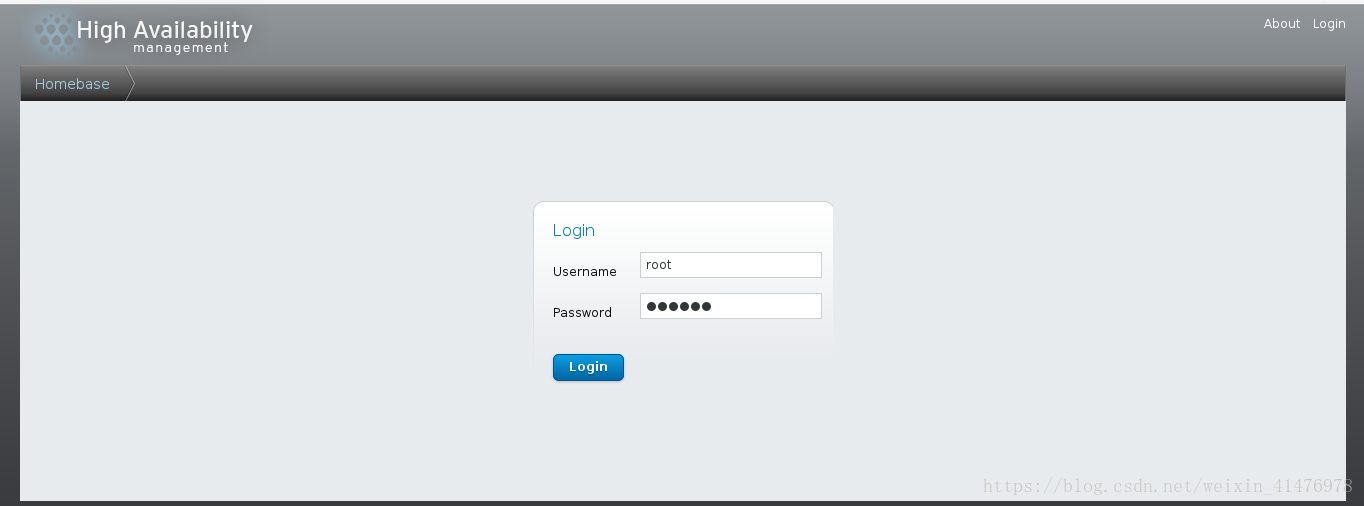

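On the first visit to luci, the browser warns about the self-signed SSL certificate presented by the luci server. After you confirm the dialog(s), the browser shows the luci login page.

If cluster creation fails, run > /etc/cluster/cluster.conf on server1 and server4 to clear the configuration, then repeat the steps in the web interface.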

Log in as root.

Create the cluster:

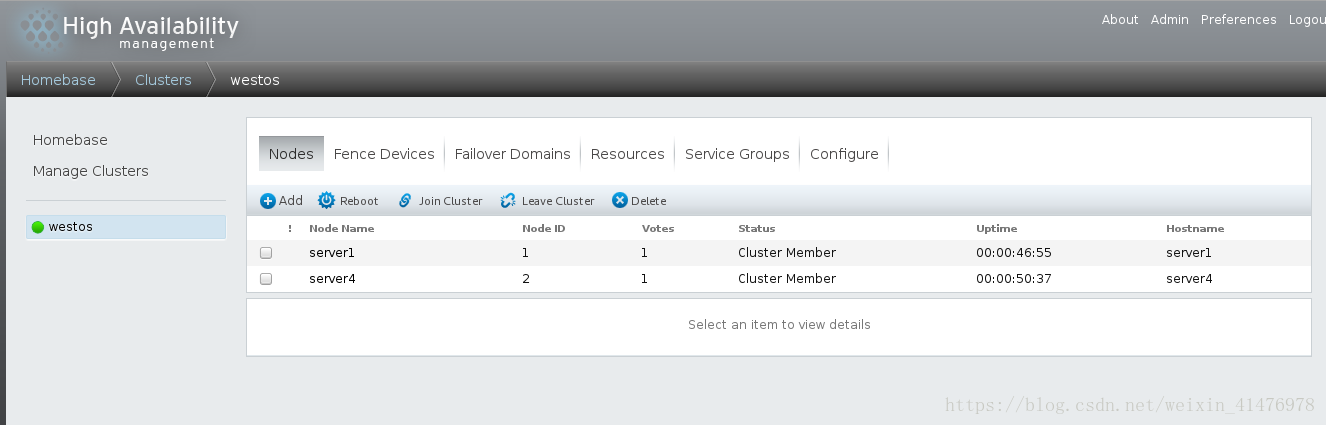

After creation:

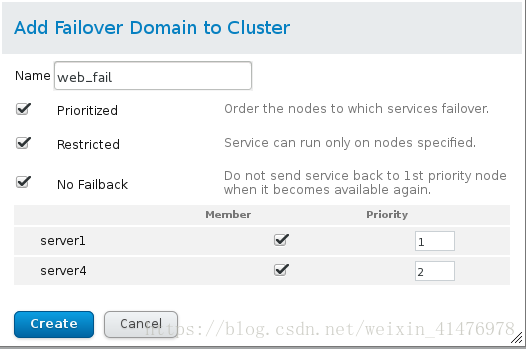

Failover Domains settings:

server1 has a higher priority than server4; the smaller the number, the higher the priority.

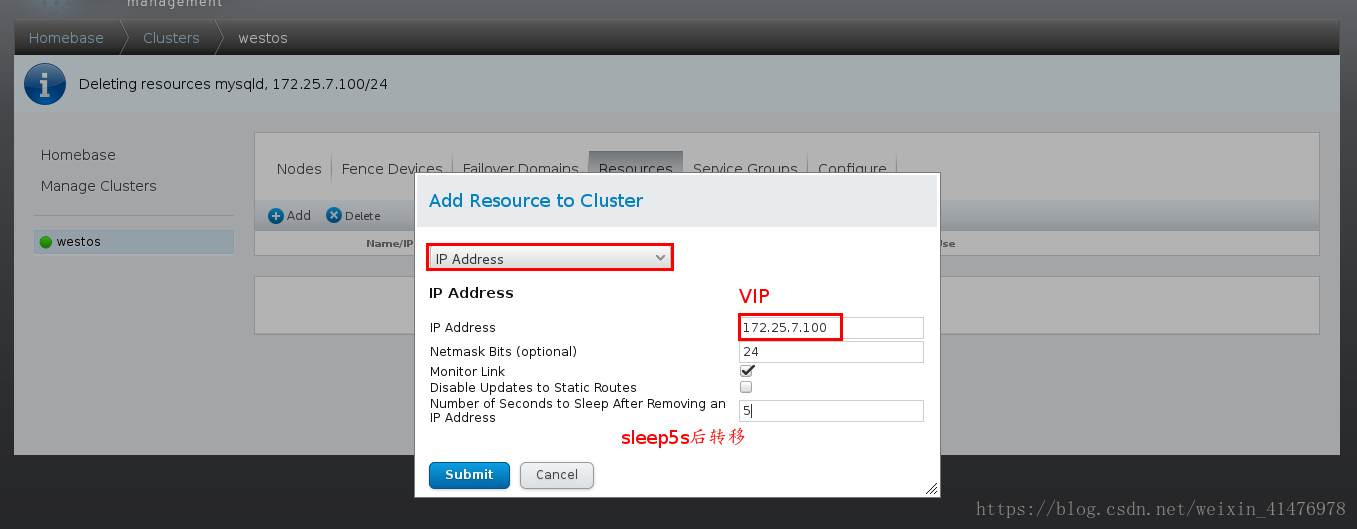

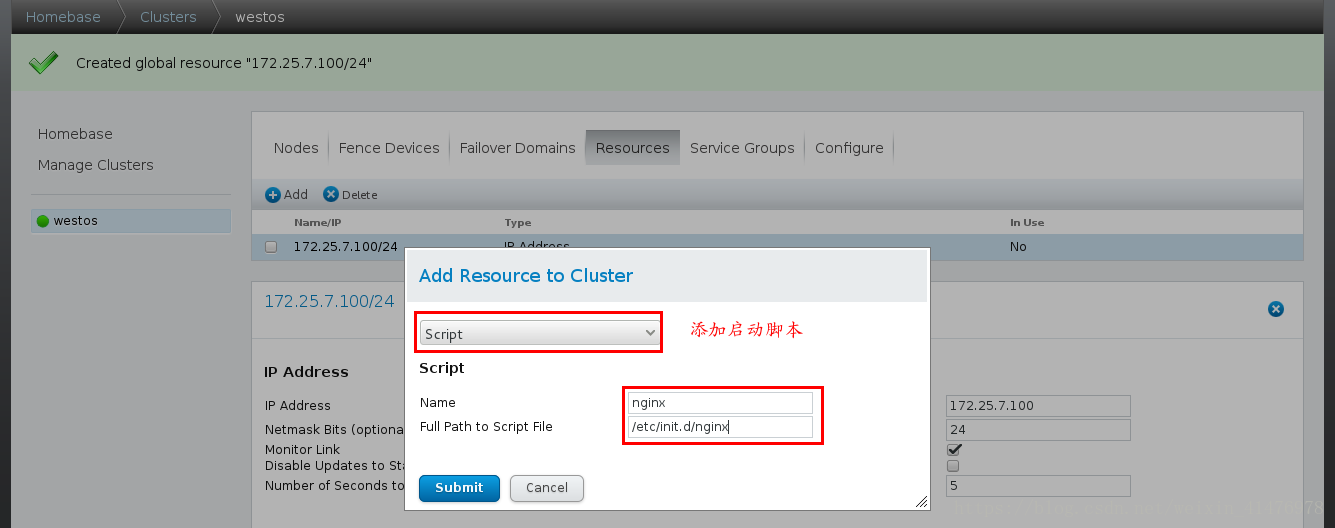
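The priority rule above can be sketched as: among the nodes that are online, the one with the smallest priority number runs the service. preferred_node is a hypothetical helper that models only this ordering; it ignores other failover-domain options such as Restricted or No Failback.

```shell
#!/usr/bin/env bash
# Hypothetical helper modelling an ordered failover domain.
# Usage: preferred_node "<online nodes>" node:priority...
# Prints the online node with the smallest priority number (1 = highest).
preferred_node() {
    local online=" $1 " best="" best_prio="" pair node prio
    shift
    for pair in "$@"; do
        node=${pair%%:*}
        prio=${pair##*:}
        case "$online" in
            *" $node "*) ;;        # node is online: consider it
            *) continue ;;         # node is down: skip it
        esac
        if [ -z "$best_prio" ] || [ "$prio" -lt "$best_prio" ]; then
            best=$node
            best_prio=$prio
        fi
    done
    echo "$best"
}

preferred_node "server1 server4" server1:1 server4:2   # prints server1
preferred_node "server4" server1:1 server4:2           # prints server4
```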

Resources settings:

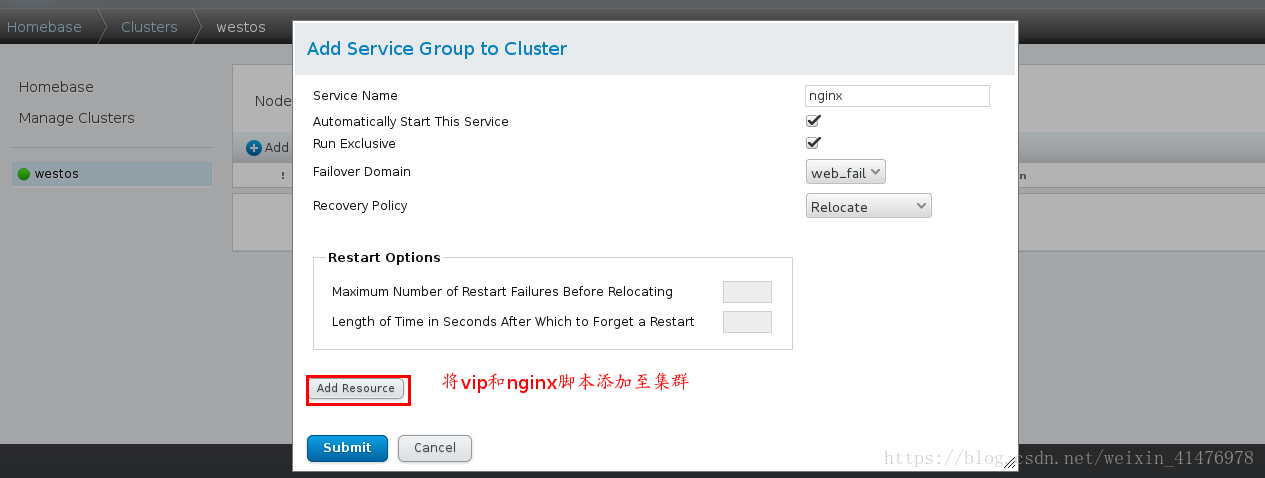

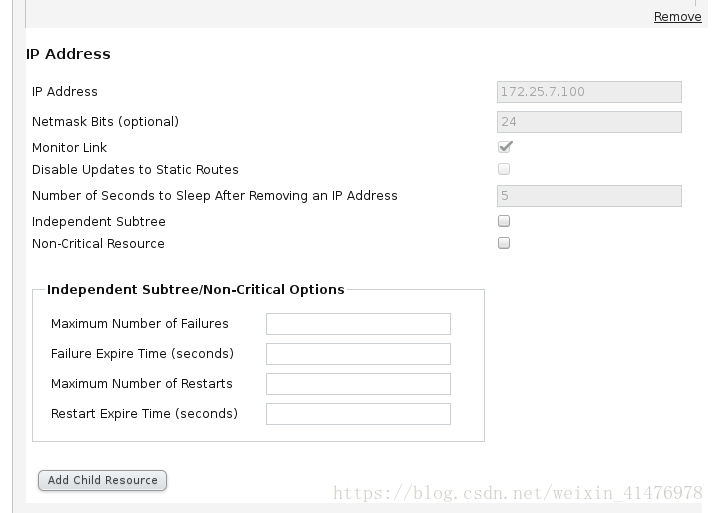

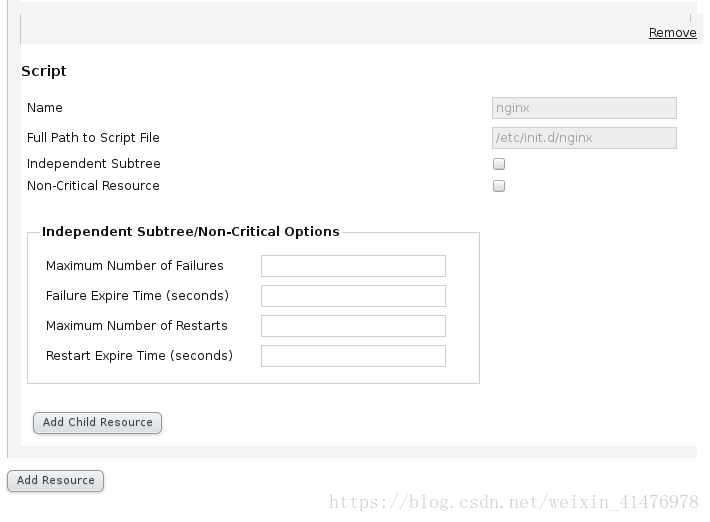

Service Groups settings:

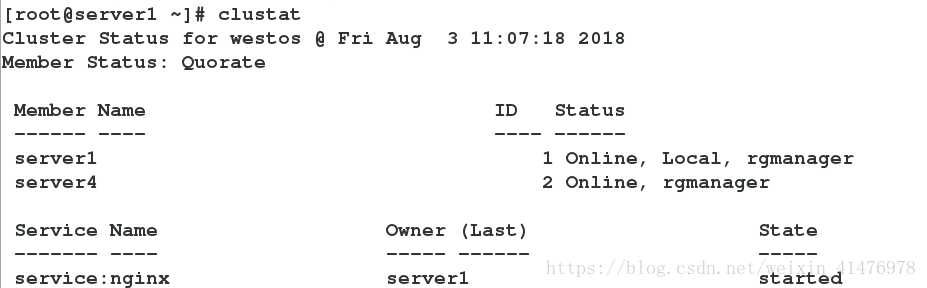

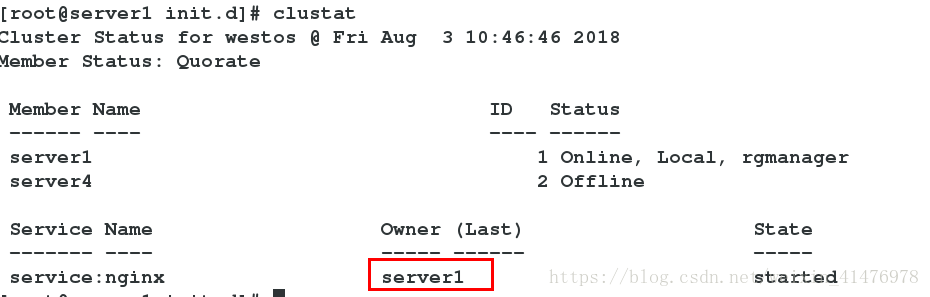

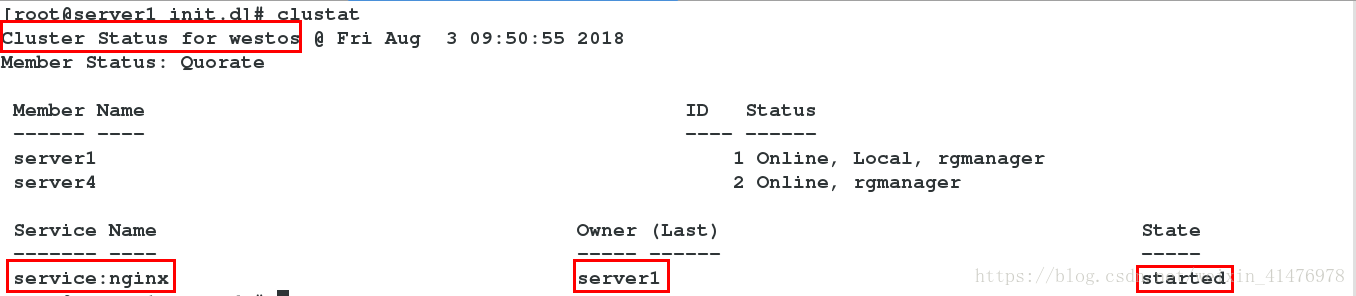

Check the cluster status:

[root@server1 init.d]# clustat

[root@server1 init.d]# cman_tool status

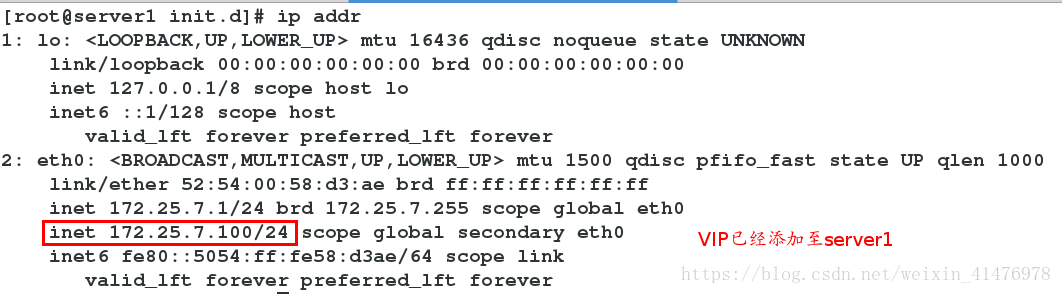

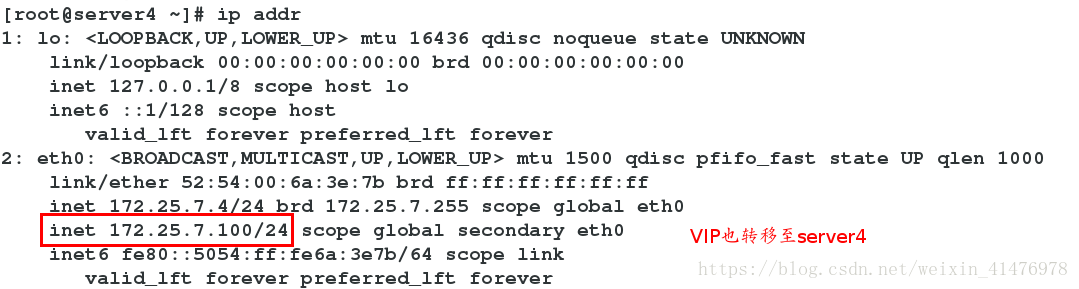

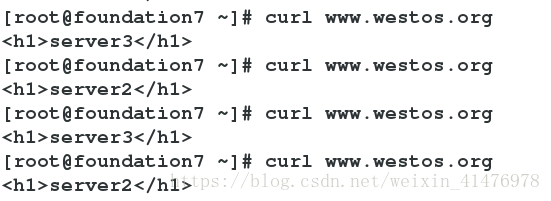

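3. Testing

Add a name-resolution entry on the client:

[root@foundation7 ~]# vim /etc/hosts

172.25.7.100 www.westos.org

Load balancing works (172.25.7.100 is the virtual IP that the cluster assigns to whichever node runs nginx):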

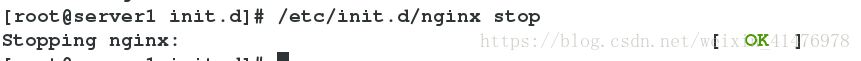

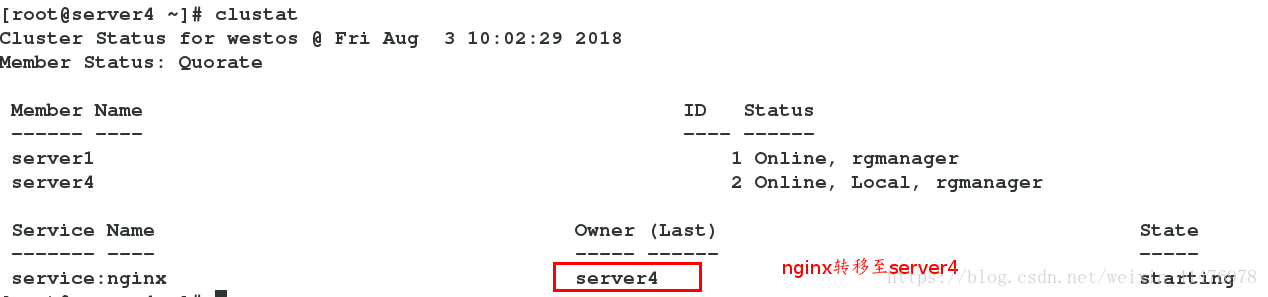
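To check the distribution from a terminal instead of a browser, request the page several times and count the distinct replies. The sketch assumes each Apache backend serves a page that identifies it (for example its hostname), which the original does not show; count_replies is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: count how often each distinct reply appears.
# Reads one reply per line on stdin, prints "count reply", most frequent first.
count_replies() {
    sort | uniq -c | sort -rn | awk '{ c = $1; $1 = ""; sub(/^ /, ""); print c, $0 }'
}

# On the client (assumes curl and the /etc/hosts entry above):
#   for i in $(seq 10); do curl -s http://www.westos.org; done | count_replies
```

With round-robin balancing across two backends, the two counts should be equal or differ by one.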

Test high availability: when nginx on server1 is stopped, the service fails over to server4:

[root@server1 init.d]# /etc/init.d/nginx stop

4. Managing the cluster with clusvcadm

[root@server1 ~]# clusvcadm -d nginx ## disable the service

[root@server1 ~]# clusvcadm -e nginx ## enable the service

[root@server1 ~]# clusvcadm -r nginx -m server1 ## relocate nginx to server1

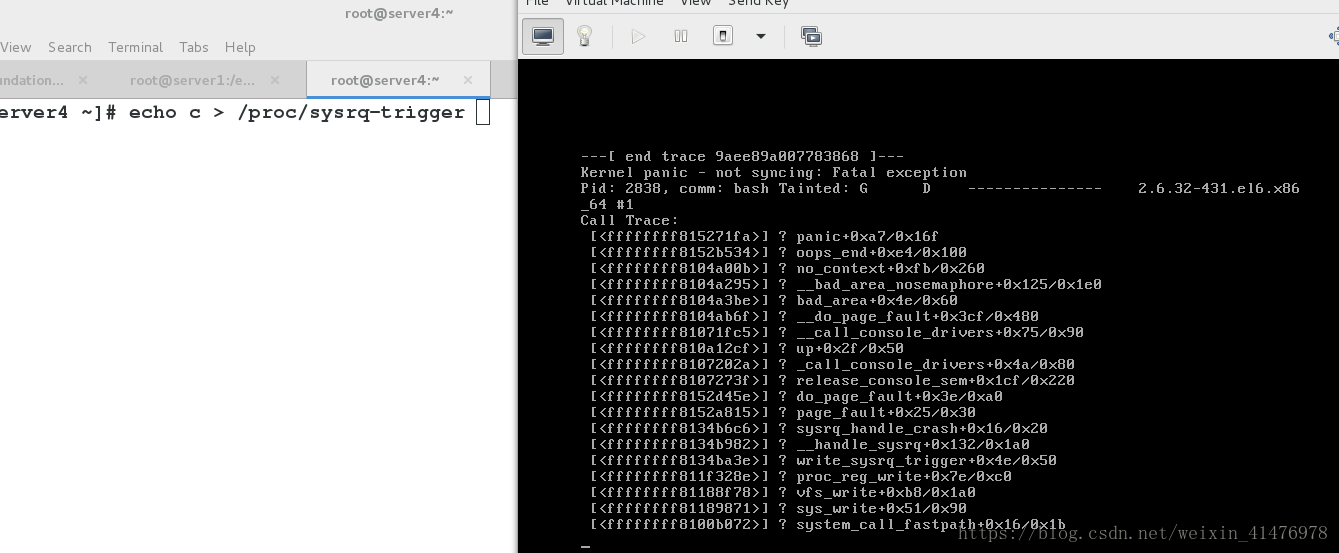

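However, when you simulate a kernel crash with echo c > /proc/sysrq-trigger, server1 does not take over the service. The solution is the fence mechanism, described below.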

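V. The Fence Mechanism

1. Introduction to fencing

Nodes in a cluster send probe packets to each other to determine whether each node is alive. Usually a dedicated link, called the heartbeat line, carries these probes. Suppose server1's heartbeat link fails: server4 concludes that server1 is down and moves the resources to itself, while server1 considers itself healthy and does not let other nodes take over its resources. This situation is called split brain.

If the environment has a device that can cut power to server1 directly, split brain can be avoided. Such a device is called a fence device.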
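The liveness check described above can be modeled as a timeout: a peer is declared dead once no heartbeat has arrived within some threshold. The sketch below is a deliberately simplified model with a hypothetical function and made-up timestamps (cman/corosync actually use a token-passing membership protocol); it only illustrates why, when the heartbeat link breaks, each node decides that the other is dead while believing itself healthy.

```shell
#!/usr/bin/env bash
# Simplified, hypothetical model of heartbeat-based liveness:
# a peer is alive if its last heartbeat is at most <timeout> seconds old.
# Usage: peer_alive <last_seen> <now> <timeout>   (times in seconds)
peer_alive() {
    [ $(( $2 - $1 )) -le "$3" ]
}

# The heartbeat link breaks at t=100, so each node last heard from the
# other at t=100. Both nodes check at t=120 with a 10-second timeout.
for pair in server4:server1 server1:server4; do
    me=${pair%%:*}
    peer=${pair##*:}
    if peer_alive 100 120 10; then
        echo "$me: $peer is alive"
    else
        echo "$me: $peer is dead, taking over the service"
    fi
done
```

Both lines report the peer as dead, which is exactly the split-brain situation a fence device resolves by powering one node off.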

2. Configuring fencing

Install the following packages on the physical host foundation7:

fence-virtd-libvirt-0.3.2-5.el7.x86_64

fence-virtd-multicast-0.3.2-5.el7.x86_64

fence-virtd-0.3.2-5.el7.x86_64

Generate the key file:

[root@foundation7 ~]# mkdir /etc/cluster

[root@foundation7 ~]# cd /etc/cluster

## generate a random key

[root@foundation7 cluster]# dd if=/dev/urandom of=fence_xvm.key bs=128 count=1
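A quick way to reproduce the key step and check the result: make_fence_key is a hypothetical helper, and the chmod 600 is a precaution added here, not part of the original steps.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a 128-byte random key like the dd command
# above, restrict it to its owner, and verify its size.
make_fence_key() {
    local path=$1
    dd if=/dev/urandom of="$path" bs=128 count=1 2>/dev/null || return 1
    chmod 600 "$path"
    # sanity check: the key must be exactly 128 bytes
    [ "$(wc -c < "$path")" -eq 128 ]
}

# make_fence_key /etc/cluster/fence_xvm.key && echo "key OK"
```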

Distribute the key file to both nodes:

[root@foundation7 cluster]# scp fence_xvm.key server1:/etc/cluster/

[root@foundation7 cluster]# scp fence_xvm.key server4:/etc/cluster/
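To confirm that both nodes received an identical copy, compare SHA-256 digests. digests_match is a hypothetical helper that reads sha256sum output lines and succeeds only when every digest is the same; the usage lines assume root SSH access from foundation7 to server1 and server4, which the scp commands above already rely on.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "digest  path" lines (sha256sum output) on stdin
# and succeed only if there is at least one line and all digests are equal.
digests_match() {
    local n
    n=$(awk '{ print $1 }' | sort -u | wc -l)
    [ "$n" -eq 1 ]
}

# On foundation7:
#   { sha256sum /etc/cluster/fence_xvm.key
#     ssh server1 sha256sum /etc/cluster/fence_xvm.key
#     ssh server4 sha256sum /etc/cluster/fence_xvm.key; } | digests_match && echo "keys identical"
```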

Configure fence_virtd (press Enter to accept the default shown in brackets):

[root@foundation7 ~]# fence_virtd -c

Module search path [/usr/lib64/fence-virt]:

Available backends:

libvirt 0.1

Available listeners:

multicast 1.2

Listener modules are responsible for accepting requests

from fencing clients.

Listener module [multicast]:

The multicast listener module is designed for use environments

where the guests and hosts may communicate over a network using

multicast.

The multicast address is the address that a client will use to

send fencing requests to fence_virtd.

Multicast IP Address [225.0.0.12]:

Using ipv4 as family.

Multicast IP Port [1229]:

Setting a preferred interface causes fence_virtd to listen only

on that interface. Normally, it listens on all interfaces.

In environments where the virtual machines are using the host

machine as a gateway, this *must* be set (typically to virbr0).

Set to 'none' for no interface.

Interface [br0]: ### set this to the name of your own network interface

The key file is the shared key information which is used to

authenticate fencing requests. The contents of this file must

be distributed to each physical host and virtual machine within

a cluster.

Key File [/etc/cluster/fence_xvm.key]:

Backend modules are responsible for routing requests to

the appropriate hypervisor or management layer.

Backend module [libvirt]:

Configuration complete.

=== Begin Configuration ===

fence_virtd {

listener = "multicast";

backend = "libvirt";

module_path = "/usr/lib64/fence-virt";

}

listeners {

multicast {

key_file = "/etc/cluster/fence_xvm.key";

address = "225.0.0.12";

interface = "br0";

family = "ipv4";

port = "1229";

}

}

backends {

libvirt {

uri = "qemu:///system";

}

}

=== End Configuration ===

Replace /etc/fence_virt.conf with the above [y/N]?
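The same file can also be written non-interactively, which is handy when rebuilding the host. This sketch simply reproduces the configuration printed above with the interface as a parameter; write_fence_virt_conf is a hypothetical helper, and you may want to back up the existing /etc/fence_virt.conf before overwriting it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the configuration produced by "fence_virtd -c"
# above. $1 = output file, $2 = listening interface (defaults to br0).
write_fence_virt_conf() {
    local out=$1 iface=${2:-br0}
    cat > "$out" <<EOF
fence_virtd {
    listener = "multicast";
    backend = "libvirt";
    module_path = "/usr/lib64/fence-virt";
}

listeners {
    multicast {
        key_file = "/etc/cluster/fence_xvm.key";
        address = "225.0.0.12";
        interface = "$iface";
        family = "ipv4";
        port = "1229";
    }
}

backends {
    libvirt {
        uri = "qemu:///system";
    }
}
EOF
}

# write_fence_virt_conf /etc/fence_virt.conf br0
```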

Restart the service and check that fence_virtd is listening on UDP port 1229:

[root@foundation7 ~]# systemctl restart fence_virtd.service

[root@foundation7 ~]# netstat -anlupe |grep 1229

udp 0 0 0.0.0.0:1229 0.0.0.0:* 0 66273 7234/fence_virtd

3. Adding fencing to the nodes

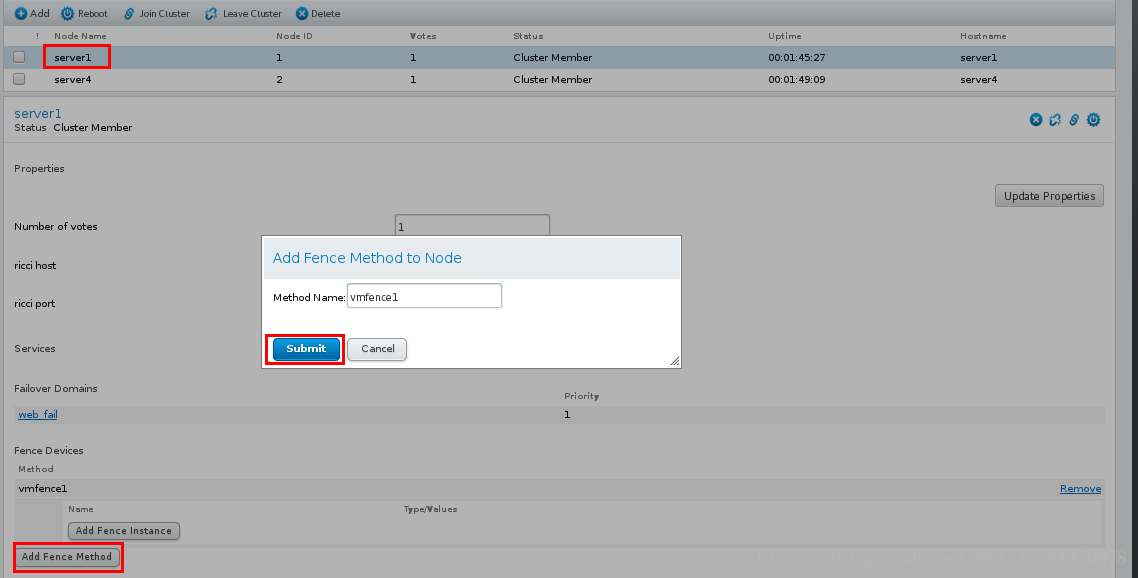

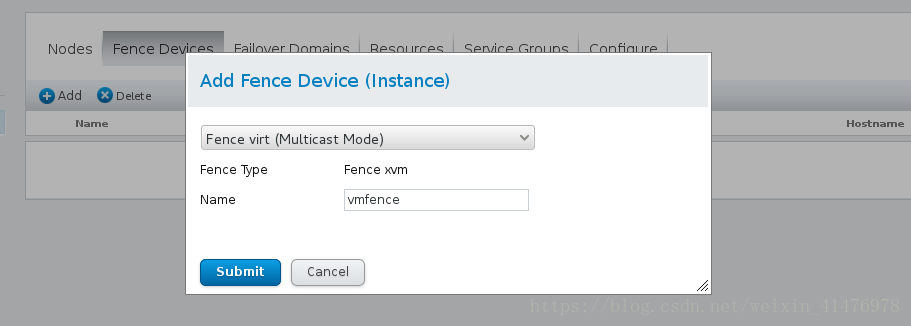

Fence Devices settings:

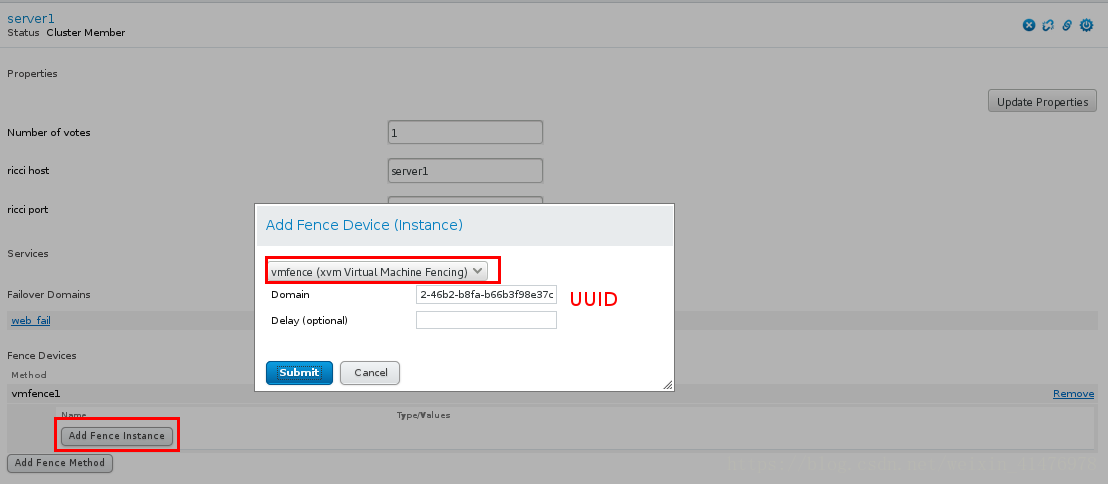

Add a Fence Method to each node:

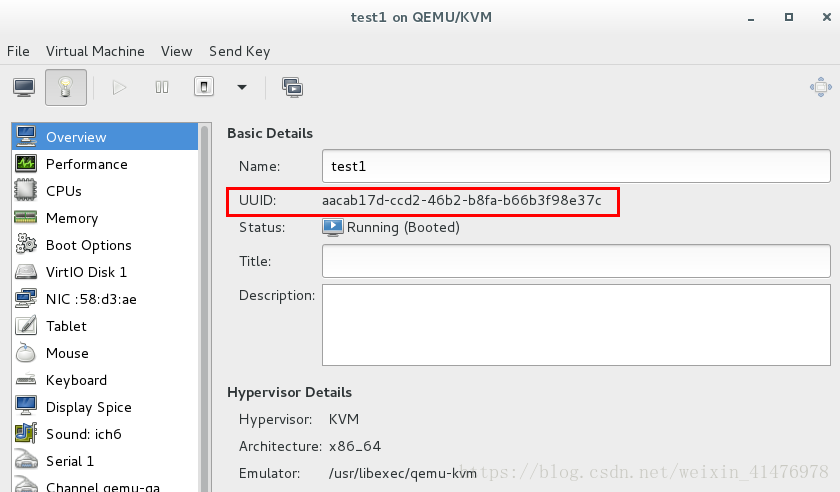

Look up server1's UUID, which identifies the VM in the node's fence instance:
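On the physical host, virsh domuuid prints a VM's UUID. The sketch below adds a hypothetical is_uuid format check before you paste the value into luci; the libvirt domain name server1 is an assumption (use whatever name virsh list shows). Once fence_virtd is running, fence_xvm -o list on a cluster node should list the host's VMs, which is a quick way to confirm that the key and multicast settings work.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a string looks like a libvirt domain UUID
# (8-4-4-4-12 hexadecimal digits).
is_uuid() {
    printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'
}

# On foundation7 (domain name "server1" is an assumption):
#   uuid=$(virsh domuuid server1)
#   is_uuid "$uuid" && echo "$uuid"
```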

Configure server4 the same way (not shown).

Test: nginx is currently running on server4. Simulate a kernel crash there:

[root@server4 ~]# echo c > /proc/sysrq-trigger

server4 is then fenced (powered off and rebooted), and server1 takes over the nginx service.
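To watch the takeover from the command line instead of refreshing luci, a small polling helper is enough. wait_until is hypothetical, and the clustat pattern in the usage comment (service name, owner and state on one line) is an assumption about the output format, so adjust it to what clustat prints on your system.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command once per second until it succeeds
# or <timeout> seconds have elapsed. Returns 0 on success, 1 on timeout.
wait_until() {
    local timeout=$1 elapsed=0
    shift
    until "$@"; do
        [ "$elapsed" -ge "$timeout" ] && return 1
        sleep 1
        elapsed=$(( elapsed + 1 ))
    done
    return 0
}

# On server1, after crashing server4 (the clustat pattern is an assumption):
#   wait_until 120 sh -c 'clustat | grep -q "service:nginx.*server1.*started"' \
#       && echo "server1 took over nginx"
```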