Machine Learning: XGBoost vs. LightGBM, Comparison and Application

This article uses XGBoost and LightGBM to classify the fashion_mnist dataset.

# Import the required packages
import time

import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from matplotlib import rcParams
import seaborn as sns
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV
import xgboost as xgb
from xgboost import XGBClassifier
import lightgbm as lgb
from lightgbm import LGBMClassifier

# Load the data

import tensorflow as tf

from tensorflow.keras import datasets

fashionMnist = datasets.fashion_mnist

(train_images, train_labels), (test_images, test_labels) = fashionMnist.load_data()

# Visualize the first 20 training images with their class labels
class_names = ['T-shirt/top', 'Trouser', 'Pullover', 'Dress', 'Coat',
               'Sandal', 'Shirt', 'Sneaker', 'Bag', 'Ankle boot']
plt.figure(figsize=(20, 5))
for i in range(20):
    # 2 rows x 10 columns is enough for 20 images
    plt.subplot(2, 10, i + 1)
    plt.xticks([])
    plt.yticks([])
    plt.grid(False)
    plt.imshow(train_images[i], cmap=plt.cm.binary)
    plt.xlabel(class_names[train_labels[i]])
plt.show()

# Data preprocessing: flatten each 28x28 image into a 784-dimensional feature vector

train_images = train_images.reshape(-1,28*28)

test_images = test_images.reshape(-1,28*28)

print(train_images.shape,test_images.shape)
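As a minimal sketch of what the reshape above does, the snippet below applies the same call to a small synthetic array. The array `fake_images` is made up for illustration and only stands in for the real dataset. Each 28x28 image becomes one row of 784 values, and pixel (row, col) lands in column row * 28 + col.

```python
import numpy as np

# Hypothetical stand-in for the dataset: 3 grayscale images of 28x28 pixels.
fake_images = np.arange(3 * 28 * 28).reshape(3, 28, 28)

# Same call as in the preprocessing step: -1 lets NumPy infer the sample count.
flat = fake_images.reshape(-1, 28 * 28)
print(flat.shape)

# Row-major flattening: pixel (row, col) of image n ends up in column row * 28 + col.
n, row, col = 1, 5, 7
same_pixel = bool(flat[n, row * 28 + col] == fake_images[n, row, col])
print(same_pixel)
```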

def show_time(diff):
    """Print an elapsed time given in seconds as HH:MM:SS."""
    # Round once up front so the seconds field can never show 60
    m, s = divmod(int(round(diff)), 60)
    h, m = divmod(m, 60)
    print("Execution Time: " + "{0:02d}:{1:02d}:{2:02d}".format(h, m, s))

training_times = []

testing_times = []

scores = []

# Time the training and testing of a classifier
def training_and_testing(clf, X, y, x_test, y_test):
    print("Training...")
    start = time.time()
    model = clf.fit(X, y)
    end = time.time()
    training_times.append(end - start)
    show_time(end - start)

    print('\nTesting...')
    start = time.time()
    scores.append(accuracy_score(y_test, model.predict(x_test)))
    end = time.time()
    testing_times.append(end - start)
    show_time(end - start)
    return model
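`time.time()` follows the system clock, which can be adjusted while the program runs. A common alternative for measuring intervals is `time.perf_counter()`. The sketch below wraps it in a small context manager. The name `timed` and the `timings` dictionary are hypothetical helpers, not part of the original code.

```python
import time
from contextlib import contextmanager

timings = {}  # hypothetical store: label -> elapsed seconds


@contextmanager
def timed(label):
    """Record how long the enclosed block takes, in seconds."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[label] = time.perf_counter() - start


# Usage: time any block of code, here a short sleep as a placeholder workload.
with timed("sleep"):
    time.sleep(0.05)

print("sleep took {:.3f} s".format(timings["sleep"]))
```

In the function above, the same pattern could wrap `clf.fit(X, y)` and `model.predict(x_test)` in place of the paired `time.time()` calls.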

# Train the XGBoost model
xgb_model = training_and_testing(XGBClassifier(n_estimators=50, max_depth=5),
                                 train_images, train_labels, test_images, test_labels)

# Train the LightGBM model
lgb_model = training_and_testing(LGBMClassifier(n_estimators=50, max_depth=5),
                                 train_images, train_labels, test_images, test_labels)

# Hyperparameter tuning with GridSearchCV
def training_and_testing_with_grid_search(clf, params, X, y, x_test, y_test):
    print("Training with grid search....")
    start = time.time()
    model = GridSearchCV(clf, params, scoring='accuracy', n_jobs=-1, cv=5).fit(X, y).best_estimator_
    end = time.time()
    # Record the search time so that training_times lines up with scores
    training_times.append(end - start)
    show_time(end - start)

    print("Testing with Grid Search...")
    start = time.time()
    scores.append(accuracy_score(y_test, model.predict(x_test)))
    end = time.time()
    testing_times.append(end - start)
    show_time(end - start)
    return model

parm_grid = [{
    'max_depth': [5, 10],
    'n_estimators': [100],
    'learning_rate': [0.05, 0.1],
    'colsample_bytree': [0.8, 0.95],
}]

# Train with grid search on the first 4,000 training samples
xgb_model_gs = training_and_testing_with_grid_search(
    XGBClassifier(random_state=42), params=parm_grid,
    X=train_images[:4000], y=train_labels[:4000],
    x_test=test_images, y_test=test_labels)

lgb_model_gs = training_and_testing_with_grid_search(
    LGBMClassifier(random_state=42), params=parm_grid,
    X=train_images[:4000], y=train_labels[:4000],
    x_test=test_images, y_test=test_labels)
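To get a feel for the cost of the search above, the following sketch counts the model fits it implies. It mirrors `parm_grid` with plain `itertools.product`. The variable names are illustrative, and the count assumes GridSearchCV's default `refit=True`, which adds one final fit of the best candidate on the whole training subset.

```python
from itertools import product

# Same values as parm_grid above, repeated so the snippet is self-contained.
grid = {
    'max_depth': [5, 10],
    'n_estimators': [100],
    'learning_rate': [0.05, 0.1],
    'colsample_bytree': [0.8, 0.95],
}
cv_folds = 5  # matches cv=5 in the GridSearchCV call

# Every combination of parameter values is one candidate model.
candidates = [dict(zip(grid, values)) for values in product(*grid.values())]

# Each candidate is fitted once per fold, plus one refit of the best candidate.
total_fits = len(candidates) * cv_folds + 1

print(len(candidates), "candidates,", total_fits, "fits")
```

Each model type is therefore fitted dozens of times during the search, which is presumably why it runs on a 4,000-sample subset rather than the full training set.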

print(scores,training_times,testing_times)

models = [('XGBoost', xgb_model),
          ('LightGBM', lgb_model),
          ('XGBoost Grid Search', xgb_model_gs),
          ('LightGBM Grid Search', lgb_model_gs)]

def plot_metric(model_scores, score='Accuracy'):
    """Draw a bar chart of one metric per model, with the value above each bar."""
    rcParams['figure.figsize'] = 7, 5
    plt.bar(model_scores['Model'], height=model_scores[score])
    # Bars are placed at x = 0, 1, 2, ... in row order
    for i, v in enumerate(model_scores[score]):
        plt.text(i - 0.25, v + 0.01, "{:.4f}".format(v))
    plt.xlabel('Model')
    plt.ylabel(score)
    plt.xticks(rotation=45)
    plt.show()

# Collect the accuracy of each model and plot the models in descending order
model_scores = pd.DataFrame({
    'Model': [name for name, _ in models],
    'Accuracy': scores,
})
model_scores.sort_values(by='Accuracy', ascending=False, inplace=True)
plot_metric(model_scores)

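The plot shows that tuning hyperparameters does not necessarily give better results. Note, however, that the grid-search models here were trained on only the first 4,000 training samples, while the baseline models used the full training set, so the comparison is not like-for-like.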

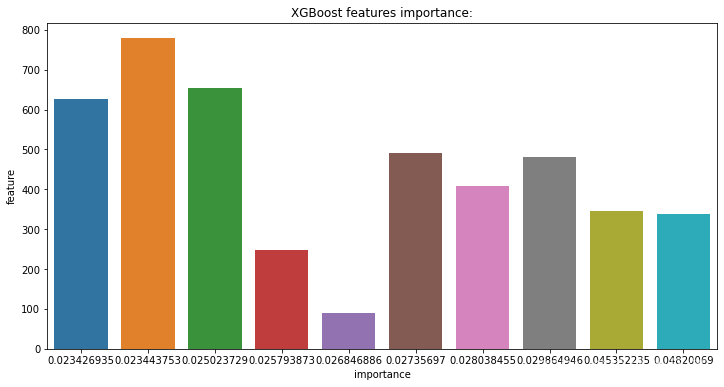

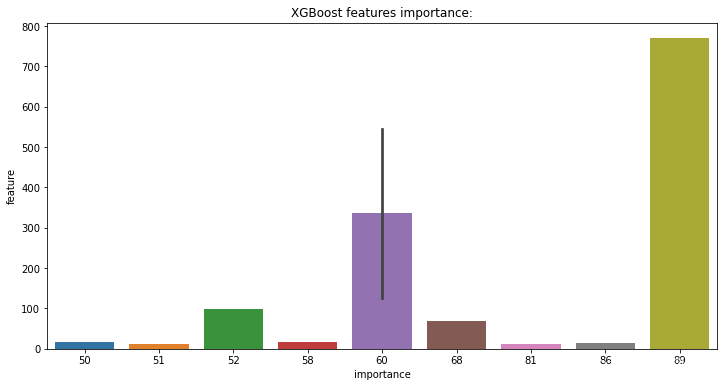

def feature_importances(df, model, model_name, max_num_features=10):
    """Plot the most important features of a fitted model."""
    importances = pd.DataFrame({
        # Column labels are integer pixel indices; cast them to str so that
        # seaborn treats them as categories and draws horizontal bars
        'feature': df.columns.astype(str),
        'importance': model.feature_importances_,
    })
    importances = importances.sort_values(by='importance', ascending=False)
    importances = importances[:max_num_features]
    plt.figure(figsize=(12, 6))
    sns.barplot(x="importance", y="feature", data=importances)
    plt.title(model_name + ' feature importances')
    plt.show()

feature_importances(pd.DataFrame(train_images), xgb_model, 'XGBoost')
feature_importances(pd.DataFrame(train_images), lgb_model, 'LightGBM')

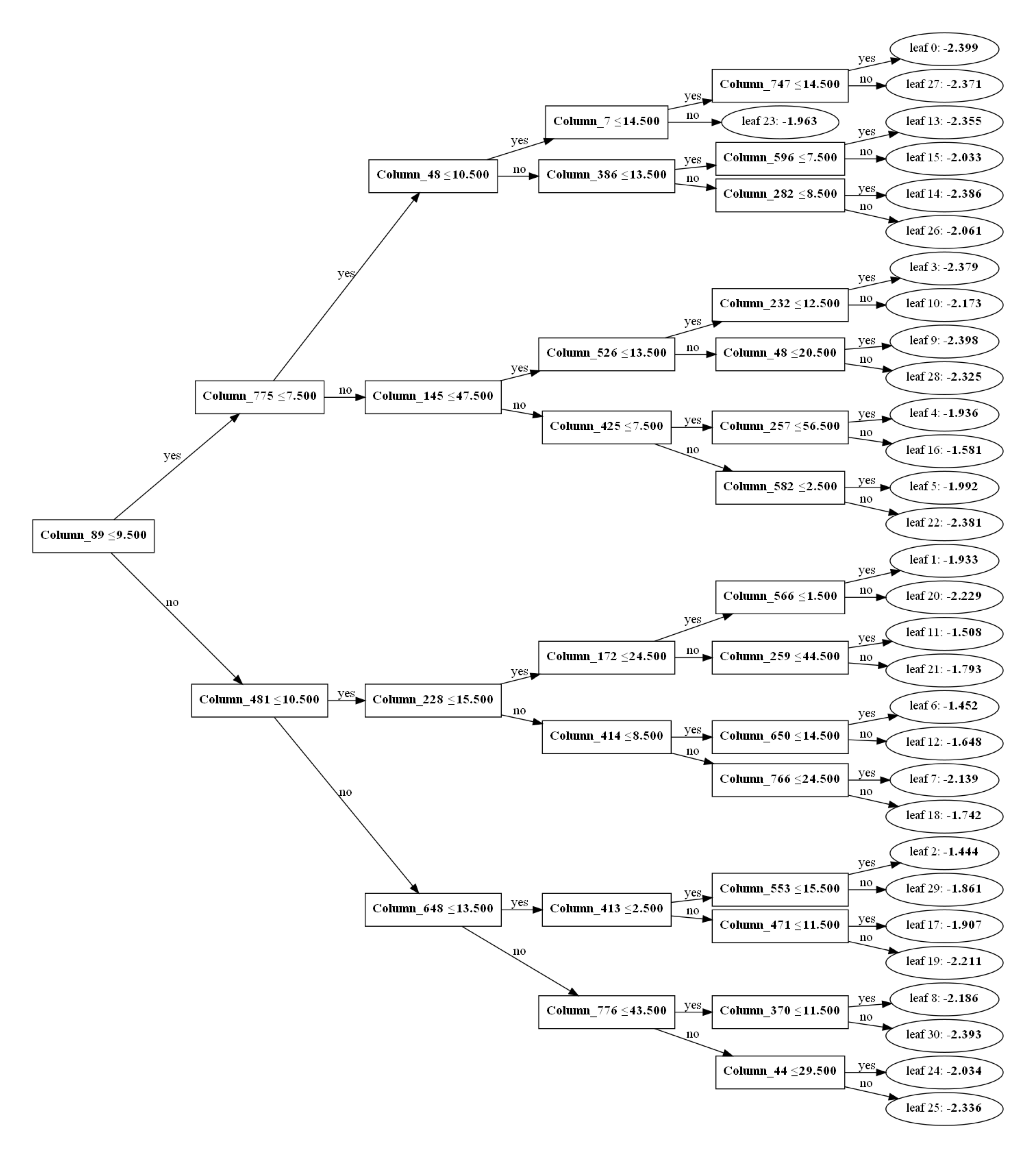
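Because the images were flattened, each feature in the importance plot is a pixel index between 0 and 783. Below is a minimal sketch for mapping an index back to its position in the 28x28 image, assuming the row-major layout produced by `reshape(-1, 28*28)`. The helper name `pixel_position` is hypothetical.

```python
IMAGE_WIDTH = 28  # Fashion-MNIST images are 28x28 pixels


def pixel_position(feature_index, width=IMAGE_WIDTH):
    """Return the (row, col) of a flattened pixel index, assuming row-major order."""
    return divmod(feature_index, width)


# Example indices: the first pixel, a pixel near the center, and the last pixel.
for idx in (0, 406, 783):
    print(idx, "->", pixel_position(idx))
```

Reading the top features this way shows which regions of the image the model relies on most.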

# Plot the first tree of the XGBoost model (plot_tree needs graphviz installed)
xgb.plot_tree(xgb_model)
plt.show()

# Plot the first tree of the LightGBM model (also needs graphviz)
lgb.plot_tree(lgb_model, dpi=800)
plt.show()