《机器学习公式推导与代码实现》学习笔记,记录一下自己的学习过程,详细的内容请大家购买作者的书籍查阅。

GBDT

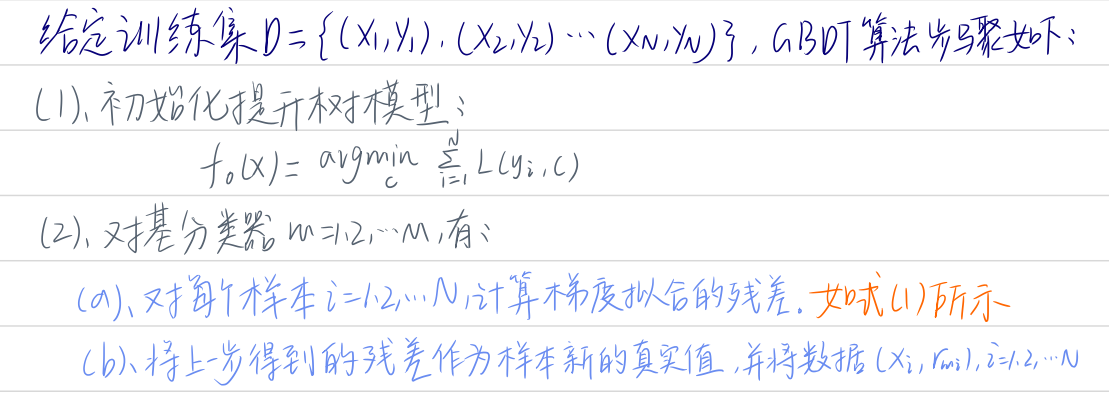

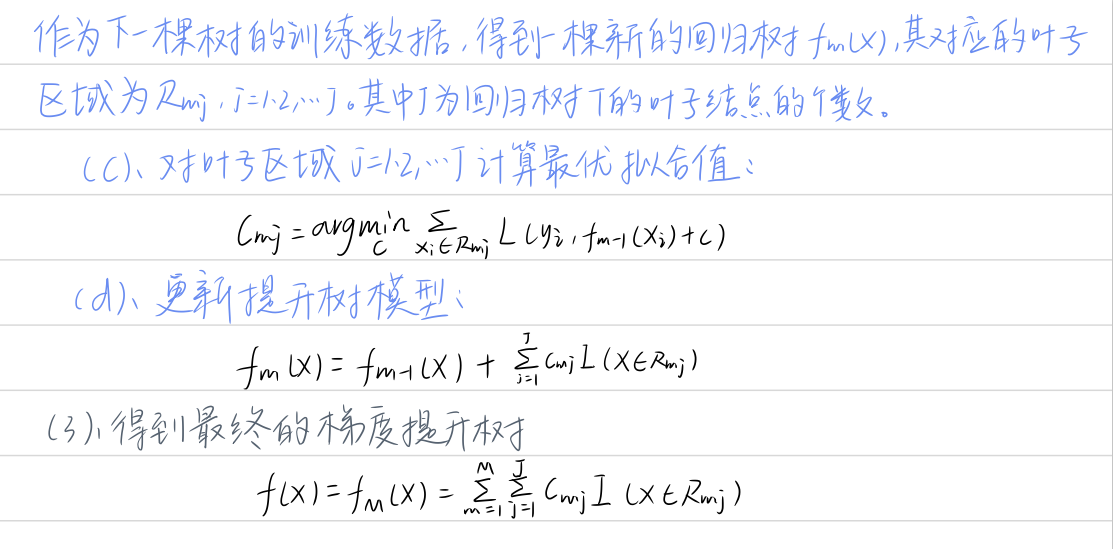

Boosting是一类将弱分类器提升为强分类器的算法总称。提升树(boosting tree)是弱分类器为决策树的提升方法。针对提升树模型,加性模型和前向分步算法的组合是典型的求解方式,当损失函数为平方损失和指数损失时,前向分步算法的每一步迭代都较为容易求解,但如果是一般的损失函数,前向分布算法的每一步迭代并不容易。所以有研究提出使用损失函数的负梯度在当前模型的值来求解更为一般的提升树模型的方法,叫做梯度提升树。

1 GBDT算法介绍

GBDT全称梯度提升决策树(gradient boosting decision tree),其基模型(弱分类器)时CART决策树,对应的梯度提升模型就叫做GBDT,针对回归的问题对应的梯度提升树叫做GBRT(gradient boosting regression tree)。使用多棵决策树组合就是提升树模型,使用梯度下降法对提升树模型进行优化的过程就是梯度提升树模型。大多数情况下,一般损失函数很难直接优化求解,因而就有了基于负梯度求解提升树模型的梯度提升树模型。梯度提升树以梯度下降的方法,使得损失函数的负梯度在当前模型的值作为回归提升树中残差的近似值。

2 GBDT算法实现

import numpy as np

# GBDT损失函数

class SquareLoss:

def loss(self, y, y_pred): # 平方损失函数

return 0.5 * np.power((y - y_pred), 2)

def gradient(self, y, y_pred): # 平方损失的一阶导数

return -(y - y_pred)

我们直接引入CART决策树来定义GBDT类,类属性包括GBDT的一些基本超参数,比如树的棵数、学习率、结点最小分裂样本数、树最大深度等。CART实现

from cart import RegressionTree

# GBDT类定义

class GBDT(object):

def __init__(self, n_estimators, learning_rate, min_samples_split, min_gini_impurity, max_depth, regression):

self.n_estimators = n_estimators # 树的棵树

self.learning_rate = learning_rate # 学习率

self.min_samples_split = min_samples_split # 结点的最小分裂样本数

self.min_gini_impurity = min_gini_impurity # 结点最小基尼不纯度

self.max_depth = max_depth # 最大深度

self.regression = regression # 默认为回归树

self.loss = SquareLoss() # 如果是分类树,需要定义分类树损失函数

self.estimators = []

self.test = RegressionTree(min_samples_split=self.min_samples_split, min_gini_impurity=self.min_gini_impurity, max_depth=self.max_depth)

for _ in range(self.n_estimators):

self.estimators.append(RegressionTree(min_samples_split=self.min_samples_split, min_gini_impurity=self.min_gini_impurity, max_depth=self.max_depth))

def fit(self, X, y):

self.estimators[0].fit(X, y) # 前向分布模型初始化,第一棵树

y_pred = self.estimators[0].predict(X) # 第一棵树的预测结果

for i in range(1, self.n_estimators): # 前向分布迭代训练

gradient = self.loss.gradient(y, y_pred)

self.estimators[i].fit(X, gradient)

y_pred -= np.multiply(self.learning_rate, self.estimators[i].predict(X))

def predict(self, X):

y_pred = self.estimators[0].predict(X) # 回归树预测

for i in range(1, self.n_estimators):

y_pred -= np.multiply(self.learning_rate, self.estimators[i].predict(X))

if not self.regression: # 分类树预测

proba = 1 / (1 + np.exp(-y_pred))

proba = np.vstack([1 - proba, proba]).T

y_pred = np.argmax(proba, axis=1)

return y_pred

实现GBDT与GBRT:

# GBDT

class GBDTClassifier(GBDT):

def __init__(self, n_estimators=2, learning_rate=.5, min_samples_split=2, min_info_gain=999, max_depth=float('inf')):

super(GBDTClassifier, self).__init__(

n_estimators=n_estimators,

learning_rate=learning_rate,

min_samples_split=min_samples_split,

min_gini_impurity=min_info_gain,

max_depth=max_depth,

regression=False

)

# GBRT

class GBDTRegressor(GBDT):

def __init__(self, n_estimators=2, learning_rate=0.1, min_samples_split=3, min_var_reduction=999, max_depth=float('inf')):

super(GBDTRegressor, self).__init__(

n_estimators=n_estimators,

learning_rate=learning_rate,

min_samples_split=min_samples_split,

min_gini_impurity=min_var_reduction,

max_depth=max_depth,

regression=True

)

# 回归树测试

from sklearn.model_selection import train_test_split

from sklearn.metrics import mean_squared_error, accuracy_score

from sklearn.datasets import load_boston

X, y = load_boston(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

model = GBDTRegressor()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

mse = mean_squared_error(y_test, y_pred)

print("Mean Squared Error:", mse)

Mean Squared Error: 72.14748355263158

# 分类树测试

from sklearn.datasets._samples_generator import make_blobs # 导入模拟二分类数据生成模块

X, y = make_blobs(n_samples=150, n_features=2, centers=2, cluster_std=1.2, random_state=40) # 生成模拟二分类数据集

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=40)

model = GBDTClassifier()

model.fit(X_train, y_train)

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(accuracy)

0.9777777777777777

3 基于sklearn实现GBDT与GBRT

# GradientBoostingRegressor

from sklearn.ensemble import GradientBoostingRegressor

X, y = load_boston(return_X_y=True)

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3)

reg = GradientBoostingRegressor(n_estimators=200, learning_rate=0.5, max_depth=4, random_state=0)

reg.fit(X_train, y_train)

y_pred = reg.predict(X_test)

mse = mean_squared_error(y_pred, y_test)

print(mse)

11.275700412479738

# GradientBoostingClassifier

from sklearn.ensemble import GradientBoostingClassifier

X, y = make_blobs(n_samples=150, n_features=2, centers=2, cluster_std=1.2, random_state=40) # 生成模拟二分类数据集

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=40)

cls = GradientBoostingClassifier(n_estimators=200, learning_rate=0.5, max_depth=4, random_state=0)

cls.fit(X_train, y_train)

y_pred = cls.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

print(accuracy)

1.0