1. 引入

上一篇博客《机器学习框架Ray -- 3.2 RayRLlib训练BipedalWalker》,主要针对BipedalWalker普通版进行了训练,相对来讲环境较为简单。训练timesteps在80M时,平均回合奖励就非常稳定了。

BipedalWalkerHardcore环境则相对困难,对于算法、算力提出了更高的要求。Hardcore版本包含梯子、树桩和陷阱,需要在2000个时间步内获得300分。

在本篇博客中,使用双路e5-2596v3的30个核心+2080Ti,计算用时1.18d,经过约500M的timesteps,训练得到的回合平均奖励约为256。

2. 算法实践

1)为了使用BipedalWalker的Hardcore模式,需要在上一篇博客的基础上,进一步注册my_BipedalWalkerHardcore-v0环境。《机器学习框架Ray -- 3.2 RayRLlib训练BipedalWalker》![]() https://blog.csdn.net/wenquantongxin/article/details/130461821

https://blog.csdn.net/wenquantongxin/article/details/130461821

在~/anaconda3/envs/RayRLlib/lib/python3.8/site-packages/gymnasium/envs文件夹下,修改与box2d、my_envs、classic_control并列的__init__.py文件,添加my_BipedalWalkerHardcore-v0的注册内容。

# My self-defined envs reg

# ----------------------------------------

register(

id="my_BipedalWalker-v0",

entry_point="gymnasium.envs.my_envs.my_bipedal_walker:my_BipedalWalker",

max_episode_steps=1600,

reward_threshold=300,

)

register(

id="my_BipedalWalkerHardcore-v0",

entry_point="gymnasium.envs.my_envs.my_bipedal_walker:my_BipedalWalker",

kwargs={"hardcore": True},

max_episode_steps=2000,

reward_threshold=300,

)

2) 进一步优化了超参数的选择

适当降低了replay_buffer_config的capacity,避免过大的经验Buffer对于内存OOM的压力,并且一定程度上可以加快神经网络的优化收敛速度。

提高了rollout_fragment_length的大小,使得神经网络在不明显降低训练速度的基础上能够学习到更为“长期”的策略,利于训练的稳定性,并可以提高收敛速度。

| config | 取值 |

| replay_buffer_config["capacity"] | 500_000 |

| rollout_fragment_length | 64 |

训练代码为

import gymnasium as gym

from ray.rllib.algorithms.apex_ddpg.apex_ddpg import ApexDDPGConfig

from ray.rllib.algorithms.ppo import PPOConfig

from ray.tune.logger import pretty_print

import numpy as np

import math

import time

import os

import random

SCALE = 30.0

ob_low = np.array([-3.14, -5., -5., -5., -3.14, -5., -3.14, -5., 0., -3.14, -5., -3.14, -5., 0., -1., -1., -1., -1., -1., -1., -1., -1., -1., -1. ]) * SCALE * 2

ob_high = np.array([3.14, 5., 5., 5., 3.14, 5., 3.14, 5., 5., 3.14, \

5., 3.14, 5., 5., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1. ]) * SCALE * 2

at_low=np.array([-1.0, -1.0, -1.0, -1.0])

at_high=np.array([1.0, 1.0, 1.0, 1.0])

config = ApexDDPGConfig()

config = (

ApexDDPGConfig()

.framework("torch")

.environment(

env = "my_BipedalWalkerHardcore-v0" ,

observation_space=gym.spaces.Box(low=ob_low , high=ob_high, shape=(24,)),

action_space=gym.spaces.Box(low=at_low, high=at_high, shape=(4,)),

)

.training(use_huber=False,n_step=1)

.rollouts(num_envs_per_worker=30)

.resources(num_gpus=1)

.resources(num_trainer_workers=28)

.rollouts(num_rollout_workers=30)

)

config["actor_hiddens"] = [200, 200]

config["actor_hidden_activation"] = 'relu'

config["actor_lr"] = 0.0001

config["critic_hiddens"] = [200, 200]

config["critic_hidden_activation"] = 'relu'

config["critic_lr"] = 0.0001

config.replay_buffer_config["capacity"] = 500_000 # 500_000_000 occupies 9.75GBx4 memory

config["clip_rewards"] = False

config["lr"] = 0.0001

config["rollout_fragment_length"] = 64 # 10

config["smooth_target_policy"] = True

config["tau"] = 0.005

config["target_network_update_freq"] = 500

config["target_noise"] = 0.3

config["target_noise_clip"] = 0.5

config["train_batch_size"] = 128

config["observation_filter"] = "MeanStdFilter"

print(config.to_dict())

algo = config.build()

for iters in range(1,5001):

result = algo.train()

print("\n当前迭代次数: {}".format(iters))

print("平均reward: {}".format(result['episode_reward_mean']))3)训练结果

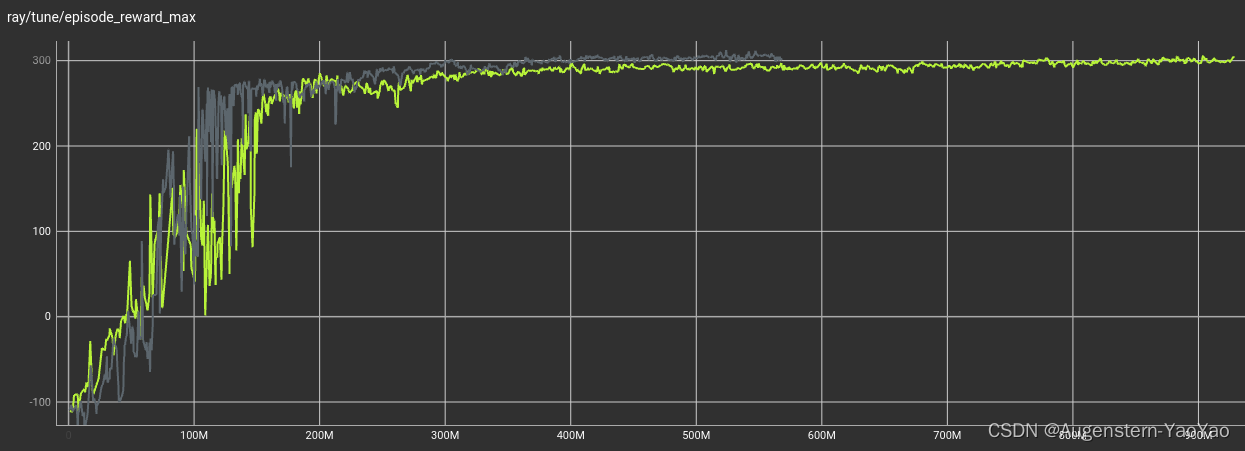

下图给出了训练过程的回合平均奖励值。

其中,深色线条对应的训练为replay_buffer_config["capacity"]取5_000_000导致OOM而中途计算失败。

实测replay_buffer_config["capacity"]取5_000_000时,训练后期内存占用接近100G;取500_000时,内存占用小于40G。

上图中,亮黄色训练记录的即为上述python代码的训练结果。其回合最大奖励值,在大于300M的timesteps即大约280。

当然,代码中所给的超参数有很大改进空间,本人没有详细调整。比如学习率、tau都可以稍微提高,神经网络结构可以适当缩减,以进一步提高收敛的速度。

3.其他注意事项

在RayRLlib库中,参考以下链接

Saving and Loading your RL Algorithms and Policies![]() https://docs.ray.io/en/latest/rllib/rllib-saving-and-loading-algos-and-policies.html给定了以下方法用于载入、恢复或重启算法的训练

https://docs.ray.io/en/latest/rllib/rllib-saving-and-loading-algos-and-policies.html给定了以下方法用于载入、恢复或重启算法的训练

- .save()方法用于储存训练中的checkpoints

- .from_checkpoint()用于从指定位置恢复algorithms或policy

-

compute_single_action(observation)用于从恢复的policy中进行单步推理

似乎RayRLlib库对于Apex-DDPG算法的Checkpoint恢复存在Bug,奖励值在Checkpoint处重新启动训练后断崖式下降;可以进行单步推理,但是效果远远差于训练过程中的奖励值。

以下代码为CartPole-v1 训练+Checkpoint评估,在该环境下运行效果非常好。但是,迁移到本文所述的Apex-DDPG后存在问题,还需要寻找解决方法。

# Create a PPO algorithm object using a config object ..

from ray.rllib.algorithms.ppo import PPOConfig

my_ppo_config = PPOConfig().environment("CartPole-v1")

my_ppo = my_ppo_config.build()

# 训练Agent

for iters in range(0,50):

result = my_ppo.train()

#print(pretty_print(result))

print("\n当前迭代次数: {}".format(iters))

print("平均reward: {}".format(result['episode_reward_mean']))

# .. and call `save()` to create a checkpoint.

path_to_checkpoint = my_ppo.save()

print(

"An Algorithm checkpoint has been created inside directory: "

f"'{path_to_checkpoint}'."

)

# Let's terminate the algo for demonstration purposes.

my_ppo.stop()单步推理

import numpy as np

from ray.rllib.policy.policy import Policy

# Use the `from_checkpoint` utility of the Policy class:

my_restored_policy = Policy.from_checkpoint("/home/yaoyao/ray_results/PPO_CartPole-v1_2023-05-04_03-09-142sowg3d5/checkpoint_000050/policies/default_policy")

# Use the restored policy for serving actions.

obs = np.array([0.0, 0.1, 0.2, 0.3]) # individual CartPole observation

# action = my_restored_policy.compute_single_action(obs)

action = my_restored_policy.compute_single_action(obs)

print(f"Computed action \n {action} from given CartPole observation.")

完整回合推理

import time

import gymnasium as gym

import numpy as np

from ray.rllib.policy.policy import Policy

my_restored_policy = Policy.from_checkpoint("/home/yaoyao/ray_results/PPO_CartPole-v1_2023-05-04_03-09-142sowg3d5/checkpoint_000050/policies/default_policy")

def RandomPolicy(observation):

# randomly choose between 0 and 1

action = np.random.choice([0, 1])

return action

def CheckPointPolicy(observation):

action_tuple = my_restored_policy.compute_single_action(observation, explore = False)

action = np.array(action_tuple[0])

return action

# BipedalWalkerHardcore-v3 main function

env = gym.make("CartPole-v1", render_mode = "human")

observation, info = env.reset(seed=42)

for _ in range(200): # 单个episode中的timesteps

env.render()

# action = RandomPolicy(observation)

action = CheckPointPolicy(observation)

observation, reward, terminated, truncated, info = env.step(action)

if terminated or truncated:

observation, info = env.reset()

env.close()