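The United Nations trade statistics database, UNCOMTRADE, is a comprehensive database in which the international customs organization aggregates the import and export figures reported by each of its members, and it is an indispensable data source for international trade analysis. It supports queries under several commodity classification standards, including HS2002, HS1996, HS1992, SITC1, SITC2, SITC3 and SITC4, and covers annual data for more than 250 countries and about five thousand commodities at the 6-digit tariff code level (4-digit codes under the international customs organization's scheme). The data go back as far as 1962, and the total number of trade records exceeds one billion. The official site offers an API that fetches data over HTTP, but it has many problems in practice. This article addresses them in three parts: ① wrap the API so that it looks more like a typical Python data-access API; ② use a PPTP-style approach (a dynamic-IP proxy server) to change the requesting IP and get around UNCOMTRADE's per-IP limits on data retrieval; ③ use multithreading to fetch data for several countries at the same time and speed up extraction.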


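1 Re-wrapping the UNCOMTRADE API

1.1 The original API in brief

In short, you change the parameters in a URL yourself and then use that URL to fetch the data.

URL format: http://comtrade.un.org/api/get?parameters

Parameters:

max: maximum number of records returned (default 100000);

r: reporting area, the reporter country you want;

freq: annual or monthly data (A, M);

ps: the year you want;

px: classification standard, e.g. S3 for the commonly used SITC Revision 3;

p: partner area, the partner country you want; for China's exports to Russia, the reporter is China and the partner is Russia;

rg: imports or exports (1 for imports, 2 for exports);

cc: commodity code;

fmt: output format, csv or json; json is the default (csv was faster in testing);

type: trade type, commodities (C) or services (S);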
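To make the parameter list concrete, here is a minimal sketch that assembles a request URL from those parameters. The helper name `build_comtrade_url` is hypothetical (it is not part of the official API), and the codes 156 (China) and 643 (Russian Federation) are taken from the country dictionary given later in this article. The sketch only builds the string and sends no request.

```python
from urllib.parse import urlencode

# Endpoint as quoted in this article.
BASE_URL = "http://comtrade.un.org/api/get"


def build_comtrade_url(**params):
    """Return the request URL for the given query parameters (hypothetical helper)."""
    return BASE_URL + "?" + urlencode(params)


# China (reporter 156) exporting to Russia (partner 643), annual totals for 2021.
url = build_comtrade_url(
    max=100000, type="C", freq="A", px="S3", ps="2021",
    r="156", p="643", rg="2", cc="TOTAL", fmt="csv",
)
print(url)
```

Keeping the parameters as keyword arguments means a typo in a parameter name is at least visible at the call site rather than buried in a long format string.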

Example:

Data download page:

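1.2 The API wrapped in this article

In practice, requests can fail with errors such as [502 Bad Gateway] or [403 Forbidden], so these cases need to be recorded or handled, for example by catching exceptions. In addition, import data and export data can only be fetched separately; since we fetch data country by country, the wrapped API has to concatenate the import and export data. We also give each fetched dataset a name to make it easier to interpret later, so the wrapped function returns a dictionary of the form {data name (str): data (DataFrame)}.

*Because a proxy will be needed later, proxy support is built in here as well; when no proxy is needed, just set the related parameters to False and None.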
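As a rough illustration of handling such transient errors, the sketch below retries a fetch a bounded number of times instead of retrying forever. It is not the code used in this article: `fetch_with_retry` and `flaky_fetch` are hypothetical names, and the stand-in fetcher simulates two failures so the example runs without network access. In real use the `except` clause would catch `requests.RequestException`.

```python
import time


def fetch_with_retry(fetch, url, max_attempts=3, wait_seconds=0.0):
    """Call fetch(url) until it succeeds or max_attempts is reached.

    Returns the fetched value, or None if every attempt raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception as exc:  # assumption: narrow this to requests.RequestException in real use
            print(f"attempt {attempt} failed: {type(exc).__name__}")
            time.sleep(wait_seconds)
    return None


# Stand-in fetcher that fails twice and then succeeds (for demonstration only).
calls = {"n": 0}


def flaky_fetch(url):
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("502 Bad Gateway")
    return "ok:" + url


result = fetch_with_retry(flaky_fetch, "http://example.invalid/data")
print(result)
```

A bounded loop makes the failure case explicit: the caller receives None and can decide whether to skip the country or try again later.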

Code:

import requests
import time
import pandas as pd
from pandas import json_normalize
import numpy as np
from tqdm import tqdm
from random import randint
import datetime
from io import StringIO

class proxy:
    # Class-level placeholders; __init__ overrides them with the caller's own proxy settings.
    proxyHost = "your proxyHost"
    proxyPort = "your proxyPort"
    proxyUser = "your proxyUser"
    proxyPass = "your proxyPass"
    user_agents = []
    proxies = {}

    def __init__(self, proxyHost, proxyPort, proxyUser, proxyPass, user_agents):
        self.proxyHost = proxyHost
        self.proxyPort = proxyPort
        self.proxyUser = proxyUser
        self.proxyPass = proxyPass
        self.user_agents = user_agents
        # Build the proxy URL in the form http://user:pass@host:port
        proxyMeta = "http://%(user)s:%(pass)s@%(host)s:%(port)s" % {
            "host": proxyHost,
            "port": proxyPort,
            "user": proxyUser,
            "pass": proxyPass,
        }
        self.proxies = {
            "http": proxyMeta,
            "https": proxyMeta,
        }

def download_url(url, ifuse_proxy = False, proxy = None):
    if ifuse_proxy:
        time.sleep(0.5)  # not needed when this is called from multiple threads
        # choose a user agent from the proxy's user-agent list
        random_agent = proxy.user_agents[randint(0, len(proxy.user_agents) - 1)]
        tunnel = randint(1, 10000)  # generate a tunnel id
        header = {
            "Proxy-Tunnel": str(tunnel),
            "User-Agent": random_agent
        }
        #print(header,proxy.proxies)
    # When no proxy is used, retries must not pass a proxy object either.
    retry_proxy = proxy if ifuse_proxy else None
    try:
        if ifuse_proxy:
            content = requests.get(url, timeout=100, headers=header, proxies=proxy.proxies)
        else:
            content = requests.get(url, timeout=100, proxies=proxy)
        # Sometimes the response body contains only an error message;
        # the known messages are matched literally as a quick fix.
        known_errors = (
            "<html><body><h1>502 Bad Gateway</h1>\nThe server returned an invalid or incomplete response.\n</body></html>\n",
            "Too Many Requests.\n",
            "{\"Message\":\"An error has occurred.\"}",
        )
        if content.text in known_errors:
            # The ./uncomtrade_data/ directory must already exist.
            with open("./uncomtrade_data/serverError.csv", 'a', encoding="utf-8") as log:
                log.write(str(datetime.datetime.now()) + "," + str(url) + "\n")
            print("\n" + content.content.decode())
            # Retry and return the retry's result (without "return" the caller would get None).
            return download_url(url, ifuse_proxy = ifuse_proxy, proxy = retry_proxy)
        if 'json' in url:
            return json_normalize(content.json()['dataset'])
        elif 'csv' in url:
            return pd.read_csv(StringIO(content.text), on_bad_lines = 'skip')
    except requests.RequestException as e:
        # Log the exception type and the URL, then retry.
        print(type(e).__name__ + " has occurred, change proxy!")
        # if(type(e).__name__=='JSONDecodeError'):
        #     print(content.content)
        with open("./uncomtrade_data/exp.csv", 'a', encoding="utf-8") as log:
            log.write(
                str(datetime.datetime.now()) + "," + str(type(e).__name__) + "," + str(url) + "\n")
        return download_url(url, ifuse_proxy = ifuse_proxy, proxy = retry_proxy)

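def get_data_un_comtrade(max_un = 100000, r = '156', freq = 'A', ps = '2021', px = 'S4', p = 'all', rg = '2', cc = 'TOTAL', fmt = 'json', type_un = 'C', ifuse_proxy = False, proxy = None):
    '''
    max_un: maximum number of records returned (default 100000);
    r: reporting area, the reporter country you want;
    freq: annual or monthly data (A, M);
    ps: the year you want;
    px: classification standard, e.g. S3 for the commonly used SITC Revision 3;
    p: partner area, the partner country you want; for China's exports to Russia, the reporter is China and the partner is Russia;
    rg: imports or exports (1 for imports, 2 for exports);
    cc: commodity code;
    fmt: output format, csv or json; json is the default (csv was faster in testing);
    type_un: trade type, commodities or services;
    ifuse_proxy: whether to use a proxy;
    proxy: proxy information.
    return: {data name: data} as {str: DataFrame}
    '''
    pre_url = "http://comtrade.un.org/api//get/plus?max={}&type={}&freq={}&px={}&ps={}&r={}&p={}&rg={}&cc={}&fmt={}"
    url_use = pre_url.format(max_un, type_un, freq, px, ps, r, p, rg, cc, fmt)
    print("Getting data from:" + url_use)
    data = download_url(url_use, ifuse_proxy = ifuse_proxy, proxy = proxy)
    # rg is normally passed as a string, so compare as strings ('1' means import).
    if str(rg) == '1':
        ex_or_in = 'IMPORT'
    else:
        ex_or_in = 'EXPORT'
    data_name = "_".join(str(x) for x in (ps, r, p, px, cc, ex_or_in, freq))
    return {data_name: data}

Fetching data for different countries also requires each country's code. The official list is published at https://comtrade.un.org/Data/cache/reporterAreas.json ; alternatively, the dictionary below can be used directly: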

countries = {'156': 'China','344': 'China, Hong Kong SAR','446': 'China, Macao SAR',

'4': 'Afghanistan','8': 'Albania','12': 'Algeria','20': 'Andorra','24': 'Angola','660': 'Anguilla','28': 'Antigua and Barbuda','32': 'Argentina','51': 'Armenia',

'533': 'Aruba','36': 'Australia','40': 'Austria','31': 'Azerbaijan','44': 'Bahamas','48': 'Bahrain','50': 'Bangladesh', '52': 'Barbados',

'112': 'Belarus','56': 'Belgium','58': 'Belgium-Luxembourg','84': 'Belize','204': 'Benin','60': 'Bermuda','64': 'Bhutan','68': 'Bolivia (Plurinational State of)','535': 'Bonaire',

'70': 'Bosnia Herzegovina','72': 'Botswana','92': 'Br. Virgin Isds','76': 'Brazil','96': 'Brunei Darussalam','100': 'Bulgaria','854': 'Burkina Faso','108': 'Burundi','132': 'Cabo Verde','116': 'Cambodia',

'120': 'Cameroon','124': 'Canada','136': 'Cayman Isds','140': 'Central African Rep.','148': 'Chad','152': 'Chile',

'170': 'Colombia','174': 'Comoros','178': 'Congo','184': 'Cook Isds','188': 'Costa Rica','384': "Côte d'Ivoire",'191': 'Croatia','192': 'Cuba','531': 'Curaçao','196': 'Cyprus','203': 'Czechia',

'200': 'Czechoslovakia','408': "Dem. People's Rep. of Korea",'180': 'Dem. Rep. of the Congo','208': 'Denmark','262': 'Djibouti','212': 'Dominica','214': 'Dominican Rep.','218': 'Ecuador',

'818': 'Egypt','222': 'El Salvador','226': 'Equatorial Guinea','232': 'Eritrea','233': 'Estonia','231': 'Ethiopia','234': 'Faeroe Isds','238': 'Falkland Isds (Malvinas)','242': 'Fiji','246': 'Finland',

'251': 'France','254': 'French Guiana','258': 'French Polynesia','583': 'FS Micronesia','266': 'Gabon','270': 'Gambia','268': 'Georgia','276': 'Germany','288': 'Ghana','292': 'Gibraltar',

'300': 'Greece','304': 'Greenland','308': 'Grenada','312': 'Guadeloupe','320': 'Guatemala','324': 'Guinea','624': 'Guinea-Bissau','328': 'Guyana','332': 'Haiti','336': 'Holy See (Vatican City State)',

'340': 'Honduras','348': 'Hungary','352': 'Iceland','699': 'India','364': 'Iran','368': 'Iraq','372': 'Ireland','376': 'Israel','381': 'Italy','388': 'Jamaica','392': 'Japan',

'400': 'Jordan','398': 'Kazakhstan','404': 'Kenya','296': 'Kiribati','414': 'Kuwait','417': 'Kyrgyzstan','418': "Lao People's Dem. Rep.",'428': 'Latvia','422': 'Lebanon','426': 'Lesotho',

'430': 'Liberia','434': 'Libya','440': 'Lithuania','442': 'Luxembourg','450': 'Madagascar','454': 'Malawi','458': 'Malaysia','462': 'Maldives','466': 'Mali','470': 'Malta','584': 'Marshall Isds',

'474': 'Martinique','478': 'Mauritania','480': 'Mauritius','175': 'Mayotte','484': 'Mexico','496': 'Mongolia','499': 'Montenegro','500': 'Montserrat','504': 'Morocco','508': 'Mozambique','104': 'Myanmar',

'580': 'N. Mariana Isds','516': 'Namibia','524': 'Nepal','530': 'Neth. Antilles','532': 'Neth. Antilles and Aruba','528': 'Netherlands','540': 'New Caledonia','554': 'New Zealand','558': 'Nicaragua',

'562': 'Niger','566': 'Nigeria','579': 'Norway','512': 'Oman','586': 'Pakistan','585': 'Palau','591': 'Panama','598': 'Papua New Guinea','600': 'Paraguay','459': 'Peninsula Malaysia','604': 'Peru','608': 'Philippines',

'616': 'Poland','620': 'Portugal','634': 'Qatar','410': 'Rep. of Korea','498': 'Rep. of Moldova','638': 'Réunion','642': 'Romania','643': 'Russian Federation','646': 'Rwanda','647': 'Ryukyu Isd','461': 'Sabah',

'652': 'Saint Barthelemy','654': 'Saint Helena','659': 'Saint Kitts and Nevis','662': 'Saint Lucia','534': 'Saint Maarten','666': 'Saint Pierre and Miquelon','670': 'Saint Vincent and the Grenadines',

'882': 'Samoa','674': 'San Marino','678': 'Sao Tome and Principe','457': 'Sarawak','682': 'Saudi Arabia','686': 'Senegal','688': 'Serbia','690': 'Seychelles','694': 'Sierra Leone','702': 'Singapore',

'703': 'Slovakia','705': 'Slovenia','90': 'Solomon Isds','706': 'Somalia','710': 'South Africa','728': 'South Sudan','724': 'Spain','144': 'Sri Lanka','275': 'State of Palestine',

'729': 'Sudan','740': 'Suriname','748': 'Eswatini','752': 'Sweden','757': 'Switzerland','760': 'Syria','762': 'Tajikistan','807': 'North Macedonia','764': 'Thailand','626': 'Timor-Leste',

'768': 'Togo','772': 'Tokelau','776': 'Tonga','780': 'Trinidad and Tobago','788': 'Tunisia','795': 'Turkmenistan','796': 'Turks and Caicos Isds','798': 'Tuvalu','800': 'Uganda',

'804': 'Ukraine','784': 'United Arab Emirates','826': 'United Kingdom','834': 'United Rep. of Tanzania','858': 'Uruguay','850': 'US Virgin Isds','842': 'USA','860': 'Uzbekistan',

'548': 'Vanuatu','862': 'Venezuela','704': 'Viet Nam','876': 'Wallis and Futuna Isds','887': 'Yemen','894': 'Zambia','716': 'Zimbabwe'}
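If you prefer to build this dictionary from the official JSON file instead of hard-coding it, a sketch along the following lines may help. It assumes the file has the shape `{"results": [{"id": ..., "text": ...}, ...]}` and that it contains an aggregate entry with id `all`; both are assumptions to verify against the real file. The function name `parse_reporter_areas` is hypothetical, and the sample string is a tiny stand-in rather than the real payload.

```python
import json

# A small stand-in in the assumed shape of reporterAreas.json (not the real file).
sample = (
    '{"more": false, "results": ['
    '{"id": "all", "text": "All"}, '
    '{"id": "156", "text": "China"}, '
    '{"id": "643", "text": "Russian Federation"}]}'
)


def parse_reporter_areas(payload):
    """Map area code -> area name, skipping the aggregate 'all' entry."""
    entries = json.loads(payload)["results"]
    return {e["id"]: e["text"] for e in entries if e["id"] != "all"}


codes = parse_reporter_areas(sample)
print(codes)
```

In real use the payload would come from an HTTP response body for the URL given above rather than from a literal string.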

An example call to the API above:

# Example of fetching data with the wrapped get_data_un_comtrade() [no dynamic-IP proxy] [no multithreading]
# Example: fetch China's total 2021 merchandise exports to every partner country
temp = get_data_un_comtrade(max_un = 100000,r = '156',freq = 'A',ps = '2021',px = 'S4',p = 'all',rg = '2',cc = 'TOTAL',fmt = 'json',type_un ='C',ifuse_proxy = False ,proxy = None )
temp_name = list(temp.keys())[0]
print("DATA NAME IS "+temp_name)
temp[temp_name]

Output:

Getting data from:http://comtrade.un.org/api//get/plus?max=100000&type=C&freq=A&px=S4&ps=2021&r=156&p=all&rg=2&cc=TOTAL&fmt=json

DATA NAME IS 2021_156_all_S4_TOTAL_EXPORT_A

Out[13]:

| | pfCode | yr | period | periodDesc | aggrLevel | IsLeaf | rgCode | rgDesc | rtCode | rtTitle | ... | qtAltCode | qtAltDesc | TradeQuantity | AltQuantity | NetWeight | GrossWeight | TradeValue | CIFValue | FOBValue | estCode |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 2756859964 | 0.0 | 2.756860e+09 | 4 |
| 1 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 2312182195 | 0.0 | 2.312182e+09 | 4 |
| 2 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 1658462 | 0.0 | 1.658462e+06 | 4 |
| 3 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 3565158772 | 0.0 | 3.565159e+09 | 4 |
| 4 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 276740207 | 0.0 | 2.767402e+08 | 4 |
| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |
| 214 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 1227521463 | 0.0 | 1.227521e+09 | 4 |
| 215 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 1950333100 | 0.0 | 1.950333e+09 | 4 |
| 216 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 88878914 | 0.0 | 8.887891e+07 | 4 |
| 217 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 2714022068 | 0.0 | 2.714022e+09 | 4 |
| 218 | H5 | 2021 | 2021 | 2021 | 5 | 0 | 0 | X | 156 | China | ... | -1 | N/A | 0 | 0.0 | 0 | 0.0 | 43630019204 | 0.0 | 4.363002e+10 | 4 |

219 rows × 35 columns

2. Using a dynamic-IP proxy to fetch large volumes of data

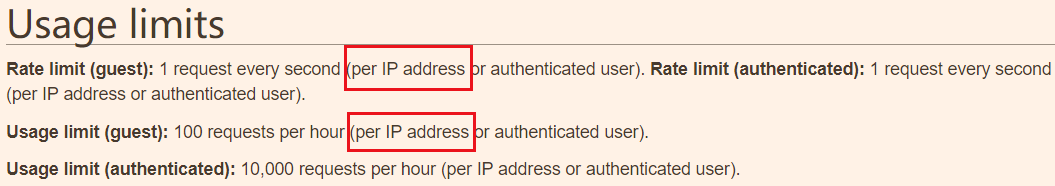

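Because the official service limits how much data a single IP address may fetch, retrieving a large amount of data in one run requires a technique such as PPTP.

It is also advisable to add a User-Agent field to the request headers and set it to a value that looks like a request from a desktop browser such as Chrome or IE. By default the User-Agent identifies the request as coming from Python, and many servers reject such requests outright. A list of candidate values is available at https://download.csdn.net/download/standingflower/86515035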
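A small sketch of the User-Agent idea: pick one browser-like string at random for each request and place it in the headers dictionary. The helper name `make_headers` is hypothetical; the resulting dictionary is the kind of value that can be passed to the `headers` argument of `requests.get`. No request is sent here.

```python
import random


def make_headers(user_agents):
    """Pick one User-Agent at random and return a headers dict (hypothetical helper)."""
    return {"User-Agent": random.choice(user_agents)}


agents = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
    "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52",
]

headers = make_headers(agents)
print(headers)
```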

Example code:

# Example of fetching data with the wrapped get_data_un_comtrade() [with dynamic-IP proxy] [no multithreading]
# Set proxyHost, proxyPort, proxyUser, proxyPass and user_agents according to the proxy you use
proxyHost = "your proxyHost "
proxyPort = "your proxyPort "
proxyUser = "your proxyUser "
proxyPass = "your proxyPass "

user_agents = [

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",

"Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",

"Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",

"Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",

"Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",

"Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",

"Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",

"Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52"]

#初始化proxy实例

proxy_use = proxy(proxyHost, proxyPort, proxyUser, proxyPass, user_agents)

#调用数据获取函数,使用proxy,防止获取数据时ip被封禁

#例子:获取所有国家2021年的贸易数据

num = 0

for key in list(countries.keys()):

print(countries[key]+" BEGINS! TIME:",datetime.datetime.now())

temp_import = get_data_un_comtrade(max_un = 100000,r = key,freq = 'A',ps = '2021',px = 'HS',p = 'all',rg = '1',cc = 'TOTAL',fmt = 'csv',type_un ='C',ifuse_proxy = True ,proxy = proxy_use )

temp_name_import = list(temp_import.keys())[0]

temp_export = get_data_un_comtrade(max_un = 100000,r = key,freq = 'A',ps = '2021',px = 'HS',p = 'all',rg = '2',cc = 'TOTAL',fmt = 'csv',type_un ='C',ifuse_proxy = True ,proxy = proxy_use )

temp_name_export = list(temp_export.keys())[0]

if((temp_import[temp_name_import] is not None) or (temp_export[temp_name_export] is not None)):

temp_data = pd.concat([temp_import[temp_name_import],temp_export[temp_name_export]],axis=0)

num = num +1

if(not temp_data.empty):

temp_data.to_excel("./uncomtrade_data/uncomtrade_data_test2/"+countries[key]+".xlsx")

print("DATA NAME IS "+temp_name_import+" and "+temp_name_export + ". COMPLETED! "+ str(len(list(countries.keys()))-num)+" remains!")

else:

print(temp_name_import +" or "+temp_name_export+" is None! SKIP!")

else:

print(temp_name_import +" or "+temp_name_export+" is None! SKIP!")

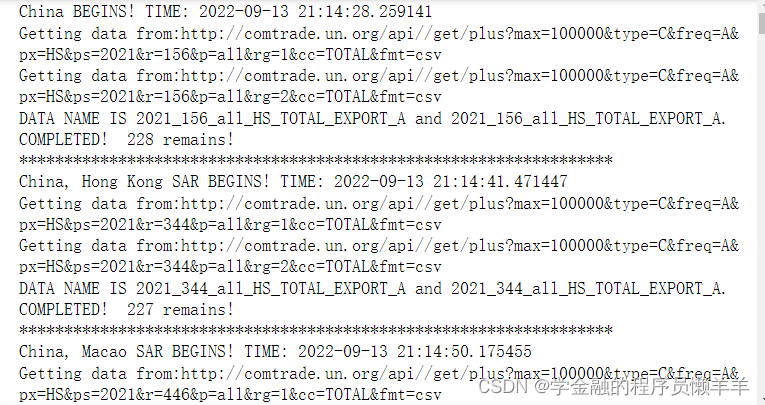

print("******************************************************************")输出:

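3. Using multithreading to speed up data extraction

Running multiple threads on top of the dynamic-IP proxy can greatly speed up data extraction.

The threads here are built by subclassing threading.Thread and overriding its run() method, and a semaphore controls how many threads run at the same time.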
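Before the full download code, here is a stripped-down sketch of that pattern with the download replaced by a short sleep, so it runs without network access. The names `Worker`, `MAX_WORKERS` and `state` are illustrative only. The counter records the highest number of threads that were inside the semaphore at once, which should never exceed the limit.

```python
import threading
import time

MAX_WORKERS = 3  # illustrative limit on simultaneous workers
semaphore = threading.BoundedSemaphore(MAX_WORKERS)
lock = threading.Lock()
state = {"running": 0, "peak": 0}


class Worker(threading.Thread):
    def run(self):
        # At most MAX_WORKERS threads can be inside this block at once.
        with semaphore:
            with lock:
                state["running"] += 1
                state["peak"] = max(state["peak"], state["running"])
            time.sleep(0.01)  # stand-in for a download
            with lock:
                state["running"] -= 1


threads = [Worker() for _ in range(10)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print("peak concurrency:", state["peak"])
```

All ten threads are started immediately, but the semaphore makes the extra ones wait, which is the same arrangement the download code relies on.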

Code:

# Example of fetching data with the wrapped get_data_un_comtrade() [with dynamic-IP proxy] [with multithreading]
# Set proxyHost, proxyPort, proxyUser, proxyPass and user_agents according to the proxy you use
proxyHost = "your proxyHost "
proxyPort = "your proxyPort "
proxyUser = "your proxyUser "
proxyPass = "your proxyPass "

user_agents = [

"Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",

"Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",

"Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",

"Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",

"Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",

"Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",

"Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",

"Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",

"Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",

"Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",

"Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",

"Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52"]

# Create the proxy instance
proxy_use = proxy(proxyHost, proxyPort, proxyUser, proxyPass, user_agents)

import threading
import queue
import random
import time

class DownloadData(threading.Thread):
    all_data = None

    def __init__(self,country_code):
        super().__init__()
        self.country_code = country_code

    def run(self):
        # The module-level semaphore caps how many threads download at the same time.
        with semaphore:
            print(countries[self.country_code]+" BEGINS! TIME:",datetime.datetime.now())
            temp_import = get_data_un_comtrade(max_un = 100000,r = self.country_code,freq = 'A',ps = '2021',px = 'HS',p = 'all',rg = '1',cc = 'TOTAL',fmt = 'csv',type_un ='C',ifuse_proxy = True ,proxy = proxy_use )
            temp_name_import = list(temp_import.keys())[0]
            temp_export = get_data_un_comtrade(max_un = 100000,r = self.country_code,freq = 'A',ps = '2021',px = 'HS',p = 'all',rg = '2',cc = 'TOTAL',fmt = 'csv',type_un ='C',ifuse_proxy = True ,proxy = proxy_use )
            temp_name_export = list(temp_export.keys())[0]
            if((temp_import[temp_name_import] is not None) or (temp_export[temp_name_export] is not None)):
                temp_data = pd.concat([temp_import[temp_name_import],temp_export[temp_name_export]],axis=0)
                if(not temp_data.empty):
                    # The output directory must already exist.
                    temp_data.to_excel("./uncomtrade_data/uncomtrade_data_test3/"+countries[self.country_code]+".xlsx")
                    print("DATA NAME IS "+temp_name_import+" and "+temp_name_export + ". COMPLETED! ")
                else:
                    print(temp_name_import +" or "+temp_name_export+" is None! SKIP!")
            else:
                print(temp_name_import +" or "+temp_name_export+" is None! SKIP!")
            return

thread_list = []  # list that collects the threads
MAX_THREAD_NUM = 5  # maximum number of threads running at once
semaphore = threading.BoundedSemaphore(MAX_THREAD_NUM)  # Semaphore would also work
for country_code in countries.keys():
    m = DownloadData(country_code)
    thread_list.append(m)
for m in thread_list:
    m.start()  # start the thread
for m in thread_list:
    m.join()  # make the main thread wait until every worker thread has finished

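The more threads you are able to run, the greater the speed-up, and the downloaded data is identical to the data obtained without multithreading.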
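As an alternative to managing threads and a semaphore by hand, the standard library's `concurrent.futures.ThreadPoolExecutor` offers a similar cap on concurrency. This is a different technique from the one used in this article, shown only as a sketch: `download_country` is a hypothetical stand-in that returns a label instead of downloading anything.

```python
from concurrent.futures import ThreadPoolExecutor


def download_country(code):
    """Stand-in for the per-country download; returns a label only."""
    return f"done:{code}"


codes = ["156", "643", "842"]  # China, Russian Federation, USA

# max_workers plays the role of the semaphore limit.
with ThreadPoolExecutor(max_workers=5) as pool:
    results = list(pool.map(download_country, codes))

print(results)
```

`pool.map` returns results in the same order as the input codes, which makes it easy to pair each result with its country.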

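4. A few points worth noting

(1) In testing, fetching data in csv format was much faster than json;

(2) Taiwan's data is under code 490, "Other Asia, nes";

(3) A reporter country can only be specified by its numeric code; the list of reporter codes is at https://comtrade.un.org/Data/cache/reporterAreas.json

(4) A partner country can likewise only be specified by its numeric code; the list of partner codes is at https://comtrade.un.org/Data/cache/partnerAreas.json

(5) The HS codes for commodities can be looked up at https://comtrade.un.org/Data/cache/classificationHS.json

(6) When the freq parameter is set to M (monthly data), do not choose one of the SITC classifications (ST, S1, S2, … , S4) for the px (classification) parameter: there is no data, and every result will be an empty table.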
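Point (6) can be turned into a small guard that runs before a request is sent. This is only a sketch: the function name `check_freq_px` is hypothetical, and the set of SITC codes follows the list in point (6), assuming the elided entries are S1 through S4.

```python
# SITC classification codes as listed in point (6); S3 is assumed from the ellipsis.
SITC_CODES = {"ST", "S1", "S2", "S3", "S4"}


def check_freq_px(freq, px):
    """Return False for the monthly + SITC combination that yields empty tables."""
    return not (freq == "M" and px in SITC_CODES)


print(check_freq_px("M", "S3"))
print(check_freq_px("A", "S3"))
print(check_freq_px("M", "HS"))
```

Calling the check before building the URL avoids spending a rate-limited request on a combination that is known to return nothing.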