无聊爬爬,仅供学习,无其他用途

这几天在高考派(http://www.gaokaopai.com/)上爬招生信息,其中也绕了不少弯路也学到了许多。

以下为涉及到的模块

import requests

from fake_useragent import UserAgent

from multiprocessing import Process

import urllib.request as r

import threading

import re

import time

import random首先看我们需要爬取的网页

不同学校对应文科理科以及全国各省才能确定招生计划,通过点击搜索可以获取到一个请求页面,是通过ajax实现的

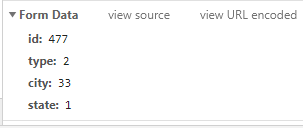

其中发送的数据如下

通过多个页面对比可以知道id指的是学校id,tpye为1或2指的是文科或理科,city自然是城市号,state为1或0表示有或无招生计划。

所以我们需要先获取全部id和city并写入到txt中,这部分通过在首页中使用BeautifulSoup和正则来实现,具体代码很简单,得到学校和对应的url、省份与id号的文本文件:

一共有3054个大学

以及31个省份

然后通过进入各个大学的url中发送请求获取json数据

#获取id列表

def getSchoolIdList():

#...

#获取city列表

def getCityIdList():

# ...

#获取请求数据列表

def getDataList(schoolId,cityId):

dataList = []

for sid in schoolId:

for cid in cityId:

for type in [1, 2]:

dataList.append('id={}&type={}&city={}&state=1'.format(sid, type, cid))

return dataList也就是说一共有3054x31x2=189348个数据需要获取

并且考虑到是大量数据,为了防止被封还得设置代理以及不同的消息头

我是通过向代理网站发送请求获取代理ip(19块一天,不过能无限取),另外在proxies字典中如果是'http'会报错,'http'和'http'都有也有错误,不知为啥,索性从代理网站获取https的ip

#获取代理ip列表,其中有15个ip

def getProxyList():

url1 = 'http://api3.xiguadaili.com/ip/?tid=558070598680507&num=15&delay=1&category=2&protocol=https'

res = requests.get(url1)

proxyList = []

for ip in res.text.split('\r\n'):

proxyList.append(ip)

return proxyList

#随机获取一条代理ip

def getProxies(proxyList):

proxy_ip = random.choice(proxyList)

proxies = {

'https': 'https://' + proxy_ip

}

return proxies

#随机获取一个消息头

def getUserAgent():

h = {

'User-Agent': UserAgent().random,

'X-Requested-With': 'XMLHttpRequest'

}

return h然后就是发送请求了,我是封装在一个线程类中(虽然python的线程比较鸡肋,就纯当练练手)

class SpiderThread(threading.Thread):

def __init__(self,begin,end,name,dataList):

super().__init__()

self.begin=begin

self.end=end

self.name=name

self.dataList=dataList

def run(self):

f3 = open('D:/PyGaokaopai/majorinfo2.txt', 'a', encoding='utf-8')

url = 'http://www.gaokaopai.com/university-ajaxGetMajor.html'

flag=0

num=0

n=0

i=0

h = getUserAgent()

proxyList = getProxyList()

for i in range(int(self.begin), int(self.end)):

flag+=1

n+=1

i+=1

num+=1

data = self.dataList[i].encode()

#每获取5个数据更换一次消息头和代理ip

if(flag == 5):

h = getUserAgent()

proxies = getProxies(proxyList)

flag = 0

time.sleep(0.4)

#每获取50个数据等两秒更换一次代理ip列表

if(i==50):

time.sleep(2)

proxyList = getProxyList()

i=0

#发送请求数据,并且获取数据写入txt

write2txt(url,data,h,proxyList,f3,num,self.name)接下来就是这个write2txt的方法了,由于不能保证ip质量,所以我们应该在获取数据的时候设置一个timeout,超时则更换一个ip发送请求;其次通过测试如果网站在同时间获取到大量请求会获取一个错误的页面的代码,这时候获取的数据就有误了。我第一次爬的时候就获取到了大量错误页面的代码

大概到第2000多次就错误了,所以当获取错误数据时应该等一会再获取

所以为了防止以上两个错误发生,这个write2txt的方法应该有递归,而且是两个

def write2txt(url,data,h,proxyList,f3,num,name):

try:

proxies = getProxies(proxyList)

httpproxy_handler = r.ProxyHandler(proxies)

opener = r.build_opener(httpproxy_handler)

req = r.Request(url, data=data, headers=h)

data1 = opener.open(req, timeout=2).read().decode('utf-8', 'ignore')

#通过观察,错误的数据是错误页面的html代码,等待3秒再递归

if(str(data1).startswith('<!DOCTYPE html>')):

time.sleep(3)

write2txt(url,data,h,proxyList,f3,num,name)

data1=''

f3.write(data1 + '\r\n')

print(str(num),name)

#超时则递归

except Exception as e:

time.sleep(1)

write2txt(url, data, h, proxyList, f3, num,name)因为在操作错误代码的逻辑中把获取到的data设置为空字符串了,所以还是会写入换行符,所以这个文本文件之后还是要操作一下

最后,提高效率通过使用多进程来实现

def getThread(i,name,dataList):

t1=SpiderThread(str(int(i)*3054),str(int(i)*3054+1527),name+'.t1',dataList)

t2 = SpiderThread(str(int(i)*3054+1527), str((int(i)+1)*3054), name+'.t2', dataList)

t1.start()

time.sleep(1.5)

t2.start()

if __name__ == '__main__':

schoolId = getSchoolIdList()

cityId = getCityIdList()

dataList = getDataList(schoolId,cityId)

for i in range(62):

p = Process(target=getThread, args=(i,'P'+str(i),dataList))

p.start()

time.sleep(1.5)

p.join()我的想法是一共62个进程,每个进程分为2个线程,每个线程发送1527个请求

其中有state为0 的数据,也就是没有招生计划,程序大概跑了两小时

并且由于错误页面那块的逻辑,会有空行:

所以这个文本还是要通过正则来处理一下

import re

f=open('D:/PyGaokaopai/majorinfo2.txt','r',encoding='utf-8')

list = re.findall('\{\"data.*?\"status\":1\}',f.read())

f1=open('D:/PyGaokaopai/majorinfo.txt','w',encoding='utf-8')

for i in range(len(list)):

f1.write(list[i]+'\n')

f.close()

f1.close()最后得到一个 243M 的文本文件,一共有 61553个有效数据

然后就是数据处理了:D

完整代码(除去获取id,city文本代码以及有效数据处理)

import requests

from fake_useragent import UserAgent

import re

import urllib.request as r

import threading

import time

import random

from multiprocessing import Process

#获取id列表

def getSchoolIdList():

f1 = open('schoolurl.txt', 'r', encoding='utf-8')

schoolId = []

while True:

try:

line = f1.readline()

url = line.split(',')[1]

id = re.search('\d+', url).group()

schoolId.append(id)

except Exception as e:

pass

if (not line):

break

f1.close()

return schoolId

#获取city列表

def getCityIdList():

f2 = open('citynumber.txt', 'r', encoding='utf-8')

cityId = []

while True:

try:

line = f2.readline()

url = line.split(',')[1]

id = re.search('\d+', url).group()

cityId.append(id)

except Exception as e:

pass

if (not line):

break

f2.close()

return cityId

#获取请求列表

def getDataList(schoolId,cityId):

dataList = []

for sid in schoolId:

for cid in cityId:

for type in [1, 2]:

dataList.append('id={}&type={}&city={}&state=1'.format(sid, type, cid))

return dataList

#获取代理ip列表

def getProxyList():

url1 = 'http://api3.xiguadaili.com/ip/?tid=558070598680507&num=15&delay=1&category=2&protocol=https'

res = requests.get(url1)

proxyList = []

for ip in res.text.split('\r\n'):

proxyList.append(ip)

return proxyList

#随机获取一条代理ip

def getProxies(proxyList):

proxy_ip = random.choice(proxyList)

proxies = {

'https': 'https://' + proxy_ip

}

return proxies

#随机获取一个消息头

def getUserAgent():

h = {

'User-Agent': UserAgent().random,

'X-Requested-With': 'XMLHttpRequest'

}

return h

class SpiderThread(threading.Thread):

def __init__(self,begin,end,name,dataList):

super().__init__()

self.begin=begin

self.end=end

self.name=name

self.dataList=dataList

def run(self):

f3 = open('D:/PyGaokaopai/majorinfo2.txt', 'a', encoding='utf-8')

url = 'http://www.gaokaopai.com/university-ajaxGetMajor.html'

flag=0

num=0

n=0

i=0

h = getUserAgent()

proxyList = getProxyList()

for i in range(int(self.begin), int(self.end)):

flag+=1

n+=1

i+=1

num+=1

data = self.dataList[i].encode()

if(flag == 5):

h = getUserAgent()

proxies = getProxies(proxyList)

flag = 0

time.sleep(0.4)

if(i==50):

time.sleep(2)

proxyList = getProxyList()

i=0

write2txt(url,data,h,proxyList,f3,num,self.name)

print(self.name+' finished-----------------------')

def write2txt(url,data,h,proxyList,f3,num,name):

try:

proxies = getProxies(proxyList)

httpproxy_handler = r.ProxyHandler(proxies)

opener = r.build_opener(httpproxy_handler)

req = r.Request(url, data=data, headers=h)

data1 = opener.open(req, timeout=2).read().decode('utf-8', 'ignore')

if(str(data1).startswith('<!DOCTYPE html>')):

time.sleep(3)

write2txt(url,data,h,proxyList,f3,num,name)

data1=''

f3.write(data1 + '\r\n')

print(str(num),name)

except Exception as e:

time.sleep(1)

write2txt(url, data, h, proxyList, f3, num,name)

def getThread(i,name,dataList):

t1=SpiderThread(str(int(i)*3054),str(int(i)*3054+1527),name+'.t1',dataList)

t2 = SpiderThread(str(int(i)*3054+1527), str((int(i)+1)*3054), name+'.t2', dataList)

t1.start()

time.sleep(1.5)

t2.start()

if __name__ == '__main__':

schoolId = getSchoolIdList()

cityId = getCityIdList()

dataList = getDataList(schoolId,cityId)

for i in range(62):

p = Process(target=getThread, args=(i,'P'+str(i),dataList))

p.start()

time.sleep(1.5)

p.join()