Multithreaded Crawlers: Scraping Images

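Introduction

I have spent the last few days studying multithreading. It turned out to be harder than I had imagined, so I tried writing a few crawler examples that use multiple threads.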

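Approach

I won't go into the crawling logic itself, since it is all routine scraping work. The focus here is on the multithreading.

All three crawlers below apply the producer-consumer design pattern.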

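Official definition

The producer-consumer problem, also known as the bounded-buffer problem, is a classic example of a multithreaded synchronization problem. It describes what can go wrong at run time when two threads, the so-called "producer" and "consumer", share a fixed-size buffer. The producer's job is to generate data, put it into the buffer, and repeat. Meanwhile, the consumer takes data out of the buffer. The key to the problem is ensuring that the producer does not add data while the buffer is full and that the consumer does not take data while the buffer is empty.

Source: Baidu Baike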
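
The "full" and "empty" conditions in the definition can be seen directly with Python's `queue.Queue`, which the scripts below use as the buffer. This is only a small sketch: the buffer size of 2 and the items `"a"`, `"b"`, `"c"` are made up for illustration. A plain `put()` or `get()` would block in these situations; the `_nowait` variants raise instead, which makes the condition visible without a second thread.

```python
from queue import Queue, Full, Empty

buffer = Queue(maxsize=2)  # fixed-size buffer holding at most two items
buffer.put("a")
buffer.put("b")

try:
    # The buffer is full: put() would block here, put_nowait() raises Full.
    buffer.put_nowait("c")
    producer_blocked = False
except Full:
    producer_blocked = True

first = buffer.get()   # items come out in FIFO order
second = buffer.get()

try:
    # The buffer is empty: get() would block here, get_nowait() raises Empty.
    buffer.get_nowait()
    consumer_blocked = False
except Empty:
    consumer_blocked = True

print(producer_blocked, first, second, consumer_blocked)
```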

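My understanding

The producer generates data and puts it into a container, while the consumer is responsible for using up the data in that container.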
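
That description can be sketched with one producer thread and one consumer thread. The names `producer`, `consumer`, `run_demo` and the `SENTINEL` marker are hypothetical and not taken from the scripts below; doubling each number simply stands in for "using up" the data.

```python
import threading
from queue import Queue

SENTINEL = None  # hypothetical marker meaning "no more data"


def producer(q, items):
    for item in items:
        q.put(item)      # blocks while the container is full
    q.put(SENTINEL)      # tell the consumer that production is finished


def consumer(q, results):
    while True:
        item = q.get()   # blocks while the container is empty
        if item is SENTINEL:
            break
        results.append(item * 2)  # "consume" the item


def run_demo():
    q = Queue(maxsize=3)
    results = []
    p = threading.Thread(target=producer, args=(q, range(10)))
    c = threading.Thread(target=consumer, args=(q, results))
    p.start()
    c.start()
    p.join()
    c.join()
    return results


if __name__ == "__main__":
    print(run_demo())
```

With several consumers, one sentinel per consumer is needed so that each of them gets the signal to stop.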

Code

(1) Doutula (斗图啦) memes

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    # @Time: 2020/2/3 18:54
    # @Author: Martin
    # @File: doutula_mul.py
    # @Software: PyCharm
    import os
    import re
    import threading
    from queue import Empty, Queue

    import requests
    from lxml import etree

    SAVE_DIR = './result/Expression_Bao_mul'


    class Producer(threading.Thread):
        """Fetches list pages and queues (name, url, suffix) for every image."""

        headers = {
            'Referer': 'http://www.doutula.com/photo/list/?page=1',
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'
        }

        def __init__(self, page_queue, img_queue, *args, **kwargs):
            super(Producer, self).__init__(*args, **kwargs)
            self.page_queue = page_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                # get_nowait() instead of empty() + get(): another producer may
                # take the last page between the two calls and get() would hang.
                try:
                    url = self.page_queue.get_nowait()
                except Empty:
                    break
                self.parse_page(url)
                print("Producer: %d %d" % (self.page_queue.qsize(), self.img_queue.qsize()))

        def parse_page(self, url):
            try:
                r = requests.get(url, headers=self.headers, timeout=10)
            except requests.RequestException:
                print("Request failed!")
                return
            html = etree.HTML(r.text)
            imgs = html.xpath("//a[@class='col-xs-6 col-sm-3']/img")
            for img in imgs:
                img_url_list = img.xpath("@data-original")
                img_name_list = img.xpath("@alt")
                if not img_url_list or not img_name_list:
                    continue
                img_url = img_url_list[0]
                suffix = os.path.splitext(img_url)[1]
                # Drop non-word characters so the alt text is usable as a file name
                img_name = re.sub(r'\W', "", img_name_list[0])
                if img_name and suffix:
                    self.img_queue.put((img_name, img_url, suffix))


    class Consumer(threading.Thread):
        """Downloads images from img_queue until it receives None."""

        def __init__(self, page_queue, img_queue, *args, **kwargs):
            super(Consumer, self).__init__(*args, **kwargs)
            self.page_queue = page_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                item = self.img_queue.get()
                if item is None:  # sentinel put by main() once all producers are done
                    break
                img_name, img_url, suffix = item
                try:
                    r = requests.get(img_url, timeout=10)
                except requests.RequestException:
                    print("Request failed!")
                    continue
                with open(os.path.join(SAVE_DIR, img_name + suffix), 'wb') as f:
                    f.write(r.content)
                print(img_name + " downloaded!")
                print("Consumer: %d %d" % (self.page_queue.qsize(), self.img_queue.qsize()))


    def main():
        page_queue = Queue(100)
        img_queue = Queue(1000)
        os.makedirs(SAVE_DIR, exist_ok=True)
        url = 'http://www.doutula.com/photo/list/?page=%d'
        for i in range(1, 101):
            page_queue.put(url % i)
        producers = [Producer(page_queue, img_queue) for _ in range(5)]
        consumers = [Consumer(page_queue, img_queue) for _ in range(5)]
        for t in producers + consumers:
            t.start()
        # Checking empty() on both queues can stop consumers while a producer is
        # still parsing, so wait for the producers and then send one None each.
        for t in producers:
            t.join()
        for _ in consumers:
            img_queue.put(None)


    if __name__ == '__main__':
        main()

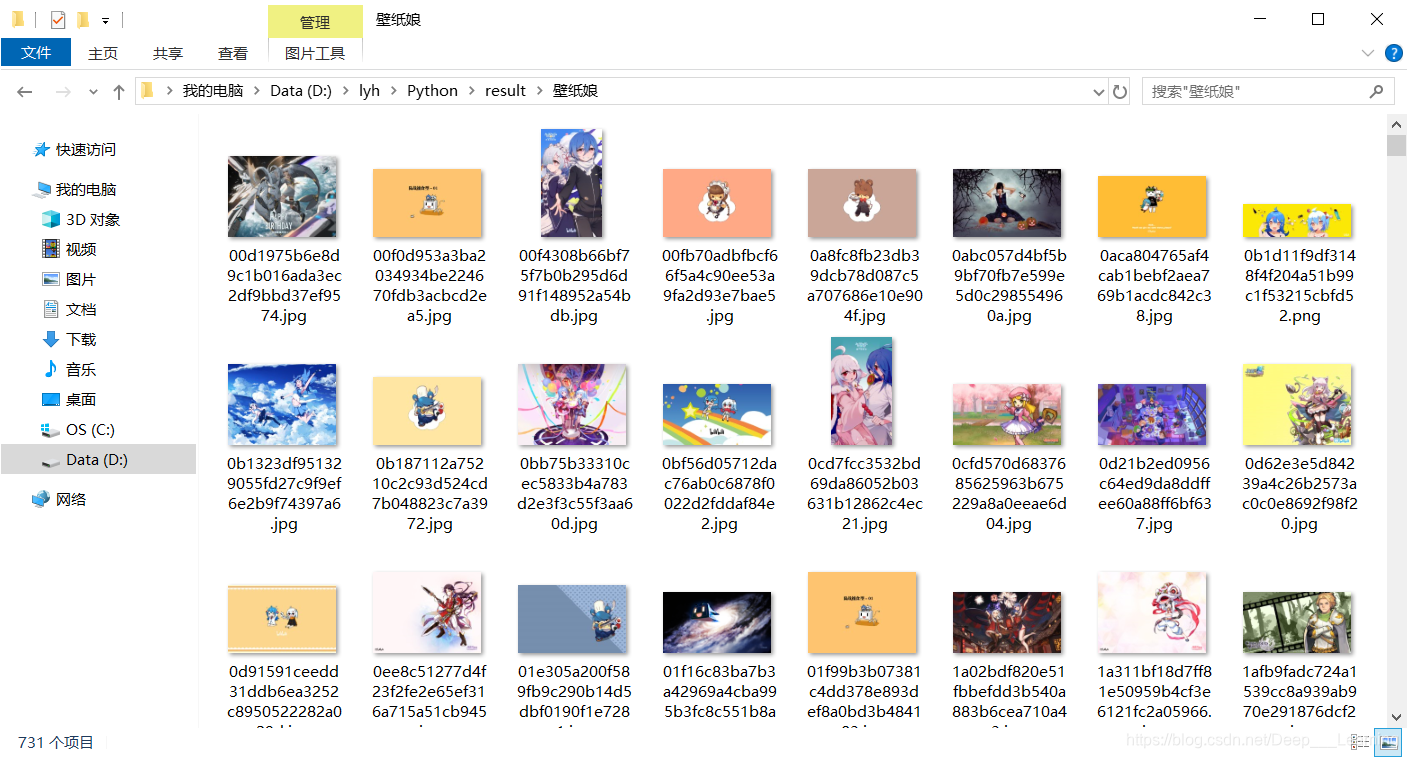
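
The file-name handling in the Doutula script (strip non-word characters from the `alt` text, take the extension from the image URL) can be tried on its own without any network access. The helper name `build_filename` and the `img.example.com` URLs are made up for this sketch. Parsing the URL with `urlparse` first is an addition of mine, not something the script does; it keeps a query string out of the extension.

```python
import os
import re
from urllib.parse import urlparse


def build_filename(alt_text, img_url):
    """Return a file name from the alt text and the URL's extension, or None."""
    # \W matches anything that is not a letter, digit or underscore;
    # with str patterns this is Unicode-aware, so Chinese characters are kept.
    name = re.sub(r"\W", "", alt_text)
    # Use only the path part of the URL so "?v=2" does not end up in the suffix.
    suffix = os.path.splitext(urlparse(img_url).path)[1]
    if not name or not suffix:
        return None
    return name + suffix


print(build_filename("funny cat!", "http://img.example.com/2020/cat.jpg?v=2"))
print(build_filename("???", "http://img.example.com/2020/cat.jpg"))
```

The first call prints `funnycat.jpg`; the second prints `None` because nothing is left of the name once the punctuation is removed, which is the case the script skips.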

(2) Album of the Bilibili account 壁纸娘

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    # @Time: 2020/2/28 22:12
    # @Author: Martin
    # @File: Bilibili_wallpaper.py
    # @Software: PyCharm
    import json
    import os
    import threading
    from queue import Empty, Queue
    from urllib import request

    import requests

    SAVE_DIR = './result/壁纸娘/'


    class Producer(threading.Thread):
        """Fetches album pages from the API and queues every picture URL."""

        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'
        }

        def __init__(self, url_queue, img_queue, *args, **kwargs):
            super(Producer, self).__init__(*args, **kwargs)
            self.url_queue = url_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                # get_nowait() avoids hanging if another producer takes the last URL
                try:
                    url = self.url_queue.get_nowait()
                except Empty:
                    break
                self.parse_data(url)
                print("Producer: %d %d" % (self.url_queue.qsize(), self.img_queue.qsize()))

        def parse_data(self, url):
            try:
                r = requests.get(url, headers=self.headers, timeout=10)
                items = json.loads(r.text)['data']['items']
            except (requests.RequestException, ValueError, KeyError, TypeError) as e:
                # A failed or malformed page should not kill the whole thread
                print(e)
                return
            for item in items:
                for picture in item['pictures']:
                    if picture['img_src']:
                        self.img_queue.put(picture['img_src'])


    class Consumer(threading.Thread):
        """Downloads picture URLs from img_queue until it receives None."""

        def __init__(self, url_queue, img_queue, *args, **kwargs):
            super(Consumer, self).__init__(*args, **kwargs)
            self.url_queue = url_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                url = self.img_queue.get()
                if url is None:  # sentinel put by main() once all producers are done
                    break
                name = url.split('/')[-1]
                try:
                    request.urlretrieve(url, SAVE_DIR + name)
                    print(name + ' downloaded!')
                except Exception as e:
                    print(e)
                    print(name + ' download failed!')
                print("Consumer: %d %d" % (self.url_queue.qsize(), self.img_queue.qsize()))


    def main():
        url_queue = Queue(11)
        img_queue = Queue(1000)
        os.makedirs(SAVE_DIR, exist_ok=True)
        for i in range(11):
            url = 'https://api.vc.bilibili.com/link_draw/v1/doc/doc_list?uid=6823116&page_num=%s&page_size=30&biz=all' % i
            url_queue.put(url)
        producers = [Producer(url_queue, img_queue) for _ in range(5)]
        consumers = [Consumer(url_queue, img_queue) for _ in range(5)]
        for t in producers + consumers:
            t.start()
        # Wait until every page is parsed, then send one None per consumer
        for t in producers:
            t.join()
        for _ in consumers:
            img_queue.put(None)


    if __name__ == '__main__':
        main()
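
The parsing step of the Bilibili script walks a nested JSON structure (`data`, then `items`, then `pictures`, then `img_src`). The sketch below runs the same walk offline. `SAMPLE_RESPONSE` is invented: it only mirrors the keys the script accesses and is not a real API response, and the helper names `extract_image_urls` and `filename_from_url` are hypothetical.

```python
import json

# Made-up payload that only mirrors the keys the script reads.
SAMPLE_RESPONSE = """
{"data": {"items": [
  {"pictures": [{"img_src": "https://img.example.com/a.jpg"},
                {"img_src": "https://img.example.com/b.png"}]},
  {"pictures": []},
  {"pictures": [{"img_src": "https://img.example.com/c.jpg"}]}
]}}
"""


def extract_image_urls(text):
    """Collect every img_src in the response, tolerating missing keys."""
    data = json.loads(text).get("data") or {}
    urls = []
    for item in data.get("items") or []:
        for picture in item.get("pictures") or []:
            src = picture.get("img_src")
            if src:
                urls.append(src)
    return urls


def filename_from_url(url):
    """Same rule as the script: the file name is the last path segment."""
    return url.split("/")[-1]


for url in extract_image_urls(SAMPLE_RESPONSE):
    print(filename_from_url(url), "<-", url)
```

Using `.get(...) or []` instead of direct indexing is a design choice for the sketch: a page with no `items` then yields an empty list rather than raising an exception inside a worker thread.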

(3) Article cover images from a blog

    #!/usr/bin/env python
    # -*- coding: utf-8 -*-
    # @Time: 2020/2/28 9:15
    # @Author: Martin
    # @File: xiaoyou_mul.py
    # @Software: PyCharm
    import json
    import os
    import threading
    from queue import Empty, Queue

    import requests

    SAVE_DIR = './result/封面图片(mul)/'


    class Spider(threading.Thread):
        """Producer: fetches post-list pages and queues each post's cover image URL."""

        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/79.0.3945.130 Safari/537.36'
        }

        def __init__(self, page_queue, img_queue, *args, **kwargs):
            super(Spider, self).__init__(*args, **kwargs)
            self.page_queue = page_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                # get_nowait() avoids hanging if another spider takes the last page
                try:
                    url = self.page_queue.get_nowait()
                except Empty:
                    break
                self.parse_page(url)
                print("Producer: %d %d" % (self.page_queue.qsize(), self.img_queue.qsize()))

        def parse_page(self, url):
            try:
                r = requests.get(url, headers=self.headers, timeout=10)
                postlist = json.loads(r.text)['data']['Postlist']
                for item in postlist:
                    # Skip posts without a cover; None is reserved as the stop signal
                    if item['image']:
                        self.img_queue.put(item['image'])
            except Exception as e:
                print(e)


    class Saver(threading.Thread):
        """Consumer: downloads image URLs from img_queue until it receives None."""

        def __init__(self, page_queue, img_queue, *args, **kwargs):
            super(Saver, self).__init__(*args, **kwargs)
            self.page_queue = page_queue
            self.img_queue = img_queue

        def run(self):
            while True:
                img_url = self.img_queue.get()
                if img_url is None:  # sentinel put by main() once all spiders are done
                    break
                img_name = img_url.split('/')[-1]
                try:
                    r = requests.get(img_url, timeout=10)
                    with open(SAVE_DIR + img_name, 'wb') as f:
                        f.write(r.content)
                    print(img_name + " downloaded!")
                except Exception as e:
                    print(e)
                    print(img_name + " download failed!")
                print("Consumer: %d %d" % (self.page_queue.qsize(), self.img_queue.qsize()))


    def main():
        page_queue = Queue(30)
        img_queue = Queue(1000)
        os.makedirs(SAVE_DIR, exist_ok=True)
        raw_url = 'https://api.blog.xiaoyou66.com/api/getpostlist?id=%s'
        for i in range(1, 30):
            page_queue.put(raw_url % i)
        spiders = [Spider(page_queue, img_queue) for _ in range(5)]
        savers = [Saver(page_queue, img_queue) for _ in range(5)]
        for t in spiders + savers:
            t.start()
        # Wait until every page is parsed, then send one None per saver
        for t in spiders:
            t.join()
        for _ in savers:
            img_queue.put(None)


    if __name__ == '__main__':
        main()

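Results

Summary

I have heard that multithreading in Python is not very pleasant to work with. When I find the time, I will try the same thing in Java.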
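
On that closing remark: the usual complaint concerns the global interpreter lock (GIL) in standard CPython builds, which lets only one thread run Python bytecode at a time. Blocking I/O releases the lock, so download-heavy crawlers like the ones above can still overlap their waiting. The rough sketch below uses `time.sleep` as a stand-in for a network request (an assumption; nothing is actually downloaded), and the names `fake_download` and `timed_threads` are hypothetical.

```python
import threading
import time


def fake_download(delay):
    # Stand-in for a blocking network request; sleeping releases the GIL.
    time.sleep(delay)


def timed_threads(count, delay):
    """Run `count` simulated downloads in parallel threads; return elapsed seconds."""
    threads = [threading.Thread(target=fake_download, args=(delay,))
               for _ in range(count)]
    start = time.perf_counter()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return time.perf_counter() - start


if __name__ == "__main__":
    elapsed = timed_threads(5, 0.2)
    print("5 simulated downloads took %.2fs" % elapsed)
```

Run one after another, the five waits would take about one second in total; with threads the elapsed time should stay close to a single wait. CPU-bound work such as image processing would not speed up this way.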