基于深度学习的表情识别服务搭建(一)

背景

之前我完成了终端和服务端之间交流的全部内容,接下来我需要完成的是服务端识别线程的功能。完成之后,我们的系统应该就剩下前端和优化工作了

识别服务设计

我们称之为服务,是因为它并不能很好的和我们现有的系统无缝拼接,因为这个模块主要由Python实现,但是我们服务端最多只能是PHP+Java,我们的设计是这个样子的:

- 后台的Java+PHP负责从终端和web端接受音频图片或上传的视频,而识别服务仅仅完成对已有图片的处理

- 后台和识别服务通过网络方式通信,后台将从用户处接受到的文件名发送给识别服务,识别服务打开这些文件,完成识别,并将识别结果以json的格式发送给后台

有了具体框架,我们还需要定义识别服务的功能:

- 找出图片中每一张脸的位置

- 对图片中人脸的朝向进行分析

- 对人脸的表情进行分析

那么我们就清楚了我们如何去实现这一系列功能

实现方式的选择

经过前期组内的研究,人脸识别应该使用caffe,darknet中虽然有相关的网络,但是darknet的输入只能是彩色图片,而人脸表情特征实际上使用黑白图片就能表述清楚,由于对框架了解不够深入,不知如何做出调整,所以我们先使用功能完善,组内熟悉的Caffe完成表情分类

在寻找脸部方位检测方法的时候我们找到了dlib,这个东西其实是挺不错的,有许多功能,包括人脸检测,头部位置检测,鉴于我们不得不使用它,并考虑到组内其他同学调试方便(如果直接使用darknet的话问题会比较有趣,因为组内所有同学中只有我的电脑有独立显卡,而darknet由于不成熟的原因并不支持传统的CPU加速方法,完成一张图片的检测,GPU只需几十毫秒,而CPU需要80秒),我们决定先完全使用dlib+caffe完成一套系统,darknet过一段时间再考虑

总结一下我们的选择:

- 人脸检测:darknet(dlib)

- 头部位置识别:dlib

- 表情分类:caffe

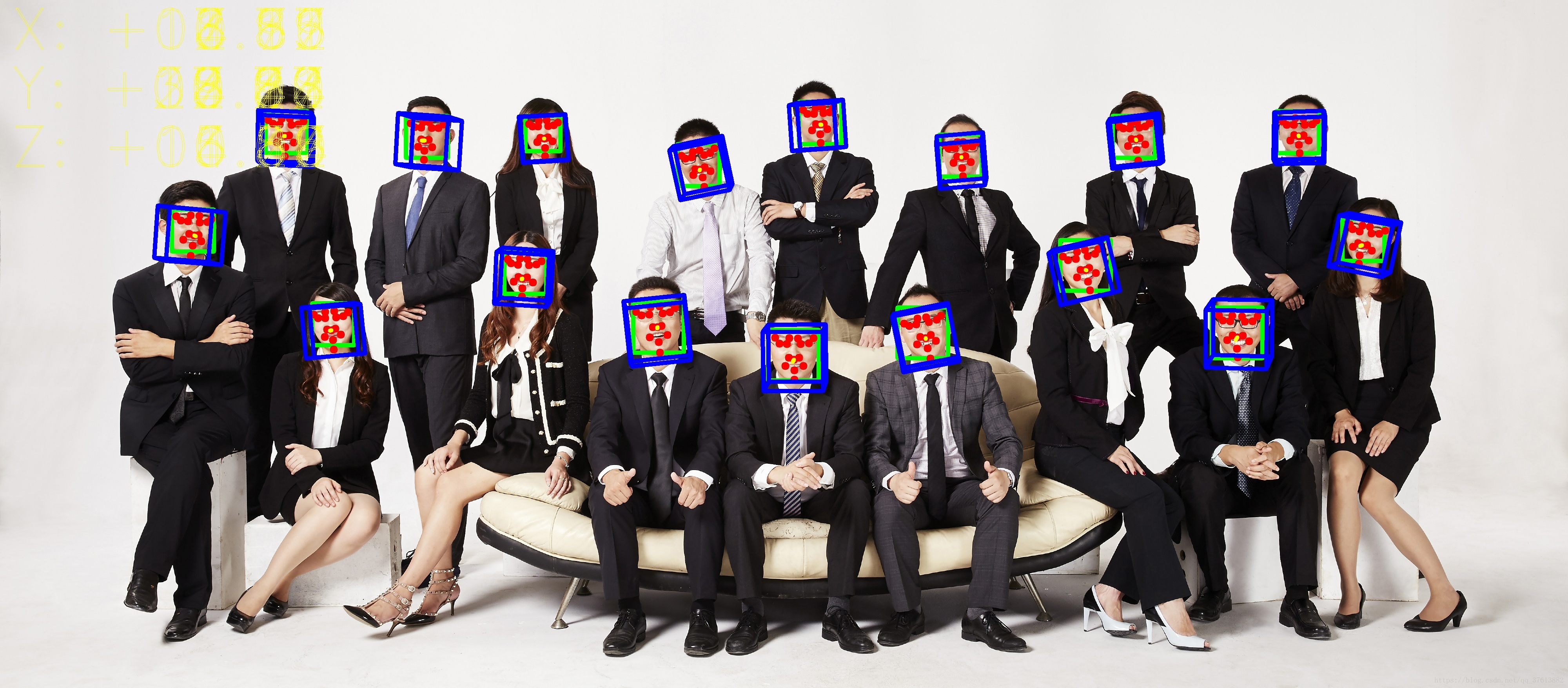

dlib性能验证

经过我们的研究,dlib中人脸检测使用的是图形学方法,这在光线不均匀的情况下效果极差,表现为一下状态:

这样可以很明显的看出问题,但是基于深度学习方法的darknet似乎没有这样的烦恼

原本darknet的人脸检测框的没有这么方正,我对框选算法进行了一下修改,具体改动将在后续接入darknet的文章中详细介绍

功能实现

类定义:

class HPD():

# 3D facial model coordinates

landmarks_3d_list = [

np.array([

[0.000, 0.000, 0.000], # Nose tip

[0.000, -8.250, -1.625], # Chin

[-5.625, 4.250, -3.375], # Left eye left corner

[5.625, 4.250, -3.375], # Right eye right corner

[-3.750, -3.750, -3.125], # Left Mouth corner

[3.750, -3.750, -3.125] # Right mouth corner

], dtype=np.double),

np.array([

[0.000000, 0.000000, 6.763430], # 52 nose bottom edge

[6.825897, 6.760612, 4.402142], # 33 left brow left corner

[1.330353, 7.122144, 6.903745], # 29 left brow right corner

[-1.330353, 7.122144, 6.903745], # 34 right brow left corner

[-6.825897, 6.760612, 4.402142], # 38 right brow right corner

[5.311432, 5.485328, 3.987654], # 13 left eye left corner

[1.789930, 5.393625, 4.413414], # 17 left eye right corner

[-1.789930, 5.393625, 4.413414], # 25 right eye left corner

[-5.311432, 5.485328, 3.987654], # 21 right eye right corner

[2.005628, 1.409845, 6.165652], # 55 nose left corner

[-2.005628, 1.409845, 6.165652], # 49 nose right corner

[2.774015, -2.080775, 5.048531], # 43 mouth left corner

[-2.774015, -2.080775, 5.048531], # 39 mouth right corner

[0.000000, -3.116408, 6.097667], # 45 mouth central bottom corner

[0.000000, -7.415691, 4.070434] # 6 chin corner

], dtype=np.double),

np.array([

[0.000000, 0.000000, 6.763430], # 52 nose bottom edge

[5.311432, 5.485328, 3.987654], # 13 left eye left corner

[1.789930, 5.393625, 4.413414], # 17 left eye right corner

[-1.789930, 5.393625, 4.413414], # 25 right eye left corner

[-5.311432, 5.485328, 3.987654] # 21 right eye right corner

], dtype=np.double)

]

# 2d facial landmark list

lm_2d_index_list = [

[30, 8, 36, 45, 48, 54],

[33, 17, 21, 22, 26, 36, 39, 42, 45, 31, 35, 48, 54, 57, 8], # 14 points

[33, 36, 39, 42, 45] # 5 points

]

# caffe 模型的各种路径

caffe_model = 'face/myfacialnet_iter_59000.caffemodel'

caffe_lable = 'face/labels.txt'

caffe_deploy = 'face/deploy.prototxt'

caffe_mean = 'face/mean.binaryproto'

def __init__(self, lm_type=1, predictor="model/shape_predictor_68_face_landmarks.dat", verbose=True):

self.bbox_detector = dlib.get_frontal_face_detector()

self.landmark_predictor = dlib.shape_predictor(predictor)

self.lm_2d_index = self.lm_2d_index_list[lm_type]

self.landmarks_3d = self.landmarks_3d_list[lm_type]

self.v = verbose

# caffe 模型初始化一下

self.net = caffe.Net(self.caffe_deploy, self.caffe_model, caffe.TEST)

# 图片预处理设置

self.transformer = caffe.io.Transformer({'data': self.net.blobs['data'].data.shape}) # 设定图片的shape格式(1,1,42,42)

self.transformer.set_transpose('data', (2, 0, 1)) # 改变维度的顺序,由原始图片(42,42,1)变为(1,42,42)

self.transformer.set_raw_scale('data', 255) # 缩放到【0,255】之间

#加载均值文件

proto_data = open(self.caffe_mean, "rb").read()

a = caffe.io.caffe_pb2.BlobProto.FromString(proto_data)

m = caffe.io.blobproto_to_array(a)[0]

self.transformer.set_mean('data', m.mean(1).mean(1)) #减去均值,前面训练模型时没有减均值,这儿就不用

self.net.blobs['data'].reshape(1, 1, 42, 42)

caffe.set_mode_cpu()

def class2np(self, landmarks):

coords = []

for i in self.lm_2d_index:

coords += [[landmarks.part(i).x, landmarks.part(i).y]]

return np.array(coords).astype(np.int)

def getLandmark(self, im):

# Detect bounding boxes of faces

if im is not None:

rects = self.bbox_detector(im, 1)

else:

rects = []

# make this an larger array

landmarks_2ds = []

if len(rects) <= 0:

return None, None

for rect in rects:

# Detect landmark of first face

landmarks_2d = self.landmark_predictor(im, rect) # 这里获得特征点的集合,我们需要这个结果来切脸

# Choose specific landmarks corresponding to 3D facial model

## and i decided to move it to another place!! --Tecelecta

landmarks_2ds.append(landmarks_2d)

return landmarks_2ds, rects

def getHeadpose(self, im, landmarks_2d, verbose=False):

h, w, c = im.shape

f = w # column size = x axis length (focal length)

u0, v0 = w / 2, h / 2 # center of image plane

camera_matrix = np.array(

[[f, 0, u0],

[0, f, v0],

[0, 0, 1]], dtype=np.double

)

# Assuming no lens distortion

dist_coeffs = np.zeros((4, 1))

# Find rotation, translation

(success, rotation_vector, translation_vector) = cv2.solvePnP(self.landmarks_3d, landmarks_2d, camera_matrix,

dist_coeffs)

if verbose:

print("Camera Matrix:\n {0}".format(camera_matrix))

print("Distortion Coefficients:\n {0}".format(dist_coeffs))

print("Rotation Vector:\n {0}".format(rotation_vector))

print("Translation Vector:\n {0}".format(translation_vector))

return rotation_vector, translation_vector, camera_matrix, dist_coeffs

# rotation vector to euler angles

def getAngles(self, rvec, tvec):

rmat = cv2.Rodrigues(rvec)[0]

P = np.hstack((rmat, tvec)) # projection matrix [R | t]

degrees = -cv2.decomposeProjectionMatrix(P)[6]

rx, ry, rz = degrees[:, 0]

return [rx, ry, rz]

# return image and angles

def processImage(self, im, draw=True):

faces_dets = dlib.full_object_detections()

prob_list = []

draws = []

all_angles = []

# landmark Detection

im_gray = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)

landmarks_2ds, bboxs = self.getLandmark(im_gray) # 这里直接使用这个数组

# if no face deteced, return original image

if bboxs is None:

return im, None

for i in range(len(bboxs)):

# Headpose Detection

landmarks_2d = landmarks_2ds[i]

bbox = bboxs[i]

faces_dets.append(landmarks_2d)

landmarks_2d = self.class2np(landmarks_2d)

landmarks_2d = landmarks_2d.astype(np.double)

rvec, tvec, cm, dc = self.getHeadpose(im, landmarks_2d)

angles = self.getAngles(rvec, tvec)

all_angles.append(angles)

if draw:

draws.append(Draw(im, angles, bbox, landmarks_2d, rvec, tvec, cm, dc, b=10.0))

images = dlib.get_face_chips(im, faces_dets, size=320)

# 接入caffe完成分类

for face in images:

net_input = cv2ski(face)

net_input = self.transformer.preprocess("data",net_input)

self.net.blobs['data'].data[...] = net_input

self.net.forward()

prob_list.append(self.net.blobs['prob'].data[0].flatten())

for d in draws:

im = d.drawAll()

return im, bboxs, all_angles, prob_list

def processBatch(self, fileNames, in_dir, out_dir):

if in_dir[-1] != '/': in_dir += '/'

if out_dir[-1] != '/': out_dir += '/'

batch_json = []

for fn in fileNames:

im = cv2.imread(in_dir + fn)

im, bboxes, angles, probs = self.processImage(im)

cv2.imwrite(out_dir + fn, im)

pic_json = []

for i in range(len(bboxes)):

pic_json.append({

"x" : (bboxes[i].left() + bboxes[i].right()) / 2,

"y" : (bboxes[i].top() + bboxes[i].bottom()) / 2,

"rx" : angles[i][0].astype("float"),

"ry" : angles[i][1].astype("float"),

"rz" : angles[i][2].astype("float"),

"angry" : probs[i][0].astype("float"),

"digust" : probs[i][1].astype("float"),

"fear" : probs[i][2].astype("float"),

"happy" : probs[i][3].astype("float"),

"sad" : probs[i][4].astype("float")

})

batch_json.append(pic_json)

return batch_json这个类的实现过程中我们参考了已经有的项目,对它进行了解读和完善,最终得到这个类,其中核心的方法是processImage,它首先完成了人脸的检测,然后使用自己支持的深度学习算法完成了landmark的标定,之后将画出的人脸交给caffe模型完成表情分类

辅助函数定义:

def cv2ski(cv_mat):

'''

将脸从cv2转换成ski的格式

:param cv_mat:

:return:

'''

# rgbgr & normalize

ski_mat = np.zeros((48,48,1), dtype="float32")

cv_mat = cv2.cvtColor(cv_mat, cv2.COLOR_BGR2GRAY, dstCn=1)

cv_mat = cv2.resize(cv_mat, (48, 48), interpolation=cv2.INTER_LINEAR) / 255.

ski_mat = cv_mat[:,:,np.newaxis]

#cv2.imwrite("middle.jpg", cv_mat)

# cv2.imshow("middle", ski_mat)

#cv2.waitKey(0)

return ski_mat.astype("float32")正如注释描述的一样,这个函数完成的是格式转换功能,因为caffe输入需要的格式是skimage.io获得的,而我们整体的处理过程使用opencv完成的,这就要求我们完成格式转换另外,从原图上截下的脸是彩色的,我们还需要完成色域转换

执行文件:

# -- encoding:utf-8 --

#import landmarkPredict as headpose

import socket

import hpd

import json

LOCALHOST = "127.0.0.1"

sock = None

def sockInit(port):

'''

接收文件名,一次一个batch

:param port: 接收使用的port,字符串格式!

:return:

'''

global sock

sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)

sock.bind((LOCALHOST, port))

sock.listen(1)

return

def recvFileName(conn):

'''

利用已经建立的连接完成一批文件的接收

:param conn:

:return: 一堆文件名

'''

data = conn.recv(4096)

file_name = data.split('\n')

return file_name[:len(file_name) - 1]

def sendJson(conn, jList):

'''

将识别返回的结果发回请求端

:param conn: 之前与请求端建立的连接

:param jList: 返回的一批图片的识别结果

:return: shi

'''

for pic_json in jList:

conn.send(json.dumps(pic_json))

print("---finish sending res of 1 pic---")

print(pic_json)

if __name__ == "__main__":

sockInit(10101)

headpose = hpd.HPD()

while 1:

conn, addr = sock.accept()

print("Accpeting conn from {}".format(addr))

batch_name = recvFileName(conn)

res = headpose.processBatch(batch_name, "/srv/ftp/pic", "/srv/ftp/res")#这里指定正确的路径就可以完成任务

sendJson(conn,res)

依旧是首先定义基本的网络传输函数,接受文件名,并完成从服务端接收所有json对象,并转换成字符串发送的功能

小结

这一部分还会被进一步完善,上面我们提到,dlib的性能存在问题,接下来我们会将darknet接入,着手解决这些问题