Scrapy爬虫数据持久化

一、本地文件持久化:

最简单的储存成json格式文件,在运行爬虫时,命令为:scrapy crawl name -o xxx.json

jsonlines格式:命令为:scrapy crawl name -o xxx.jl

二、数据库持久化:

1、MySQL存储:

(1)、settings文件设置MySQL相关的参数,以及打开ITEM_PIPELINES

1 MYSQL_HOST = "localhost"

2 MYSQL_DBNAME = "yyyyyy"

3 MYSQL_USER = "root"

4 MYSQL_PASSWD = "xxxxxx"

5

6 ITEM_PIPELINES = {

7 'Spider.pipelines.spiderPipeline': 300,

8 }

(2)、在pipelines文件书写SpiderPipeline类:

demo:

1 import pymysql 2 from Spider.items import xxxspiderItems 3 from Spider.Spider import settings 4 from scrapy import log 5 6 7 class spiderPipeline(object): 8 def __init__(self): 9 self.con = pymysql.connect( 10 host=settings.MYSQL_HOST, 11 db=settings.MYSQL_DBNAME, 12 user=settings.MYSQL_USER, 13 passwd=settings.MYSQL_PASSWD, 14 charset='utf8', 15 use_unicode=True 16 ) 17 self.cursor = self.con.cursor() 18 19 def process_item(self, item, spider): 20 try: 21 self.cursor.execute("select * from table where name=%s", item["name"]) 22 ret = self.cursor.fetchone() 23 if ret: # 数据去重 24 self.cursor.execute("update table set name=%s,author=%s,score=%s", 25 (item["name"], 26 item["author"], 27 item["score"],) 28 else: 29 self.cursor.execute("insert into table(name,author,score) value (%s,%s,%s)", 30 (item["name"], 31 item["author"], 32 item["score"],) 33 self.con.commit() 34 except Exception as e: 35 log(e) 36 return item 37 38 def db_close(self): 39 self.cursor.close() 40 self.con.close()

2、redis存储demo:

1 import redis 2 3 class ProxyspiderPipeline(object): 4 def __init__(self): 5 self.con = redis.StrictRedis(host="localhost", port=6379, db=15, password="123456") 6 7 def process_item(self, item, spider): 8 self.con.sadd("usable_proxy", item["proxy"]) 9 return item

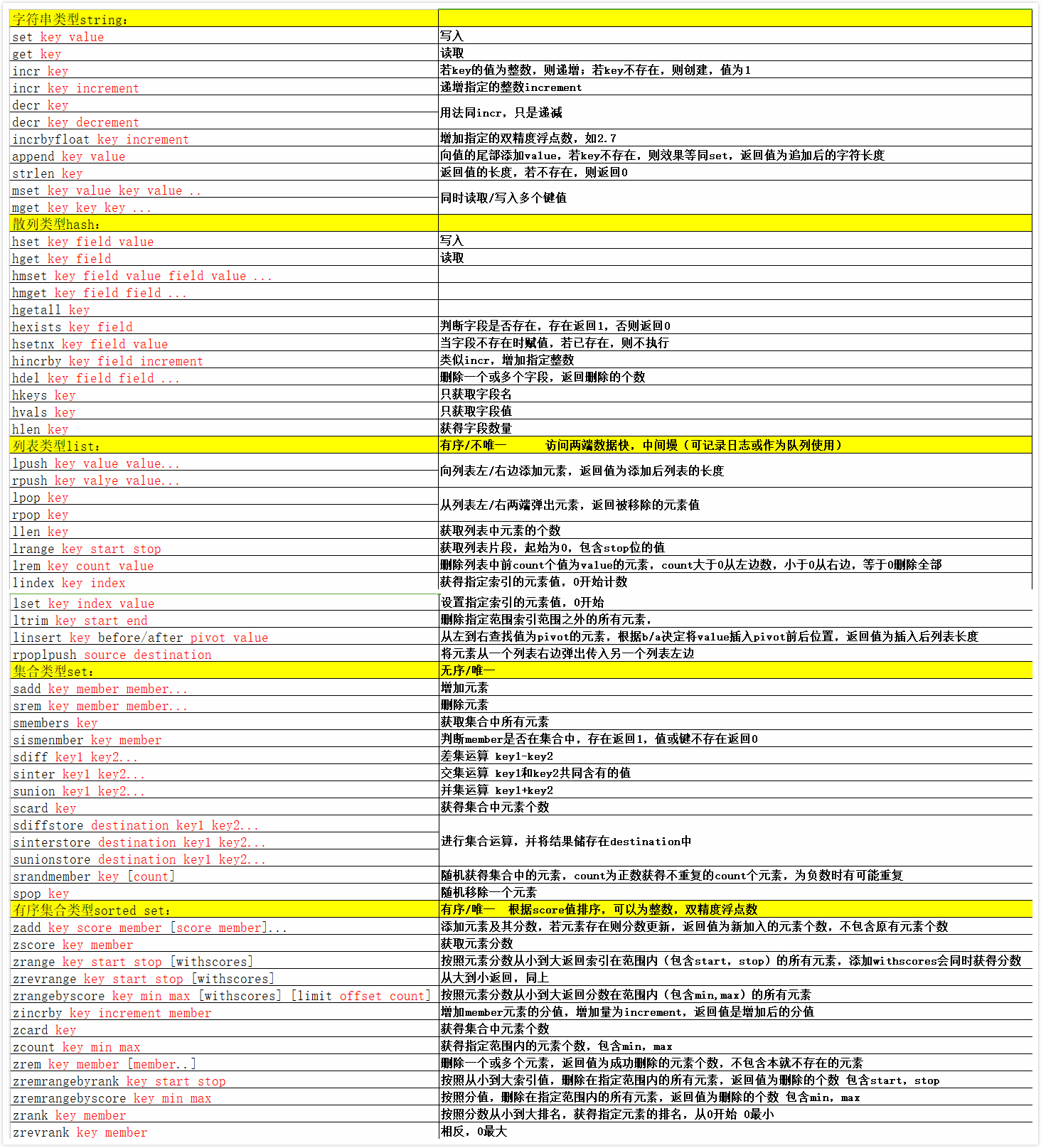

demo中,将数据存入了set集合类型中,也可以存成其他redis数据类型,具体可看图片操作,redis库的方法基本和redis库操作一致

3、MongoDB存储:

占坑

4、Sqlites存储:

占坑