[Paper Reading] A Gentle Introduction to Graph Neural Networks (1)

I recently read an article on the Distill website that introduces graph neural networks, and I have translated it here for reference.

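To read the original, see A Gentle Introduction to Graph Neural Networks. I strongly recommend reading it on the original site: the figures there are interactive, which makes the article very readable and much easier to follow.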

If you would like a video walkthrough of this article, I highly recommend the one by Mu Li (李沐), who explains it very well. See 零基础多图详解图神经网络(GNN/GCN)【论文精读】.

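Notes: 1. I translated the article paragraph by paragraph, so each paragraph of the original is followed by its translation;

2. Text in a smaller font is the original authors' explanation of a figure or a passage;

3. Smaller bold text is a figure title or the translation of the text beneath a figure;

4. Gray text is my own rough take on the article;

5. Italic text is italic in the original article;

6. Larger bold text marks the section headings of the article.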

Neural networks have been adapted to leverage the structure and properties of graphs. We explore the components needed for building a graph neural network - and motivate the design choices behind them.

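Neural networks have been adapted to exploit the structure and properties of graphs. We will explore the building blocks needed to construct a graph neural network and explain the reasoning behind their design choices.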

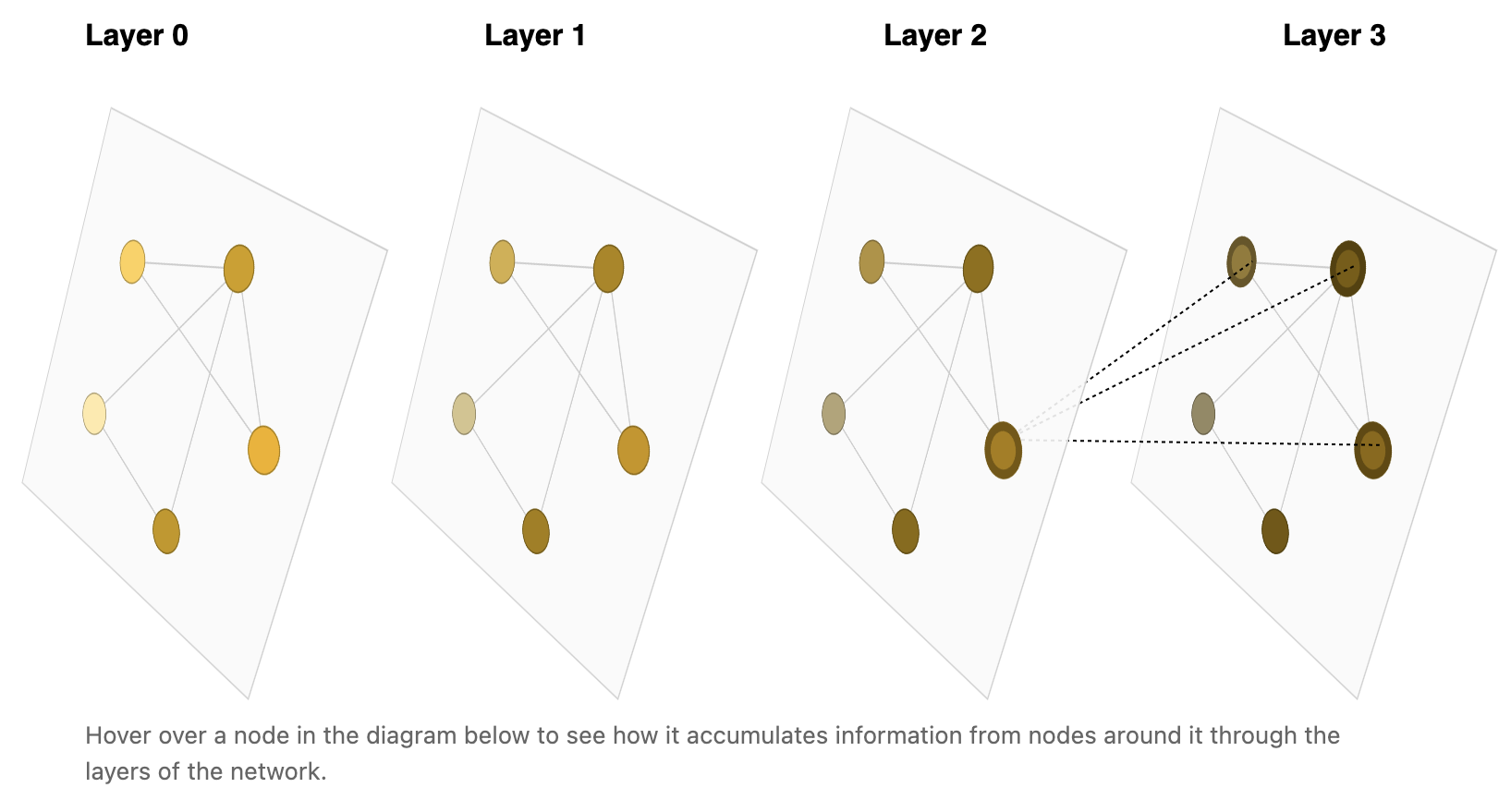

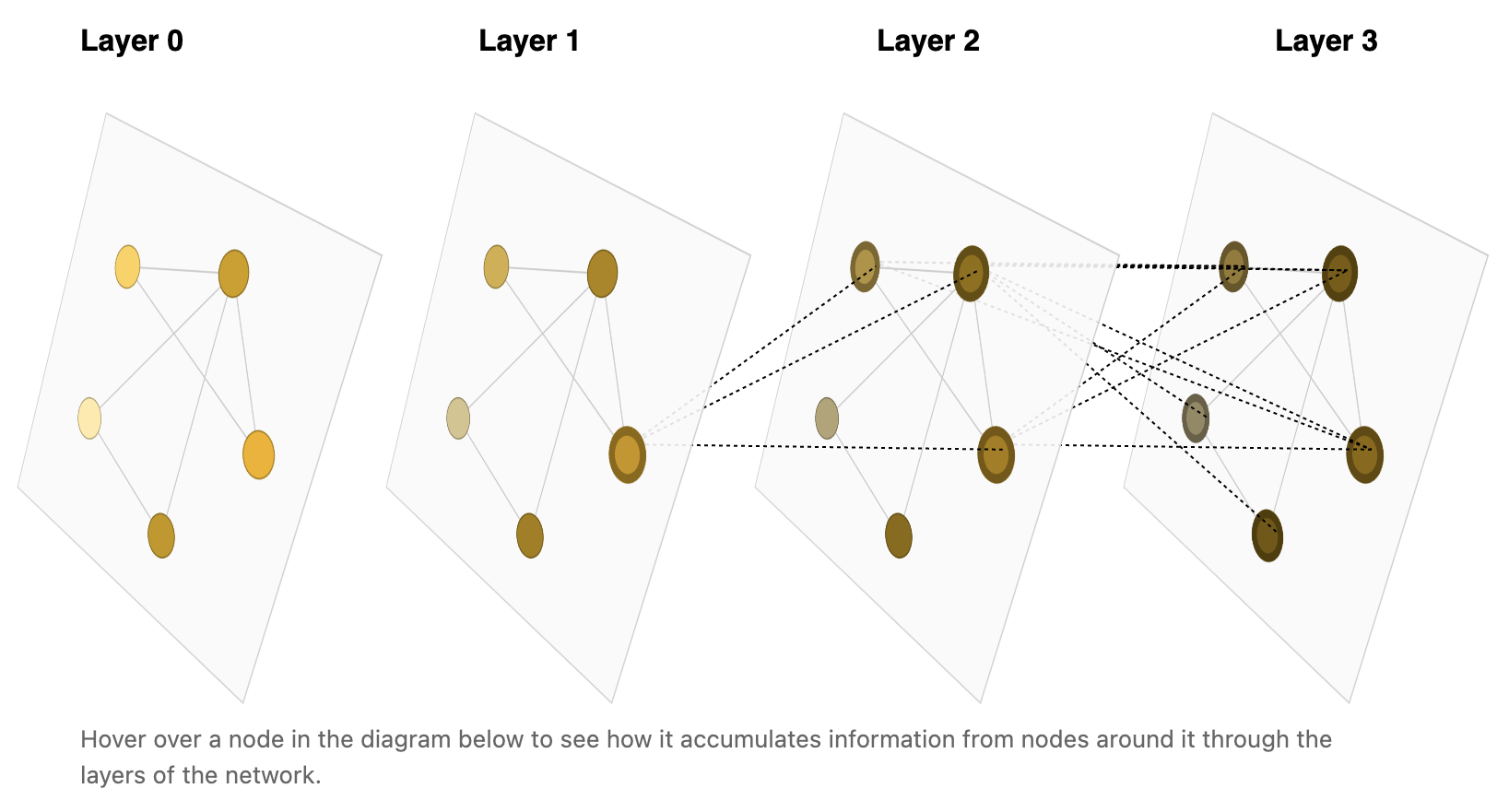

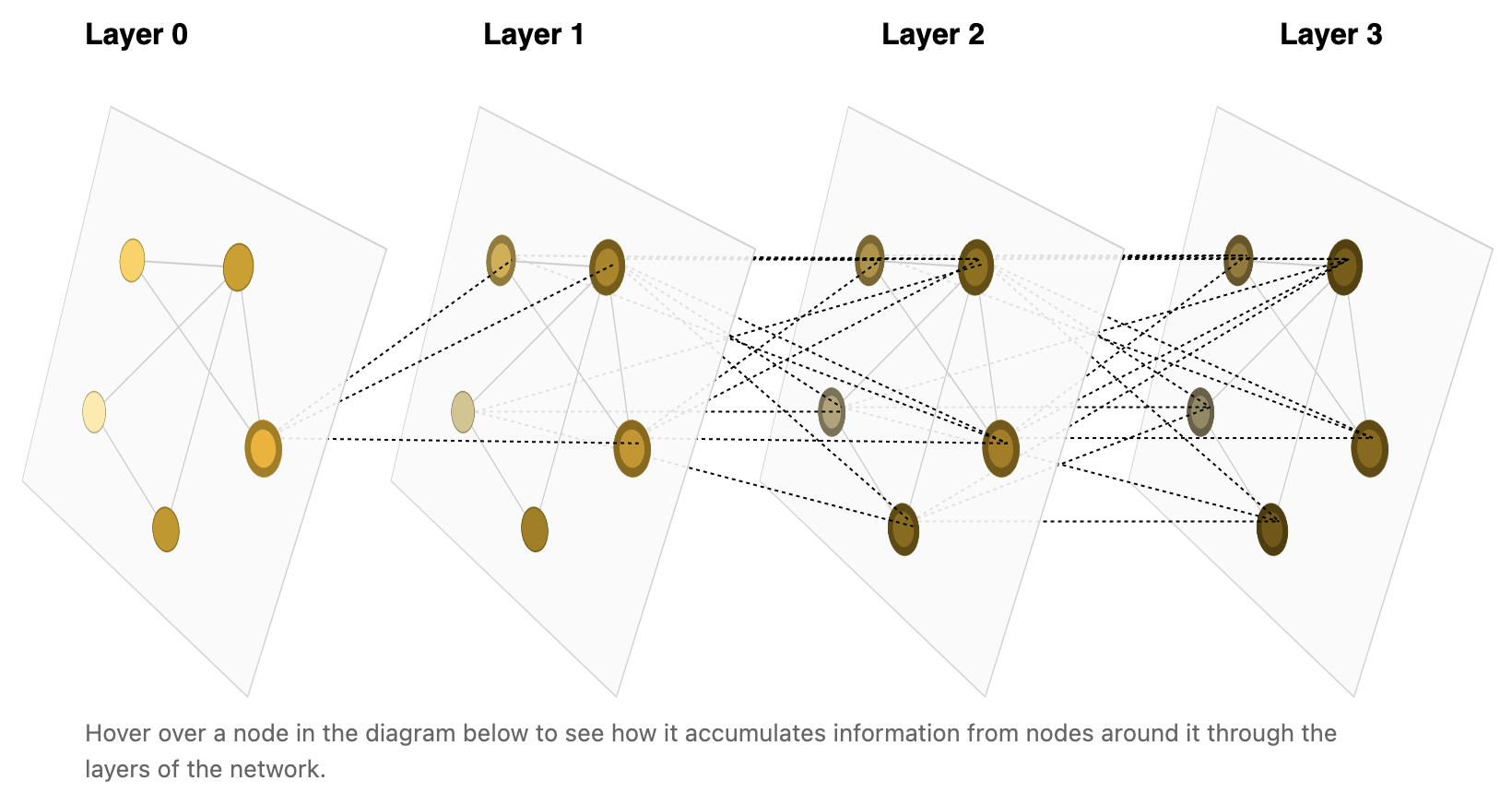

Hover over a node in the diagram below to see how it accumulates information from nodes around it through the layers of the network.

Hover over a node in the figure below to see how it gathers information from the surrounding nodes through the layers of the network.

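As the figure shows, each node gathers information from the nodes it is connected to in the next layer. For example, a node in Layer 2 of Figure 1-1 gathers information from the Layer 3 nodes it is connected to. Every layer gathers its information from the nodes of the next layer in this way.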
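The layer-by-layer gathering described above can be sketched in a few lines of Python. This is only an illustration, not code from the original article: the function name `receptive_field`, the adjacency-dictionary format, and the toy path graph are all assumptions made for the example. It computes which nodes can contribute information to a given node after a number of rounds of neighbor aggregation.

```python
def receptive_field(adjacency, node, num_layers):
    """Nodes whose information can reach `node` after `num_layers`
    rounds of gathering from direct neighbors (hypothetical helper)."""
    reached = {node}
    frontier = {node}
    for _ in range(num_layers):
        # One layer: step from the current frontier to its unseen neighbors.
        frontier = {nbr for n in frontier for nbr in adjacency[n]} - reached
        reached |= frontier
    return reached


# Toy path graph 0 - 1 - 2 - 3 (an assumed example, not from the article).
adjacency = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}

for layers in range(4):
    print(layers, sorted(receptive_field(adjacency, 0, layers)))
```

Each extra layer widens the set by one hop, which is why stacking layers lets a node see progressively more of the graph.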

This article is one of two Distill publications about graph neural networks. Take a look at Understanding Convolutions on Graphs [1] to understand how convolutions over images generalize naturally to convolutions over graphs.

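This article is one of two Distill publications on graph neural networks. See Understanding Convolutions on Graphs [1] to learn how convolutions over images generalize naturally to convolutions over graphs.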

Graphs are all around us; real world objects are often defined in terms of their connections to other things. A set of objects, and the connections between them, are naturally expressed as a graph. Researchers have developed neural networks that operate on graph data (called graph neural networks, or GNNs) for over a decade [2]. Recent developments have increased their capabilities and expressive power. We are starting to see practical applications in areas such as antibacterial discovery [3], physics simulations [4], fake news detection [5], traffic prediction [6] and recommendation systems [7].

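Graphs surround us in everyday life; real-world objects are often defined by their connections to other things. A set of objects, together with the connections between them, is naturally expressed as a graph. For more than a decade, researchers have been developing neural networks that operate on graph data (called graph neural networks, or GNNs) [2]. Recent developments have increased their capabilities and expressive power. We are beginning to see practical applications in areas such as antibacterial discovery [3], physics simulation [4], fake news detection [5], traffic prediction [6], and recommendation systems [7].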

This article explores and explains modern graph neural networks. We divide this work into four parts. First, we look at what kind of data is most naturally phrased as a graph, and some common examples. Second, we explore what makes graphs different from other types of data, and some of the specialized choices we have to make when using graphs. Third, we build a modern GNN, walking through each of the parts of the model, starting with historic modeling innovations in the field. We move gradually from a bare-bones implementation to a state-of-the-art GNN model. Fourth and finally, we provide a GNN playground where you can play around with a real-world task and dataset to build a stronger intuition of how each component of a GNN model contributes to the predictions it makes.

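This article explores and explains modern graph neural networks. We divide the work into four parts. First, we look at what kinds of data are naturally expressed as graphs and list some common examples. Second, we examine what makes graphs different from other types of data, and some of the special choices that have to be made when working with graphs. Third, we build a modern GNN model, walking through each part of the model and starting from the historical modeling innovations in the field. We move step by step from a bare-bones implementation to a state-of-the-art GNN model. Fourth and finally, we provide a GNN playground, where you can work with a real-world task and its dataset to build a better understanding of how each part of a GNN model contributes to the predictions it makes.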

To start, let’s establish what a graph is. A graph represents the relations (edges) between a collection of entities (nodes).

To begin, let us define what a graph is. A graph represents the relations (edges) between a collection of entities (nodes).
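As a minimal sketch of this definition, a graph can be held as a list of entities plus a list of relations between them. The names used here (`nodes`, `edges`, `neighbors`) and the three-person example are assumptions chosen for illustration; the original article does not prescribe any particular data structure at this point.

```python
# Entities (nodes): any hashable labels will do.
nodes = ["Alice", "Bob", "Carol"]

# Relations (edges): pairs of nodes that are connected.
edges = [("Alice", "Bob"), ("Bob", "Carol")]


def neighbors(node, edges):
    """Nodes that share an edge with `node`.
    Edges are treated as undirected here for simplicity (an assumption)."""
    result = []
    for a, b in edges:
        if a == node:
            result.append(b)
        elif b == node:
            result.append(a)
    return result


print(neighbors("Bob", edges))
```

Keeping nodes and edges as two separate collections makes it easy to attach further information to either one later.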

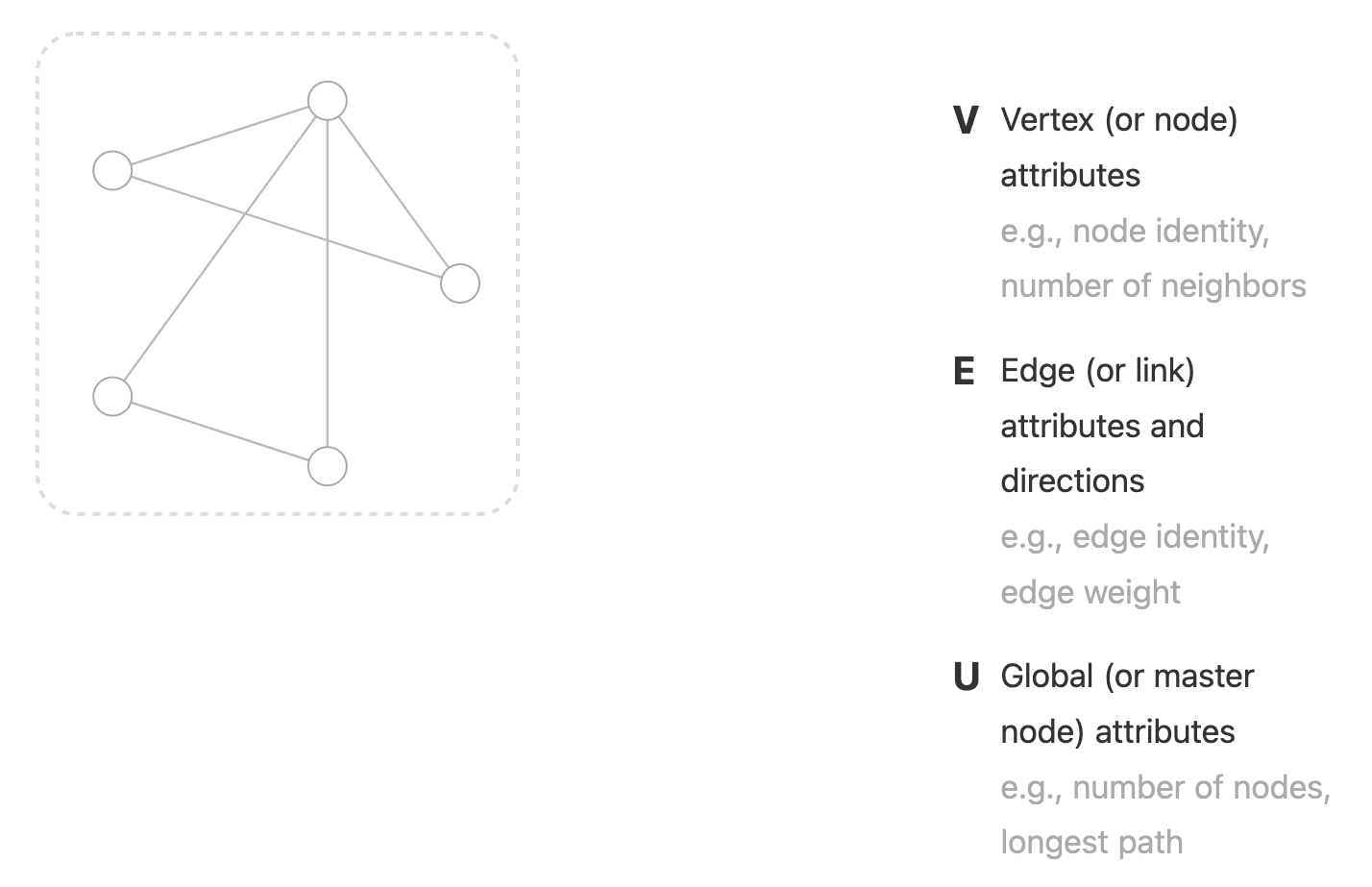

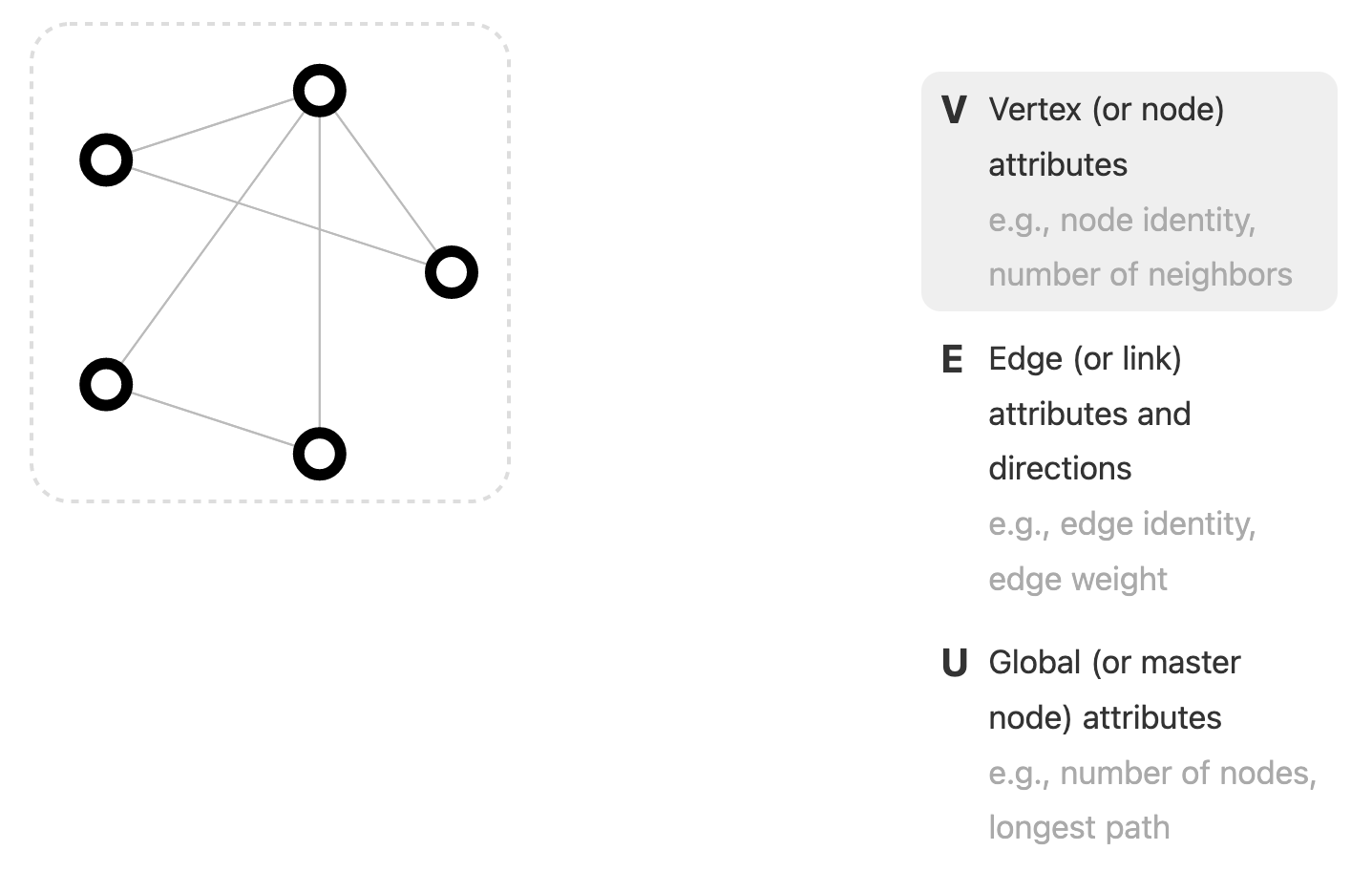

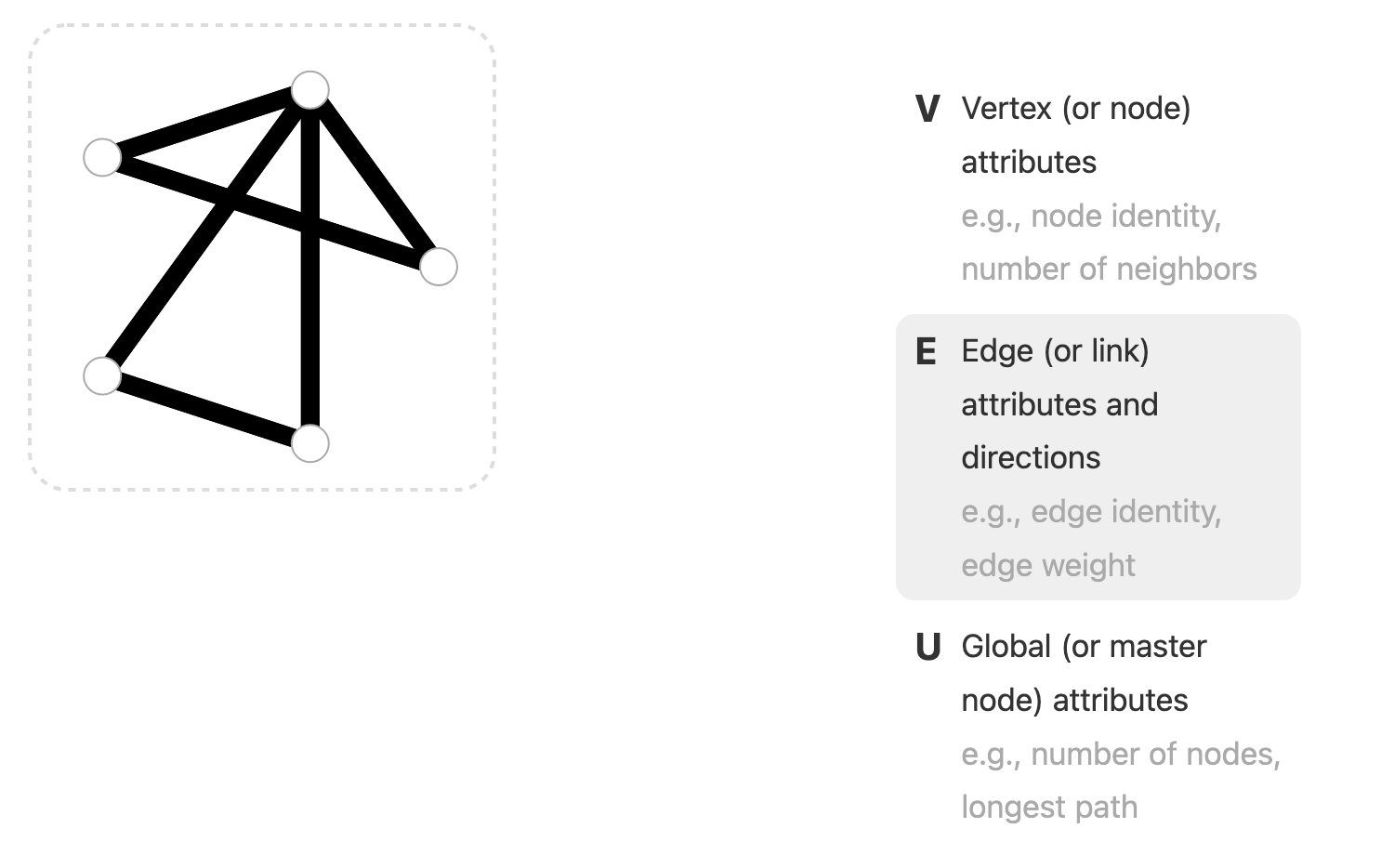

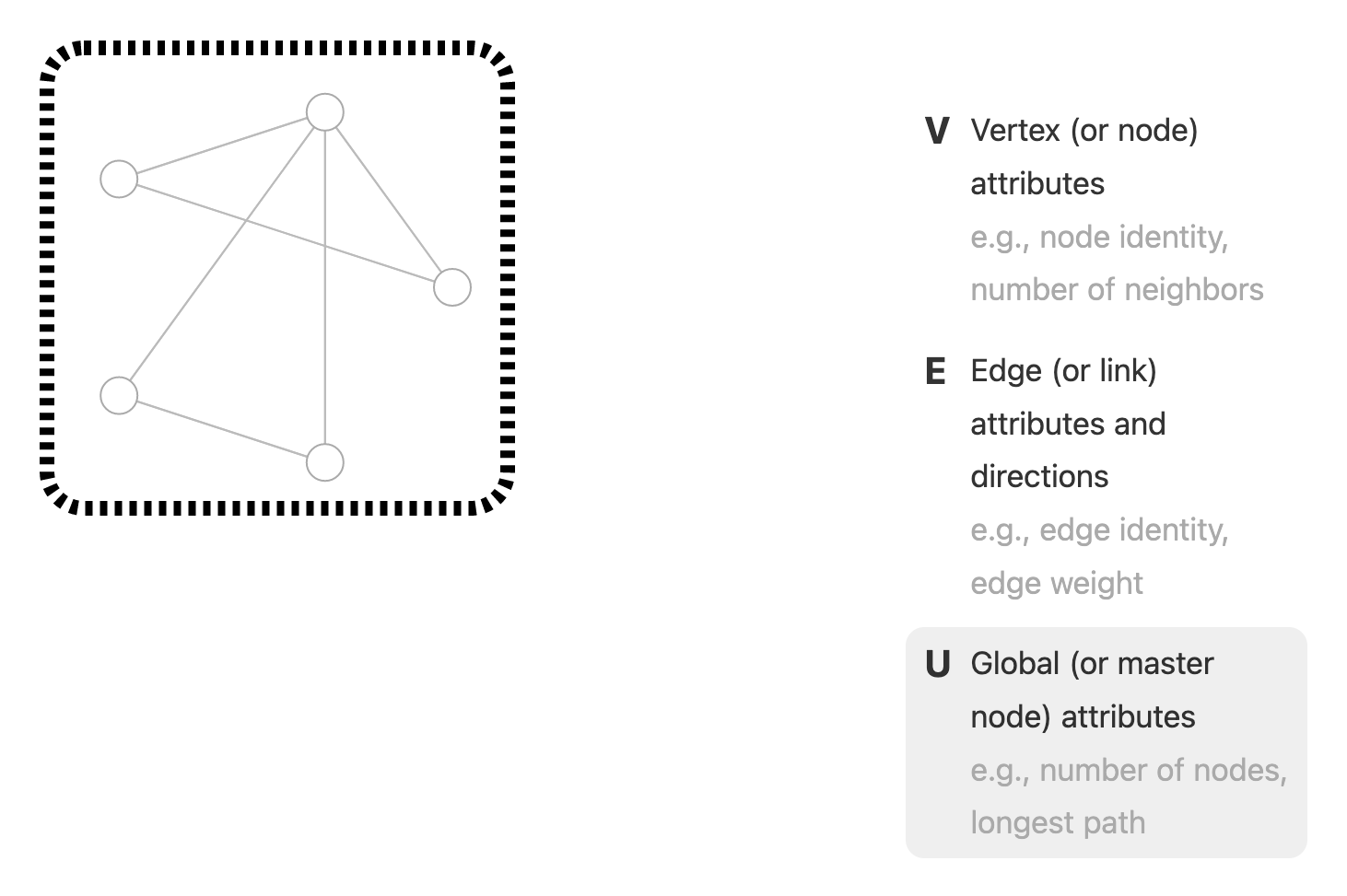

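We can find three types of attributes in a graph; hover to highlight each one. Other types of graphs and attributes are discussed in the section "Other types of graphs".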

V: Vertex (or node) attributes, e.g., node identity, number of neighbors

E: Edge (or link) attributes and directions, e.g., edge identity, edge weight

U: Global (or master node) attributes, e.g., number of nodes, longest path
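To make the three attribute types concrete, the sketch below derives one or two example attributes of each kind from a small weighted graph. The function name `describe_graph`, the dictionary layout, and the sample weights are hypothetical choices for this example. The longest path is left out because it is more involved to compute.

```python
def describe_graph(nodes, weighted_edges):
    """Collect example V, E and U attributes (hypothetical helper).

    `weighted_edges` maps an (a, b) pair to an edge weight."""
    num_neighbors = {n: 0 for n in nodes}
    for a, b in weighted_edges:
        num_neighbors[a] += 1
        num_neighbors[b] += 1

    # V: per-node attributes (identity, number of neighbors).
    V = {n: {"identity": n, "num_neighbors": num_neighbors[n]} for n in nodes}
    # E: per-edge attributes (identity, weight).
    E = {e: {"identity": e, "weight": w} for e, w in weighted_edges.items()}
    # U: attributes of the graph as a whole (number of nodes).
    U = {"num_nodes": len(nodes)}
    return V, E, U


nodes = ["a", "b", "c"]
weighted_edges = {("a", "b"): 0.5, ("b", "c"): 2.0}
V, E, U = describe_graph(nodes, weighted_edges)
print(V["b"], E[("a", "b")], U)
```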

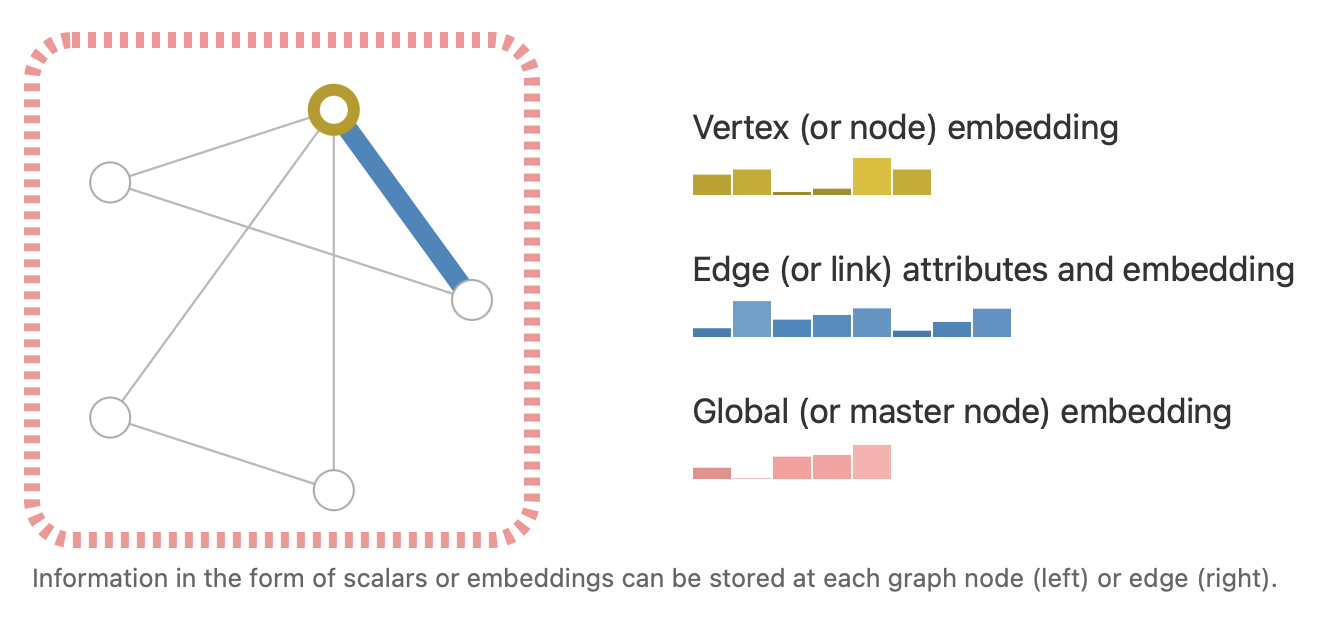

To further describe each node, edge or the entire graph, we can store information in each of these pieces of the graph.

To describe each node, each edge, or the whole graph in more detail, we can store information in each of these parts of the graph.

Information in the form of scalars or embeddings can be stored at each graph node (left) or edge (right).

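As the figure shows, each node is represented by a vector of length 6, each edge by a vector of length 8, and the global attribute by a vector of length 5. The figure illustrates how the information we care about can be stored in vector form.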
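A rough sketch of this storage scheme follows, using the vector lengths read off the figure (6 per node, 8 per edge, 5 for the whole graph). The constant names, the `init_embeddings` helper, and the zero initial values are assumptions made for the example; in a real model these vectors would hold features or learned values.

```python
NODE_DIM, EDGE_DIM, GLOBAL_DIM = 6, 8, 5  # lengths taken from the figure


def init_embeddings(nodes, edges):
    """Attach a fixed-length vector to every node, every edge, and the
    graph as a whole (zeros are placeholders, not meaningful values)."""
    node_emb = {n: [0.0] * NODE_DIM for n in nodes}
    edge_emb = {e: [0.0] * EDGE_DIM for e in edges}
    global_emb = [0.0] * GLOBAL_DIM
    return node_emb, edge_emb, global_emb


nodes = [0, 1, 2]
edges = [(0, 1), (1, 2)]
node_emb, edge_emb, global_emb = init_embeddings(nodes, edges)
print(len(node_emb[0]), len(edge_emb[(0, 1)]), len(global_emb))
```

Nothing forces the three lengths to match; each part of the graph can use whatever size suits the information it carries.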

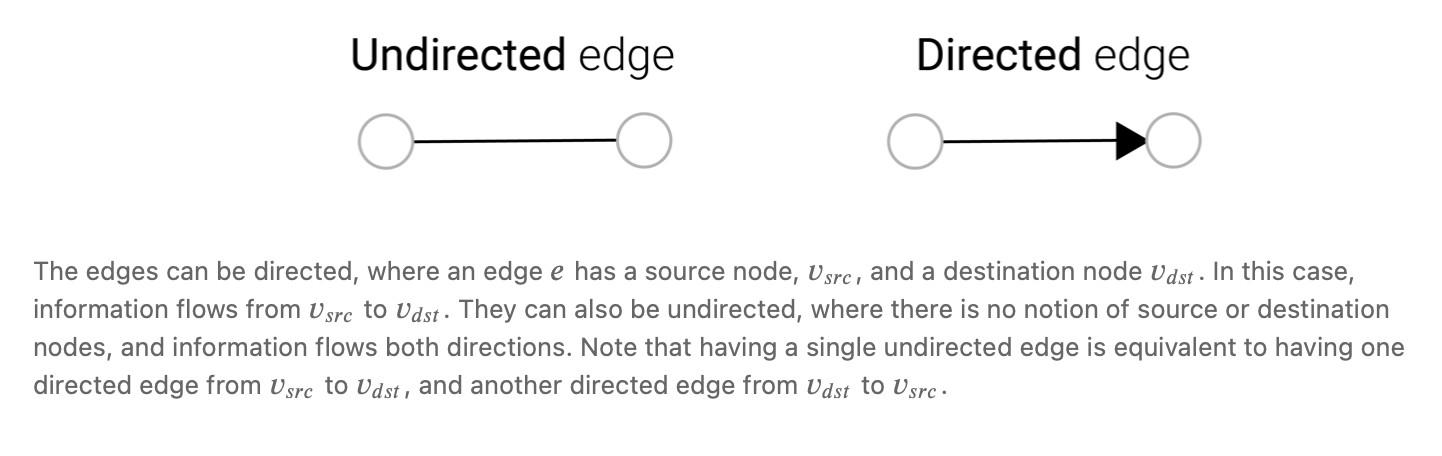

We can additionally specialize graphs by associating directionality to edges (directed, undirected).

We can further specialize graphs by associating directionality with their edges (directed, undirected).

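Edges can be directed, in which case an edge $e$ has a source node $v_{src}$ and a destination node $v_{dst}$, and information flows from $v_{src}$ to $v_{dst}$. Edges can also be undirected, in which case there is no notion of a source or destination node and information flows in both directions. Note that having a single undirected edge is equivalent to having one directed edge from $v_{src}$ to $v_{dst}$ and another directed edge from $v_{dst}$ to $v_{src}$.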
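The equivalence between one undirected edge and a pair of opposite directed edges can be sketched as a small conversion function. The name `to_directed` and the (source, destination) tuple convention are assumptions for this example.

```python
def to_directed(undirected_edges):
    """Expand each undirected edge {u, v} into the two directed edges
    (u, v) and (v, u), written as (source, destination) pairs."""
    directed = []
    for u, v in undirected_edges:
        directed.append((u, v))
        if u != v:  # a self-loop needs only one directed edge
            directed.append((v, u))
    return directed


print(to_directed([("a", "b"), ("b", "c")]))
```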

Graphs are very flexible data structures, and if this seems abstract now, we will make it concrete with examples in the next section.

Graphs are very flexible data structures; if this seems abstract for now, the next section makes it concrete with examples.

References

[1] Understanding Convolutions on Graphs. Daigavane, A., Ravindran, B. and Aggarwal, G., 2021. Distill. DOI: 10.23915/distill.00032

[2] The Graph Neural Network Model. Scarselli, F., Gori, M., Tsoi, A.C., Hagenbuchner, M. and Monfardini, G., 2009. IEEE Transactions on Neural Networks, Vol 20(1), pp. 61–80.

[3] A Deep Learning Approach to Antibiotic Discovery. Stokes, J.M., Yang, K., Swanson, K., Jin, W., Cubillos-Ruiz, A., Donghia, N.M., MacNair, C.R., French, S., Carfrae, L.A., Bloom-Ackermann, Z., Tran, V.M., Chiappino-Pepe, A., Badran, A.H., Andrews, I.W., Chory, E.J., Church, G.M., Brown, E.D., Jaakkola, T.S., Barzilay, R. and Collins, J.J., 2020. Cell, Vol 181(2), pp. 475–483.

[4] Learning to simulate complex physics with graph networks. Sanchez-Gonzalez, A., Godwin, J., Pfaff, T., Ying, R., Leskovec, J. and Battaglia, P.W., 2020.

[5] Fake News Detection on Social Media using Geometric Deep Learning. Monti, F., Frasca, F., Eynard, D., Mannion, D. and Bronstein, M.M., 2019.

[6] Traffic prediction with advanced Graph Neural Networks. *, O.L. and Perez, L…

[7] Pixie: A System for Recommending 3+ Billion Items to 200+ Million Users in Real-Time. Eksombatchai, C., Jindal, P., Liu, J.Z., Liu, Y., Sharma, R., Sugnet, C., Ulrich, M. and Leskovec, J., 2017.