1. Four questions

- What problem does it solve?

Exploring local operators for point clouds.

- What method is used?

The paper proposes the GRA module and builds RPNet on top of it for point cloud analysis.

To this end, we propose group relation aggregator (GRA) to learn from both low-level and high-level relations. Compared with self-attention and SA, our designed bottleneck version of GRA is obviously efficient in terms of computation and the number of parameters. With bottleneck GRA, we construct the efficient point-based networks RPNet

- How well does it work?

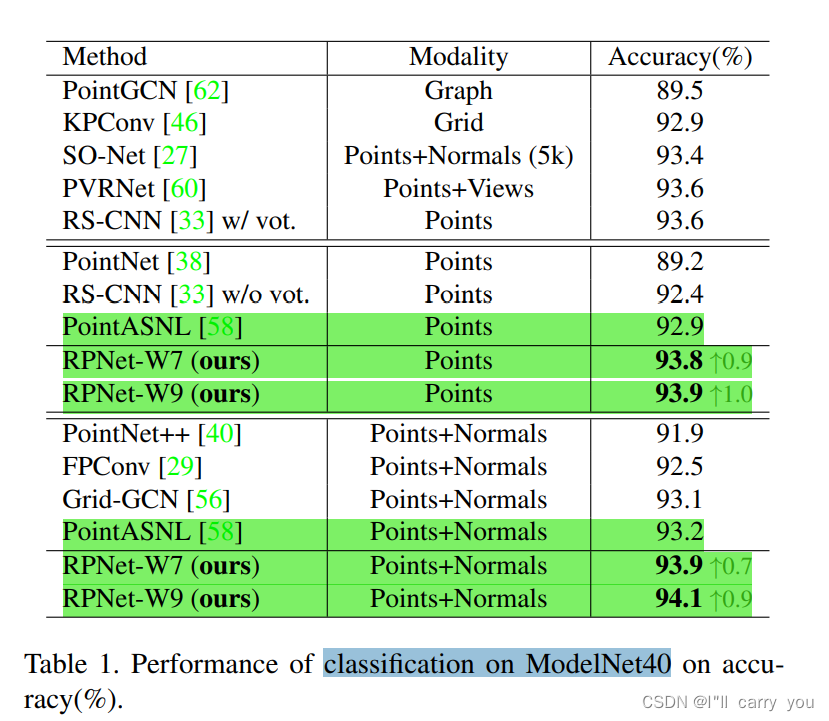

RPNet achieves state-of-the-art results for classification and segmentation on challenging benchmarks (classification on ModelNet40: 94.1; semantic segmentation on the datasets of ScanNet v2 and S3DIS (6-fold cross validation): 70.8 and 68.2).

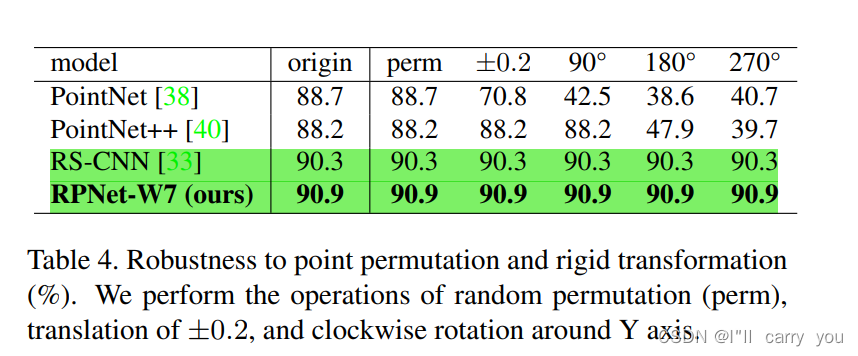

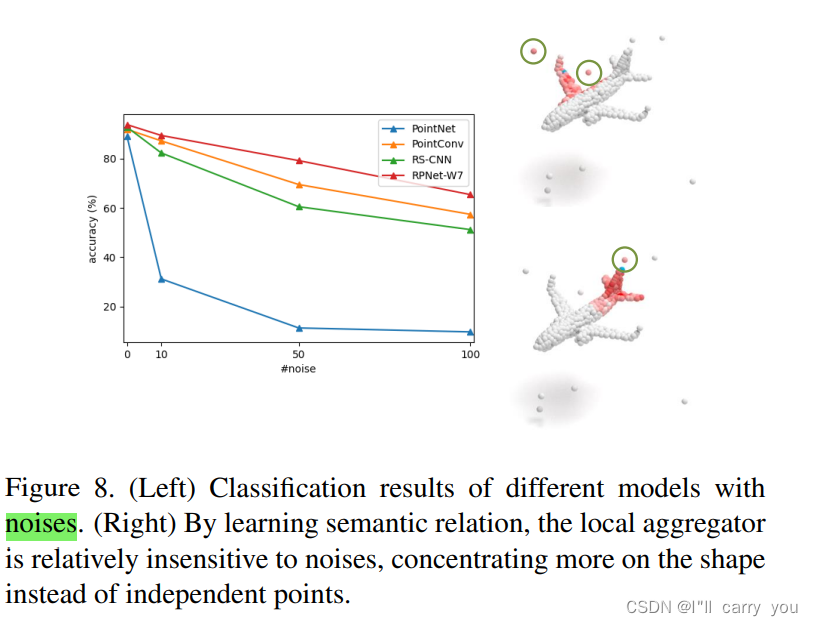

In addition, it performs well in terms of parameter count, computation savings, robustness, and noise.

- What problems remain?

?

2. Paper overview

1. Introduction

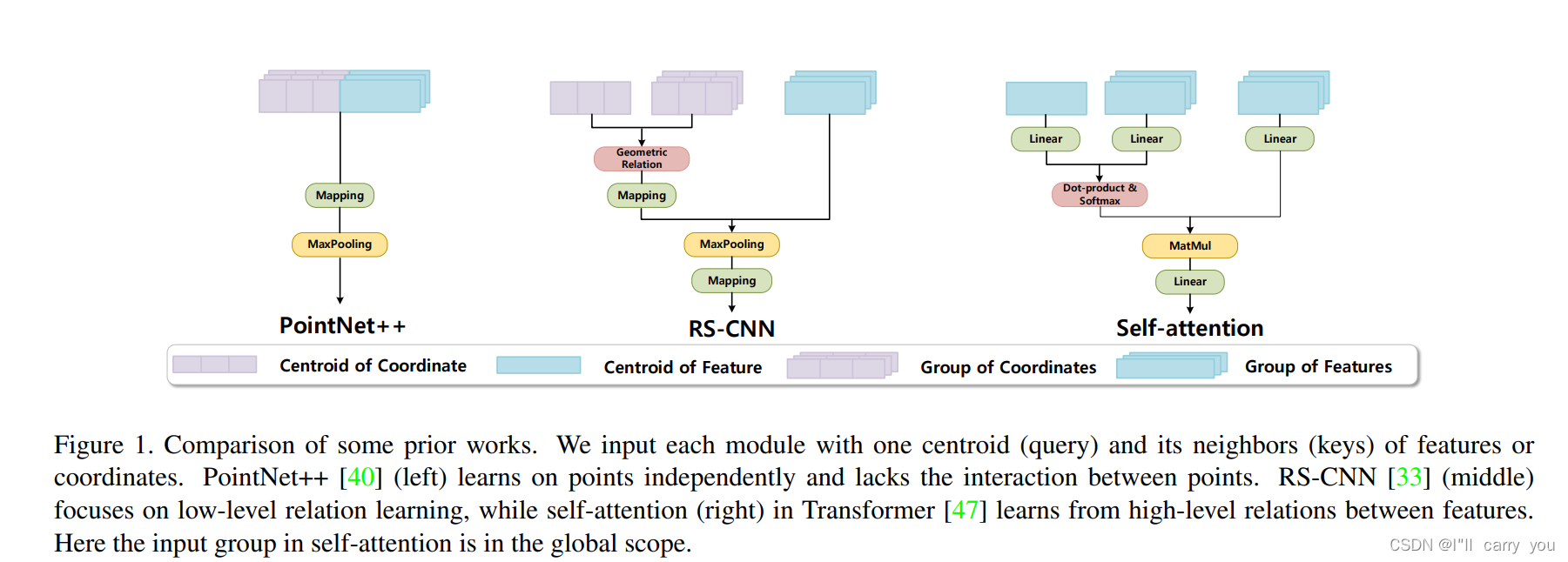

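The introduction analyzes the problems of PointNet, PointNet++, RS-CNN, and self-attention, and then leads into the paper's own method. (Worth studying: how the authors analyze existing problems, how they introduce their own method, and the research motivation.)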

- PointNet: ignores local structures.

- PointNet++: However, this aggregator keeps learning on points independently, losing the sight of shape awareness.

- RS-CNN: RS-CNN [33] computes a point feature from the aggregation of features weighted by predefined geometric relations (low-level relation) between the point Si and its neighbors N (Si) (shown in Fig. 1 middle).

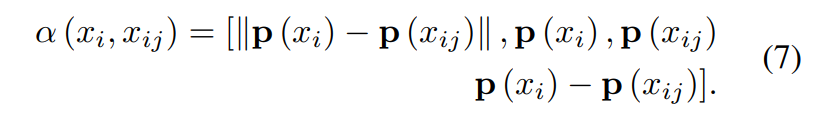

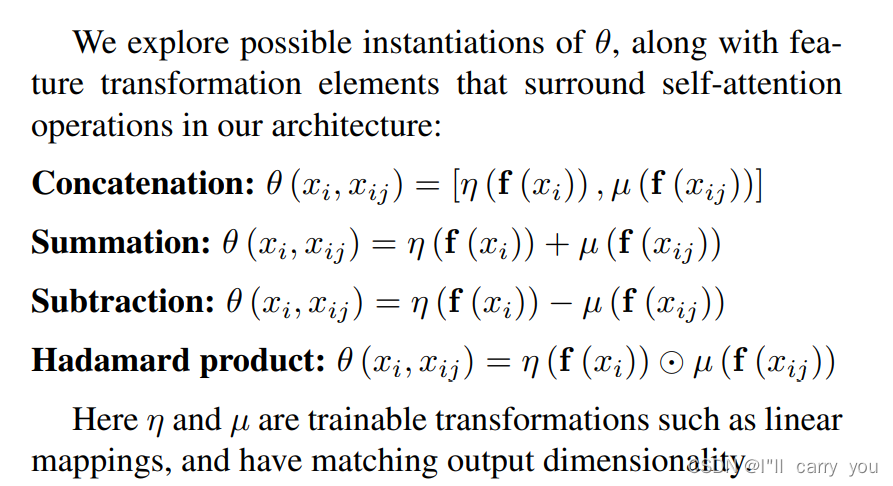

(RS-CNN computes the center point's feature from predefined geometric relations within the neighborhood, which the paper calls low-level relations, but it lacks semantic relations (high-level relations). Where do semantic relations come from? From features?) (However, RS-CNN is insufficient to learn semantic relations (high-level relations) for the lack of interaction between features.)

- self-attention: self-attention can supply these high-level relations, but it is limited by its computation cost and parameter count.

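- This paper: The goal of this work is to extend grid-based self-attention to irregular points with a high-efficiency strategy. (It proposes a bottleneck GRA module that learns both geometric shape and semantic information, and builds a wide network and a deep network on top of it: RPNet-W (width) and RPNet-D (depth). The wide network does better on classification, the deep one does better on segmentation, and both are highly efficient.)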
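To make the two kinds of relations in the list above concrete, here is a minimal sketch in plain Python. It is not the paper's formulation. The geometric relation (offset plus Euclidean distance) and the scaled dot-product weights are assumed stand-ins, and the function names `low_level_relation` and `high_level_weights` are hypothetical.

```python
import math

def low_level_relation(center, neighbor):
    """Predefined geometric relation: offset plus Euclidean distance (assumed choice)."""
    offset = [n - c for c, n in zip(center, neighbor)]
    dist = math.sqrt(sum(d * d for d in offset))
    return offset + [dist]

def high_level_weights(center_feat, neighbor_feats):
    """Self-attention style relation: softmax over scaled dot products of features."""
    scale = math.sqrt(len(center_feat))
    scores = [sum(a * b for a, b in zip(center_feat, f)) / scale
              for f in neighbor_feats]
    top = max(scores)                        # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Toy neighborhood: one center point with two neighbors (made-up values).
center_xyz = [0.0, 0.0, 0.0]
neighbor_xyz = [[3.0, 4.0, 0.0], [0.0, 0.0, 1.0]]
center_feat = [1.0, 0.0]
neighbor_feats = [[1.0, 0.0], [0.0, 1.0]]

geo = [low_level_relation(center_xyz, p) for p in neighbor_xyz]
attn = high_level_weights(center_feat, neighbor_feats)
print(geo)
print(attn)
```

The geometric relation depends only on coordinates, while the attention weights depend only on features. This is one way to read the note's question about where high-level relations come from: they come from interaction between features.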

Overall network:

Experimental results:

classification on ModelNet40

semantic segmentation on the datasets of ScanNet v2 and S3DIS (6-fold cross validation).
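As a reminder of what 6-fold cross validation on S3DIS involves, the sketch below enumerates the folds. It assumes the usual protocol in which each of the dataset's six areas is held out once for testing. The helper name `six_fold_splits` is hypothetical, and the notes do not say how the paper combines scores across folds.

```python
def six_fold_splits(areas):
    """Yield (train_areas, test_area) pairs, holding out one area per fold."""
    for held_out in areas:
        yield [a for a in areas if a != held_out], held_out

# S3DIS is organized into six areas, Area_1 through Area_6.
areas = [f"Area_{i}" for i in range(1, 7)]
splits = list(six_fold_splits(areas))

for train_areas, test_area in splits:
    print(test_area, "<-", train_areas)
```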

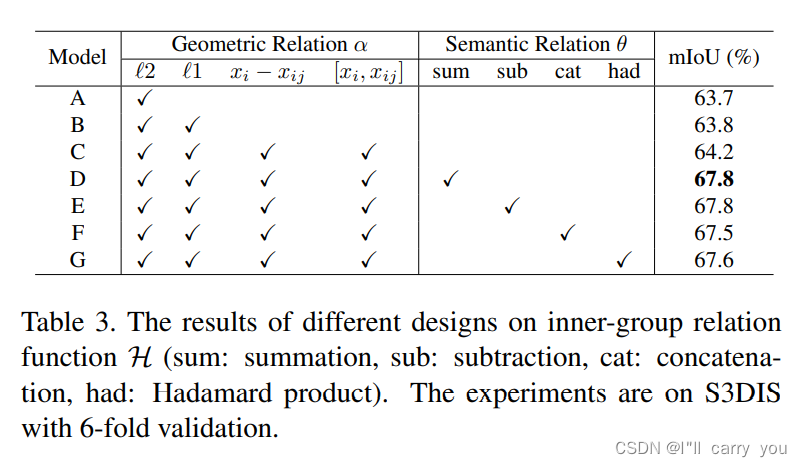

4.4. Ablation Study

Inner-group relation function H

Aggregation function A.

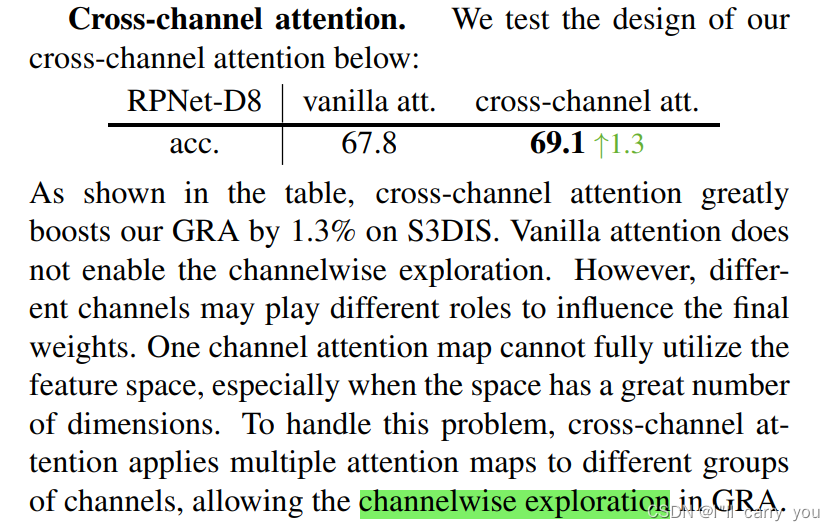

Cross-channel attention.

4.5. Analysis of Robustness

Robustness to rigid transformation.

Robustness to noise.
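The two robustness settings can be illustrated with a small plain-Python sketch. This is not the paper's evaluation protocol. The rotation angle, translation, and noise level are arbitrary assumptions, and the helpers are hypothetical. It only shows what a rigid transformation and a noise perturbation do to a point cloud, and that pairwise distances, one kind of predefined geometric relation, are unchanged by a rigid transformation.

```python
import math
import random

def rotate_z(points, angle):
    """Rotate every point about the z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c * x - s * y, s * x + c * y, z] for x, y, z in points]

def translate(points, shift):
    """Shift every point by the same vector."""
    return [[a + b for a, b in zip(p, shift)] for p in points]

def jitter(points, sigma, rng):
    """Add independent Gaussian noise to every coordinate."""
    return [[v + rng.gauss(0.0, sigma) for v in p] for p in points]

def pairwise_distances(points):
    """Euclidean distances between all unordered pairs of points."""
    return [math.dist(p, q) for i, p in enumerate(points) for q in points[i + 1:]]

rng = random.Random(0)
cloud = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(16)]

moved = translate(rotate_z(cloud, math.pi / 3), [0.5, -0.2, 1.0])  # rigid transform
noisy = jitter(cloud, 0.01, rng)                                   # noise perturbation

before = pairwise_distances(cloud)
after = pairwise_distances(moved)
max_drift = max(abs(a - b) for a, b in zip(before, after))
print("largest change in pairwise distance:", max_drift)
```

Raw coordinates change under the rigid transformation, but the pairwise distances differ only by floating-point rounding. Noise changes both.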

3. References

4. Takeaways

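The paper's line of reasoning, from analyzing PointNet, PointNet++, RS-CNN, and self-attention to motivating its own method, is worth learning from.

- PointNet, PointNet++: no interaction among points in the neighborhood.

- RS-CNN: predefined low-level geometric features, but no high-level semantic information.

- self-attention: has semantic information.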

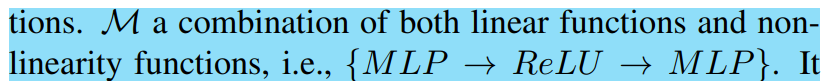

This paper: GRA has both. It learns from both low-level and high-level relations, concatenates them, and then maps the result with an MLP. It also introduces cross-channel attention.

- Low-level relation: predefined geometric features.

- High-level relations: where do they come from? From features? Do features represent semantic information?
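The "concatenate, then map with an MLP" idea can be sketched as follows. This is a simplified illustration under stated assumptions, not the paper's GRA. The low-level relation is taken to be offset plus distance, and the high-level relation is taken to be a feature difference. The MLP weights are random stand-ins for learned parameters. Grouping, the bottleneck design, and cross-channel attention are omitted, and all names are hypothetical.

```python
import math
import random

def linear(x, weight, bias):
    """Compute W x + b for a single input vector x."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]

def relu(x):
    return [max(0.0, v) for v in x]

def make_layer(n_in, n_out, rng):
    """Random weights and zero bias, standing in for learned parameters."""
    weight = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
    return weight, [0.0] * n_out

def aggregate(center_xyz, center_feat, nbr_xyz, nbr_feats, layer1, layer2):
    """Concatenate both relations per neighbor, map with an MLP, max-pool."""
    mapped = []
    for p, f in zip(nbr_xyz, nbr_feats):
        offset = [a - b for a, b in zip(p, center_xyz)]
        dist = math.sqrt(sum(d * d for d in offset))
        low = offset + [dist]                           # low-level: geometry (assumed form)
        high = [a - b for a, b in zip(f, center_feat)]  # high-level: feature interaction (assumed form)
        relation = low + high                           # concatenation
        mapped.append(linear(relu(linear(relation, *layer1)), *layer2))
    # Channel-wise max over neighbors, so the result ignores neighbor order.
    return [max(channel) for channel in zip(*mapped)]

rng = random.Random(0)
layer1 = make_layer(4 + 2, 8, rng)   # 4 geometric channels + 2 feature channels
layer2 = make_layer(8, 4, rng)

center_xyz, center_feat = [0.0, 0.0, 0.0], [1.0, 0.0]
nbr_xyz = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
nbr_feats = [[0.5, 0.5], [0.0, 1.0], [1.0, 1.0]]

out = aggregate(center_xyz, center_feat, nbr_xyz, nbr_feats, layer1, layer2)
print(out)
```

Because the MLP input mixes coordinates and features, the mapped relation can respond to both geometric shape and feature similarity. Max pooling was chosen here only because it is a common order-invariant aggregator.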