I. Darknet

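Darknet is one of the classic deep networks. By borrowing the residual connections of ResNet, it keeps very strong feature representation while avoiding the gradient problems that come with overly deep networks. The main variants are Darknet19 and Darknet53. Of course, if you feel that is still not deep enough and your hardware allows it, you can extend it to 99, 199, 999…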

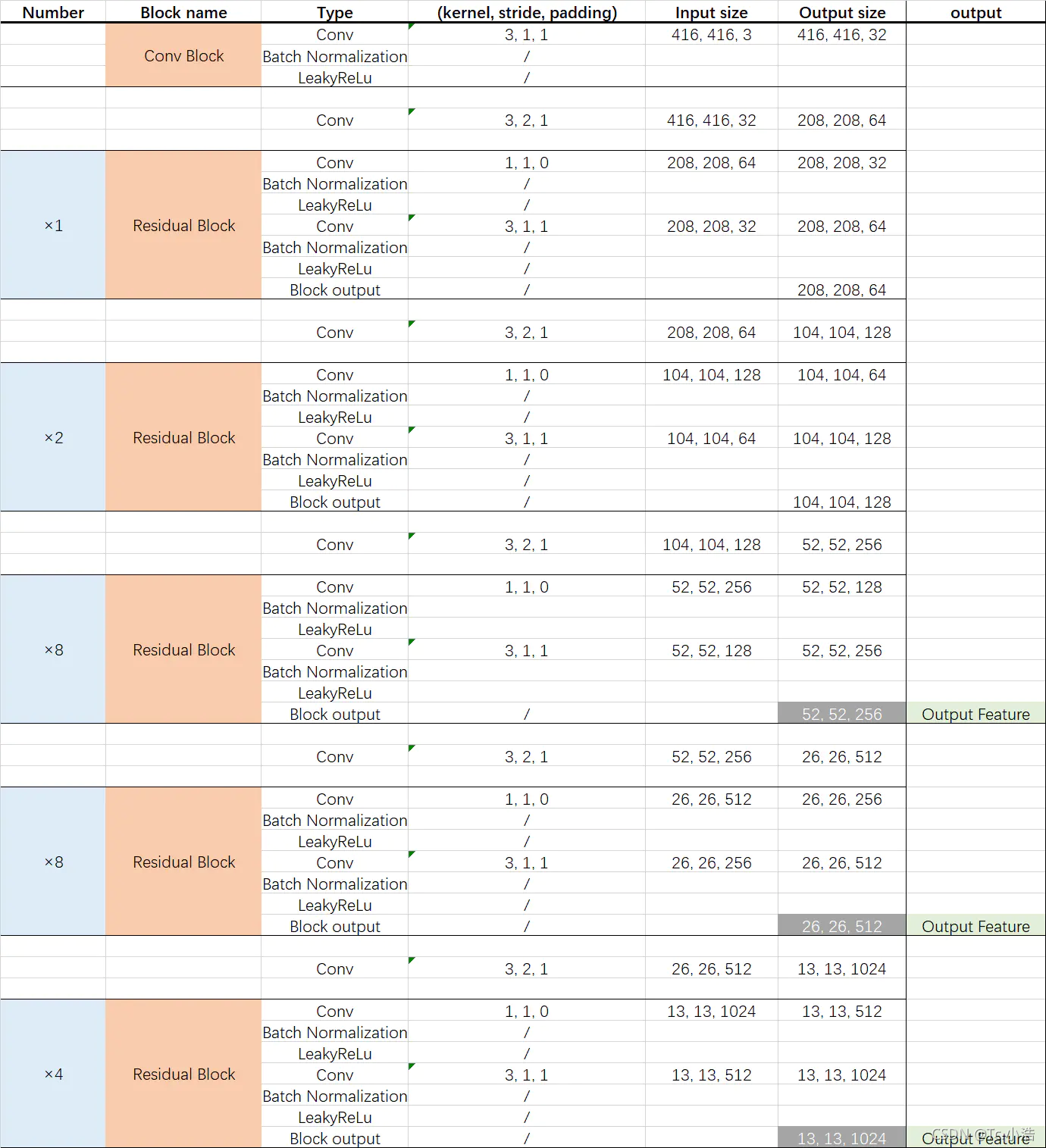

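The network structure of Darknet53 is shown in Figure 1. The blue boxes labeled ×1, ×2, and ×8 mean that the module is repeated 1, 2, and 8 times respectively, and the yellow boxes give the module's name. Conv Block means the module is an ordinary convolution module, and Residual Block means the module is a residual unit.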
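To see where the "53" comes from, the layers can be counted by hand. The sketch below is only arithmetic, not part of the model: the repeat counts (1, 2, 8, 8, 4) are taken from the implementation later in this post, and the helper name `count_conv_layers` is made up for illustration.

```python
# Count the convolutional layers in the Darknet53 backbone.
# Each stage is one stride-2 Conv Block followed by n Residual Blocks,
# and each Residual Block holds two convolutions (1x1, then 3x3).
RESIDUAL_REPEATS = (1, 2, 8, 8, 4)  # repeat counts used by the code in this post


def count_conv_layers(repeats=RESIDUAL_REPEATS):
    layers = 1  # the stem: a single 3x3 Conv Block
    for n in repeats:
        layers += 1      # the downsampling Conv Block of this stage
        layers += 2 * n  # two convolutions per Residual Block
    return layers


backbone_layers = count_conv_layers()
print(backbone_layers)      # 52 convolutional layers in the backbone
print(backbone_layers + 1)  # 53 once the final output layer is counted
```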

II. Code implementation

1. Convolution

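    import torch.nn as nn


    class Conv(nn.Module):
        def __init__(self, c_in, c_out, k, s, p, bias=True):
            """
            A custom convolution block that performs convolution + normalization + activation in one go.
            For a deep network such as DarkNet53 this saves a lot of code.
            :param c_in: in_channels, number of input channels
            :param c_out: out_channels, number of output channels
            :param k: kernel_size, size of the convolution kernel
            :param s: stride
            :param p: padding
            :param bias: …
            """
            super(Conv, self).__init__()
            self.conv = nn.Sequential(
                # convolution (bias is now forwarded; it was accepted but ignored before)
                nn.Conv2d(c_in, c_out, k, s, p, bias=bias),
                # normalization
                nn.BatchNorm2d(c_out),
                # activation function
                nn.LeakyReLU(0.1),
            )

        def forward(self, entry):
            return self.conv(entry)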
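The `k`, `s`, and `p` arguments together decide the spatial size of the output. Here is a minimal sketch of that arithmetic, assuming square inputs and no dilation. The helper name `conv_out_size` and the 416-pixel input are illustrative choices, not part of the original code.

```python
def conv_out_size(size, k, s, p):
    """Spatial size after a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * p - k) // s + 1


# The three (k, s, p) combinations used throughout this post:
same = conv_out_size(416, k=3, s=1, p=1)       # 3x3, stride 1: size unchanged
halved = conv_out_size(416, k=3, s=2, p=1)     # 3x3, stride 2: size halved
pointwise = conv_out_size(416, k=1, s=1, p=0)  # 1x1, stride 1: size unchanged

print(same, halved, pointwise)  # 416 208 416
```

This is why only the stride-2 blocks change the resolution, while the 1x1 and stride-1 3x3 blocks can be stacked freely.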

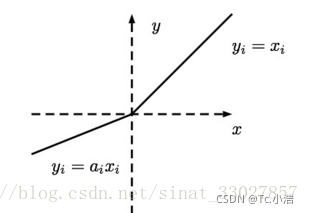

LeakyReLU数学表达式:y = max(0, x) + leak*min(0,x) (leak是一个很小的常数,这样保留了一些负轴的值,使得负轴的信息不会全部丢失)

leakyRelu的图像:
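The formula can be checked with a few numbers. This is a plain-Python sketch of the expression above for a single scalar, using the same leak of 0.1 as the `nn.LeakyReLU(0.1)` call in the convolution block. The function name `leaky_relu` is only for illustration.

```python
def leaky_relu(x, leak=0.1):
    """y = max(0, x) + leak * min(0, x) for a single number x."""
    return max(0.0, x) + leak * min(0.0, x)


# Positive inputs pass through unchanged; negative inputs are scaled by `leak`.
for x in (-2.0, -0.5, 0.0, 1.5):
    print(x, leaky_relu(x))
```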

2. Residual unit

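    class ConvResidual(nn.Module):
        def __init__(self, c_in):  # convolution * 2 + residual
            """
            A custom residual unit. Given only the channel count, it applies two convolutions,
            adds the residual, and returns a feature map with the same dimensions as the input.
            :param c_in: number of channels
            """
            super(ConvResidual, self).__init__()
            c = c_in // 2
            # 1*1 followed by 3*3 deepens the network and strengthens feature abstraction
            self.conv = nn.Sequential(
                Conv(c_in, c, 1, 1, 0),  # kernel_size = 1 reduces the channels
                Conv(c, c_in, 3, 1, 1),  # kernel_size = 3 brings the channels back up
            )

        def forward(self, entry):
            # add the residual: keeps the original information and blends in the extracted features
            return entry + self.conv(entry)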
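One side effect of reducing the channels with a 1x1 convolution before the 3x3 convolution is a smaller weight count. The sketch below is rough arithmetic that ignores bias and BatchNorm parameters. The comparison against a single full-width 3x3 convolution is my own baseline for illustration, not something the original design is stated to be measured against, and both helper names are hypothetical.

```python
def conv_weights(c_in, c_out, k):
    """Number of weights in a k x k convolution (bias and BatchNorm ignored)."""
    return c_in * c_out * k * k


def residual_unit_weights(c):
    """Weights in one residual unit: 1x1 reduce to c // 2, then 3x3 back to c."""
    half = c // 2
    return conv_weights(c, half, 1) + conv_weights(half, c, 3)


c = 256
bottleneck = residual_unit_weights(c)  # 1x1 reduce + 3x3 expand
plain = conv_weights(c, c, 3)          # one 3x3 convolution at full width

print(bottleneck)  # 327680
print(plain)       # 589824
```

So the unit contributes two layers of depth for fewer weights than one full-width 3x3 convolution at the same channel count.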

3. Finally, assemble the residual and convolution blocks according to the structure diagram

    class Darknet53(nn.Module):
        def __init__(self):
            super(Darknet53, self).__init__()
            self.conv1 = Conv(3, 32, 3, 1, 1)  # one convolution block = 1 conv layer
            self.conv2 = Conv(32, 64, 3, 2, 1)
            self.conv3_4 = ConvResidual(64)  # one residual block = 2 conv layers
            self.conv5 = Conv(64, 128, 3, 2, 1)
            self.conv6_9 = nn.Sequential(  # = 4 conv layers
                ConvResidual(128),
                ConvResidual(128),
            )
            self.conv10 = Conv(128, 256, 3, 2, 1)
            self.conv11_26 = nn.Sequential(  # = 16 conv layers
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
                ConvResidual(256),
            )
            self.conv27 = Conv(256, 512, 3, 2, 1)
            self.conv28_43 = nn.Sequential(  # = 16 conv layers
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
                ConvResidual(512),
            )
            self.conv44 = Conv(512, 1024, 3, 2, 1)
            self.conv45_52 = nn.Sequential(  # = 8 conv layers
                ConvResidual(1024),
                ConvResidual(1024),
                ConvResidual(1024),
                ConvResidual(1024),
            )

        def forward(self, entry):
            conv1 = self.conv1(entry)
            conv2 = self.conv2(conv1)
            conv3_4 = self.conv3_4(conv2)
            conv5 = self.conv5(conv3_4)
            conv6_9 = self.conv6_9(conv5)
            conv10 = self.conv10(conv6_9)
            conv11_26 = self.conv11_26(conv10)
            conv27 = self.conv27(conv11_26)
            conv28_43 = self.conv28_43(conv27)
            conv44 = self.conv44(conv28_43)
            conv45_52 = self.conv45_52(conv44)
            return conv45_52, conv28_43, conv11_26  # used by YOLOv3, so three feature maps are returned
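The three returned feature maps sit at different resolutions. Their shapes can be worked out without running the model, as in this sketch. It assumes a square input and uses 416 pixels purely as an example; the helper names are hypothetical.

```python
def conv_out_size(size, k=3, s=2, p=1):
    """Spatial size after a convolution: floor((size + 2p - k) / s) + 1."""
    return (size + 2 * p - k) // s + 1


def backbone_output_shapes(input_size=416):
    """(channels, height, width) of the three maps returned by forward()."""
    # Output channels of the five stride-2 Conv Blocks, in order:
    # conv2, conv5, conv10, conv27, conv44.
    stage_channels = [64, 128, 256, 512, 1024]
    size = input_size  # conv1 has stride 1, so it keeps the resolution
    shapes = []
    for channels in stage_channels:
        size = conv_out_size(size)  # each stage halves the resolution
        shapes.append((channels, size, size))  # residual blocks keep this shape
    # forward() returns the deepest map first: conv45_52, conv28_43, conv11_26
    return shapes[4], shapes[3], shapes[2]


print(backbone_output_shapes(416))
# ((1024, 13, 13), (512, 26, 26), (256, 52, 52))
```

The three maps therefore correspond to overall strides of 32, 16, and 8 relative to the input.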

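The Darknet53 class above stops at 52 convolutional layers because the original Darknet53 also includes an output layer: the first 52 layers extract features, and the last layer produces the final output. Here you define one or more extra layers yourself, according to your actual needs, to fuse and output the features extracted by the first 52 layers. Doesn't it all suddenly click? Just 53 lines of code are enough to write the classic deep network Darknet53.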
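As one possible example of such an extra layer, here is a minimal sketch of a classification head placed on top of the deepest feature map. It is an assumption, not the head from this post or from YOLOv3: the class name `ClassifierHead`, the default of 1000 classes, and the random stand-in tensor (shaped as if it came from a 416x416 input) are all chosen for illustration.

```python
import torch
from torch import nn


class ClassifierHead(nn.Module):
    """Hypothetical output layer: global average pooling + one linear layer."""

    def __init__(self, c_in=1024, num_classes=1000):
        super().__init__()
        self.pool = nn.AdaptiveAvgPool2d(1)     # (N, C, H, W) -> (N, C, 1, 1)
        self.fc = nn.Linear(c_in, num_classes)  # the extra layer after the 52

    def forward(self, features):
        pooled = torch.flatten(self.pool(features), 1)  # (N, C)
        return self.fc(pooled)                          # raw class scores


# Stand-in for the deepest backbone output (conv45_52): 1024 channels, 13x13.
features = torch.randn(2, 1024, 13, 13)
logits = ClassifierHead()(features)
print(logits.shape)  # torch.Size([2, 1000])
```

A detection model such as YOLOv3 would instead attach its own heads to all three returned feature maps; the idea of adding task-specific layers after the shared backbone is the same.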