First, Hadoop needs a Java runtime, so set up the JDK and the Hadoop environment variables before you start.

1. Cluster plan

| Hostname | IP | NameNode | DataNode | Yarn | ZooKeeper | JournalNode |
| --- | --- | --- | --- | --- | --- | --- |
| Zhiyou01 | 192.168.233.128 | Yes | Yes | No | Yes | Yes |
| Zhiyou02 | 192.168.233.130 | Yes | Yes | No | Yes | Yes |
| Zhiyou03 | 192.168.233.131 | No | Yes | Yes | Yes | Yes |

2. Preparation (configure on one machine, then copy to the other nodes)

1. Configure the JDK and Hadoop environment variables and the hosts file

[root@zhiyou bin]# vi /etc/hosts

#127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

#::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

# Comment out or delete the two lines above

192.168.233.128 zhiyou01

192.168.233.130 zhiyou02

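192.168.233.131 zhiyou03

2. Configure passwordless SSH login

Before configuring passwordless login, make sure the firewall is stopped, the nodes can ping each other, and every node has a static IP.

systemctl stop firewalld.service # stop firewalld

systemctl disable firewalld.service # keep firewalld from starting at boot

# Go to the .ssh directory under the home directory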

[root@zhiyou01 /]# cd ~/.ssh

[root@zhiyou01 .ssh]#

[root@zhiyou01 .ssh]# ssh-keygen -t rsa # press Enter at every prompt

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

00:64:8c:5d:6a:fc:fc:70:16:62:de:b8:9e:15:b7:b6 root@zhiyou01

The key's randomart image is:

+--[ RSA 2048]----+

| =+.. |

| .ooo |

| + + . |

| . = = . |

| * S . |

| * o . |

| . o o |

| . o . . |

| o E |

+-----------------+

[root@zhiyou01 .ssh]#

[root@zhiyou01 .ssh]# ls

id_rsa id_rsa.pub known_hosts

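How passwordless login works (simplified)

1. Generate a key pair (public and private key) on server A: ssh-keygen -t rsa

2. Copy the public key to server B (ssh-copy-id 192.168.233.129); this creates an authorized_keys file on server B, or appends to it if it already exists.

3. A sends a login request to B.

4. When B receives the request, it looks in authorized_keys for A's public key. If the key is there, B generates a random string, encrypts it with A's public key, and sends it to A.

5. A decrypts the string with its private key and sends the decrypted string back to B.

6. B compares the string it receives with the one it generated; if they match, A is logged in without a password.

7. known_hosts on A records the host keys of the servers A has already connected to.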
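Steps 1 and 2 have to be repeated for every node in the cluster. A minimal sketch of that loop follows; it assumes the key pair already exists, that root can still log in to each host with a password, and that the host names resolve through /etc/hosts. The function name and the DRY_RUN switch are illustrative and not part of the original procedure.

```shell
#!/bin/sh
# Host names taken from the /etc/hosts entries above.
NODES="zhiyou01 zhiyou02 zhiyou03"

# Print (DRY_RUN=1, the default here) or actually run ssh-copy-id for every node.
copy_key_to_nodes() {
    for node in $NODES; do
        cmd="ssh-copy-id root@$node"
        if [ "${DRY_RUN:-1}" = "1" ]; then
            echo "$cmd"
        else
            $cmd
        fi
    done
}

copy_key_to_nodes
```

With the default DRY_RUN=1 the script only prints the three commands; running it with DRY_RUN=0 would prompt for each node's password once. The local node is included on purpose, since the fencing setup later also relies on SSH.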

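3. Configure ZooKeeper

Installation steps:

Configure ZooKeeper on one machine, then send it to the other machines.

1. Upload ZooKeeper to /usr/zookeeper

[root@zhiyou01 zookeeper]# ls

zookeeper-3.4.12.tar.gz

2. Extract the archive

[root@zhiyou01 zookeeper]# tar -zxvf zookeeper-3.4.12.tar.gz

3. Edit the configuration in $zookeeper/conf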

3.1 Create zoo.cfg

[root@zhiyou01 zookeeper]# cd /usr/zookeeper/zookeeper-3.4.12/conf

[root@zhiyou01 conf]# ls

configuration.xsl log4j.properties zoo_sample.cfg

[root@zhiyou01 conf]#

[root@zhiyou01 conf]# mv zoo_sample.cfg zoo.cfg # create zoo.cfg

[root@zhiyou01 conf]# ls

configuration.xsl log4j.properties zoo.cfg

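[root@zhiyou01 conf]#

The settings in zoo.cfg have the following meanings:

1. tickTime: client-server heartbeat interval

The interval at which heartbeats are exchanged between ZooKeeper servers, or between a client and a server: one heartbeat is sent every tickTime. tickTime is in milliseconds.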

tickTime=2000

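2. initLimit: leader-follower initial connection limit

The maximum number of heartbeats (multiples of tickTime) that are tolerated while a follower (F) makes its initial connection to the leader (L).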

initLimit=5

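3. syncLimit: leader-follower sync limit

The maximum number of heartbeats (multiples of tickTime) that are tolerated between a request and its response between a follower and the leader.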

syncLimit=2

4. dataDir: data directory

The directory where ZooKeeper stores its data. By default, ZooKeeper also writes its transaction log files to this directory.

dataDir=/home/michael/opt/zookeeper/data

5. clientPort: client connection port

The port on which clients connect to the ZooKeeper server. ZooKeeper listens on this port and accepts client requests.

clientPort=2181

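6. Server name and address: cluster information (server ID, server address, leader-follower communication port, election port)

This setting uses a special format: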

server.N=YYY:A:B
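To make the settings above concrete, here is a small sketch that derives the two timeouts from tickTime, initLimit and syncLimit, and prints one server.N=YYY:A:B line per node. It assumes host names in place of IP addresses and IDs counted from 1 without zero padding; the function name is illustrative, and ports 2888 and 3888 are the ones used in this guide.

```shell
#!/bin/sh
TICK_TIME=2000   # milliseconds, from tickTime above
INIT_LIMIT=5     # from initLimit above
SYNC_LIMIT=2     # from syncLimit above

# Print one server.N=YYY:A:B line per address, numbering the servers from 1.
# A = 2888 (leader-follower port), B = 3888 (election port).
server_lines() {
    n=1
    for addr in "$@"; do
        echo "server.$n=$addr:2888:3888"
        n=$((n + 1))
    done
}

echo "initial connection timeout: $((INIT_LIMIT * TICK_TIME)) ms"
echo "sync timeout: $((SYNC_LIMIT * TICK_TIME)) ms"
server_lines zhiyou01 zhiyou02 zhiyou03
```

With the values shown in this guide, a follower has 10000 ms to connect to the leader and 4000 ms to answer a sync request.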

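3.2 Modify zoo.cfg

3.2.1 Change dataDir

dataDir=/usr/zookeeper/data

Then add the server list:

server.01=192.168.233.129:2888:3888

server.02=192.168.233.132:2888:3888

server.03=192.168.233.133:2888:3888

Create a file named myid in dataDir. Its content is the N from server.N

(for server.01 the content is 01; each N corresponds to the IP address on the same line)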

zhiyou01 ------- 01

zhiyou02 ------- 02

zhiyou03 ------- 03
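Because the myid value differs on every node, it is easy to copy the wrong file around. The sketch below derives the ID from the host name using the mapping above. The function names are hypothetical, and the default directory is the dataDir used in this guide.

```shell
#!/bin/sh
# Map a host name to its ZooKeeper ID, following the table above.
myid_for_host() {
    case "$1" in
        zhiyou01) echo "01" ;;
        zhiyou02) echo "02" ;;
        zhiyou03) echo "03" ;;
        *) echo "unknown host: $1" >&2; return 1 ;;
    esac
}

# Write the ID to DATA_DIR/myid; DATA_DIR defaults to /usr/zookeeper/data.
write_myid() {
    host=$1
    data_dir=${2:-/usr/zookeeper/data}
    id=$(myid_for_host "$host") || return 1
    mkdir -p "$data_dir" && echo "$id" > "$data_dir/myid"
}
```

On each node this would be called as write_myid "$(hostname)", assuming the node's host name matches its /etc/hosts entry.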

[root@zhiyou01 data]# echo "01">myid

(The JDK and /etc/profile must be sent to the other nodes too; after copying, run source /etc/profile on each of them.)

scp -r LOCAL_DIR USER@IP:REMOTE_DIR

[root@zhiyou01 data]# scp -r /usr/java/ [email protected]:/usr/java/

[root@zhiyou01 data]# scp -r /usr/java/ [email protected]:/usr/java/

[root@zhiyou01 data]# scp -r /etc/profile [email protected]:/etc/profile

[root@zhiyou01 data]# scp -r /etc/profile [email protected]:/etc/profile

Send ZooKeeper over as well:

[root@zhiyou01 data]# scp -r /usr/zookeeper/ [email protected]:/usr/zookeeper/

[root@zhiyou01 data]# scp -r /usr/zookeeper/ [email protected]:/usr/zookeeper/

6. On zhiyou02, set myid to 02

[root@zhiyou02 usr]# cd /usr/zookeeper/data/

[root@zhiyou02 data]# echo "02">myid

[root@zhiyou02 data]# cat myid

02

[root@zhiyou02 data]#

On zhiyou03, set myid to 03

[root@zhiyou03 usr]# cd /usr/zookeeper/data/

[root@zhiyou03 data]# echo "03">myid

[root@zhiyou03 data]# cat myid

03

7. Start ZooKeeper on each node

[root@zhiyou01 ~]# cd /usr/zookeeper/zookeeper-3.4.12/bin/

[root@zhiyou01 bin]# ls

README.txt zkCleanup.sh zkCli.cmd zkCli.sh zkEnv.cmd zkEnv.sh zkServer.cmd zkServer.sh

[root@zhiyou01 bin]# ./zkServer.sh start

8. Check the status

[root@zhiyou01 bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/zookeeper/zookeeper-3.4.12/bin/../conf/zoo.cfg

Mode: follower

[root@zhiyou01 bin]#

9. Check the processes: jps

[root@zhiyou03 bin]# jps

18214 Jps

18153 QuorumPeerMain

Test: kill the leader's process

[root@zhiyou03 bin]# kill -9 18153

[root@zhiyou03 bin]# jps

18224 Jps

A new leader is elected:

[root@zhiyou02 bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/zookeeper/zookeeper-3.4.12/bin/../conf/zoo.cfg

Mode: follower

[root@zhiyou02 bin]# ./zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /usr/zookeeper/zookeeper-3.4.12/bin/../conf/zoo.cfg

Mode: leader
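When repeating this test it helps to pull just the role out of the status output. The filter below is a small sketch that reads zkServer.sh status output on standard input and prints the value of the Mode line. The function name is illustrative, and the sample input is copied from the transcript above.

```shell
#!/bin/sh
# Print the role ("leader", "follower", ...) found in `zkServer.sh status` output on stdin.
zk_mode() {
    sed -n 's/^Mode: *//p'
}

# Sample output copied from the transcript above.
printf '%s\n' \
    'ZooKeeper JMX enabled by default' \
    'Using config: /usr/zookeeper/zookeeper-3.4.12/bin/../conf/zoo.cfg' \
    'Mode: leader' | zk_mode
```

On a live node the same filter would be used as ./zkServer.sh status | zk_mode. Running it on all three nodes before and after the kill shows which node took over as leader.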

[root@zhiyou02 bin]#

1. Enter the ZooKeeper client (zkCli)

[root@zhiyou01 bin]# ./zkCli.sh

Connecting to localhost:2181

2018-05-31 17:03:00,806 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.12-e5259e437540f349646870ea94dc2658c4e44b3b, built on 03/27/2018 03:55 GMT

2018-05-31 17:03:00,822 [myid:] - INFO [main:Environment@100] - Client environment:host.name=zhiyou01

2018-05-31 17:03:00,822 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.8.0_141

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/java/jdk1.8.0_141/jre

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/zookeeper/zookeeper-3.4.12/bin/../build/classes:/usr/zookeeper/zookeeper-3.4.12/bin/../build/lib/*.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/slf4j-log4j12-1.7.25.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/slf4j-api-1.7.25.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/netty-3.10.6.Final.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/log4j-1.2.17.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/jline-0.9.94.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../lib/audience-annotations-0.5.0.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../zookeeper-3.4.12.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../src/java/lib/*.jar:/usr/zookeeper/zookeeper-3.4.12/bin/../conf:/usr/java/jdk1.8.0_141/lib/

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2018-05-31 17:03:00,829 [myid:] - INFO [main:Environment@100] - Client environment:os.version=3.10.0-514.el7.x86_64

2018-05-31 17:03:00,830 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2018-05-31 17:03:00,830 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2018-05-31 17:03:00,830 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/zookeeper/zookeeper-3.4.12/bin

2018-05-31 17:03:00,831 [myid:] - INFO [main:ZooKeeper@441] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@22d8cfe0

Welcome to ZooKeeper!

2018-05-31 17:03:00,952 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1028] - Opening socket connection to server localhost/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2018-05-31 17:03:01,075 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@878] - Socket connection established to localhost/127.0.0.1:2181, initiating session

2018-05-31 17:03:01,105 [myid:] - INFO [main-SendThread(localhost:2181):ClientCnxn$SendThread@1302] - Session establishment complete on server localhost/127.0.0.1:2181, sessionid = 0x10000b834fd0000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0]

View the contents of ZooKeeper

[zk: localhost:2181(CONNECTED) 0] ls / # list the root

[zookeeper]

Create a node

create /PATH DATA

[zk: localhost:2181(CONNECTED) 1] create /zhiyou01 hello

WATCHER::

WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/

Created /zhiyou01

[zk: localhost:2181(CONNECTED) 2]

View the node's content

[zk: localhost:2181(CONNECTED) 5] get /zhiyou01

hello

cZxid = 0x500000002

ctime = Thu May 31 17:06:02 CST 2018

mZxid = 0x500000002

mtime = Thu May 31 17:06:02 CST 2018

pZxid = 0x500000002

cversion = 0

dataVersion = 0

aclVersion = 0

ephemeralOwner = 0x0

dataLength = 5

numChildren = 0

[zk: localhost:2181(CONNECTED) 6]

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper, zhiyou01]

[zk: localhost:2181(CONNECTED) 1]

3. Modify the Hadoop configuration files

cd /usr/hadoop/hadoop-2.7.3/etc/hadoop/

3.1. core-site.xml

<!-- Set the HDFS nameservice to ns -->
<property>
<name>fs.defaultFS</name>
<value>hdfs://ns</value>
</property>

<!-- Directory for Hadoop's temporary data -->
<property>
<name>hadoop.tmp.dir</name>
<value>/zhiyou/hadoop/tmp</value>
</property>

<property>
<name>io.file.buffer.size</name>
<value>4096</value>
</property>

<!-- ZooKeeper addresses -->
<property>
<name>ha.zookeeper.quorum</name>
<value>zhiyou01:2181,zhiyou02:2181,zhiyou03:2181</value>
</property>

<property>
<name>ipc.client.connect.max.retries</name>
<value>100</value>
<description>Indicates the number of retries a client will make to establish
a server connection.
</description>
</property>

<property>
<name>ipc.client.connect.retry.interval</name>
<value>10000</value>
<description>Indicates the number of milliseconds a client will wait for
before retrying to establish a server connection.
</description>
</property>

3.2. hdfs-site.xml

<!-- Set the HDFS nameservice to ns; it must match the value in core-site.xml -->
<property>
<name>dfs.nameservices</name>
<value>ns</value>
</property>

<!-- ns has two NameNodes: nn1 and nn2 -->
<property>
<name>dfs.ha.namenodes.ns</name>
<value>nn1,nn2</value>
</property>

<!-- RPC address of nn1 -->
<property>
<name>dfs.namenode.rpc-address.ns.nn1</name>
<value>zhiyou01:9000</value>
</property>

<!-- HTTP address of nn1 -->
<property>
<name>dfs.namenode.http-address.ns.nn1</name>
<value>zhiyou01:50070</value>
</property>

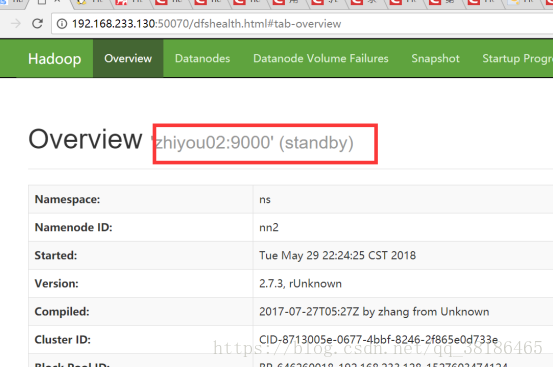

<!-- nn2的RPC通信地址 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn2</name>

<value>zhiyou02:9000</value>

</property>

<!-- nn2的http通信地址 -->

<property>

<name>dfs.namenode.http-address.ns.nn2</name>

<value>zhiyou02:50070</value>

</property>

<!-- 指定NameNode的元数据在JournalNode上的存放位置 -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://zhiyou01:8485;zhiyou02:8485;zhiyou03:8485/ns</value>

</property>

<!-- 指定JournalNode在本地磁盘存放数据的位置 -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/zhiyou/hadoop/journal</value>

</property>

<!-- 开启NameNode故障时自动切换 -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- 配置失败自动切换实现方式 -->

<property>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- 配置隔离机制 -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<!-- 使用隔离机制时需要ssh免登陆 -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:///zhiyou/hadoop/hdfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:///zhiyou/hadoop/hdfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- 在NN和DN上开启WebHDFS (REST API)功能,不是必须 -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property> 3.3. mapred-site.xml

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property> 3.4. yarn-site.xml

<!-- 指定nodemanager启动时加载server的方式为shuffle server -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<!-- 指定resourcemanager地址 -->

<property>

<name>yarn.resourcemanager.hostname</name>

<value>zhiyou03</value>

</property> 3.5. hadoop-env.sh

找到文件的export JAVA_HOME=${JAVA_HOME} 一行,将其修改为 export JAVA_HOME=/usr/java/xxx
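The comment in hdfs-site.xml says that the nameservice must agree with core-site.xml. A rough way to check that before starting anything is sketched below. The two function names are hypothetical, and the lookup is line-oriented: it assumes each name element and each value element sits on its own line, as in the snippets above, so it is not a general XML parser.

```shell
#!/bin/sh
# Print the value that follows <name>KEY</name> in a Hadoop *-site.xml file.
get_prop() {
    awk -v key="$1" '
        index($0, "<name>" key "</name>") { found = 1; next }
        found && /<value>/ {
            sub(/.*<value>/, ""); sub(/<\/value>.*/, ""); print; exit
        }
    ' "$2"
}

# Succeed only if fs.defaultFS is hdfs:// followed by the dfs.nameservices value.
check_nameservice() {
    conf_dir=$1
    fs=$(get_prop fs.defaultFS "$conf_dir/core-site.xml")
    ns=$(get_prop dfs.nameservices "$conf_dir/hdfs-site.xml")
    if [ "$fs" = "hdfs://$ns" ]; then
        echo "ok: $fs"
    else
        echo "mismatch: fs.defaultFS=$fs dfs.nameservices=$ns" >&2
        return 1
    fi
}
```

It would be called as check_nameservice /usr/hadoop/hadoop-2.7.3/etc/hadoop. Once Hadoop is on the PATH, hdfs getconf -confKey fs.defaultFS reports the value Hadoop itself resolves, which is the more reliable check.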

3.6. Edit the slaves file (the hosts that run a DataNode)

List every host that should run a DataNode:

zhiyou01

zhiyou02

zhiyou03

4. Copy the following to zhiyou02 and zhiyou03

java

hadoop

zookeeper

/etc/hosts

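/etc/profile

5. Start the cluster

Note: "./" means the command is run from the Hadoop sbin directory.

5.1. Start ZooKeeper on each node

[root@zhiyou3 bin]# ./zkServer.sh start

5.2. Start the JournalNode on each of zhiyou01, zhiyou02 and zhiyou03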

[root@zhiyou sbin]# ./hadoop-daemon.sh start journalnode

starting journalnode, logging to /usr/hadoop/hadoop-2.7.3/logs/hadoop-root-journalnode-zhiyou.out

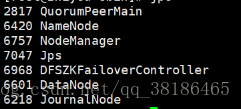

[root@zhiyou sbin]# jps

2817 QuorumPeerMain

2908 JournalNode

2958 Jps

[root@zhiyou sbin]#

5.3. Format ZKFC on zhiyou01

[root@zhiyou sbin]# hdfs zkfc -formatZK

5.4. Format HDFS on zhiyou01

hadoop namenode -format

5.5. Start the NameNode on zhiyou01

[root@zhiyou sbin]# ./hadoop-daemon.sh start namenode

starting namenode, logging to /usr/hadoop/hadoop-2.7.3/logs/hadoop-root-namenode-zhiyou.out

[root@zhiyou sbin]# jps

2817 QuorumPeerMain

6420 NameNode

6218 JournalNode

6493 Jps

[root@zhiyou sbin]#

5.6. On zhiyou02, sync the metadata and start the standby NameNode

hdfs namenode -bootstrapStandby

./hadoop-daemon.sh start namenode

5.7. Start the DataNodes from zhiyou01

./hadoop-daemons.sh start datanode

5.8. Start YARN on zhiyou03

./start-yarn.sh

5.9. Start ZKFC from zhiyou01

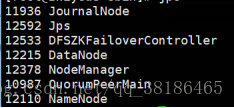

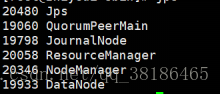

./hadoop-daemons.sh start zkfc

6. Check jps

Zhiyou01

Zhiyou02

Zhiyou03

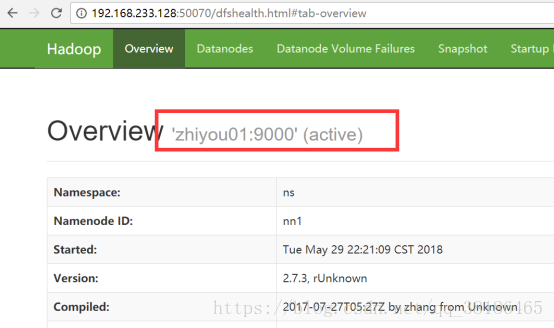

7. Test high availability

7.1. Zhiyou01

7.2. Zhiyou02

Kill the active NameNode process.

The other NameNode becomes active.
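The failover can also be confirmed from the command line rather than the web pages. The sketch below asks each NameNode for its HA state with hdfs haadmin -getServiceState. The IDs nn1 and nn2 come from dfs.ha.namenodes.ns above; the function name is illustrative, and a NameNode that cannot be reached is reported as unknown.

```shell
#!/bin/sh
# NameNode IDs from dfs.ha.namenodes.ns in hdfs-site.xml.
NAMENODES="nn1 nn2"

# Print the ID of every NameNode that reports the given state (active or standby).
namenodes_in_state() {
    for nn in $NAMENODES; do
        state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null) || state="unknown"
        if [ "$state" = "$1" ]; then
            echo "$nn"
        fi
    done
}

# Only query the cluster when the hdfs command is actually available.
if command -v hdfs >/dev/null 2>&1; then
    echo "active: $(namenodes_in_state active)"
    echo "standby: $(namenodes_in_state standby)"
fi
```

Run it once before the test, kill the NameNode process on the active host (find its PID with jps, then kill -9), and run it again: the ID that was standby should now be listed as active.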