一、网络及主机名配置

| ip地址 | 主机名 | 安装软件 | j进程 |

| 192.168.1.2 | master.hadoop | jdk,hadoop,zookeeper | namenode,datanode,ZKFC,ResourceManager,nodemanager |

| 192.168.1.3 | slave1.hadoop | jdk.hadoop,zookeeper | namenode,datanode,ZKFC,ResouceManager,nodemanager |

| 192.168.1.4 | slave1.hadoop | jdk.hadoop.zookeeper | datanode,nodemanager |

1.修改主机名

[root@master /]# vi /etc/hostname #主机名

master.hadoop[root@master ~]# hostname master.hadoop

[root@master ~]# hostname

master.hadoop其余结点一样的操作

[root@master ~]# cd /etc/hosts

192.168.1.2 master.hadoop

192.168.1.3 slave1.hadoop

192.168.1.4 slave2.hadoop

[root@master ~]# scp /etc/hosts 192.168.1.3:/etc

[root@master ~]# scp /etc/hosts 192.168.1.4:/etc2.修改网卡配置

硬件配置:取消动态ip地址,使用固定的

[root@master network-scripts]# cd /etc/sysconfig/network-scripts/

[root@master network-scripts]# ll

总用量 244

-rw-r--r--. 1 root root 363 7月 6 08:09 ifcfg-ens33

-rw-r--r--. 1 root root 254 5月 3 2017 ifcfg-lo

lrwxrwxrwx. 1 root root 24 4月 27 06:30 ifdown -> ../../../usr/sbin/ifdown

-rwxr-xr-x. 1 root root 654 5月 3 2017 ifdown-bnep

-rwxr-xr-x. 1 root root 6571 5月 3 2017 ifdown-eth

-rwxr-xr-x. 1 root root 6190 8月 4 2017 ifdown-ib

[root@master network-scripts]# vi ifcfg-ens33

[root@master network-scripts]# TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=yes

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=f090a391-d137-4d93-8594-03baeada0d1f

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.1.2

PREFIX=24

GATEWAY=192.168.1.1

IPV6_PRIVACY=no

DNS1=192.168.1.13.重启网卡

[root@master network-scripts]# service network restart

#查看网络配置

[root@master network-scripts]# ifconfig

ens33: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.2 netmask 255.255.255.0 broadcast 192.168.1.255

inet6 fe80::5d8a:5d86:d69a:1d54 prefixlen 64 scopeid 0x20<link>

ether 00:0c:29:be:6c:d6 txqueuelen 1000 (Ethernet)

RX packets 2787 bytes 199847 (195.1 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1488 bytes 96410 (94.1 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1 (Local Loopback)

RX packets 1477 bytes 143901 (140.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1477 bytes 143901 (140.5 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

virbr0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 192.168.122.1 netmask 255.255.255.0 broadcast 192.168.122.255

ether 52:54:00:a0:aa:ad txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 04、centos7关闭防火墙

//临时关闭

systemctl stop firewalld

//禁止开机启动

systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.二、安装JDK

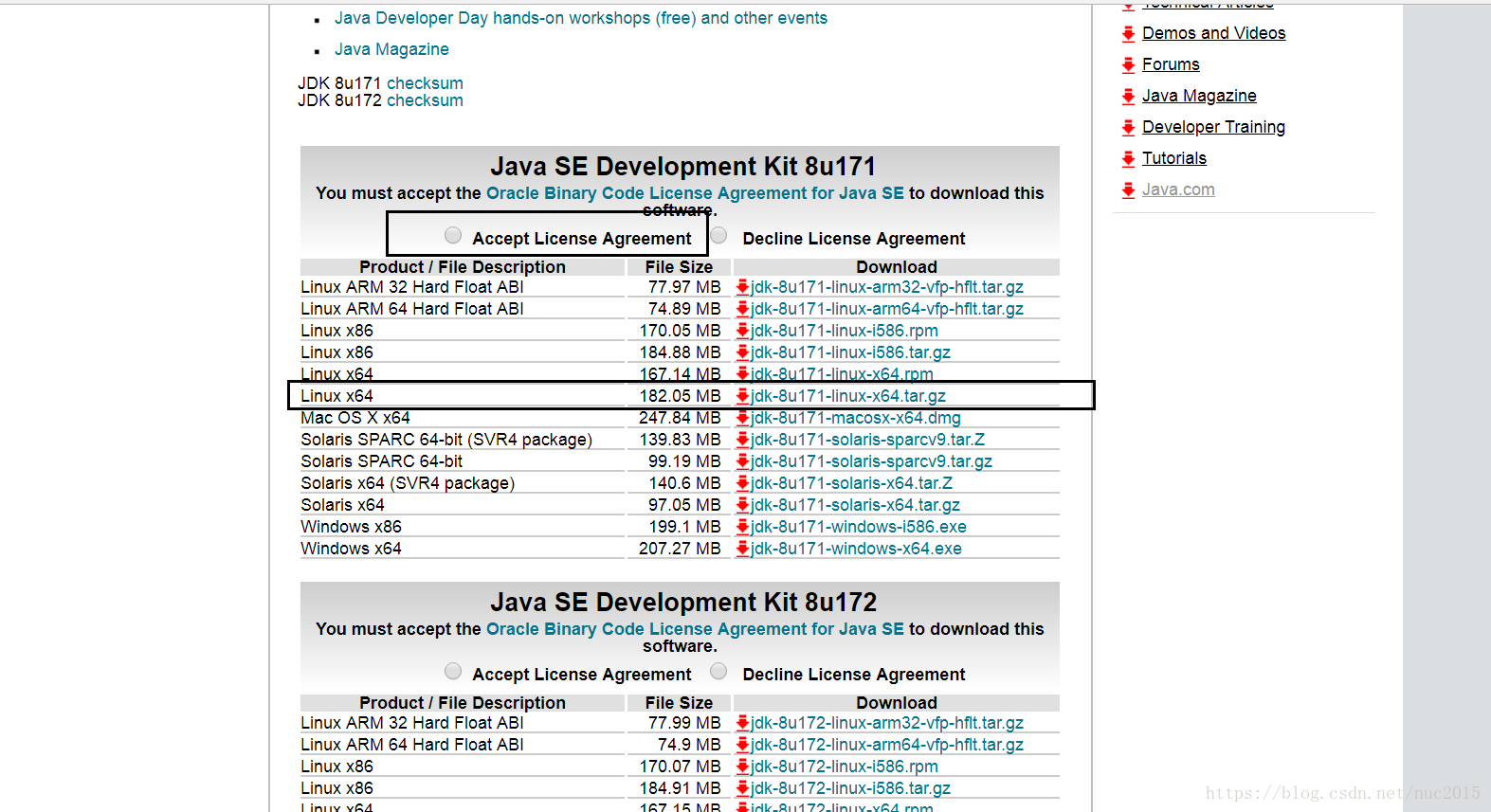

1、下载linux版本的jdk

jdk1.8下载地址

2、解压配置并环境变量

[root@master apps]# tar -zxvf jdk-8u171-linux-x64.tar.gz

[root@master apps]# vi /etc/profile

在其中添加一下内容:

export JAVA_HOME=/apps/jdk1.8.0_171

export JRE_HOME=/apps/jdk1.8.0_171/jre

export CLASS_PATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin然后更新配置文件

[root@master apps]# source /etc/profile[root@master apps]# java -version

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)三、配置SSH免密登录

1、每台机器生成自己的私钥和公钥

[root@master apps]# cd /root/.ssh/

[root@master .ssh]# ssh-keygen -t rsa

然后一直回车什么也不输入,直到结束。

[root@master .ssh]# touch authorized_keys

[root@master .ssh]# ll

总用量 16

-rw-r--r--. 1 root root 2000 7月 4 20:48 authorized_keys

-rw-------. 1 root root 1679 6月 19 15:17 id_rsa

-rw-r--r--. 1 root root 400 6月 19 15:17 id_rsa.pub

-rw-r--r--. 1 root root 935 7月 4 20:54 known_hosts

[root@master .ssh]# cat id_rsa.pub >> authorized_keys在另外两台机器同样的执行以下操作:

[root@slave apps]# cd /root/.ssh/

[root@salve .ssh]# ssh-keygen -t rsa

[root@slave .ssh]# ll

-rw-------. 1 root root 1679 6月 19 15:17 id_rsa

-rw-r--r--. 1 root root 400 6月 19 15:17 id_rsa.pub

-rw-r--r--. 1 root root 935 7月 4 20:54 known_hosts

将自己机器上的id_rsa.pub追加到主节点的authorized_keys文件中。

[root@slave .ssh]# scp id_rsa.pub 192.168.1.2:/

去主节点追加

[root@master .ssh]# cd /

[root@master .ssh]# cat id_rsa.pub >> /root/.ssh/authorized_keys3、将主节点上的authorized_keys分发给每一个从节点,测试登录

[root@master .ssh]# scp authorized_keys 192.168.1.3:$PWD

[root@master .ssh]# scp authorized_keys 192.168.1.4:$PWD

[root@master .ssh]# ssh 192.168.1.3

Last login: Fri Jul 6 16:02:23 2018

[root@slave1 ~]# 四、hadoop2.8.0+zookeeper-3.4.10搭建HA

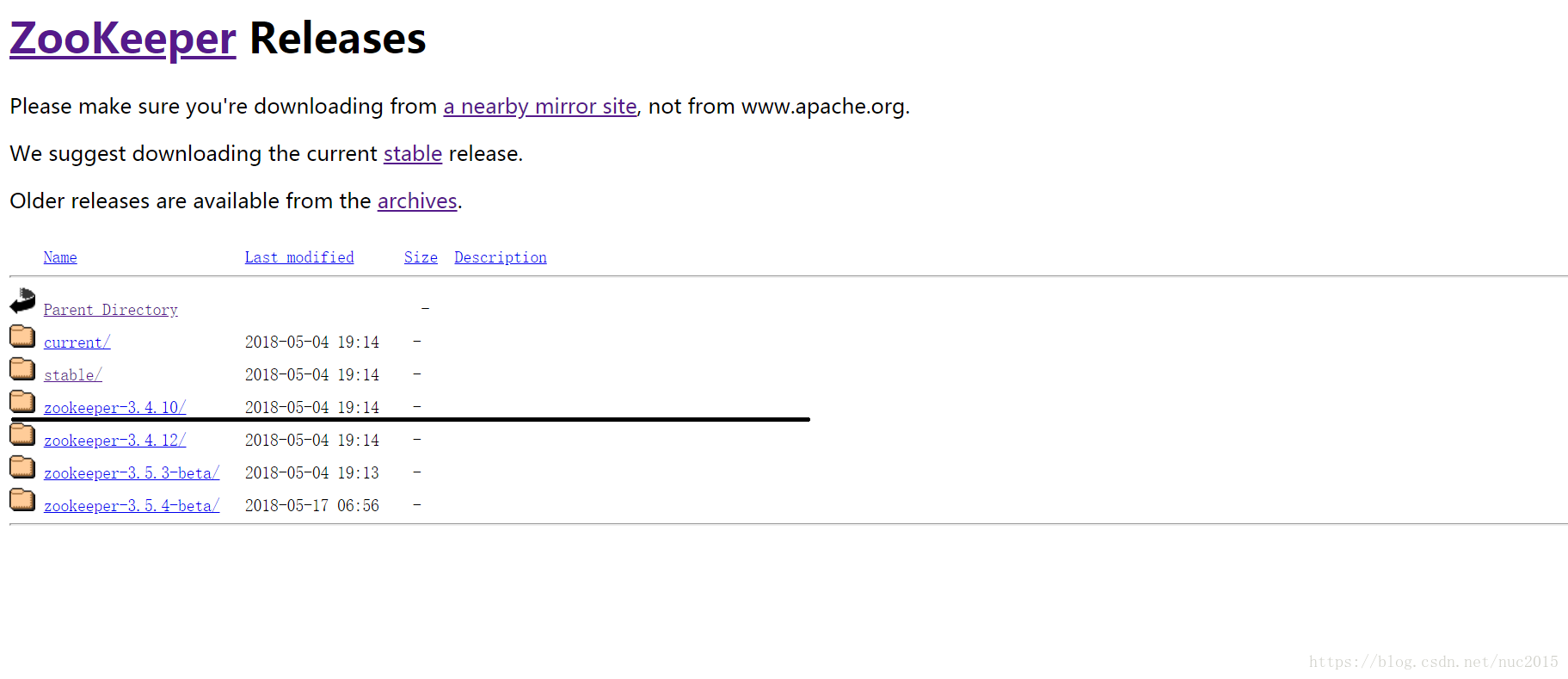

1、安装zookeeper

下载zookeeper下载链接

在三台机器上都安装zookeeper

解压到/apps

[root@master apps]# tar -zxvf zookeeper-3.4.10.tar.gz #删除没用的文件,只留下一下部分

[root@master apps]# cd zookeeper-3.4.10/

[root@master zookeeper-3.4.10]# ll

总用量 1456

drwxr-xr-x. 2 root root 149 3月 23 2017 bin

drwxr-xr-x. 2 root root 91 7月 5 11:28 conf

drwxr-xr-x. 4 root root 267 3月 23 2017 lib

-rw-rw-r--. 1 root root 1456729 3月 23 2017 zookeeper-3.4.10.jar配置环境变量

[root@master /]# vi /etc/profile#set java environment

export JAVA_HOME=/apps/jdk1.8.0_171

export JRE_HOME=/apps/jdk1.8.0_171/jre

export CLASS_PATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar:$JRE_HOME/lib

export ZOOKEEPER_HOME=/apps/zookeeper-3.4.10

export PATH=$PATH:$JAVA_HOME/bin:$ZOOKEEPER_HOME/bin[root@master /]# source /etc/profile切换到conf目录,修改zookeepe配置文件:

将zoo_sample.cfg改名为zoo.cfg

[root@master conf]# mv zoo_sample.cfg zoo.cfg [root@master zookeeper-3.4.10]# cd conf/

[root@master conf]# ll

总用量 40

-rw-rw-r--. 1 root root 535 3月 23 2017 configuration.xsl

-rw-rw-r--. 1 root root 2161 3月 23 2017 log4j.properties

-rw-rw-r--. 1 root root 1019 7月 5 11:19 zoo.cfg提前建好这两个目录(/apps/zkdata)(/apps/zookeeper-3.4.10/datalog)

dataDir=/apps/zkdata

dataLogDir=/apps/zookeeper-3.4.10/datalog末尾添加:

server.1=master.hadoop:2888:3888

server.2=slave1.hadoop:2888:3888

server.3=slave2.hadoop:2888:3888

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

dataDir=/apps/zkdata

dataLogDir=/apps/zookeeper-3.4.10/datalog

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#

# Be sure to read the maintenance section of the

# administrator guide before turning on autopurge.

#

# http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance

#

# The number of snapshots to retain in dataDir

#autopurge.snapRetainCount=3

# Purge task interval in hours

# Set to "0" to disable auto purge feature

#autopurge.purgeInterval=1

server.1=master.hadoop:2888:3888

server.2=slave1.hadoop:2888:3888

server.3=slave2.hadoop:2888:3888[root@master conf]# cd /apps/zkdata

[root@master zkdata]# echo 1 > myidslave1.hadoop: 在zkdata目录下创建myid文件并添加内容为2(以此类推)

[root@slave1 conf]# cd /apps/zkdata

[root@slave1 zkdata]# echo 2 > myid[root@master /]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED[root@slave1 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED[root@slave2 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED[root@master /]# jps

43587 QuorumPeerMain

43871 Jps

[root@master /]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

[root@master /]# [root@slave1 ~]# jps

2709 QuorumPeerMain

2757 Jps

[root@slave1 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: leader

[root@slave1 ~]# [root@slave2 ~]# jps

1784 Jps

1759 QuorumPeerMain

[root@slave2 ~]# zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Mode: follower

[root@slave2 ~]#

[root@master /]# zkServer.sh stop[root@slave1 ~]# zkServer.sh stop[root@slave2 ~]# zkServer.sh stop2、安装hadoop

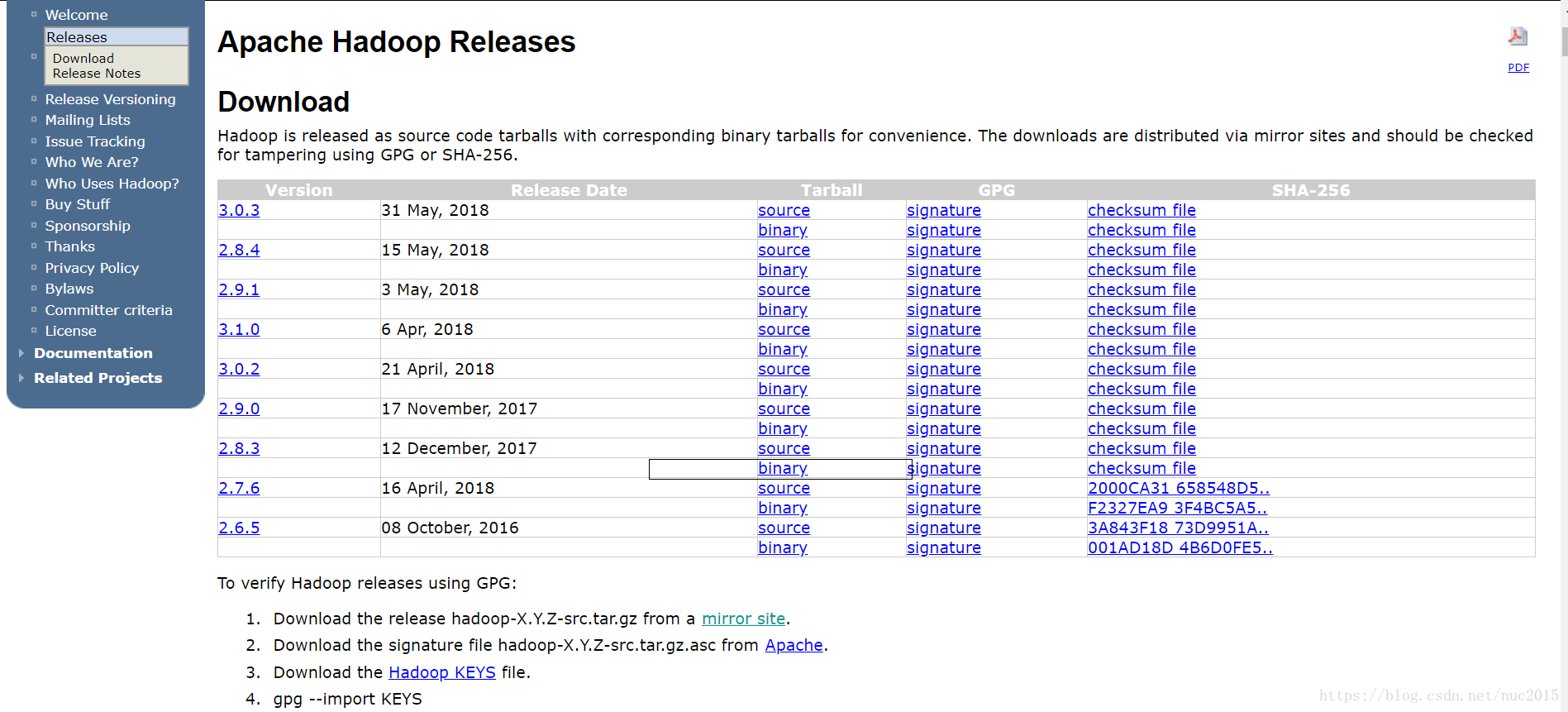

下载hadoop安装包

解压安装包,并修改配置文件

[root@master apps]# tar -zxvf hadoop-2.8.0.tar.gz

[root@master apps]# mkdir hdptmp

在两台从节点上建立相同的目录(hdfs初始化的时候会用到)1)配置hadoop-env.sh

# set java environment(添加jdk环境变量)

export JAVA_HOME=/apps/jdk1.8.0_1712)配置core-site.xml文件

修改Hadoop核心配置文件core-site.xml,这里配置的是HDFS的地址和端口号。

core-site.xml

<configuration>

<!-- 指定hdfs的nameservice为ns -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ns</value>

</property>

<!--指定hadoop数据临时存放目录-->

<property>

<name>hadoop.tmp.dir</name>

<value>/apps/hdptmp</value>

</property>

<!--指定zookeeper地址-->

<property>

<name>ha.zookeeper.quorum</name>

<value>master.hadoop:21810,slave1.hadoop:21810,slave2.hadoop:21810</value>

</property>

</configuration>3)配置hdfs-site.xml文件

修改Hadoop中HDFS的配置,配置的备份方式默认为3。

<configuration>

<!--指定hdfs的nameservice为ns,需要和core-site.xml中的保持一致 -->

<property>

<name>dfs.nameservices</name>

<value>ns</value>

</property>

<!-- ns下面有两个NameNode,分别是nn1,nn2 -->

<property>

<name>dfs.ha.namenodes.ns</name>

<value>nn1,nn2</value>

</property>

<!-- nn1的RPC通信地址 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn1</name>

<value>master.hadoop:9000</value>

</property>

<!-- nn1的http通信地址 -->

<property>

<name>dfs.namenode.http-address.ns.nn1</name>

<value>master.hadoop:50070</value>

</property>

<!-- nn2的RPC通信地址 -->

<property>

<name>dfs.namenode.rpc-address.ns.nn2</name>

<value>slave1.hadoop:9000</value>

</property>

<!-- nn2的http通信地址 -->

<property>

<name>dfs.namenode.http-address.ns.nn2</name>

<value>slave1.hadoop:50070</value>

</property>

<!-- 指定NameNode的元数据在JournalNode上的存放位置 -->

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://master.hadoop:8485;slave1.hadoop:8485;slave2.hadoop:8485/ns</value>

</property>

<!-- 指定JournalNode在本地磁盘存放数据的位置 -->

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/apps/journal</value>

</property>

<!-- 开启NameNode故障时自动切换 -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- 配置失败自动切换实现方式 -->

<property>

<name>dfs.client.failover.proxy.provider.ns</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!-- 配置隔离机制 -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>

sshfence

shell(/bin/true)

</value>

</property>

<!-- 使用隔离机制时需要ssh免登陆 -->

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<!-- 在NN和DN上开启WebHDFS (REST API)功能,不是必须 -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>10000</value>

</property>

<property>

<name>dfs.permissions</name>

<value>false</value>

</property>

</configuration>4)配置mapred-site.xml文件

修改Hadoop中MapReduce的配置文件,配置的是JobTracker的地址和端口。

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration><configuration>

<!-- //////////////以下为YARN HA的配置////////////// -->

<!-- 开启YARN HA -->

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<!-- 启用自动故障转移 -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- 指定YARN HA的名称 -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarncluster</value>

</property>

<!-- 指定两个resourcemanager的名称 -->

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- 配置rm1,rm2的主机 -->

<property>

<name>yarn.resourcemanager.hostname.rm1</name>

<value>master.hadoop1</value>

</property>

<property>

<name>yarn.resourcemanager.hostname.rm2</name>

<value>slave1.hadoop</value>

</property>

<!-- 配置zookeeper的地址 -->

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>master.hadoop:2181,slave1.hadoop:2181,slave2.hadoop:2181</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>6)配置slaves文件

[root@master /]# vi slaves

master.hadoop

slave1.hadoop

slave2.hadoop7) 配置hadoop环境变量

[root@master /]# vi /etc/profile

#set hadoop enviroment

export HADOOP_HOME=/apps/hadoop-2.8.0/

export PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin#生效

[root@master /]# source /etc/profile启动集群(按照顺序启动)

1.启动zookeeper(三台机器一台一台的启动)

[root@master /]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED[root@slave1 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED[root@slave2 ~]# zkServer.sh start

ZooKeeper JMX enabled by default

Using config: /apps/zookeeper-3.4.10/bin/../conf/zoo.cfg

Starting zookeeper ... STARTED2.启动journalnode(三台一台一台启动)

[root@master /]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-journalnode-master.hadoop.out

[root@master /]#

[root@slave1 ~]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-journalnode-slave1.hadoop.out

[root@slave1 ~]#

[root@slave2 ~]# hadoop-daemon.sh start journalnode

starting journalnode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-journalnode-slave2.hadoop.out

[root@slave2 ~]# 3.启动hdfs

[root@master /]# start-dfs.sh

Starting namenodes on [master.hadoop slave1.hadoop]

slave1.hadoop: starting namenode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-namenode-slave1.hadoop.out

master.hadoop: starting namenode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-namenode-master.hadoop.out

slave2.hadoop: starting datanode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-datanode-slave2.hadoop.out

master.hadoop: starting datanode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-datanode-master.hadoop.out

slave1.hadoop: starting datanode, logging to /apps/hadoop-2.8.0/logs/hadoop-root-datanode-slave1.hadoop.out

Starting journal nodes [master.hadoop slave1.hadoop slave2.hadoop]

slave2.hadoop: journalnode running as process 1870. Stop it first.

slave1.hadoop: journalnode running as process 2842. Stop it first.

master.hadoop: journalnode running as process 45029. Stop it first.

Starting ZK Failover Controllers on NN hosts [master.hadoop slave1.hadoop]

slave1.hadoop: starting zkfc, logging to /apps/hadoop-2.8.0/logs/hadoop-root-zkfc-slave1.hadoop.out

master.hadoop: starting zkfc, logging to /apps/hadoop-2.8.0/logs/hadoop-root-zkfc-master.hadoop.out

[root@master /]# 4.启动yarn

[root@master /]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /apps/hadoop-2.8.0/logs/yarn-root-resourcemanager-master.hadoop.out

slave1.hadoop: starting nodemanager, logging to /apps/hadoop-2.8.0/logs/yarn-root-nodemanager-slave1.hadoop.out

slave2.hadoop: starting nodemanager, logging to /apps/hadoop-2.8.0/logs/yarn-root-nodemanager-slave2.hadoop.out

master.hadoop: starting nodemanager, logging to /apps/hadoop-2.8.0/logs/yarn-root-nodemanager-master.hadoop.out

[root@master /]# 5.启动单个结点的yarn进程(因为yarn的两个resourcemanager不会同时启动,必须手动启动另一台上的resourcemanager)

去slave1.hadoop上启动

[root@slave1 ~]# yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /apps/hadoop-2.8.0/logs/yarn-root-resourcemanager-slave1.hadoop.out

[root@slave1 ~]# jps

3489 Jps

2931 NameNode

2709 QuorumPeerMain

2842 JournalNode

3131 DFSZKFailoverController

3451 ResourceManager

3276 NodeManager

3005 DataNode

[root@slave1 ~]# 到此集群搭建完成!!!!!!!!!!!!!!!!!

============================================================================测试高可用

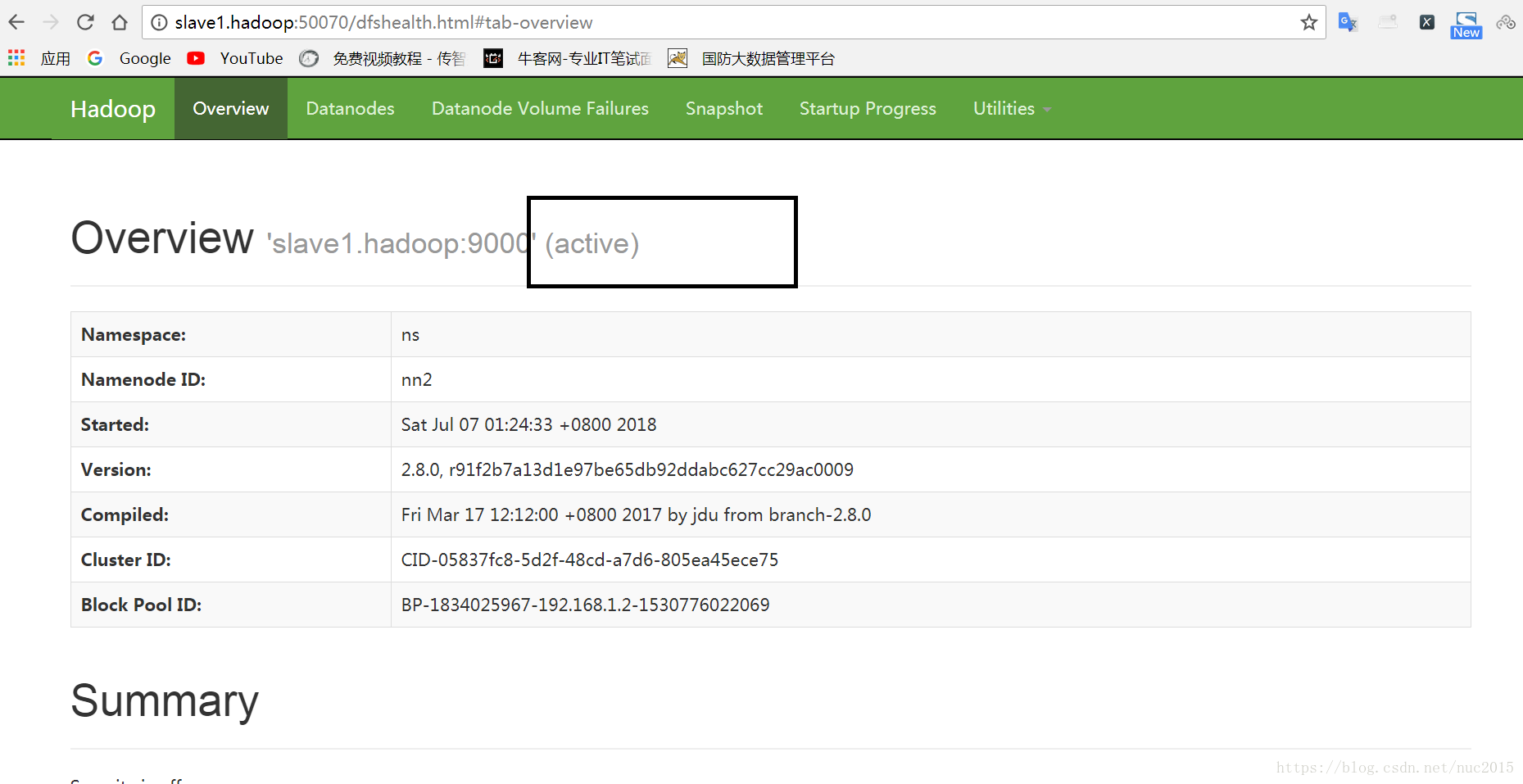

访问 http://slave1.hadoop:50070/

接下来是一台宕机(关闭slave1.hadoop上的namenode进程)杀掉2931进程

[root@slave1 ~]# jps

2931 NameNode

2709 QuorumPeerMain

3733 Jps

2842 JournalNode

3131 DFSZKFailoverController

3451 ResourceManager

3276 NodeManager

3005 DataNode

[root@slave1 ~]# kill -9 2931

[root@slave1 ~]# jps

2709 QuorumPeerMain

3753 Jps

2842 JournalNode

3131 DFSZKFailoverController

3451 ResourceManager

3276 NodeManager

3005 DataNode

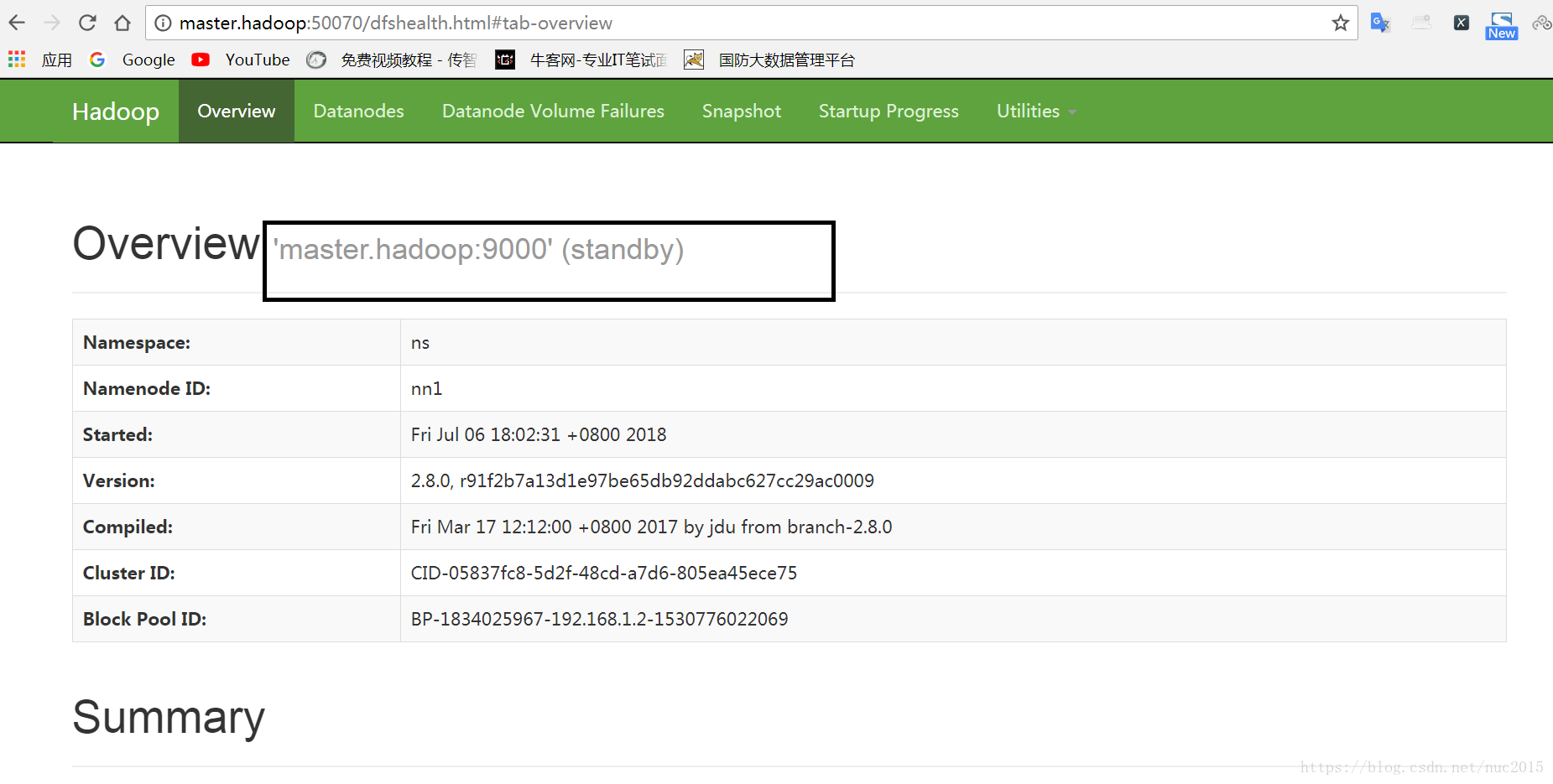

[root@slave1 ~]# 然后访问 http://slave1.hadoop:50070/(无响应)

访问http://master.hadoop:50070/ (变成active)

============================================================================