Implementing multiple inputs for the wide_deep model with the functional API:

Key code:

```python
from tensorflow import keras

# Multiple inputs, using the functional API
input_wide = keras.layers.Input(shape=[5])  # the first 5 features are the input to the wide part
input_deep = keras.layers.Input(shape=[6])  # the last 6 features are the input to the deep part
hidden1 = keras.layers.Dense(30, activation='relu')(input_deep)
hidden2 = keras.layers.Dense(30, activation='relu')(hidden1)
concat = keras.layers.concatenate([input_wide, hidden2])
output = keras.layers.Dense(1)(concat)
model = keras.models.Model(inputs=[input_wide, input_deep],
                           outputs=[output])
model.compile(loss="mean_squared_error", optimizer="sgd")
callbacks = [keras.callbacks.EarlyStopping(
    patience=5, min_delta=1e-2)]
```
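The `EarlyStopping(patience=5, min_delta=1e-2)` callback above stops training once the monitored value has failed to improve by more than `min_delta` for `patience` consecutive epochs. The monitored value is `val_loss` by default. Below is a simplified pure-Python sketch of that rule. It is not Keras's actual implementation, and the function name `early_stop_epoch` and the loss sequence are hypothetical.

```python
def early_stop_epoch(val_losses, patience=5, min_delta=1e-2):
    """Return the 0-based epoch at which training would stop, or None.

    Simplified sketch: an epoch counts as an improvement only if the loss
    drops below the best value seen so far by more than min_delta.
    """
    best = float("inf")
    wait = 0  # consecutive epochs without a sufficient improvement
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            wait = 0
        else:
            wait += 1
            if wait >= patience:
                return epoch
    return None  # all epochs ran without triggering a stop


# Loss drops quickly for 3 epochs, then improves by less than min_delta per epoch
losses = [1.0, 0.8, 0.6, 0.598, 0.597, 0.596, 0.595, 0.594, 0.5, 0.4]
print(early_stop_epoch(losses))  # 7
```

In this example, training stops at epoch 7, so the later drop to 0.5 is never seen. A larger `patience` or a smaller `min_delta` makes the callback more tolerant of slow progress.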

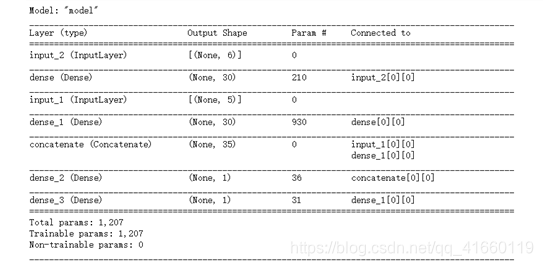

model.summary()模型层次结构展示:
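You can check the parameter counts listed by `model.summary()` by hand. A `Dense` layer with `n_in` inputs and `n_out` units has `n_in * n_out` weights plus `n_out` biases, while the `Input` and `concatenate` layers have no parameters. A small sketch of that arithmetic for this model follows; the helper `dense_params` is hypothetical, not a Keras function.

```python
def dense_params(n_in, n_out):
    # weight matrix (n_in x n_out) plus one bias per unit
    return n_in * n_out + n_out

p_hidden1 = dense_params(6, 30)      # deep input (6 features) -> 30 units
p_hidden2 = dense_params(30, 30)     # 30 -> 30 units
p_output = dense_params(5 + 30, 1)   # concat of wide input (5) and hidden2 (30) -> 1 unit
p_total = p_hidden1 + p_hidden2 + p_output
print(p_hidden1, p_hidden2, p_output, p_total)  # 210 930 36 1176
```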

Full code:

```python
import matplotlib as mpl
import matplotlib.pyplot as plt
# Jupyter/IPython magic; delete this line when running as a plain .py script
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import os
import sys
import time
import tensorflow as tf
from tensorflow import keras

# Print library versions for reproducibility
print(tf.__version__)
print(sys.version_info)
for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

# Load the California housing dataset (8 features, 1 target)
from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()
print(housing.DESCR)
print(housing.data.shape)
print(housing.target.shape)

# Split into training, validation and test sets
from sklearn.model_selection import train_test_split

x_train_all, x_test, y_train_all, y_test = train_test_split(
    housing.data, housing.target, random_state=7)
x_train, x_valid, y_train, y_valid = train_test_split(
    x_train_all, y_train_all, random_state=11)
print(x_train.shape, y_train.shape)
print(x_valid.shape, y_valid.shape)
print(x_test.shape, y_test.shape)

# Standardize the features; fit the scaler on the training set only
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
x_train_scaled = scaler.fit_transform(x_train)
x_valid_scaled = scaler.transform(x_valid)
x_test_scaled = scaler.transform(x_test)

# Multiple inputs, using the functional API
input_wide = keras.layers.Input(shape=[5])  # the first 5 features are the input to the wide part
input_deep = keras.layers.Input(shape=[6])  # the last 6 features are the input to the deep part
hidden1 = keras.layers.Dense(30, activation='relu')(input_deep)
hidden2 = keras.layers.Dense(30, activation='relu')(hidden1)
concat = keras.layers.concatenate([input_wide, hidden2])
output = keras.layers.Dense(1)(concat)
model = keras.models.Model(inputs=[input_wide, input_deep],
                           outputs=[output])
model.compile(loss="mean_squared_error", optimizer="sgd")
callbacks = [keras.callbacks.EarlyStopping(
    patience=5, min_delta=1e-2)]
model.summary()

# Wide part: features 0-4; deep part: features 2-7
x_train_scaled_wide = x_train_scaled[:, :5]
x_train_scaled_deep = x_train_scaled[:, 2:]
x_valid_scaled_wide = x_valid_scaled[:, :5]
x_valid_scaled_deep = x_valid_scaled[:, 2:]
x_test_scaled_wide = x_test_scaled[:, :5]
x_test_scaled_deep = x_test_scaled[:, 2:]

# Pass one array per Input, in the same order as inputs=[...]
history = model.fit([x_train_scaled_wide, x_train_scaled_deep],
                    y_train,
                    validation_data=(
                        [x_valid_scaled_wide, x_valid_scaled_deep],
                        y_valid),
                    epochs=100,
                    callbacks=callbacks)

def plot_learning_curves(history):
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)

model.evaluate([x_test_scaled_wide, x_test_scaled_deep], y_test, verbose=0)
```
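The slices `[:, :5]` and `[:, 2:]` overlap. The California housing data has 8 features, so the wide input gets columns 0 to 4 and the deep input gets columns 2 to 7. Columns 2, 3 and 4 therefore feed both parts. The widths (5 and 6) must match the `Input(shape=[5])` and `Input(shape=[6])` declarations. Here is a quick NumPy check on a dummy array; `x` is a stand-in, not the real data.

```python
import numpy as np

# Dummy batch: 2 samples x 8 features, where each value equals its column index
x = np.tile(np.arange(8), (2, 1))

x_wide = x[:, :5]   # columns 0..4 -> matches Input(shape=[5])
x_deep = x[:, 2:]   # columns 2..7 -> matches Input(shape=[6])

print(x_wide.shape, x_deep.shape)              # (2, 5) (2, 6)
print(x_wide[0], x_deep[0])                    # [0 1 2 3 4] [2 3 4 5 6 7]
print(np.intersect1d(x_wide[0], x_deep[0]))    # [2 3 4], the columns seen by both parts
```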

Implementing multiple outputs for the wide_deep model with the functional API:

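Note: the wide_deep model itself does not need multiple outputs; this is simply a pattern you may meet in real problems. Multi-output networks are mainly used for multi-task learning. For example, predicting both the current house price and the price one year from now gives two prediction tasks.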

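The implementation below assumes the second task also learns to predict the current house price, so both tasks share the same target. The second task's output is computed from `hidden2`.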

Key code:

```python
from tensorflow import keras

# Multiple outputs
input_wide = keras.layers.Input(shape=[5])
input_deep = keras.layers.Input(shape=[6])
hidden1 = keras.layers.Dense(30, activation='relu')(input_deep)
hidden2 = keras.layers.Dense(30, activation='relu')(hidden1)
concat = keras.layers.concatenate([input_wide, hidden2])
output = keras.layers.Dense(1)(concat)    # task 1: head on top of wide + deep
output2 = keras.layers.Dense(1)(hidden2)  # task 2: head on top of hidden2 only
model = keras.models.Model(inputs=[input_wide, input_deep],
                           outputs=[output, output2])
model.compile(loss="mean_squared_error", optimizer="sgd")
callbacks = [keras.callbacks.EarlyStopping(
    patience=5, min_delta=1e-2)]
model.summary()
```
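This model has two outputs but only one `loss="mean_squared_error"`. Keras applies that loss to each output separately and minimizes their sum. `compile` also accepts a `loss_weights` argument that scales each term; when it is omitted, every output counts with weight 1. The NumPy sketch below only illustrates that arithmetic; `combined_mse` and the toy numbers are hypothetical.

```python
import numpy as np

def mse(y_true, y_pred):
    # mean squared error for one output head
    return float(np.mean((np.asarray(y_true) - np.asarray(y_pred)) ** 2))

def combined_mse(y_trues, y_preds, loss_weights=None):
    """Weighted sum of per-output MSE losses (weights default to 1)."""
    if loss_weights is None:
        loss_weights = [1.0] * len(y_trues)
    per_output = [mse(t, p) for t, p in zip(y_trues, y_preds)]
    total = sum(w * l for w, l in zip(loss_weights, per_output))
    return total, per_output

y = np.array([1.0, 2.0, 3.0, 4.0])       # same target for both heads, as in the text
pred1 = np.array([1.0, 2.0, 3.0, 6.0])   # "output" head: squared errors 0, 0, 0, 4
pred2 = np.array([2.0, 2.0, 3.0, 4.0])   # "output2" head: squared errors 1, 0, 0, 0

total, per_output = combined_mse([y, y], [pred1, pred2])
print(per_output, total)  # [1.0, 0.25] 1.25

# Weighting the first task more heavily (weights chosen arbitrarily)
w_total, _ = combined_mse([y, y], [pred1, pred2], loss_weights=[0.8, 0.2])
print(w_total)  # approximately 0.85
```

In the Keras model itself, weighting the tasks would look something like `model.compile(loss=["mean_squared_error", "mean_squared_error"], loss_weights=[0.8, 0.2], optimizer="sgd")`. The 0.8/0.2 split is only an example.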

Full code:

```python
import matplotlib as mpl
import matplotlib.pyplot as plt
# Jupyter/IPython magic; delete this line when running as a plain .py script
%matplotlib inline
import numpy as np
import sklearn
import pandas as pd
import os
import sys
import time
import tensorflow as tf
from tensorflow import keras

# Print library versions for reproducibility
print(tf.__version__)
print(sys.version_info)
for module in mpl, np, pd, sklearn, tf, keras:
    print(module.__name__, module.__version__)

# Load the California housing dataset (8 features, 1 target)
from sklearn.datasets import fetch_california_housing

housing = fetch_california_housing()
print(housing.DESCR)
print(housing.data.shape)
print(housing.target.shape)

# Split into training, validation and test sets
from sklearn.model_selection import train_test_split

x_train_all, x_test, y_train_all, y_test = train_test_split(
    housing.data, housing.target, random_state=7)
x_train, x_valid, y_train, y_valid = train_test_split(
    x_train_all, y_train_all, random_state=11)
print(x_train.shape, y_train.shape)
print(x_valid.shape, y_valid.shape)
print(x_test.shape, y_test.shape)

# Standardize the features; fit the scaler on the training set only
from sklearn.preprocessing import StandardScaler

scaler = StandardScaler()
x_train_scaled = scaler.fit_transform(x_train)
x_valid_scaled = scaler.transform(x_valid)
x_test_scaled = scaler.transform(x_test)

# Multiple outputs
input_wide = keras.layers.Input(shape=[5])
input_deep = keras.layers.Input(shape=[6])
hidden1 = keras.layers.Dense(30, activation='relu')(input_deep)
hidden2 = keras.layers.Dense(30, activation='relu')(hidden1)
concat = keras.layers.concatenate([input_wide, hidden2])
output = keras.layers.Dense(1)(concat)    # task 1: head on top of wide + deep
output2 = keras.layers.Dense(1)(hidden2)  # task 2: head on top of hidden2 only
model = keras.models.Model(inputs=[input_wide, input_deep],
                           outputs=[output, output2])
model.compile(loss="mean_squared_error", optimizer="sgd")
callbacks = [keras.callbacks.EarlyStopping(
    patience=5, min_delta=1e-2)]
model.summary()

# Wide part: features 0-4; deep part: features 2-7
x_train_scaled_wide = x_train_scaled[:, :5]
x_train_scaled_deep = x_train_scaled[:, 2:]
x_valid_scaled_wide = x_valid_scaled[:, :5]
x_valid_scaled_deep = x_valid_scaled[:, 2:]
x_test_scaled_wide = x_test_scaled[:, :5]
x_test_scaled_deep = x_test_scaled[:, 2:]

# One target array per output; here both tasks use the same target
history = model.fit([x_train_scaled_wide, x_train_scaled_deep],
                    [y_train, y_train],
                    validation_data=(
                        [x_valid_scaled_wide, x_valid_scaled_deep],
                        [y_valid, y_valid]),
                    epochs=100,
                    callbacks=callbacks)

def plot_learning_curves(history):
    pd.DataFrame(history.history).plot(figsize=(8, 5))
    plt.grid(True)
    plt.gca().set_ylim(0, 1)
    plt.show()

plot_learning_curves(history)

model.evaluate([x_test_scaled_wide, x_test_scaled_deep],
               [y_test, y_test], verbose=0)
```