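The previous chapter implemented L2 regularization to counter overfitting without using Gluon. This chapter implements the same thing with Gluon. The code is as follows: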

import mxnet.ndarray as nd
import mxnet.autograd as ag
import mxnet.gluon as gn
import matplotlib.pyplot as plt

# High-dimensional linear regression: 200 features but only 20 training
# examples, which makes overfitting easy to observe
n_train, n_test, num_inputs = 20, 100, 200
true_w, true_b = nd.ones((num_inputs, 1)) * 0.01, 0.05

features = nd.random.normal(shape=(n_train + n_test, num_inputs))
labels = nd.dot(features, true_w) + true_b
labels += nd.random.normal(scale=0.01, shape=labels.shape)
train_features, test_features = features[:n_train, :], features[n_train:, :]
train_labels, test_labels = labels[:n_train], labels[n_train:]

'''--- Data loading ---'''
# Read the training data in shuffled mini-batches of size batch_size
batch_size = 10
dataset = gn.data.ArrayDataset(train_features, train_labels)
data_iter = gn.data.DataLoader(dataset=dataset, batch_size=batch_size, shuffle=True)

# Loss function: squared loss, 0.5 * (y_hat - y)^2 per example
square_loss = gn.loss.L2Loss()

'''-------- L2-norm regularization ----------'''
def test(net, X, y):
    # Mean squared loss of net on the data (X, y)
    return square_loss(net(X), y).mean().asscalar()

def train(weight_decay):
    net = gn.nn.Sequential()
    net.add(gn.nn.Dense(1))
    net.initialize()
    # "wd" is the weight-decay (L2 penalty) coefficient used by the SGD optimizer
    trainer = gn.Trainer(net.collect_params(), "sgd",
                         {"learning_rate": 0.005, "wd": weight_decay})
    train_loss, test_loss = [], []
    for _ in range(10):  # 10 epochs
        for X, y in data_iter:
            with ag.record():
                l = square_loss(net(X), y)
            l.backward()
            # A single Trainer updates both the weight and the bias,
            # so weight decay is applied to both of them here
            trainer.step(batch_size)
        train_loss.append(test(net, train_features, train_labels))
        test_loss.append(test(net, test_features, test_labels))
    plt.plot(train_loss)
    plt.plot(test_loss)
    plt.legend(["train", "test"])
    plt.show()
    # Inspect one learned weight (index 10) and the bias; the true values are 0.01 and 0.05
    print(net[0].weight.data()[:, 10], net[0].bias.data())
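To see why the `wd` option is called weight decay, the following pure-Python sketch (no MXNet; the function names are hypothetical) compares one SGD step in which the optimizer applies `wd` with one plain SGD step on the loss plus (wd/2)·‖w‖². It assumes the optimizer's rule is `w <- w - lr * (grad + wd * w)`, ignoring gradient rescaling. Under that assumption the two updates coincide, and both equal shrinking `w` by the factor `(1 - lr * wd)` before the ordinary gradient step.

```python
# Sketch (pure Python, no MXNet): weight decay in the optimizer versus an
# explicit L2 penalty in the loss. Assumes the update w <- w - lr * (grad + wd * w).

def sgd_step_with_wd(w, grad, lr, wd):
    """Hypothetical helper: SGD step where the optimizer applies weight decay."""
    return [wi - lr * (gi + wd * wi) for wi, gi in zip(w, grad)]

def sgd_step_on_penalized_loss(w, grad, lr, lam):
    """Hypothetical helper: plain SGD on loss + (lam / 2) * ||w||^2.
    The penalty contributes lam * w to the gradient."""
    penalized_grad = [gi + lam * wi for wi, gi in zip(w, grad)]
    return [wi - lr * pg for wi, pg in zip(w, penalized_grad)]

w = [0.5, -1.2, 2.0]      # illustrative weights
grad = [0.1, 0.3, -0.2]   # illustrative gradient of the data loss
lr, wd = 0.005, 3.0       # same learning rate and wd as train(3)

a = sgd_step_with_wd(w, grad, lr, wd)
b = sgd_step_on_penalized_loss(w, grad, lr, wd)

# Equivalent "decay" form: shrink w first, then take the ordinary gradient step
shrunk = [(1 - lr * wd) * wi - lr * gi for wi, gi in zip(w, grad)]

print(a)
print(b)
print(shrunk)
```

With these settings the shrink factor is 1 - 0.005 * 3 = 0.985 per step.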

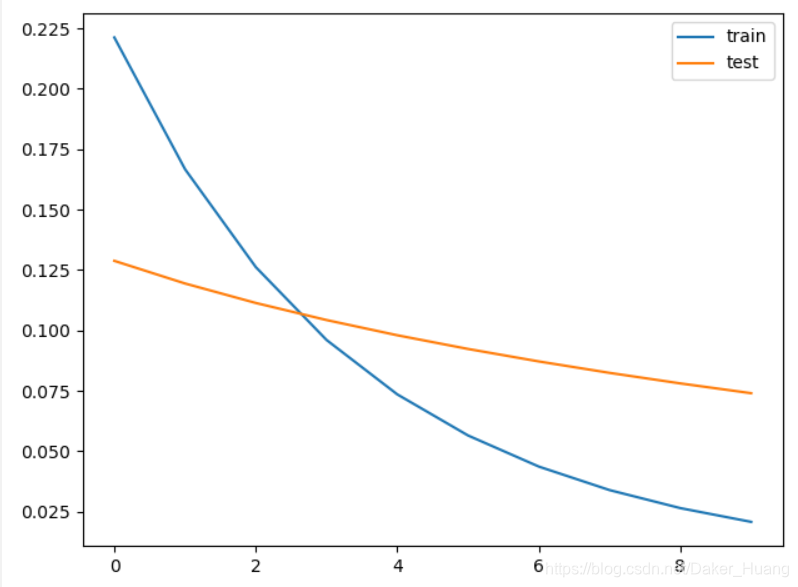

train(0)  # without the penalty term
# train(3)  # with the penalty term

Output:

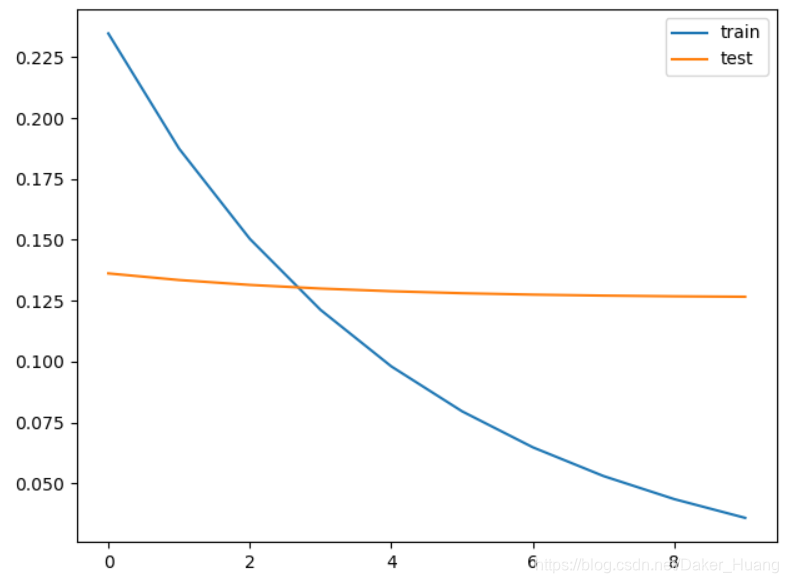
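For reference, the `wd` value passed to the Trainer (written $\lambda$ below, with learning rate $\eta$ and mini-batch $\mathcal{B}$) can be read as adding an L2 penalty to the squared loss used above. This sketch assumes MXNet's SGD applies `wd` as `grad + wd * weight` after `trainer.step(batch_size)` has averaged the gradient over the batch; because a single Trainer is used, the bias $b$ is penalized as well as $\mathbf{w}$.

$$
\ell^{(i)}(\mathbf{w}, b) = \frac{1}{2}\left(\mathbf{x}^{(i)\top}\mathbf{w} + b - y^{(i)}\right)^2
$$

$$
\min_{\mathbf{w},\, b}\; \frac{1}{n}\sum_{i=1}^{n} \ell^{(i)}(\mathbf{w}, b) + \frac{\lambda}{2}\left(\|\mathbf{w}\|^2 + b^2\right)
$$

$$
\mathbf{w} \leftarrow (1 - \eta\lambda)\,\mathbf{w} - \frac{\eta}{|\mathcal{B}|}\sum_{i \in \mathcal{B}} \nabla_{\mathbf{w}}\, \ell^{(i)}(\mathbf{w}, b),
\qquad
b \leftarrow (1 - \eta\lambda)\, b - \frac{\eta}{|\mathcal{B}|}\sum_{i \in \mathcal{B}} \frac{\partial \ell^{(i)}(\mathbf{w}, b)}{\partial b}
$$

With $\eta = 0.005$ and $\lambda = 3$, every step first scales the parameters by $1 - 0.005 \times 3 = 0.985$; with $\lambda = 0$ the update reduces to plain mini-batch SGD.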

With regularization applied:

train(3)  # with the penalty term

Output: