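Having covered how GANs work, we now build one with TensorFlow. Strictly speaking it is a DCGAN; unless stated otherwise, "GAN" in this series means DCGAN. As noted earlier, a GAN can be conditional or unconditional. We start with a simple conditional GAN on the MNIST dataset.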

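The dataset is the well-known MNIST. Each image has shape [28, 28, 1]; the training set holds 60,000 images and the test set 10,000, so 70,000 images in total are available for training the GAN.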
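The counts and image size above are also recorded in the files themselves. As a minimal sketch, assuming the IDX layout described on the MNIST page (image files begin with four big-endian 32-bit integers: magic number, image count, rows, columns), the helper below decodes such a header. The name parse_idx3_header is hypothetical, and the header is built in memory instead of being read from disk.

```python
import struct

def parse_idx3_header(raw):
    """Return (magic, count, rows, cols) from the first 16 bytes of an IDX3 image file."""
    return struct.unpack(">IIII", raw[:16])

# Build a header that mimics the MNIST training-image file (magic number 2051).
header = struct.pack(">IIII", 2051, 60000, 28, 28)
magic, count, rows, cols = parse_idx3_header(header)
print(magic, count, rows, cols)
```

The header occupies 16 bytes, which is why the pixel data starts at byte 16. Label files carry only a magic number and a count, so their data starts at byte 8.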

The model is a DCGAN (deep convolutional GAN) combined with the condition from CGAN, which constrains the content of the generated images.

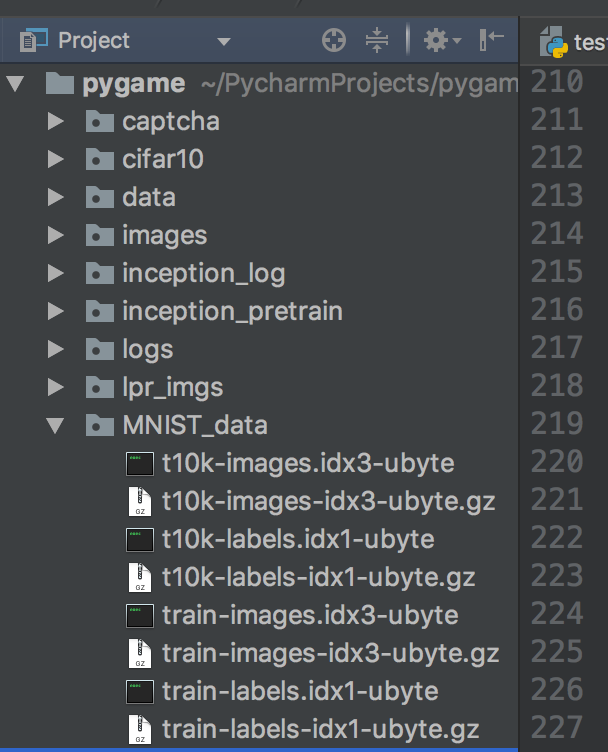

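First download the data: create a folder named MNIST_data in the current project and download the four MNIST archives train-images-idx3-ubyte.gz, train-labels-idx1-ubyte.gz, t10k-images-idx3-ubyte.gz and t10k-labels-idx1-ubyte.gz from http://yann.lecun.com/exdb/mnist/ into that folder. Decompress them before running the code: read_data opens uncompressed files named train-images.idx3-ubyte, train-labels.idx1-ubyte, t10k-images.idx3-ubyte and t10k-labels.idx1-ubyte, so the decompressed files must carry exactly those names.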
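Decompression can be done with the standard library alone. The sketch below is one possible way, not part of the original code: gunzip is a hypothetical helper, and the demo runs on a throwaway archive in a temporary folder so it works without the real dataset.

```python
import gzip
import os
import shutil
import tempfile

def gunzip(src_path, dst_path):
    """Decompress one .gz archive into dst_path and return dst_path."""
    with gzip.open(src_path, "rb") as src, open(dst_path, "wb") as dst:
        shutil.copyfileobj(src, dst)
    return dst_path

# Demo on a tiny archive created on the fly.
tmp_dir = tempfile.mkdtemp()
archive = os.path.join(tmp_dir, "demo.gz")
with gzip.open(archive, "wb") as f:
    f.write(b"\x00\x01\x02")

out = gunzip(archive, os.path.join(tmp_dir, "demo.bin"))
with open(out, "rb") as f:
    restored = f.read()
print(restored)
```

For the real data the call would look like gunzip("MNIST_data/train-images-idx3-ubyte.gz", "MNIST_data/train-images.idx3-ubyte"), repeated for the other three archives.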

The code is organized into four parts:

- read_data

- ops

- model

- train

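The layer types used are:

- conv (convolution layer)

- deconv (deconvolution, i.e. transposed convolution, layer)

- linear (fully connected layer)

- batch_norm (batch normalization layer)

- lrelu/relu/sigmoid (nonlinear activation layers)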

1. Data preprocessing and loading

    import os
    import numpy as np

    def read_data():
        data_dir = "MNIST_data"

        # Training images: open in binary mode and load the raw bytes into a NumPy array
        with open(os.path.join(data_dir, 'train-images.idx3-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        # Per the format described on the MNIST site, pixel data starts at byte 16
        # (np.float64 replaces the np.float alias, which recent NumPy releases removed)
        trX = loaded[16:].reshape((60000, 28, 28, 1)).astype(np.float64)

        # Training labels: label data starts at byte 8
        with open(os.path.join(data_dir, 'train-labels.idx1-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        trY = loaded[8:].reshape((60000)).astype(np.float64)

        # Test images
        with open(os.path.join(data_dir, 't10k-images.idx3-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        teX = loaded[16:].reshape((10000, 28, 28, 1)).astype(np.float64)

        # Test labels
        with open(os.path.join(data_dir, 't10k-labels.idx1-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        teY = loaded[8:].reshape((10000)).astype(np.float64)

        # The generator produces images from noise drawn from some distribution,
        # so no test set is needed; merge the training and test data
        X = np.concatenate((trX, teX), axis=0)
        y = np.concatenate((trY, teY), axis=0)

        # Shuffle; resetting the same seed gives both arrays the same permutation
        seed = 547
        np.random.seed(seed)
        np.random.shuffle(X)
        np.random.seed(seed)
        np.random.shuffle(y)

        # y_vec is the condition imposed on the network, namely the class label.
        # It is simply the one-hot encoding of y; for an introduction to one-hot
        # encoding see http://www.cnblogs.com/Charles-Wan/p/6207039.html
        y_vec = np.zeros((len(y), 10), dtype=np.float64)
        for i, label in enumerate(y):
            y_vec[i, int(label)] = 1.0

        return X / 255., y_vec

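The training and test sets are read and merged into a single set of 70,000 images. Both arrays are then shuffled with NumPy; resetting the same seed before each shuffle applies the same permutation to both, so images and labels stay aligned. Finally X is scaled to the range 0 to 1 (the raw pixels are integers from 0 to 255), and the labels are returned as a one-hot matrix of shape [70000, 10].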
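The two ideas in this step, keeping images and labels paired while shuffling and one-hot encoding the labels, can be tried on toy data. The sketch below is an alternative formulation rather than the code used in this post: it draws one shared permutation instead of reseeding twice, and shuffle_together and one_hot are hypothetical helper names.

```python
import numpy as np

def shuffle_together(X, y, seed=547):
    """Shuffle X and y with one shared permutation so rows stay paired."""
    perm = np.random.RandomState(seed).permutation(len(X))
    return X[perm], y[perm]

def one_hot(labels, num_classes=10):
    """Turn integer labels of shape [N] into a one-hot matrix of shape [N, num_classes]."""
    return np.eye(num_classes)[labels.astype(int)]

X = np.arange(6).reshape(6, 1) * 10   # toy "images": 0, 10, ..., 50
y = np.arange(6)                      # toy labels: 0..5, so X[i] == 10 * y[i]

Xs, ys = shuffle_together(X, y)
y_vec = one_hot(ys)
print(Xs.ravel(), ys)
print(y_vec.shape)
```

Indexing both arrays with the same permutation makes the pairing explicit, which avoids relying on two shuffles consuming the random state in exactly the same way.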

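2. Layer implementations

Next, define the basic layers: convolution, transposed convolution, fully connected (linear) and batch normalization, plus the leaky ReLU activation.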

    import tensorflow as tf
    from tensorflow.contrib.layers.python.layers import batch_norm as batch_norm

    # ops
    # Layer implementations
    def linear_layer(value, output_dim, name='linear_connected'):
        with tf.variable_scope(name):
            try:
                weights = tf.get_variable('weights',
                                          [int(value.get_shape()[1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            except ValueError:
                # The variables already exist: switch this scope to reuse mode and fetch them
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [int(value.get_shape()[1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            return tf.matmul(value, weights) + biases

    def conv2d(value, output_dim, k_h=5, k_w=5, strides=[1, 1, 1, 1], name="conv2d"):
        with tf.variable_scope(name):
            try:
                weights = tf.get_variable('weights',
                                          [k_h, k_w, int(value.get_shape()[-1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            except ValueError:
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [k_h, k_w, int(value.get_shape()[-1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            conv = tf.nn.conv2d(value, weights, strides=strides, padding="SAME")
            conv = tf.reshape(tf.nn.bias_add(conv, biases), conv.get_shape())
            return conv

    # The deconv layer is a deconvolution (transposed convolution) layer:
    # the operation a convolution layer performs during backpropagation
    def deconv2d(value, output_shape, k_h=5, k_w=5, strides=[1, 1, 1, 1], name="deconv2d"):
        with tf.variable_scope(name):
            try:
                # Filter layout for conv2d_transpose: [height, width, output channels, input channels]
                weights = tf.get_variable('weights',
                                          [k_h, k_w, output_shape[-1], int(value.get_shape()[-1])],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_shape[-1]], initializer=tf.constant_initializer(0.0))
            except ValueError:
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [k_h, k_w, output_shape[-1], int(value.get_shape()[-1])],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_shape[-1]], initializer=tf.constant_initializer(0.0))
            deconv = tf.nn.conv2d_transpose(value, weights, output_shape, strides=strides)
            deconv = tf.reshape(tf.nn.bias_add(deconv, biases), deconv.get_shape())
            return deconv

    # Concatenate the 4-D data used by the conv layers, [batch_size, h, w, c],
    # with the condition y. tf.concat needs the first three dimensions of both
    # tensors to match, so the condition is expanded first.
    # In short: attach the condition to the feature map
    def conv_cond_concat(value, cond, name='concat'):
        # Convert the tensor shapes to Python lists
        value_shapes = value.get_shape().as_list()
        cond_shapes = cond.get_shape().as_list()

        # Concatenate along axis 3 (the feature-map / channel axis).
        # The condition arrives as a 4-D tensor of shape [batch, 1, 1, 10]; multiplying
        # it by a tensor of ones broadcasts it to [batch, h, w, 10]. For an input of
        # shape [64, 32, 32, 32] the expanded condition is [64, 32, 32, 10] and the
        # output is a [64, 32, 32, 42] tensor
        with tf.variable_scope(name):
            return tf.concat([value, cond * tf.ones(value_shapes[0:3] + cond_shapes[3:])], 3, name=name)

    def batch_norm_layer(value, is_train=True, name='batch_norm'):
        with tf.variable_scope(name) as scope:
            if is_train:
                return batch_norm(value, decay=0.9, epsilon=1e-5, scale=True,
                                  is_training=is_train, updates_collections=None, scope=scope)
            else:
                return batch_norm(value, decay=0.9, epsilon=1e-5, scale=True,
                                  is_training=is_train, reuse=True,
                                  updates_collections=None, scope=scope)

    # lrelu (leaky ReLU) is a variant of ReLU with a small slope for negative inputs
    def lrelu(x, leak=0.2, name='lrelu'):
        with tf.variable_scope(name):
            return tf.maximum(x, x * leak, name=name)

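The deconv layer is a deconvolution layer, more accurately called a transposed convolution: it performs the operation that a convolution layer applies when propagating gradients back to its input. Anyone familiar with backpropagation in convolutional networks will find it easy to follow. Given the input, the output shape, the filter size and the strides, the function that TensorFlow already provides (tf.nn.conv2d_transpose) does the rest.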
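The output shape passed to a transposed convolution has to be consistent with the stride. As a rough sketch, assuming the SAME padding rule that a forward convolution yields ceil(input / stride) pixels per side, the shapes in this post can be checked with plain arithmetic. Both function names are made up for illustration.

```python
import math

def conv_same_out(size, stride):
    """Spatial output size of a convolution with SAME padding."""
    return math.ceil(size / stride)

def deconv_shape_ok(in_size, out_size, stride):
    """A transposed convolution may map in_size to out_size only if the
    forward convolution would map out_size back to in_size."""
    return conv_same_out(out_size, stride) == in_size

# Discriminator direction: 28 -> 14 -> 7 with stride 2
print(conv_same_out(28, 2), conv_same_out(14, 2))
# Generator direction: 7 -> 14 -> 28 with stride 2
print(deconv_shape_ok(7, 14, 2), deconv_shape_ok(14, 28, 2))
```

Under this rule a stride of 2 doubles the feature map in the generator and halves it in the discriminator.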

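conv_cond_concat joins the 4-D data used by the convolution layers, of shape [batch_size, h, w, c], with the condition y. tf.concat requires the first three dimensions of the two tensors to match, so the condition is first expanded to the same batch size, height and width.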
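The same broadcasting trick can be reproduced in NumPy, which makes the shapes easy to inspect. This is an illustrative sketch only: cond_concat is a hypothetical NumPy counterpart of conv_cond_concat, and the batch of two labels (3 and 5) is made up.

```python
import numpy as np

def cond_concat(value, cond):
    """Tile cond ([N, 1, 1, C2]) over the spatial grid of value ([N, H, W, C1])
    and concatenate the two along the channel axis."""
    n, h, w, _ = value.shape
    tiled = cond * np.ones((n, h, w, cond.shape[3]))   # broadcast to [N, H, W, C2]
    return np.concatenate([value, tiled], axis=3)

value = np.zeros((2, 7, 7, 128))                 # toy feature map
cond = np.eye(10)[[3, 5]].reshape(2, 1, 1, 10)   # one-hot labels 3 and 5
out = cond_concat(value, cond)
print(out.shape)
```

Every spatial position of a sample receives the same 10 extra channels, so each convolution filter can see the label wherever it is applied.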

lrelu (leaky ReLU) is a variant of ReLU that keeps a small slope for negative inputs; it is used here as the paper prescribes.
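For a single number the formula max(x, leak * x) is easy to check by hand. The snippet below is a scalar sketch of that formula, assuming 0 < leak < 1, and is independent of TensorFlow.

```python
def lrelu(x, leak=0.2):
    """Leaky ReLU for one float: identity for x >= 0, slope `leak` for x < 0."""
    return max(x, leak * x)

values = [lrelu(v) for v in (-2.0, 0.0, 3.0)]
print(values)
```

Negative inputs are scaled by the leak factor instead of being set to zero, so some gradient still flows through them.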

3. model

    # model
    BATCH_SIZE = 64  # module-level constant, as in the complete listing

    def generator(z, y, train=True):
        with tf.variable_scope('generator') as scope:
            yb = tf.reshape(y, [BATCH_SIZE, 1, 1, 10], name='g_yb')
            z_y = tf.concat([z, y], 1, name='g_z_concat_y')
            linear1 = linear_layer(z_y, 1024, name='g_linear_layer1')
            # `train` is forwarded to every batch-norm layer
            bn1 = tf.nn.relu(batch_norm_layer(linear1, is_train=train, name='g_bn1'))
            bn1_y = tf.concat([bn1, y], 1, name='g_bn1_concat_y')
            linear2 = linear_layer(bn1_y, 128 * 49, name='g_linear_layer2')
            bn2 = tf.nn.relu(batch_norm_layer(linear2, is_train=train, name='g_bn2'))
            bn2_re = tf.reshape(bn2, [BATCH_SIZE, 7, 7, 128], name='g_bn2_reshape')
            bn2_yb = conv_cond_concat(bn2_re, yb, name='g_bn2_concat_yb')
            deconv1 = deconv2d(bn2_yb, [BATCH_SIZE, 14, 14, 128], strides=[1, 2, 2, 1], name='g_deconv1')
            bn3 = tf.nn.relu(batch_norm_layer(deconv1, is_train=train, name='g_bn3'))
            bn3_yb = conv_cond_concat(bn3, yb, name='g_bn3_concat_yb')
            deconv2 = deconv2d(bn3_yb, [BATCH_SIZE, 28, 28, 1], strides=[1, 2, 2, 1], name='g_deconv2')
            return tf.nn.sigmoid(deconv2)

    def discriminator(image, y, reuse=False):
        with tf.variable_scope('discriminator') as scope:
            if reuse:
                tf.get_variable_scope().reuse_variables()
            yb = tf.reshape(y, [BATCH_SIZE, 1, 1, 10], name='d_yb')
            image_yb = conv_cond_concat(image, yb, name='d_image_concat_yb')
            conv1 = conv2d(image_yb, 11, strides=[1, 2, 2, 1], name='d_conv1')
            lr1 = lrelu(conv1, name='d_lrelu1')
            lr1_yb = conv_cond_concat(lr1, yb, name='d_lr1_concat_yb')
            conv2 = conv2d(lr1_yb, 74, strides=[1, 2, 2, 1], name='d_conv2')
            bn1 = batch_norm_layer(conv2, is_train=True, name='d_bn1')
            lr2 = lrelu(bn1, name='d_lrelu2')
            lr2_re = tf.reshape(lr2, [BATCH_SIZE, -1], name='d_lr2_reshape')
            lr2_y = tf.concat([lr2_re, y], 1, name='d_lr2_concat_y')
            linear1 = linear_layer(lr2_y, 1024, name='d_linear_layer1')
            bn2 = batch_norm_layer(linear1, is_train=True, name='d_bn2')
            lr3 = lrelu(bn2, name='d_lrelu3')
            lr3_y = tf.concat([lr3, y], 1, name='d_lr3_concat_y')
            # Return raw logits; the sigmoid is applied inside the loss
            linear2 = linear_layer(lr3_y, 1, name='d_linear_layer2')
            return linear2

    def sampler(z, y, train=True):
        # Reuse the generator variables that already exist
        tf.get_variable_scope().reuse_variables()
        return generator(z, y, train=train)

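As the code shows, the generator grows the feature map from 7 × 7 to 14 × 14 and then to 28 × 28, and the condition y is concatenated in at every layer, which follows the network described in the paper. The is_train argument exists because batch normalization behaves differently during training and testing, and this flag tells the two apart. The last layer of the generator applies a sigmoid, which maps the output into the range 0 to 1. An unconditional network would use tanh instead, whose output lies in [-1, 1]; that is the case the rescaling (images + 1.0) / 2.0 in save_images is meant for.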
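To make the 7 × 7 to 28 × 28 progression concrete, the shapes can be traced without building a graph. The list below is bookkeeping only, derived from the layer sizes in the generator above; generator_shapes is a hypothetical helper, and a batch size of 64, a 100-dimensional z and a 10-dimensional y are assumed.

```python
def generator_shapes(batch=64, z_dim=100, y_dim=10):
    """Return (stage, shape) pairs for the generator, using shape arithmetic only."""
    return [
        ("z concat y", (batch, z_dim + y_dim)),
        ("linear1", (batch, 1024)),
        ("bn1 concat y", (batch, 1024 + y_dim)),
        ("linear2", (batch, 128 * 49)),
        ("reshape", (batch, 7, 7, 128)),
        ("bn2 concat yb", (batch, 7, 7, 128 + y_dim)),
        ("deconv1", (batch, 14, 14, 128)),
        ("bn3 concat yb", (batch, 14, 14, 128 + y_dim)),
        ("deconv2 + sigmoid", (batch, 28, 28, 1)),
    ]

trace = generator_shapes()
for stage, shape in trace:
    print(stage, shape)
```

The reshape works because 128 * 49 equals 7 * 7 * 128, and each concatenation adds the 10 label channels that the following layer consumes.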

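The sampler function samples images from the generator during training, so it must enable variable reuse. For an explanation of reuse, see http://www.cnblogs.com/Charles-Wan/p/6200446.html.

The sampler exists purely so that generator does not need a reuse argument of its own. Adding a reuse flag to generator and reusing the variables there would work just as well; writing it as a separate function is simply a little clearer.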

4. train

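    # train
    import scipy.misc

    # Saves the images sampled from the generator during training.
    def save_images(images, size, path):
        """
        Save the samples images
        The best size number is
                int(max(sqrt(image.shape[0]),sqrt(image.shape[1]))) + 1
        example:
            The batch_size is 64, then the size is recommended [8, 8]
            The batch_size is 32, then the size is recommended [6, 6]
        """
        # The generator here ends in a sigmoid, so pixels are already in [0, 1].
        # A tanh generator outputs [-1, 1] and would need this rescaling first:
        # images = (images + 1.0) / 2.0
        h, w = images.shape[1], images.shape[2]

        # Create one large canvas that holds all batch_size generated images
        merge_img = np.zeros((h * size[0], w * size[1], 3))

        # Copy each image into its cell of the canvas
        for idx, image in enumerate(images):
            i = idx % size[1]
            j = idx // size[1]
            merge_img[j*h:j*h+h, i*w:i*w+w, :] = image

        # Save the canvas. scipy.misc.imsave was removed in SciPy 1.2,
        # so this call needs an older SciPy
        return scipy.misc.imsave(path, merge_img)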

def train():

# read data

X,Y = read_data()

# global_step to record the step of training

global_step = tf.Variable(0,name = 'global_step',trainable= False)

# set the data placeholder

y = tf.placeholder(tf.int32,[BATCH_SIZE],name= 'y')

_y = tf.one_hot(y, depth=10, on_value=None, off_value=None, axis=None, dtype=None, name='one_hot')

z = tf.placeholder(tf.float32,[None,100],name= 'z')

images = tf.placeholder(tf.float32,[BATCH_SIZE,28,28,1],name='images')

# model

G = generator(z,_y)

# train real data

D = discriminator(images,_y,reuse=False)

# train generated data

_D = discriminator(G,_y,reuse= True)

# calculate loss using sigmoid cross entropy

d_loss_real = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits= D,labels= tf.ones_like(D)))

d_loss_fake = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits= _D,labels=tf.zeros_like(_D)))

g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits= _D,labels= tf.ones_like(_D)))

d_loss = d_loss_real + d_loss_fake

t_vars = tf.trainable_variables()

d_vars = [var for var in t_vars if 'd_' in var.name]

g_vars = [var for var in t_vars if 'g_' in var.name]

with tf.variable_scope(tf.get_variable_scope(),reuse= False):

d_optim = tf.train.AdamOptimizer(0.0002,beta1= 0.5).minimize(d_loss,var_list=d_vars,global_step= global_step)

g_optim = tf.train.AdamOptimizer(0.0002,beta2= 0.5).minimize(g_loss,var_list=g_vars,global_step= global_step)

# tensorboard

train_dir = 'logs'

z_sum = tf.summary.histogram('z',z)

d_sum = tf.summary.histogram('d',D)

d__sum = tf.summary.histogram('d_',_D)

g_sum = tf.summary.histogram('g',G)

d_loss_real_sum = tf.summary.scalar('d_loss_real',d_loss_real)

d_loss_fake_sum = tf.summary.scalar('d_loss_fake',d_loss_fake)

g_loss_sum = tf.summary.scalar('g_loss',g_loss)

d_loss_sum = tf.summary.scalar('d_loss',d_loss)

g_sum = tf.summary.merge([z_sum,d__sum,g_sum,d_loss_fake_sum,g_loss_sum])

d_sum = tf.summary.merge([z_sum,d_sum,d_loss_real_sum,d_loss_sum])

# initial

init = tf.global_variables_initializer()

sess = tf.InteractiveSession()

writer = tf.summary.FileWriter(train_dir+'/train',sess.graph)

# save

saver = tf.train.Saver()

check_path =train_dir + '/save/model.ckpt'

# sample

sample_z = np.random.uniform(-1,1,size=(BATCH_SIZE,100))

sample_labels = Y[0:BATCH_SIZE]

# make sample

sample = sampler(z,_y)

# run

sess.run(init)

# train

for epoch in range(10):

batch_idx = int(70000/64)

for idx in range(batch_idx):

batch_images = X[idx * 64:(idx + 1) * 64]

batch_labels = Y[idx * 64:(idx + 1) * 64]

batch_z = np.random.uniform(-1, 1, size=(BATCH_SIZE, 100))

_, summary_str = sess.run([d_optim, d_sum],

feed_dict={images: batch_images,

z: batch_z,

_y: batch_labels})

writer.add_summary(summary_str, idx + 1)

_, summary_str = sess.run([g_optim, g_sum],

feed_dict={images: batch_images,

z: batch_z,

_y: batch_labels})

writer.add_summary(summary_str, idx + 1)

writer.add_summary(summary_str,idx + 1)

d_loss1 = d_loss_fake.eval({z:batch_z,_y:batch_labels})

d_loss2 = d_loss_real.eval({images:batch_images,_y:batch_labels})

D_loss = d_loss1 + d_loss2

G_loss = g_loss.eval({z:batch_z,_y:batch_labels})

# every 20 batch output loss

if idx % 20 == 0:

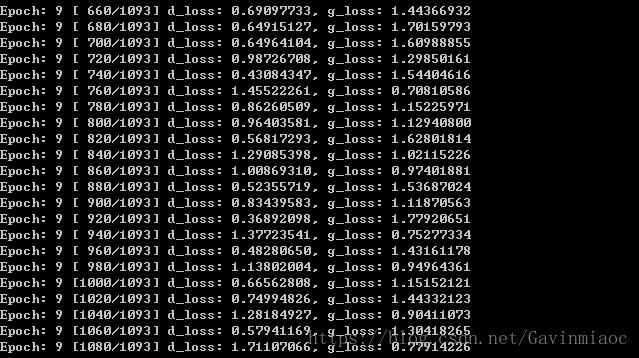

print('Epoch:%d [%4d/%4d] d_loss: %.8f,g_loss: %.8f' % (epoch,idx,batch_idx,D_loss,G_loss))

# every 100 batch save a picture

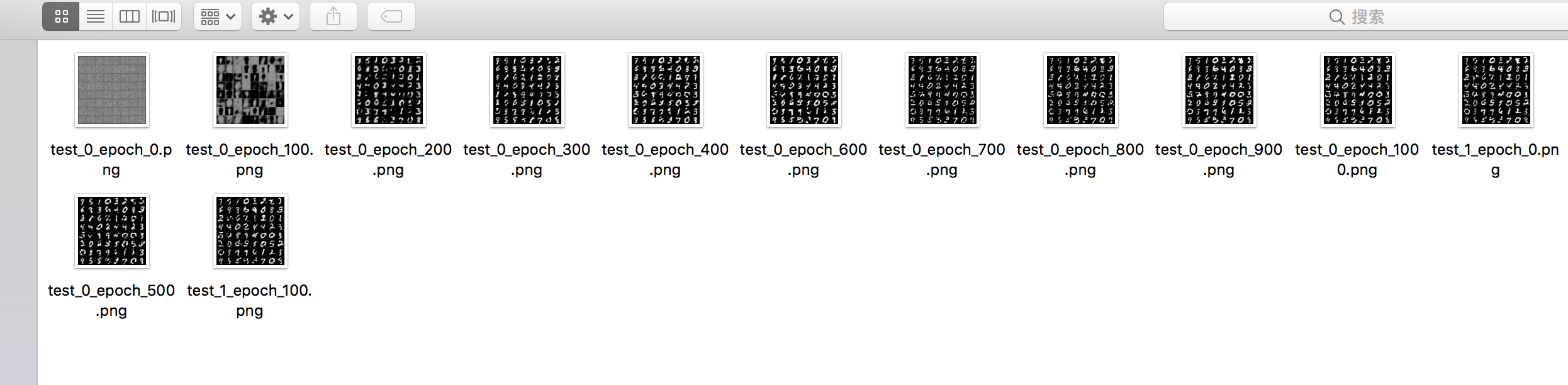

if idx % 100 == 0:

sap = sess.run(sample,feed_dict={z:sample_z,_y:sample_labels})

samples_path = 'sample/'

if not os.path.exists(samples_path):

os.makedirs(samples_path)

save_images(sap,[8,8],samples_path + 'test_%d_epoch_%d.png' % (epoch,idx))

# every 500 batch save model

if idx % 500 == 0:

saver.save(sess,check_path,global_step = idx +1)

sess.close()

if __name__ == '__main__':

train()

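_y turns the integer label placeholder y into a one-hot tensor of shape [BATCH_SIZE, 10]. Because read_data already returns one-hot labels, the training loop feeds them directly into _y.

Within the model, the generator first produces fake data; the real data is then passed to D, followed by the fake data.

The real loss and the fake loss are computed separately, and their sum is the loss of D, which should be straightforward to follow.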
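The three loss terms can be worked through on a handful of numbers. The sketch below uses plain Python and the usual numerically stable form of sigmoid cross-entropy, max(x, 0) - x * z + log(1 + exp(-|x|)); the helper names and the example logits are invented for illustration.

```python
import math

def sigmoid_xent(logit, label):
    """Numerically stable sigmoid cross-entropy for one logit and a 0/1 label."""
    return max(logit, 0.0) - logit * label + math.log1p(math.exp(-abs(logit)))

def mean(values):
    return sum(values) / len(values)

def gan_losses(real_logits, fake_logits):
    """Return (d_loss, g_loss) from the discriminator's raw outputs."""
    d_loss_real = mean([sigmoid_xent(x, 1.0) for x in real_logits])  # real should score 1
    d_loss_fake = mean([sigmoid_xent(x, 0.0) for x in fake_logits])  # fake should score 0
    g_loss = mean([sigmoid_xent(x, 1.0) for x in fake_logits])       # G wants fake scored 1
    return d_loss_real + d_loss_fake, g_loss

# A discriminator that is fairly sure of itself: high logits on real, low on fake
d_loss, g_loss = gan_losses(real_logits=[2.0, 3.0], fake_logits=[-2.0, -1.0])
print(d_loss, g_loss)
```

Note that g_loss and d_loss_fake use the same logits with opposite labels, which is what makes the two networks pull against each other.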

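Some TensorBoard summaries are set up, along with a Saver to store the model; most of this follows other people's code. Training proceeds batch by batch: one update of D, then one update of G. According to the paper, D should be trained k times for every update of G, but by Goodfellow's own account one D step per G step usually works fine.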
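The difference between the two schedules is only the order of update calls. As a toy illustration (update_schedule is a hypothetical generator, and the strings stand in for running d_optim or g_optim), k = 1 reproduces the loop in this post while larger k gives the k-steps-of-D variant:

```python
def update_schedule(num_batches, k=1):
    """Yield 'D' k times and then 'G' once for every batch."""
    for _ in range(num_batches):
        for _ in range(k):
            yield "D"
        yield "G"

one_to_one = list(update_schedule(2, k=1))
three_to_one = list(update_schedule(1, k=3))
print(one_to_one)
print(three_to_one)
```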

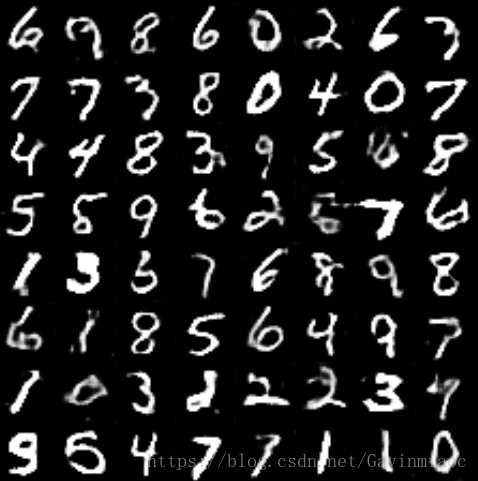

A sample image is generated every 100 batches. This is what my final run produced.

A closer look at one of them:

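During the run you can see that the images at the same row and column of every sample grid show the same digit, because of the condition we imposed. The images produced by sampler are saved in the sample folder. They go from blurry to sharp: starting as noise, they gradually turn into handwritten characters, until in the end I, at least, cannot tell generated images from real ones.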
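Where each sample ends up in the saved grid follows from the index arithmetic in save_images. The sketch below isolates that arithmetic; grid_position is a hypothetical helper, and 28 × 28 images in an 8-column grid are assumed.

```python
def grid_position(idx, cols, h=28, w=28):
    """Top-left pixel (row, col) of sample `idx` in a grid with `cols` columns."""
    i = idx % cols    # column index within the grid
    j = idx // cols   # row index within the grid
    return j * h, i * w

first = grid_position(0, 8)
tenth = grid_position(9, 8)
last = grid_position(63, 8)
print(first, tenth, last)
```

Because the sample noise and labels are fixed before training starts, sample idx always lands in the same cell with the same label, which is why a given cell shows the same digit in every saved grid.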

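Some generated digits closely resemble the real data, while others still come out broken. Then again, many digits in the real MNIST data are quite ugly too, so I stopped training here.

Here are the losses from a few rounds of training: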

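Some g_loss values are very small and some are very large, which suggests that some images already look realistic while others do not. In general a small d_loss goes with a large g_loss and vice versa, and training continues through this back-and-forth contest. My model may not have converged yet, but the generated results already look decent, so I did not train further; it is a heavy load for a laptop, after all.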
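The opposite movement of the two losses can be seen from the cross-entropy formulas directly. As a small worked example (losses_at is a hypothetical helper, and the probabilities are made up), compare a discriminator that cannot tell real from fake with one that is winning:

```python
import math

def losses_at(p_real, p_fake):
    """d_loss and g_loss when D assigns probability p_real to real images
    and p_fake to generated ones (both are probabilities of being real)."""
    d_loss = -math.log(p_real) - math.log(1.0 - p_fake)
    g_loss = -math.log(p_fake)
    return d_loss, g_loss

balanced = losses_at(0.5, 0.5)    # D outputs 0.5 everywhere
d_winning = losses_at(0.9, 0.1)   # D is confident and correct
print(balanced)
print(d_winning)
```

In the balanced case d_loss equals 2 ln 2 and g_loss equals ln 2; as the discriminator gains the upper hand, d_loss falls below that level while g_loss rises above it.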

In the spirit of sharing, the complete code follows:

    # -*- coding: utf-8 -*-
    '''
    Generating handwritten digits with a GAN

    The code is organized into four parts:
        read_data
        ops
        model
        train

    The layer types used are:
        conv (convolution layer)
        deconv (transposed convolution layer)
        linear (fully connected layer)
        batch_norm (batch normalization layer)
        lrelu/relu/sigmoid (nonlinear activation layers)
    '''
    import os
    import numpy as np
    import tensorflow as tf
    from tensorflow.examples.tutorials.mnist import input_data
    import scipy.misc
    from tensorflow.contrib.layers.python.layers import batch_norm as batch_norm

    # read_data
    # 1. Data preprocessing and loading
    # Load the dataset
    # mnist = input_data.read_data_sets("MNIST_data", one_hot=True)
    BATCH_SIZE = 64
    # Compute the total number of batches
    # n_batch = mnist.train.num_examples // BATCH_SIZE

    def read_data():
        data_dir = "MNIST_data"

        # Training images: open in binary mode and load the raw bytes into a NumPy array
        with open(os.path.join(data_dir, 'train-images.idx3-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        # Per the format described on the MNIST site, pixel data starts at byte 16
        # (np.float64 replaces the np.float alias, which recent NumPy releases removed)
        trX = loaded[16:].reshape((60000, 28, 28, 1)).astype(np.float64)

        # Training labels: label data starts at byte 8
        with open(os.path.join(data_dir, 'train-labels.idx1-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        trY = loaded[8:].reshape((60000)).astype(np.float64)

        # Test images
        with open(os.path.join(data_dir, 't10k-images.idx3-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        teX = loaded[16:].reshape((10000, 28, 28, 1)).astype(np.float64)

        # Test labels
        with open(os.path.join(data_dir, 't10k-labels.idx1-ubyte'), 'rb') as fd:
            loaded = np.fromfile(file=fd, dtype=np.uint8)
        teY = loaded[8:].reshape((10000)).astype(np.float64)

        # The generator produces images from noise drawn from some distribution,
        # so no test set is needed; merge the training and test data
        X = np.concatenate((trX, teX), axis=0)
        y = np.concatenate((trY, teY), axis=0)

        # Shuffle; resetting the same seed gives both arrays the same permutation
        seed = 547
        np.random.seed(seed)
        np.random.shuffle(X)
        np.random.seed(seed)
        np.random.shuffle(y)

        # y_vec is the condition imposed on the network, namely the class label.
        # It is simply the one-hot encoding of y; for an introduction to one-hot
        # encoding see http://www.cnblogs.com/Charles-Wan/p/6207039.html
        y_vec = np.zeros((len(y), 10), dtype=np.float64)
        for i, label in enumerate(y):
            y_vec[i, int(label)] = 1.0

        return X / 255., y_vec

    # ops
    # Layer implementations
    def linear_layer(value, output_dim, name='linear_connected'):
        with tf.variable_scope(name):
            try:
                weights = tf.get_variable('weights',
                                          [int(value.get_shape()[1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            except ValueError:
                # The variables already exist: switch this scope to reuse mode and fetch them
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [int(value.get_shape()[1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            return tf.matmul(value, weights) + biases

    def conv2d(value, output_dim, k_h=5, k_w=5, strides=[1, 1, 1, 1], name="conv2d"):
        with tf.variable_scope(name):
            try:
                weights = tf.get_variable('weights',
                                          [k_h, k_w, int(value.get_shape()[-1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            except ValueError:
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [k_h, k_w, int(value.get_shape()[-1]), output_dim],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_dim], initializer=tf.constant_initializer(0.0))
            conv = tf.nn.conv2d(value, weights, strides=strides, padding="SAME")
            conv = tf.reshape(tf.nn.bias_add(conv, biases), conv.get_shape())
            return conv

    # The deconv layer is a deconvolution (transposed convolution) layer:
    # the operation a convolution layer performs during backpropagation
    def deconv2d(value, output_shape, k_h=5, k_w=5, strides=[1, 1, 1, 1], name="deconv2d"):
        with tf.variable_scope(name):
            try:
                # Filter layout for conv2d_transpose: [height, width, output channels, input channels]
                weights = tf.get_variable('weights',
                                          [k_h, k_w, output_shape[-1], int(value.get_shape()[-1])],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_shape[-1]], initializer=tf.constant_initializer(0.0))
            except ValueError:
                tf.get_variable_scope().reuse_variables()
                weights = tf.get_variable('weights',
                                          [k_h, k_w, output_shape[-1], int(value.get_shape()[-1])],
                                          initializer=tf.truncated_normal_initializer(stddev=0.02))
                biases = tf.get_variable('biases',
                                         [output_shape[-1]], initializer=tf.constant_initializer(0.0))
            deconv = tf.nn.conv2d_transpose(value, weights, output_shape, strides=strides)
            deconv = tf.reshape(tf.nn.bias_add(deconv, biases), deconv.get_shape())
            return deconv

    # Concatenate the 4-D data used by the conv layers, [batch_size, h, w, c],
    # with the condition y. tf.concat needs the first three dimensions of both
    # tensors to match, so the condition is expanded first.
    # In short: attach the condition to the feature map
    def conv_cond_concat(value, cond, name='concat'):
        # Convert the tensor shapes to Python lists
        value_shapes = value.get_shape().as_list()
        cond_shapes = cond.get_shape().as_list()

        # Concatenate along axis 3 (the feature-map / channel axis).
        # The condition arrives as a 4-D tensor of shape [batch, 1, 1, 10]; multiplying
        # it by a tensor of ones broadcasts it to [batch, h, w, 10]. For an input of
        # shape [64, 32, 32, 32] the expanded condition is [64, 32, 32, 10] and the
        # output is a [64, 32, 32, 42] tensor
        with tf.variable_scope(name):
            return tf.concat([value, cond * tf.ones(value_shapes[0:3] + cond_shapes[3:])], 3, name=name)

    def batch_norm_layer(value, is_train=True, name='batch_norm'):
        with tf.variable_scope(name) as scope:
            if is_train:
                return batch_norm(value, decay=0.9, epsilon=1e-5, scale=True,
                                  is_training=is_train, updates_collections=None, scope=scope)
            else:
                return batch_norm(value, decay=0.9, epsilon=1e-5, scale=True,
                                  is_training=is_train, reuse=True,
                                  updates_collections=None, scope=scope)

    # lrelu (leaky ReLU) is a variant of ReLU with a small slope for negative inputs
    def lrelu(x, leak=0.2, name='lrelu'):
        with tf.variable_scope(name):
            return tf.maximum(x, x * leak, name=name)

    # model
    def generator(z, y, train=True):
        with tf.variable_scope('generator') as scope:
            yb = tf.reshape(y, [BATCH_SIZE, 1, 1, 10], name='g_yb')
            z_y = tf.concat([z, y], 1, name='g_z_concat_y')
            linear1 = linear_layer(z_y, 1024, name='g_linear_layer1')
            # `train` is forwarded to every batch-norm layer
            bn1 = tf.nn.relu(batch_norm_layer(linear1, is_train=train, name='g_bn1'))
            bn1_y = tf.concat([bn1, y], 1, name='g_bn1_concat_y')
            linear2 = linear_layer(bn1_y, 128 * 49, name='g_linear_layer2')
            bn2 = tf.nn.relu(batch_norm_layer(linear2, is_train=train, name='g_bn2'))
            bn2_re = tf.reshape(bn2, [BATCH_SIZE, 7, 7, 128], name='g_bn2_reshape')
            bn2_yb = conv_cond_concat(bn2_re, yb, name='g_bn2_concat_yb')
            deconv1 = deconv2d(bn2_yb, [BATCH_SIZE, 14, 14, 128], strides=[1, 2, 2, 1], name='g_deconv1')
            bn3 = tf.nn.relu(batch_norm_layer(deconv1, is_train=train, name='g_bn3'))
            bn3_yb = conv_cond_concat(bn3, yb, name='g_bn3_concat_yb')
            deconv2 = deconv2d(bn3_yb, [BATCH_SIZE, 28, 28, 1], strides=[1, 2, 2, 1], name='g_deconv2')
            return tf.nn.sigmoid(deconv2)

    def discriminator(image, y, reuse=False):
        with tf.variable_scope('discriminator') as scope:
            if reuse:
                tf.get_variable_scope().reuse_variables()
            yb = tf.reshape(y, [BATCH_SIZE, 1, 1, 10], name='d_yb')
            image_yb = conv_cond_concat(image, yb, name='d_image_concat_yb')
            conv1 = conv2d(image_yb, 11, strides=[1, 2, 2, 1], name='d_conv1')
            lr1 = lrelu(conv1, name='d_lrelu1')
            lr1_yb = conv_cond_concat(lr1, yb, name='d_lr1_concat_yb')
            conv2 = conv2d(lr1_yb, 74, strides=[1, 2, 2, 1], name='d_conv2')
            bn1 = batch_norm_layer(conv2, is_train=True, name='d_bn1')
            lr2 = lrelu(bn1, name='d_lrelu2')
            lr2_re = tf.reshape(lr2, [BATCH_SIZE, -1], name='d_lr2_reshape')
            lr2_y = tf.concat([lr2_re, y], 1, name='d_lr2_concat_y')
            linear1 = linear_layer(lr2_y, 1024, name='d_linear_layer1')
            bn2 = batch_norm_layer(linear1, is_train=True, name='d_bn2')
            lr3 = lrelu(bn2, name='d_lrelu3')
            lr3_y = tf.concat([lr3, y], 1, name='d_lr3_concat_y')
            # Return raw logits; the sigmoid is applied inside the loss
            linear2 = linear_layer(lr3_y, 1, name='d_linear_layer2')
            return linear2

    def sampler(z, y, train=True):
        # Reuse the generator variables that already exist
        tf.get_variable_scope().reuse_variables()
        return generator(z, y, train=train)

    # train
    # Saves the images sampled from the generator during training.
    def save_images(images, size, path):
        """
        Save the samples images
        The best size number is
                int(max(sqrt(image.shape[0]),sqrt(image.shape[1]))) + 1
        example:
            The batch_size is 64, then the size is recommended [8, 8]
            The batch_size is 32, then the size is recommended [6, 6]
        """
        # The generator here ends in a sigmoid, so pixels are already in [0, 1].
        # A tanh generator outputs [-1, 1] and would need this rescaling first:
        # images = (images + 1.0) / 2.0
        h, w = images.shape[1], images.shape[2]

        # Create one large canvas that holds all batch_size generated images
        merge_img = np.zeros((h * size[0], w * size[1], 3))

        # Copy each image into its cell of the canvas
        for idx, image in enumerate(images):
            i = idx % size[1]
            j = idx // size[1]
            merge_img[j*h:j*h+h, i*w:i*w+w, :] = image

        # Save the canvas. scipy.misc.imsave was removed in SciPy 1.2,
        # so this call needs an older SciPy
        return scipy.misc.imsave(path, merge_img)

    def train():
        # read data
        X, Y = read_data()

        # global_step to record the step of training
        global_step = tf.Variable(0, name='global_step', trainable=False)

        # set the data placeholders
        y = tf.placeholder(tf.int32, [BATCH_SIZE], name='y')
        _y = tf.one_hot(y, depth=10, on_value=None, off_value=None, axis=None, dtype=None, name='one_hot')
        z = tf.placeholder(tf.float32, [None, 100], name='z')
        images = tf.placeholder(tf.float32, [BATCH_SIZE, 28, 28, 1], name='images')

        # model
        G = generator(z, _y)
        # discriminator on real data
        D = discriminator(images, _y, reuse=False)
        # discriminator on generated data
        _D = discriminator(G, _y, reuse=True)

        # calculate loss using sigmoid cross entropy
        d_loss_real = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=D, labels=tf.ones_like(D)))
        d_loss_fake = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=_D, labels=tf.zeros_like(_D)))
        g_loss = tf.reduce_mean(tf.nn.sigmoid_cross_entropy_with_logits(logits=_D, labels=tf.ones_like(_D)))
        d_loss = d_loss_real + d_loss_fake

        t_vars = tf.trainable_variables()
        d_vars = [var for var in t_vars if 'd_' in var.name]
        g_vars = [var for var in t_vars if 'g_' in var.name]

        with tf.variable_scope(tf.get_variable_scope(), reuse=False):
            d_optim = tf.train.AdamOptimizer(0.0002, beta1=0.5).minimize(d_loss, var_list=d_vars, global_step=global_step)
            # beta1 (the original had beta2) is set to 0.5, matching d_optim
            g_optim = tf.train.AdamOptimizer(0.0002, beta1=0.5).minimize(g_loss, var_list=g_vars, global_step=global_step)

        # tensorboard
        train_dir = 'logs'
        z_sum = tf.summary.histogram('z', z)
        d_sum = tf.summary.histogram('d', D)
        d__sum = tf.summary.histogram('d_', _D)
        g_sum = tf.summary.histogram('g', G)

        d_loss_real_sum = tf.summary.scalar('d_loss_real', d_loss_real)
        d_loss_fake_sum = tf.summary.scalar('d_loss_fake', d_loss_fake)
        g_loss_sum = tf.summary.scalar('g_loss', g_loss)
        d_loss_sum = tf.summary.scalar('d_loss', d_loss)

        g_sum = tf.summary.merge([z_sum, d__sum, g_sum, d_loss_fake_sum, g_loss_sum])
        d_sum = tf.summary.merge([z_sum, d_sum, d_loss_real_sum, d_loss_sum])

        # initial
        init = tf.global_variables_initializer()
        sess = tf.InteractiveSession()
        writer = tf.summary.FileWriter(train_dir + '/train', sess.graph)

        # save
        saver = tf.train.Saver()
        check_path = train_dir + '/save/model.ckpt'

        # sample: fixed noise and labels, so successive sample grids are comparable
        sample_z = np.random.uniform(-1, 1, size=(BATCH_SIZE, 100))
        sample_labels = Y[0:BATCH_SIZE]

        # make sample
        sample = sampler(z, _y)

        # run
        sess.run(init)

        # train
        for epoch in range(10):
            batch_idx = len(X) // BATCH_SIZE
            for idx in range(batch_idx):
                batch_images = X[idx * BATCH_SIZE:(idx + 1) * BATCH_SIZE]
                batch_labels = Y[idx * BATCH_SIZE:(idx + 1) * BATCH_SIZE]
                batch_z = np.random.uniform(-1, 1, size=(BATCH_SIZE, 100))

                # one update of D
                _, summary_str = sess.run([d_optim, d_sum],
                                          feed_dict={images: batch_images,
                                                     z: batch_z,
                                                     _y: batch_labels})
                writer.add_summary(summary_str, idx + 1)

                # one update of G
                _, summary_str = sess.run([g_optim, g_sum],
                                          feed_dict={images: batch_images,
                                                     z: batch_z,
                                                     _y: batch_labels})
                writer.add_summary(summary_str, idx + 1)

                d_loss1 = d_loss_fake.eval({z: batch_z, _y: batch_labels})
                d_loss2 = d_loss_real.eval({images: batch_images, _y: batch_labels})
                D_loss = d_loss1 + d_loss2
                G_loss = g_loss.eval({z: batch_z, _y: batch_labels})

                # every 20 batches print the loss
                if idx % 20 == 0:
                    print('Epoch:%d [%4d/%4d] d_loss: %.8f,g_loss: %.8f' % (epoch, idx, batch_idx, D_loss, G_loss))

                # every 100 batches save a sample image
                if idx % 100 == 0:
                    sap = sess.run(sample, feed_dict={z: sample_z, _y: sample_labels})
                    samples_path = 'sample/'
                    if not os.path.exists(samples_path):
                        os.makedirs(samples_path)
                    save_images(sap, [8, 8], samples_path + 'test_%d_epoch_%d.png' % (epoch, idx))

                # every 500 batches save the model
                if idx % 500 == 0:
                    saver.save(sess, check_path, global_step=idx + 1)

        sess.close()

    if __name__ == '__main__':
        train()

References:

1. https://github.com/carpedm20/DCGAN-tensorflow

2. https://github.com/tensorflow/tensorflow/blob/b826b79718e3e93148c3545e7aa3f90891744cc0/tensorflow/contrib/layers/python/layers/layers.py#L100

3. http://www.cnblogs.com/Charles-Wan/p/6338074.html

4. https://zhuanlan.zhihu.com/p/27347398