(1) Environment configuration before setup

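This article builds a Flink setup from scratch and uses it to get familiar with Flink development. All environments are therefore a single Windows machine and the development language is Java. Some cluster configuration is covered at the end.

Before setting up a local single-machine Flink environment, make sure Java and Maven are already installed. The author used Java 1.8.0_241, Maven 3.6.3, and Flink 1.9.2.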
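The walkthrough was done on Java 1.8.0_241, so it can help to confirm which JVM Maven and Flink will actually use. The helper below is hypothetical (the name JavaVersionCheck is illustrative). It reads the standard java.version system property and extracts the major version. It handles both the legacy "1.8.0_241" scheme and the newer "11.0.2" scheme:

```java
// Hypothetical helper: extracts the major Java version from a "java.version" string.
final class JavaVersionCheck {

    // "1.8.0_241" -> 8 (legacy 1.x scheme), "11.0.2" -> 11, "17" -> 17
    static int majorVersion(String version) {
        String[] parts = version.split("[._+-]");
        int first = Integer.parseInt(parts[0]);
        return first == 1 ? Integer.parseInt(parts[1]) : first;
    }

    public static void main(String[] args) {
        String version = System.getProperty("java.version");
        int major = majorVersion(version);
        System.out.println("java.version = " + version + " (major " + major + ")");
        if (major != 8) {
            // Only a reminder: the walkthrough itself was done on Java 1.8.0_241.
            System.out.println("Note: this walkthrough was tested with Java 8.");
        }
    }
}
```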

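Next, download the Flink distribution from the official website: https://flink.apache.org/downloads.html

The following download options are available:

For a local environment, Scala 2.11 and 2.12 make no difference, so download either one. After extracting the archive, the directory looks like this: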

(2) Starting the cluster web UI

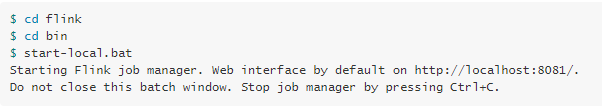

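The official documentation describes starting a local job as shown below:

However, the directory the author actually saw looks like this:

There is no start-local.bat. After some research, the author learned that newer versions of Flink no longer ship this startup script. Run start-cluster.bat directly instead, as shown below:

Then open http://localhost:8081 in a browser. The running UI looks like this:

The page is empty for now, but that does not affect testing. Once the cluster environment is started later, the UI shows information about running jobs.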
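You can also check from code that the cluster started by start-cluster.bat is reachable. The minimal sketch below queries the REST API behind the web UI. It assumes the default port 8081 and uses the /overview endpoint, which returns a JSON summary of the cluster. The class name FlinkUiCheck is hypothetical:

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URL;

// Minimal sketch: reports whether a Flink REST endpoint answers with HTTP 200.
final class FlinkUiCheck {

    static boolean isUp(String url) {
        try {
            HttpURLConnection conn = (HttpURLConnection) new URL(url).openConnection();
            conn.setConnectTimeout(2000);   // fail fast if nothing is listening
            conn.setReadTimeout(2000);
            conn.setRequestMethod("GET");
            int status = conn.getResponseCode();
            conn.disconnect();
            return status == 200;
        } catch (IOException e) {
            return false;                   // connection refused, timeout, bad URL, ...
        }
    }

    public static void main(String[] args) {
        String url = "http://localhost:8081/overview";
        System.out.println(url + (isUp(url) ? " is up" : " is not reachable"));
    }
}
```

Connection errors are caught, so isUp simply returns false when the cluster is not running.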

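Windows offers one more way to start Flink. Install Cygwin and start Flink the Linux way, that is, by running start-cluster.sh. Since this article is only a demonstration, that approach is not recommended here. If you intend to do real development, it is better to set up a small cluster for simulation and development.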

(3) Creating the Maven project

The officially recommended way is to build with mvn, using the following command:

mvn archetype:generate \
    -DarchetypeGroupId=org.apache.flink \
    -DarchetypeArtifactId=flink-quickstart-java \
    -DarchetypeCatalog=https://repository.apache.org/content/repositories/snapshots/ \
    -DarchetypeVersion=1.3-SNAPSHOT

During the actual installation, the author ran into three problems:

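First, -DarchetypeCatalog causes an error; simply delete it. (Recent versions of maven-archetype-plugin no longer accept a repository URL for this parameter.)

Second, access to the official Maven repository is consistently slow, so switch to the Alibaba Cloud mirror. Open the Maven installation directory and edit settings.xml in its conf directory. If you use an IDE such as Eclipse, the Maven it runs may not be the one you installed yourself. In that case, edit that Maven's settings.xml instead.

Open settings.xml, search for "mirror", uncomment the commented-out block, and change it to the following: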

<mirror>
    <id>nexus-aliyun</id>
    <mirrorOf>*</mirrorOf>
    <name>Nexus aliyun</name>
    <url>http://maven.aliyun.com/nexus/content/groups/public</url>
</mirror>

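There is a catch, though: the Alibaba Cloud mirror differs somewhat from the official repository. With the latest Flink versions, it may fail to find the required POM files. If that happens, switch the Maven configuration back to the official repository and rebuild to resolve the problem.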

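Third, 1.3-SNAPSHOT is an outdated snapshot version of unknown age, so the author manually changed it to 1.9.2. The directory looks like this:

The JAR can be downloaded from the Maven repository site:

https://mvnrepository.com/artifact/org.apache.flink/flink-quickstart-java/1.9.2

After downloading it, put it into the corresponding directory:

With these changes, the official build command runs correctly.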
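The screenshot is not available here, but assume "the corresponding directory" means Maven's local repository. The JAR then goes into a path derived from its coordinates: the groupId with dots turned into directories, then the artifactId, then the version, and finally a file named artifactId-version.jar. The sketch below computes that path under the default local repository, ${user.home}/.m2/repository. The class name LocalRepoPath is hypothetical:

```java
import java.nio.file.Path;
import java.nio.file.Paths;

// Sketch of Maven's default local-repository layout:
// <repo>/<groupId, '.' -> '/'>/<artifactId>/<version>/<artifactId>-<version>.jar
final class LocalRepoPath {

    static Path jarPath(Path repo, String groupId, String artifactId, String version) {
        Path dir = repo;
        for (String part : groupId.split("\\.")) {
            dir = dir.resolve(part);        // org.apache.flink -> org/apache/flink
        }
        return dir.resolve(artifactId)
                  .resolve(version)
                  .resolve(artifactId + "-" + version + ".jar");
    }

    public static void main(String[] args) {
        // Default location; a <localRepository> entry in settings.xml overrides it.
        Path repo = Paths.get(System.getProperty("user.home"), ".m2", "repository");
        System.out.println(jarPath(repo, "org.apache.flink", "flink-quickstart-java", "1.9.2"));
    }
}
```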

The final mvn command is:

mvn archetype:generate -DarchetypeGroupId=org.apache.flink -DarchetypeArtifactId=flink-quickstart-java -DarchetypeVersion=1.9.2 -DgroupId=wiki-edits -DartifactId=wiki-edits -Dversion=0.1 -Dpackage=wikiedits -DinteractiveMode=false

The successful build looks like this:

After the build finishes, open Eclipse, import the Maven project, and run mvn clean install. The project then looks like this:

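(4) Creating and running the WordCount job

The Quickstart project contains a WordCount implementation, the "Hello World" of big-data processing systems. WordCount computes how often each word occurs in a text. For example, it can count how many times the word "the" or "house" appears across all Wikipedia text.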
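The counting logic itself can be sketched in plain Java without any Flink dependency. This is an illustrative stand-in, not the Quickstart code, and the name PlainWordCount is hypothetical. It lower-cases each line, splits on non-word characters as the Flink example does, and accumulates counts in an insertion-ordered map:

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Plain-Java word count: same tokenization as the Flink example, no Flink involved.
final class PlainWordCount {

    static Map<String, Integer> count(String... lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();   // keeps first-seen order
        for (String line : lines) {
            for (String token : line.toLowerCase().split("\\W+")) {
                if (!token.isEmpty()) {
                    counts.merge(token, 1, Integer::sum);     // add 1 to the running count
                }
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        count("big data is big").forEach((word, n) -> System.out.println(word + " " + n));
    }
}
```

Running main prints "big 2", "data 1" and "is 1" on separate lines, matching the sample output.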

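Sample input:

big data is big

Sample output:

big 2
data 1
is 1

The code below is the WordCount implementation from the Quickstart project. It processes some text with a FlatMap followed by a grouped sum (groupBy(0).sum(1)) and prints the word counts to standard output.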

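import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.DataSet;
import org.apache.flink.api.java.ExecutionEnvironment;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.util.Collector;

public class WordCount {

    public static void main(String[] args) throws Exception {
        // set up the execution environment
        final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

        // get input data
        DataSet<String> text = env.fromElements(
            "To be, or not to be,--that is the question:--",
            "Whether 'tis nobler in the mind to suffer",
            "The slings and arrows of outrageous fortune",
            "Or to take arms against a sea of troubles,"
        );

        DataSet<Tuple2<String, Integer>> counts =
            // split up the lines in pairs (2-tuples) containing: (word,1)
            text.flatMap(new LineSplitter())
            // group by the tuple field "0" and sum up tuple field "1"
            .groupBy(0)
            .sum(1);

        // print() triggers execution of a DataSet program, so no env.execute() is needed
        counts.print();
    }

    // static nested class LineSplitter (shown below) goes here
}

The splitting logic lives in its own class. Below is LineSplitter, which is nested inside WordCount: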

public static final class LineSplitter implements FlatMapFunction<String, Tuple2<String, Integer>> {

    @Override
    public void flatMap(String value, Collector<Tuple2<String, Integer>> out) {
        // normalize and split the line
        String[] tokens = value.toLowerCase().split("\\W+");

        // emit the pairs
        for (String token : tokens) {
            if (token.length() > 0) {
                out.collect(new Tuple2<String, Integer>(token, 1));
            }
        }
    }
}

The output is shown below:

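The implementation above is very simple. The next example is a more complete WordCount that covers input, output, and the DataSet data structure.

First, write a WordCountData class: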

import org.apache.flink.api.java.DataSet;
import org.apache.flink.api.java.ExecutionEnvironment;

public class WordCountData {

    public static final String[] WORDS = new String[] {
        "To be, or not to be,--that is the question:--",
        "Whether 'tis nobler in the mind to suffer",
        "The slings and arrows of outrageous fortune",
        "Or to take arms against a sea of troubles,",
        "And by opposing end them?--To die,--to sleep,--",
        "No more; and by a sleep to say we end",
        "The heartache, and the thousand natural shocks",
        "That flesh is heir to,--'tis a consummation",
        "Devoutly to be wish'd. To die,--to sleep;--",
        "To sleep! perchance to dream:--ay, there's the rub;",
        "For in that sleep of death what dreams may come,",
        "When we have shuffled off this mortal coil,",
        "Must give us pause: there's the respect",
        "That makes calamity of so long life;",
        "For who would bear the whips and scorns of time,",
        "The oppressor's wrong, the proud man's contumely,",
        "The pangs of despis'd love, the law's delay,",
        "The insolence of office, and the spurns",
        "That patient merit of the unworthy takes,",
        "When he himself might his quietus make",
        "With a bare bodkin? who would these fardels bear,",
        "To grunt and sweat under a weary life,",
        "But that the dread of something after death,--",
        "The undiscover'd country, from whose bourn",
        "No traveller returns,--puzzles the will,",
        "And makes us rather bear those ills we have",
        "Than fly to others that we know not of?",
        "Thus conscience does make cowards of us all;",
        "And thus the native hue of resolution",
        "Is sicklied o'er with the pale cast of thought;",
        "And enterprises of great pith and moment,",
        "With this regard, their currents turn awry,",
        "And lose the name of action.--Soft you now!",
        "The fair Ophelia!--Nymph, in thy orisons",
        "Be all my sins remember'd."
    };

    // wraps the sample text above as a DataSet<String>, one element per line
    public static DataSet<String> getDefaultTextLineDataSet(ExecutionEnvironment env) {
        return env.fromElements(WORDS);
    }
}

Then write the WordCount example:

import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.java.DataSet;
import org.apache.flink.api.java.ExecutionEnvironment;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.api.java.utils.MultipleParameterTool;
import org.apache.flink.util.Collector;
import org.apache.flink.util.Preconditions;

public class WordCount {

    // *************************************************************************
    // PROGRAM
    // *************************************************************************

    public static void main(String[] args) throws Exception {

        final MultipleParameterTool params = MultipleParameterTool.fromArgs(args);

        // set up the execution environment
        final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

        // make parameters available in the web interface
        env.getConfig().setGlobalJobParameters(params);

        // get input data
        DataSet<String> text = null;
        if (params.has("input")) {
            // union all the inputs from text files
            for (String input : params.getMultiParameterRequired("input")) {
                if (text == null) {
                    text = env.readTextFile(input);
                } else {
                    text = text.union(env.readTextFile(input));
                }
            }
            Preconditions.checkNotNull(text, "Input DataSet should not be null.");
        } else {
            // get default test text data
            System.out.println("Executing WordCount example with default input data set.");
            System.out.println("Use --input to specify file input.");
            text = WordCountData.getDefaultTextLineDataSet(env);
        }

        DataSet<Tuple2<String, Integer>> counts =
            // split up the lines in pairs (2-tuples) containing: (word,1)
            text.flatMap(new Tokenizer())
            // group by the tuple field "0" and sum up tuple field "1"
            .groupBy(0)
            .sum(1);

        // emit result
        if (params.has("output")) {
            // one "word count" pair per line: row delimiter "\n", field delimiter " "
            counts.writeAsCsv(params.get("output"), "\n", " ");
            // file sinks are lazy, so the program has to be executed explicitly
            env.execute("WordCount Example");
        } else {
            System.out.println("Printing result to stdout. Use --output to specify output path.");
            // print() triggers execution itself
            counts.print();
        }
    }

    // *************************************************************************
    // USER FUNCTIONS
    // *************************************************************************

    /**
     * Implements the string tokenizer that splits sentences into words as a user-defined
     * FlatMapFunction. The function takes a line (String) and splits it into
     * multiple pairs in the form of "(word,1)" ({@code Tuple2<String, Integer>}).
     */
    public static final class Tokenizer implements FlatMapFunction<String, Tuple2<String, Integer>> {

        @Override
        public void flatMap(String value, Collector<Tuple2<String, Integer>> out) {
            // normalize and split the line
            String[] tokens = value.toLowerCase().split("\\W+");

            // emit the pairs
            for (String token : tokens) {
                if (token.length() > 0) {
                    out.collect(new Tuple2<>(token, 1));
                }
            }
        }
    }
}

The output has the same (word, count) format as in the first example. The counts are higher, though, because the default data set contains the full passage from WordCountData rather than only its first four lines.
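Both LineSplitter and Tokenizer rely on value.toLowerCase().split("\\W+"), and two details of that call explain the code. First, a line that starts with a delimiter produces an empty first token, which is why the loop checks token.length() > 0. String.split already drops trailing empty tokens. Second, the apostrophe is not a word character, so contractions in the sample text such as "wish'd" become two tokens. A small stand-alone check follows (TokenizerDemo is a hypothetical name):

```java
import java.util.Arrays;

// Shows what the tokenizer's split("\\W+") call produces for two sample lines.
final class TokenizerDemo {

    static String[] tokens(String line) {
        return line.toLowerCase().split("\\W+");
    }

    public static void main(String[] args) {
        // leading delimiter -> empty first token: [, to, die]
        System.out.println(Arrays.toString(tokens("--To die")));
        // apostrophe splits the contraction: [devoutly, to, be, wish, d]
        System.out.println(Arrays.toString(tokens("Devoutly to be wish'd.")));
    }
}
```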

(5) Running on a cluster and connecting to Kafka

First, add the Kafka connector dependency to the pom:

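<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-connector-kafka-0.8_2.11</artifactId>
    <version>${flink.version}</version>
</dependency>

Next, remove the print() call from the original job code and write the results to Kafka instead. The job below analyzes the Wikipedia edit stream. Its WikipediaEditsSource comes from the flink-connector-wikiedits_2.11 dependency, which the project also needs: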

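package wikiedits;

import org.apache.flink.api.common.functions.FoldFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.common.serialization.SimpleStringSchema;
import org.apache.flink.api.java.functions.KeySelector;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaProducer08;
import org.apache.flink.streaming.connectors.wikiedits.WikipediaEditEvent;
import org.apache.flink.streaming.connectors.wikiedits.WikipediaEditsSource;

public class WikipediaAnalysis {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment see = StreamExecutionEnvironment.getExecutionEnvironment();

        DataStream<WikipediaEditEvent> edits = see.addSource(new WikipediaEditsSource());

        // key the stream by the user who made the edit
        KeyedStream<WikipediaEditEvent, String> keyedEdits = edits
            .keyBy(new KeySelector<WikipediaEditEvent, String>() {
                @Override
                public String getKey(WikipediaEditEvent event) {
                    return event.getUser();
                }
            });

        // per user, sum the byte difference of all edits in each 5-second window
        DataStream<Tuple2<String, Long>> result = keyedEdits
            .timeWindow(Time.seconds(5))
            .fold(new Tuple2<>("", 0L), new FoldFunction<WikipediaEditEvent, Tuple2<String, Long>>() {
                @Override
                public Tuple2<String, Long> fold(Tuple2<String, Long> acc, WikipediaEditEvent event) {
                    acc.f0 = event.getUser();
                    acc.f1 += event.getByteDiff();
                    return acc;
                }
            });

        // turn each tuple into a string and write it to the Kafka topic "wiki-result"
        result
            .map(new MapFunction<Tuple2<String, Long>, String>() {
                @Override
                public String map(Tuple2<String, Long> tuple) {
                    return tuple.toString();
                }
            })
            .addSink(new FlinkKafkaProducer08<>("localhost:9092", "wiki-result", new SimpleStringSchema()));

        see.execute();
    }
}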
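The windowing step can be hard to picture from the Flink code alone. The plain-Java simulation below mirrors keyBy(user), a 5-second tumbling window, and the fold that sums getByteDiff() per user. It rests on a few assumptions. Events carry explicit, non-negative millisecond timestamps, whereas the Flink job above uses processing time, the default in this Flink version. The Edit class is a hypothetical stand-in for WikipediaEditEvent:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Simulates: keyBy(user) -> 5 s tumbling window -> sum of byte diffs per user and window.
final class WindowSimulation {

    // Hypothetical stand-in for WikipediaEditEvent.
    static final class Edit {
        final long timestampMs;
        final String user;
        final long byteDiff;

        Edit(long timestampMs, String user, long byteDiff) {
            this.timestampMs = timestampMs;
            this.user = user;
            this.byteDiff = byteDiff;
        }
    }

    // window start (ms) -> (user -> summed byteDiff); assumes non-negative timestamps
    static Map<Long, Map<String, Long>> aggregate(List<Edit> edits, long windowSizeMs) {
        Map<Long, Map<String, Long>> windows = new TreeMap<>();
        for (Edit e : edits) {
            long windowStart = e.timestampMs - (e.timestampMs % windowSizeMs);
            windows.computeIfAbsent(windowStart, w -> new TreeMap<>())
                   .merge(e.user, e.byteDiff, Long::sum);
        }
        return windows;
    }

    public static void main(String[] args) {
        List<Edit> edits = new ArrayList<>();
        edits.add(new Edit(1_000, "alice", 120));
        edits.add(new Edit(3_500, "alice", -20));
        edits.add(new Edit(4_000, "bob", 50));
        edits.add(new Edit(6_200, "alice", 10));
        aggregate(edits, 5_000).forEach((start, perUser) ->
            System.out.println("window starting at " + start + " ms: " + perUser));
    }
}
```

With these sample events, main prints "window starting at 0 ms: {alice=100, bob=50}" and then "window starting at 5000 ms: {alice=10}". Each (window, user) entry corresponds to one Tuple2 that the Flink job would send to Kafka.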

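Finally, start Kafka and watch the output:

bin/kafka-console-consumer.sh --zookeeper localhost:2181 --topic wiki-result

(The --zookeeper option matches old Kafka releases such as 0.8. Newer Kafka versions use --bootstrap-server localhost:9092 instead.)

Watch the job in the web UI: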