1 Project dependencies

I am using Scala 2.12, so the Flink artifacts that carry a Scala suffix (_2.12) must match that version.

<properties>
    <java.version>1.8</java.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-clients_2.12</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-java</artifactId>
        <version>1.12.0</version>
    </dependency>
    <dependency>
        <groupId>org.apache.flink</groupId>
        <artifactId>flink-streaming-java_2.12</artifactId>
        <version>1.12.0</version>
    </dependency>
</dependencies>

2 Running locally

2.1 Passing function objects as arguments

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.functions.FlatMapFunction;
import org.apache.flink.api.common.functions.MapFunction;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

public class WordCountSourceDataStream {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Set the runtime mode
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        // 3. Prepare the input data
        DataStream<String> linesDS = env.fromElements("hadoop,hdfs,flink,spark", "hadoop,hdfs,flink", "hadoop,hdfs", "hadoop,spark");

        // 4. Process the data
        // 4.1 Split each line on commas into individual words
        DataStream<String> wordsDS = linesDS.flatMap(new FlatMapFunction<String, String>() {
            @Override
            public void flatMap(String value, Collector<String> out) throws Exception {
                // value is one line of comma-separated words
                String[] words = value.split(",");
                for (String word : words) {
                    // emit each word
                    out.collect(word);
                }
            }
        });

        // 4.2 Map each word to a (word, 1) tuple
        DataStream<Tuple2<String, Integer>> word_1 = wordsDS.map(new MapFunction<String, Tuple2<String, Integer>>() {
            @Override
            public Tuple2<String, Integer> map(String value) throws Exception {
                return Tuple2.of(value, 1);
            }
        });

        // 4.3 Group the tuples by word
        KeyedStream<Tuple2<String, Integer>, String> word_1_group = word_1.keyBy(tuple2 -> tuple2.f0);

        // 4.4 Sum field 1 (the count) within each group
        DataStream<Tuple2<String, Integer>> result = word_1_group.sum(1);

        // 5. Print the result
        result.print();

        // 6. Execute the job
        env.execute();
    }
}
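To make steps 4.3 and 4.4 concrete, here is a plain-Java sketch (no Flink involved) of what the pipeline computes on the four sample lines. The class and method names (WordCountSketch, runningCounts, finalCounts) are made up for illustration. runningCounts mimics how a keyed sum(1) emits an updated count for every incoming word in STREAMING mode. finalCounts mimics BATCH mode, which, as far as I understand, is what AUTOMATIC selects here because fromElements is a bounded source, so only the final count per word is printed. Real Flink output is additionally prefixed with the subtask index and may appear in a different order.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Plain-Java illustration only; none of these names exist in Flink.
public class WordCountSketch {

    // Mimics STREAMING mode: one updated "(word,count)" record per incoming word.
    public static List<String> runningCounts(String... lines) {
        Map<String, Integer> state = new LinkedHashMap<>();
        List<String> emitted = new ArrayList<>();
        for (String line : lines) {
            for (String word : line.split(",")) {
                int count = state.merge(word, 1, Integer::sum);
                emitted.add("(" + word + "," + count + ")");
            }
        }
        return emitted;
    }

    // Mimics BATCH mode: only the final count per word.
    public static Map<String, Integer> finalCounts(String... lines) {
        Map<String, Integer> counts = new LinkedHashMap<>();
        for (String line : lines) {
            for (String word : line.split(",")) {
                counts.merge(word, 1, Integer::sum);
            }
        }
        return counts;
    }

    public static void main(String[] args) {
        String[] lines = {"hadoop,hdfs,flink,spark", "hadoop,hdfs,flink", "hadoop,hdfs", "hadoop,spark"};
        System.out.println(runningCounts(lines));
        System.out.println(finalCounts(lines));
    }
}
```

The streaming variant produces eleven records for the sample input (one per word occurrence), while the batch variant produces four (one per distinct word).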

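2.2 Lambda expressions

Steps 4.1 and 4.2 of the code above are rewritten as lambdas. Because Java erases the generic types of a lambda, each call is followed by returns(...) so that Flink knows the output type.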

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

import java.util.Arrays;

public class WordCountSourceDataStreamL {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Set the runtime mode
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        // 3. Prepare the input data
        DataStream<String> linesDS = env.fromElements("hadoop,hdfs,flink,spark", "hadoop,hdfs,flink", "hadoop,hdfs", "hadoop,spark");

        // 4. Process the data
        // 4.1 Split each line on commas into individual words
        DataStream<String> wordsDS = linesDS.flatMap(
            (String value, Collector<String> out) -> Arrays.asList(value.split(",")).forEach(out::collect)
        ).returns(Types.STRING);

        // 4.2 Map each word to a (word, 1) tuple
        DataStream<Tuple2<String, Integer>> word_1 = wordsDS.map(
            (String value) -> Tuple2.of(value, 1)
        ).returns(TypeInformation.of(new TypeHint<Tuple2<String, Integer>>() {
        }));

        // 4.3 Group the tuples by word
        KeyedStream<Tuple2<String, Integer>, String> word_1_group = word_1.keyBy(tuple2 -> tuple2.f0);

        // 4.4 Sum field 1 (the count) within each group
        DataStream<Tuple2<String, Integer>> result = word_1_group.sum(1);

        // 5. Print the result
        result.print();

        // 6. Execute the job
        env.execute();
    }
}
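The returns(...) calls and the empty braces after new TypeHint<...>() can look odd at first, so here is a small plain-Java sketch of the underlying Java behaviour. It does not use Flink: Hint is a hypothetical stand-in for TypeHint, and ErasureSketch and visibleSignature are names invented for this example. The idea is that an anonymous class keeps its generic type arguments in the class file, where reflection can read them, while a lambda does not. Flink's real type extraction is more involved than this.

```java
import java.lang.reflect.ParameterizedType;
import java.lang.reflect.Type;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

// Plain-Java illustration only; Hint is a hypothetical stand-in for Flink's TypeHint.
public class ErasureSketch {

    // An anonymous subclass of Hint<T> records T in its generic superclass signature.
    public abstract static class Hint<T> {
        public Type captured() {
            ParameterizedType superType = (ParameterizedType) getClass().getGenericSuperclass();
            return superType.getActualTypeArguments()[0];
        }
    }

    // Returns the interface signature that reflection can see on the function's class.
    public static String visibleSignature(Function<?, ?> f) {
        Type[] interfaces = f.getClass().getGenericInterfaces();
        return interfaces.length == 0 ? "none" : interfaces[0].getTypeName();
    }

    public static void main(String[] args) {
        Function<String, List<Integer>> anonymous = new Function<String, List<Integer>>() {
            @Override
            public List<Integer> apply(String s) {
                return Collections.singletonList(s.length());
            }
        };
        Function<String, List<Integer>> lambda = s -> Collections.singletonList(s.length());

        // Anonymous class: the type arguments are still visible.
        System.out.println(visibleSignature(anonymous));
        // Lambda: only the raw interface is visible.
        System.out.println(visibleSignature(lambda));
        // Anonymous subclass of Hint: the full nested type is recovered.
        System.out.println(new Hint<List<Integer>>() {}.captured().getTypeName());
    }
}
```

This is why the anonymous-class version in 2.1 needs no type hints, while the lambda version has to supply them explicitly.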

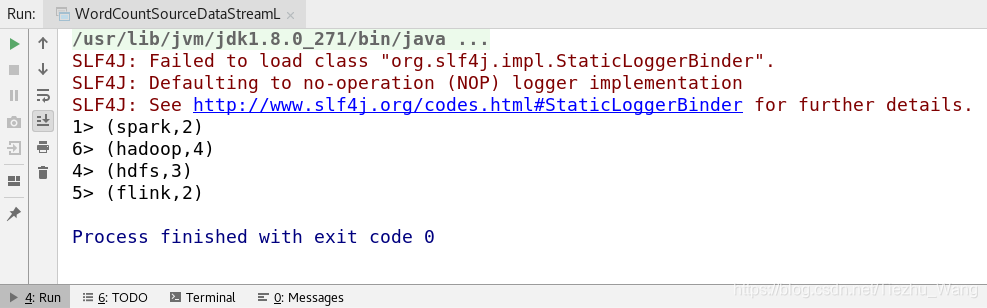

控制台输出:

3 Submitting to YARN

Step 5 of the code in 2.2 is changed so that the result is written to HDFS instead of being printed.

import org.apache.flink.api.common.RuntimeExecutionMode;
import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.common.typeinfo.Types;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.datastream.KeyedStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

import java.util.Arrays;

public class WordCountSourceDataStreamYarn {

    public static void main(String[] args) throws Exception {
        // 1. Create the execution environment
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // 2. Set the runtime mode
        env.setRuntimeMode(RuntimeExecutionMode.AUTOMATIC);

        // 3. Prepare the input data
        DataStream<String> linesDS = env.fromElements("hadoop,hdfs,flink,spark", "hadoop,hdfs,flink", "hadoop,hdfs", "hadoop,spark");

        // 4. Process the data
        // 4.1 Split each line on commas into individual words
        DataStream<String> wordsDS = linesDS.flatMap(
            (String value, Collector<String> out) -> Arrays.asList(value.split(",")).forEach(out::collect)
        ).returns(Types.STRING);

        // 4.2 Map each word to a (word, 1) tuple
        DataStream<Tuple2<String, Integer>> word_1 = wordsDS.map(
            (String value) -> Tuple2.of(value, 1)
        ).returns(TypeInformation.of(new TypeHint<Tuple2<String, Integer>>() {
        }));

        // 4.3 Group the tuples by word
        KeyedStream<Tuple2<String, Integer>, String> word_1_group = word_1.keyBy(tuple2 -> tuple2.f0);

        // 4.4 Sum field 1 (the count) within each group
        DataStream<Tuple2<String, Integer>> result = word_1_group.sum(1);

        // 5. Write the result to HDFS; the timestamp suffix gives each run its own output path
        result.writeAsText("hdfs://localhost:9000/user/hadoop/flink/wordcount/output_" + System.currentTimeMillis());

        // 6. Execute the job
        env.execute();
    }
}

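Start HDFS and the Flink cluster. Note that start-cluster.sh starts a standalone Flink cluster, so as written the job below appears to be submitted to that cluster rather than to YARN: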

start-dfs.sh

start-cluster.sh

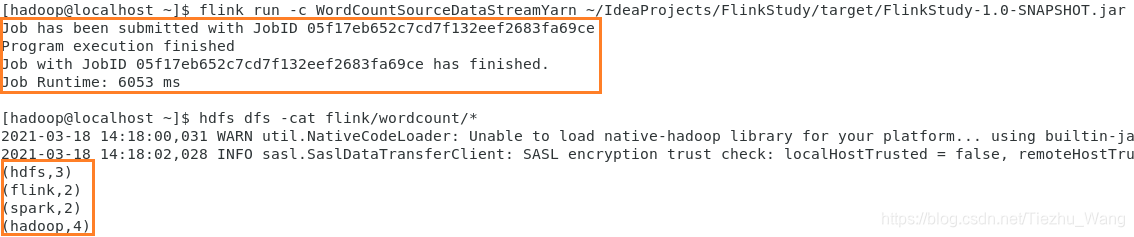

Submit the job:

flink run -c WordCountSourceDataStreamYarn ~/IdeaProjects/FlinkStudy/target/FlinkStudy-1.0-SNAPSHOT.jar

Result:

4 Errors and solutions

Error:

Could not find a file system implementation for scheme 'hdfs'

Solution:

https://blog.csdn.net/Tiezhu_Wang/article/details/114977487
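For context on what the message means: the scheme is the part of the output path before ://, and, as far as I understand, Flink chooses a file system implementation by that scheme and can only find one for hdfs when the Hadoop classes are visible to it. The plain-Java sketch below imitates that lookup. It is not Flink's code: SchemeLookupSketch, register and resolve are hypothetical names, and the registry is a simple map standing in for whatever Flink does internally.

```java
import java.net.URI;
import java.util.HashMap;
import java.util.Map;

// Plain-Java illustration only; this registry is hypothetical and is not Flink's code.
public class SchemeLookupSketch {

    // Hypothetical registry: URI scheme -> name of a file system implementation.
    private final Map<String, String> implementations = new HashMap<>();

    public SchemeLookupSketch() {
        // The local file system is assumed to be available out of the box.
        implementations.put("file", "local file system");
    }

    // Stands in for making an additional implementation (e.g. Hadoop's) available.
    public void register(String scheme, String implementation) {
        implementations.put(scheme, implementation);
    }

    // Looks up the implementation for the scheme of the given path.
    public String resolve(String path) {
        String scheme = URI.create(path).getScheme();
        String implementation = implementations.get(scheme);
        if (implementation == null) {
            throw new IllegalStateException(
                "Could not find a file system implementation for scheme '" + scheme + "'");
        }
        return implementation;
    }

    public static void main(String[] args) {
        SchemeLookupSketch lookup = new SchemeLookupSketch();
        String output = "hdfs://localhost:9000/user/hadoop/flink/wordcount/output_1";
        try {
            lookup.resolve(output);
        } catch (IllegalStateException e) {
            System.out.println(e.getMessage());
        }
        lookup.register("hdfs", "Hadoop file system");
        System.out.println(lookup.resolve(output));
    }
}
```

In this sketch the first lookup fails with the same kind of message as above, and the second succeeds once an implementation for hdfs has been registered.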