This deployment uses three Alibaba Cloud hosts to build the cluster:

**1. Versions**

| Component | Version | Notes and download URL |
|---|---|---|
| CentOS | 7.2 64-bit | lsb_release -a shows the OS version; file /bin/ls shows whether the OS is 32- or 64-bit |
| Hadoop | hadoop-2.6.0-cdh5.15.1.tar | Built by myself from the downloaded source |
| JDK | java version "1.8.0_45" | https://www.oracle.com/technetwork/java/javase/downloads/index.html |
| Zookeeper | zookeeper-3.4.6.tar.gz | Coordination service used for hot failover and for YARN state storage; https://zookeeper.apache.org/doc/r3.4.6/releasenotes.html |

2. Host planning

| IP | Host | Installed software | Processes |
|---|---|---|---|
| 172.16.128.58 | ruozedata001 | Hadoop Zookeeper | NameNode DFSZKFailoverController JournalNode DataNode ResourceManager JobHistoryServer NodeManager QuorumPeerMain |
| 172.16.128.56 | ruozedata002 | Hadoop Zookeeper | NameNode DFSZKFailoverController JournalNode DataNode ResourceManager NodeManager QuorumPeerMain |
| 172.16.128.57 | ruozedata003 | Hadoop Zookeeper | JournalNode DataNode NodeManager QuorumPeerMain |

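3. Environment preparation

Because I am using Alibaba Cloud hosts, all I need to do is bind the IPs to hostnames and let the three servers communicate with each other.

- Bind IPs to hostnames

[root@ruozedata001 ~]# vi /etc/hosts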

172.16.128.58 ruozedata001

172.16.128.56 ruozedata002

172.16.128.57 ruozedata003

Verify: ping ruozedata002

- Set up mutual communication among the three machines

[root@ruozedata001~]#yum install -y lrzsz

#Note: the hadoop user added here has no password; the whole cluster deployment is done as the hadoop user

[root@ruozedata001~]#useradd hadoop

[root@ruozedata001~]#su - hadoop

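#Configure ssh

[hadoop@ruozedata001~]#ssh-keygen

#Append this machine's public key to authorized_keys

[hadoop@ruozedata001~]#cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

#Copy the public keys from the other two servers into the current directory and append them to authorized_keys as well

[hadoop@ruozedata001 .ssh]# cat id_rsa.pub2 >> authorized_keys

#Copy authorized_keys to the other two servers, replacing any authorized_keys already there

Verify (run the following three commands on every machine; if you only have to type yes and are never asked for a password, the three machines can reach each other)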

ssh hadoop@ruozedata001 date

ssh hadoop@ruozedata002 date

ssh hadoop@ruozedata003 date
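The three checks above can be wrapped in a small loop. This is only a sketch: the `check_ssh` function and its print/run switch are hypothetical names of my own, and it assumes the host keys have already been accepted once, because `BatchMode=yes` makes ssh fail rather than prompt.

```shell
#!/usr/bin/env bash
# Hostnames as bound in /etc/hosts above.
HOSTS="ruozedata001 ruozedata002 ruozedata003"

# check_ssh is a hypothetical helper: with "print" it only shows the
# commands, with "run" it executes them.
check_ssh() {
  mode="${1:-print}"
  for host in $HOSTS; do
    # BatchMode=yes makes ssh fail instead of asking for a password.
    cmd="ssh -o BatchMode=yes hadoop@${host} date"
    if [ "$mode" = "run" ]; then
      $cmd || echo "passwordless login to ${host} failed"
    else
      echo "$cmd"
    fi
  done
}

check_ssh print
```

Calling `check_ssh run` on each of the three machines would then report any host that still asks for a password.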

- Install the JDK

Not covered in detail here; search online if you are unsure how.

- Install Zookeeper

1. Extract the archive

[hadoop@ruozedata001 software]# tar -zxvf zookeeper-3.4.6.tar.gz -C /home/hadoop/app/

#Create a symbolic link

[hadoop@ruozedata001 software]# ln -s /home/hadoop/app/zookeeper-3.4.6 /home/hadoop/app/zookeeper

2. Edit the configuration

#Enter the conf directory under zookeeper

[hadoop@ruozedata001 software]# cd /home/hadoop/app/zookeeper-3.4.6/conf

[hadoop@ruozedata001 conf]# cp zoo_sample.cfg zoo.cfg

#Edit the configuration file

[hadoop@ruozedata001 conf]# vi zoo.cfg

# The number of milliseconds of each tick

tickTime=2000

# The number of ticks that the initial

# synchronization phase can take

initLimit=10

# The number of ticks that can pass between

# sending a request and getting an acknowledgement

syncLimit=5

# the directory where the snapshot is stored.

# do not use /tmp for storage, /tmp here is just

# example sakes.

#Note: you need to create this data directory yourself

dataDir=/home/hadoop/app/zookeeper-3.4.6/data

# the port at which the clients will connect

clientPort=2181

# the maximum number of client connections.

# increase this if you need to handle more clients

#maxClientCnxns=60

#Cluster members; the hostnames here (such as ruozedata001) can be replaced with IP addresses

server.1=ruozedata001:2888:3888

server.2=ruozedata002:2888:3888

server.3=ruozedata003:2888:3888

3. Create myid

[hadoop@ruozedata001 zookeeper-3.4.6]# mkdir data

[hadoop@ruozedata001 zookeeper-3.4.6]# touch data/myid

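#Note: when writing with echo, put a space before and after >

[hadoop@ruozedata001 zookeeper-3.4.6]# echo 1 > data/myid

4. Configure environment variables

Skipped…

Configure ruozedata002 and ruozedata003 the same way, writing 2 and 3 respectively into their myid files.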
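The number in each myid file has to equal the N of that host's server.N line in zoo.cfg. As a rough sketch of that mapping, the hypothetical helper `myid_for_host` below looks the number up instead of typing it by hand; the temporary file only stands in for the real zoo.cfg.

```shell
#!/usr/bin/env bash
# myid_for_host is a hypothetical helper: it prints N from the
# "server.N=<host>:2888:3888" line that names the given host.
myid_for_host() {
  cfg="$1"
  host="$2"
  sed -n "s/^server\.\([0-9][0-9]*\)=${host}:.*/\1/p" "$cfg"
}

# Demo against a throwaway copy of the three server lines from zoo.cfg.
demo_cfg="$(mktemp)"
cat > "$demo_cfg" <<'EOF'
server.1=ruozedata001:2888:3888
server.2=ruozedata002:2888:3888
server.3=ruozedata003:2888:3888
EOF

for h in ruozedata001 ruozedata002 ruozedata003; do
  echo "$h -> myid $(myid_for_host "$demo_cfg" "$h")"
done
```

On a real node this could become something like `myid_for_host conf/zoo.cfg "$(hostname)" > data/myid`, assuming the machine's hostname is the same name used in zoo.cfg.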

- Install Hadoop

#Extract

[hadoop@ruozedata001 software]# tar -xvf hadoop-2.6.0-cdh5.15.1.tar -C /home/hadoop/app/

#Create a symbolic link

[hadoop@ruozedata001 software]# ln -s /home/hadoop/app/hadoop-2.6.0-cdh5.15.1 /home/hadoop/app/hadoop

#Configure environment variables

export HADOOP_HOME=/home/hadoop/app/hadoop/

export PATH=$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

- Edit hadoop-env.sh

[hadoop@ruozedata001 software]# cd ~/app/hadoop/etc/hadoop

[hadoop@ruozedata001 hadoop]# vim hadoop-env.sh

Replace export JAVA_HOME=${JAVA_HOME} with the path to your JDK:

export JAVA_HOME=/home/hadoop/app/java

- Edit core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- YARN needs fs.defaultFS to specify the NameNode URI -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://ruozeclusterg7</value>

</property>

<!--==============================Trash mechanism======================================= -->

<property>

<!-- Interval at which checkpoints are created: the checkpointer running on the NameNode creates checkpoints from the Current folder. Default: 0, meaning the value of fs.trash.interval is used -->

<name>fs.trash.checkpoint.interval</name>

<value>0</value>

</property>

<property>

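<!-- Number of minutes after which checkpoint directories under .Trash are deleted. The server-side setting takes precedence over the client's. Default: 0, which disables the trash feature -->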

<name>fs.trash.interval</name>

<value>1440</value>

</property>

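<!-- Hadoop temporary directory. hadoop.tmp.dir is a base setting that many other paths depend on. If hdfs-site.xml does not configure storage locations for the namenode and datanode, they default to locations under this path -->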

<property>

<name>hadoop.tmp.dir</name>

<value>/home/hadoop/tmp/hadoop</value>

</property>

<!-- Zookeeper addresses -->

<property>

<name>ha.zookeeper.quorum</name>

<value>ruozedata001:2181,ruozedata002:2181,ruozedata003:2181</value>

</property>

<!-- ZooKeeper session timeout, in milliseconds -->

<property>

<name>ha.zookeeper.session-timeout.ms</name>

<value>2000</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.hadoop.groups</name>

<value>*</value>

</property>

<property>

<name>io.compression.codecs</name>

<value>org.apache.hadoop.io.compress.GzipCodec,

org.apache.hadoop.io.compress.DefaultCodec,

org.apache.hadoop.io.compress.BZip2Codec,

org.apache.hadoop.io.compress.SnappyCodec

</value>

</property>

</configuration>

- Edit hdfs-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- HDFS superuser group -->

<property>

<name>dfs.permissions.superusergroup</name>

<value>hadoop</value>

</property>

<!-- Enable WebHDFS -->

<property>

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>/home/hadoop/data/dfs/name</value>

<description>Local directory where the namenode stores the name table (fsimage); adjust as needed</description>

</property>

<property>

<name>dfs.namenode.edits.dir</name>

<value>${dfs.namenode.name.dir}</value>

<description>Local directory where the namenode stores the transaction files (edits); adjust as needed</description>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>/home/hadoop/data/dfs/data</value>

<description>Local directory where the datanode stores blocks; adjust as needed</description>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

<!-- Block size: 128 MB (the default is 128 MB) -->

<property>

<name>dfs.blocksize</name>

<value>134217728</value>

</property>

<!--======================================================================= -->

<!-- HDFS high-availability configuration -->

<!-- Set the HDFS nameservice to ruozeclusterg7; it must match the value in core-site.xml -->

<property>

<name>dfs.nameservices</name>

<value>ruozeclusterg7</value>

</property>

<property>

<!-- NameNode IDs; this version supports at most two NameNodes -->

<name>dfs.ha.namenodes.ruozeclusterg7</name>

<value>nn1,nn2</value>

</property>

<!-- HDFS HA: dfs.namenode.rpc-address.[nameservice ID] RPC address -->

<property>

<name>dfs.namenode.rpc-address.ruozeclusterg7.nn1</name>

<value>ruozedata001:8020</value>

</property>

<property>

<name>dfs.namenode.rpc-address.ruozeclusterg7.nn2</name>

<value>ruozedata002:8020</value>

</property>

<!-- HDFS HA: dfs.namenode.http-address.[nameservice ID] HTTP address -->

<property>

<name>dfs.namenode.http-address.ruozeclusterg7.nn1</name>

<value>ruozedata001:50070</value>

</property>

<property>

<name>dfs.namenode.http-address.ruozeclusterg7.nn2</name>

<value>ruozedata002:50070</value>

</property>

<!--==================Namenode editlog synchronization ============================================ -->

<!-- Ensures the data can be recovered -->

<property>

<name>dfs.journalnode.http-address</name>

<value>0.0.0.0:8480</value>

</property>

<property>

<name>dfs.journalnode.rpc-address</name>

<value>0.0.0.0:8485</value>

</property>

<property>

<!-- JournalNode server addresses; the QuorumJournalManager uses them to store the editlog -->

<!-- Format: qjournal://<host1:port1>;<host2:port2>;<host3:port3>/<journalId>; the port is the same as in dfs.journalnode.rpc-address -->

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://ruozedata001:8485;ruozedata002:8485;ruozedata003:8485/ruozeclusterg7</value>

</property>

<property>

<!-- Directory where the JournalNode stores its data -->

<name>dfs.journalnode.edits.dir</name>

<value>/home/hadoop/data/dfs/jn</value>

</property>

<!--==================DataNode editlog synchronization ============================================ -->

<property>

<!-- Strategy that DataNodes and clients use to identify and connect to the Active NameNode -->

<!-- Implementation class for automatic failover -->

<name>dfs.client.failover.proxy.provider.ruozeclusterg7</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<!--==================Namenode fencing:=============================================== -->

<!-- After a failover, prevent the stopped Namenode from coming back up and leaving two active services -->

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/home/hadoop/.ssh/id_rsa</value>

</property>

<property>

<!-- Number of milliseconds after which fencing is considered to have failed -->

<name>dfs.ha.fencing.ssh.connect-timeout</name>

<value>30000</value>

</property>

<!--==================NameNode auto failover base ZKFC and Zookeeper====================== -->

<!-- Enable Zookeeper-based automatic failover -->

<property>

<name>dfs.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- List of datanodes permitted to connect to the namenode -->

<property>

<name>dfs.hosts</name>

<value>/home/hadoop/app/hadoop/etc/hadoop/slaves</value>

</property>

</configuration>
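Since the nameservice in hdfs-site.xml has to agree with fs.defaultFS in core-site.xml, a quick consistency check can save a failed start. The sketch below is an assumption-laden illustration: `xml_prop` is a hypothetical helper that only handles single-line values, and the two temporary files stand in for the real configuration files.

```shell
#!/usr/bin/env bash
# xml_prop is a hypothetical helper: print the single-line <value> that
# follows <name>KEY</name> in a Hadoop-style XML file.
xml_prop() {
  awk -v key="$2" '
    index($0, "<name>" key "</name>") { found = 1; next }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
  ' "$1"
}

# Demo on throwaway files holding just the two relevant properties.
core_xml="$(mktemp)"
hdfs_xml="$(mktemp)"
cat > "$core_xml" <<'EOF'
<configuration>
<property>
<name>fs.defaultFS</name>
<value>hdfs://ruozeclusterg7</value>
</property>
</configuration>
EOF
cat > "$hdfs_xml" <<'EOF'
<configuration>
<property>
<name>dfs.nameservices</name>
<value>ruozeclusterg7</value>
</property>
</configuration>
EOF

default_fs="$(xml_prop "$core_xml" fs.defaultFS)"
nameservice="$(xml_prop "$hdfs_xml" dfs.nameservices)"

# Strip the hdfs:// scheme before comparing the two names.
if [ "${default_fs#hdfs://}" = "$nameservice" ]; then
  echo "nameservice matches: $nameservice"
else
  echo "mismatch: $default_fs vs $nameservice"
fi
```

On a machine where Hadoop is already on the PATH, `hdfs getconf -confKey fs.defaultFS` should report the effective value without any hand-written parsing.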

- Edit mapred-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- MapReduce application settings -->

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<!-- JobHistory Server ============================================================== -->

<!-- MapReduce JobHistory Server address; default port 10020 -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>ruozedata001:10020</value>

</property>

<!-- MapReduce JobHistory Server web UI address; default port 19888 -->

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>ruozedata001:19888</value>

</property>

<!-- Compress map-side output with snappy -->

<property>

<name>mapreduce.map.output.compress</name>

<value>true</value>

</property>

<property>

<name>mapreduce.map.output.compress.codec</name>

<value>org.apache.hadoop.io.compress.SnappyCodec</value>

</property>

</configuration>

- Edit yarn-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<!-- nodemanager configuration ================================================= -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

<property>

<name>yarn.nodemanager.localizer.address</name>

<value>0.0.0.0:23344</value>

<description>Address where the localizer IPC is.</description>

</property>

<property>

<name>yarn.nodemanager.webapp.address</name>

<value>0.0.0.0:23999</value>

<description>NM Webapp address.</description>

</property>

<!-- HA configuration =============================================================== -->

<!-- Resource Manager Configs -->

<property>

<name>yarn.resourcemanager.connect.retry-interval.ms</name>

<value>2000</value>

</property>

<property>

<name>yarn.resourcemanager.ha.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.resourcemanager.ha.automatic-failover.enabled</name>

<value>true</value>

</property>

<!-- Enable embedded automatic failover. Used in the HA setup together with ZKRMStateStore to handle fencing -->

<property>

<name>yarn.resourcemanager.ha.automatic-failover.embedded</name>

<value>true</value>

</property>

<!-- Cluster name; ensures the HA election applies to the right cluster -->

<property>

<name>yarn.resourcemanager.cluster-id</name>

<value>yarn-cluster</value>

</property>

<property>

<name>yarn.resourcemanager.ha.rm-ids</name>

<value>rm1,rm2</value>

</property>

<!-- The RM active and standby nodes each need to set this individually (optional)

<property>

<name>yarn.resourcemanager.ha.id</name>

<value>rm2</value>

</property>

-->

<property>

<name>yarn.resourcemanager.scheduler.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.scheduler.fair.FairScheduler</value>

</property>

<property>

<name>yarn.resourcemanager.recovery.enabled</name>

<value>true</value>

</property>

<property>

<name>yarn.app.mapreduce.am.scheduler.connection.wait.interval-ms</name>

<value>5000</value>

</property>

<!-- ZKRMStateStore configuration -->

<property>

<name>yarn.resourcemanager.store.class</name>

<value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>

</property>

<property>

<name>yarn.resourcemanager.zk-address</name>

<value>ruozedata001:2181,ruozedata002:2181,ruozedata003:2181</value>

</property>

<property>

<name>yarn.resourcemanager.zk.state-store.address</name>

<value>ruozedata001:2181,ruozedata002:2181,ruozedata003:2181</value>

</property>

<!-- RPC address that clients use to reach the RM (applications manager interface) -->

<property>

<name>yarn.resourcemanager.address.rm1</name>

<value>ruozedata001:23140</value>

</property>

<property>

<name>yarn.resourcemanager.address.rm2</name>

<value>ruozedata002:23140</value>

</property>

<!-- RPC address that AMs use to reach the RM (scheduler interface) -->

<property>

<name>yarn.resourcemanager.scheduler.address.rm1</name>

<value>ruozedata001:23130</value>

</property>

<property>

<name>yarn.resourcemanager.scheduler.address.rm2</name>

<value>ruozedata002:23130</value>

</property>

<!-- RM admin interface -->

<property>

<name>yarn.resourcemanager.admin.address.rm1</name>

<value>ruozedata001:23141</value>

</property>

<property>

<name>yarn.resourcemanager.admin.address.rm2</name>

<value>ruozedata002:23141</value>

</property>

<!-- RPC address that NMs use to reach the RM -->

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm1</name>

<value>ruozedata001:23125</value>

</property>

<property>

<name>yarn.resourcemanager.resource-tracker.address.rm2</name>

<value>ruozedata002:23125</value>

</property>

<!-- RM web application address -->

<property>

<name>yarn.resourcemanager.webapp.address.rm1</name>

<value>ruozedata001:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.address.rm2</name>

<value>ruozedata002:8088</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm1</name>

<value>ruozedata001:23189</value>

</property>

<property>

<name>yarn.resourcemanager.webapp.https.address.rm2</name>

<value>ruozedata002:23189</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property>

<name>yarn.log.server.url</name>

<value>http://ruozedata001:19888/jobhistory/logs</value>

</property>

<property>

<name>yarn.nodemanager.resource.memory-mb</name>

<value>2048</value>

</property>

<property>

<name>yarn.scheduler.minimum-allocation-mb</name>

<value>1024</value>

<description>Minimum memory a single task can request; default 1024 MB</description>

</property>

<property>

<name>yarn.scheduler.maximum-allocation-mb</name>

<value>2048</value>

<description>Maximum memory a single task can request; default 8192 MB</description>

</property>

<property>

<name>yarn.nodemanager.resource.cpu-vcores</name>

<value>2</value>

</property>

</configuration>
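The memory settings above bound how many containers the cluster can run. The arithmetic below is a rough upper bound only: it assumes every request is exactly the minimum allocation and ignores vcores and the container taken by each ApplicationMaster.

```shell
#!/usr/bin/env bash
# Values copied from the yarn-site.xml above.
nm_memory_mb=2048      # yarn.nodemanager.resource.memory-mb
min_alloc_mb=1024      # yarn.scheduler.minimum-allocation-mb
max_alloc_mb=2048      # yarn.scheduler.maximum-allocation-mb
nodes=3                # NodeManagers listed in the host plan

# Upper bound on containers if every request is the minimum size.
containers_per_node=$(( nm_memory_mb / min_alloc_mb ))
cluster_containers=$(( containers_per_node * nodes ))

echo "containers per node (at most): $containers_per_node"
echo "containers in cluster (at most): $cluster_containers"

# A single request can never exceed what one node offers.
if [ "$max_alloc_mb" -le "$nm_memory_mb" ]; then
  echo "max allocation fits on one node"
fi
```

With these values a node holds at most two minimum-size containers, so a job that needs more parallelism would require raising yarn.nodemanager.resource.memory-mb on hosts that have the RAM for it.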

- Edit slaves

Note: if the slaves file was saved in Windows format, its line endings may not match the Linux format.

Convert it with dos2unix if needed.

ruozedata001

ruozedata002

ruozedata003

- Copy this machine's configuration to the other two servers.
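One possible way to do the copy is scp over the passwordless SSH set up earlier. The sketch below only prints the commands; `sync_conf_commands` is a hypothetical helper, and it assumes Hadoop is unpacked under the same /home/hadoop/app/hadoop path on all three machines.

```shell
#!/usr/bin/env bash
CONF_DIR="/home/hadoop/app/hadoop/etc/hadoop"
OTHER_HOSTS="ruozedata002 ruozedata003"

# sync_conf_commands is a hypothetical helper that only prints the scp
# commands; pipe its output to "sh" to actually run them.
sync_conf_commands() {
  for host in $OTHER_HOSTS; do
    # Copy the whole conf directory into its parent on the remote side.
    echo "scp -r ${CONF_DIR} hadoop@${host}:${CONF_DIR%/*}/"
  done
}

sync_conf_commands
```

Printing first makes it easy to eyeball the target paths before anything is overwritten on the other two servers.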

Start the cluster

1. Start zk first (on each of the three machines)

zkServer.sh start

2. On the journalnode machines, start the JournalNode process first

hadoop-daemon.sh start journalnode

3. Format the NameNode

hadoop namenode -format

4. Sync the namenode metadata to the corresponding directory on ruozedata002

scp -r /home/hadoop/data/dfs/name hadoop@ruozedata002:/home/hadoop/data/dfs/

5. Initialize ZKFC

hdfs zkfc -formatZK

6. Start the HDFS distributed storage system

start-dfs.sh

Verify:

[hadoop@ruozedata001 ~]$ jps

7696 Jps

4714 JournalNode

4931 NameNode

5410 ResourceManager

2264 QuorumPeerMain

5083 DataNode

5322 DFSZKFailoverController

5530 NodeManager

[hadoop@ruozedata002~]$ jps

4231 DFSZKFailoverController

5213 Jps

3917 JournalNode

4025 NameNode

1502 ResourceManager

3359 NodeManager

3108 DataNode

4253 QuorumPeerMain

[hadoop@ruozedata003~]$ jps

29262 JournalNode

29361 DataNode

29492 NodeManager

29848 Jps

2251 QuorumPeerMain
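Comparing jps output against the host plan by eye gets tedious, so here is a minimal sketch of an automated check. `missing_daemons` is a hypothetical helper; the sample text is the ruozedata003 output shown above, and the expected names come from the host-planning table.

```shell
#!/usr/bin/env bash
# missing_daemons is a hypothetical helper: print every expected daemon
# name that is absent from the given jps output.
missing_daemons() {
  jps_output="$1"
  shift
  for name in "$@"; do
    # jps prints "<pid> <MainClass>"; match the class name in column 2.
    echo "$jps_output" \
      | awk -v n="$name" '$2 == n { ok = 1 } END { exit !ok }' \
      || echo "$name"
  done
}

# Sample: the jps output of ruozedata003 from above.
sample="29262 JournalNode
29361 DataNode
29492 NodeManager
29848 Jps
2251 QuorumPeerMain"

missing="$(missing_daemons "$sample" JournalNode DataNode NodeManager QuorumPeerMain)"
if [ -z "$missing" ]; then
  echo "all expected daemons are running"
else
  echo "missing: $missing"
fi
```

On a live node the first argument would be `"$(jps)"` instead of the sample text.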

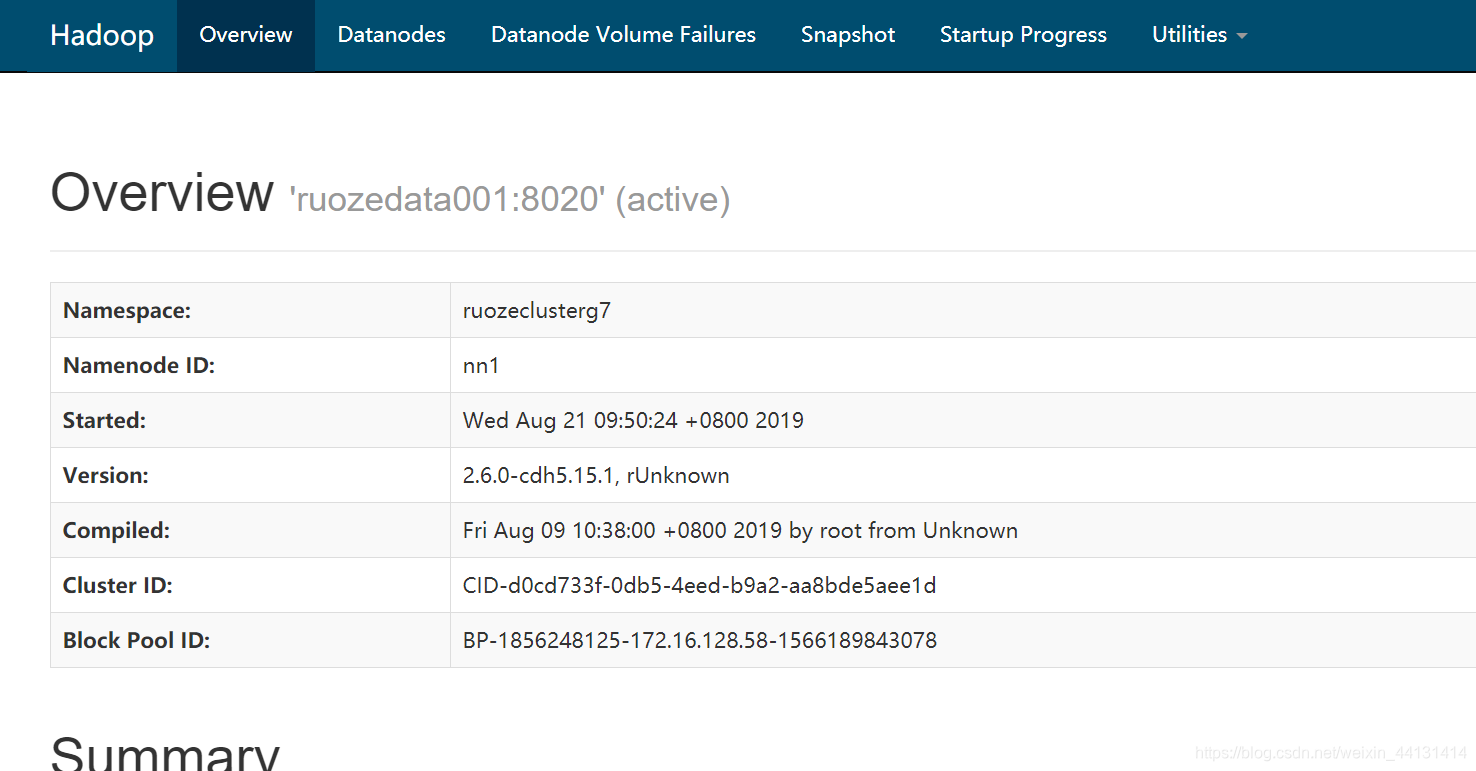

Web page:

7. Start YARN

#First start it on ruozedata001 alone

start-yarn.sh

#Then start the standby RM on ruozedata002

yarn-daemon.sh start resourcemanager
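Once both layers are up, the HA state of each NameNode and ResourceManager can be queried with the haadmin and rmadmin tools. The sketch below only prints the queries; `ha_state_commands` is a hypothetical helper, and the IDs are the ones configured in hdfs-site.xml and yarn-site.xml.

```shell
#!/usr/bin/env bash
# IDs taken from dfs.ha.namenodes.ruozeclusterg7 and
# yarn.resourcemanager.ha.rm-ids in the configuration above.
NN_IDS="nn1 nn2"
RM_IDS="rm1 rm2"

# ha_state_commands is a hypothetical helper that prints one state query
# per NameNode and ResourceManager; pipe the output to "sh" on a cluster
# node to run the queries.
ha_state_commands() {
  for id in $NN_IDS; do
    echo "hdfs haadmin -getServiceState ${id}"
  done
  for id in $RM_IDS; do
    echo "yarn rmadmin -getServiceState ${id}"
  done
}

ha_state_commands
```

Each query answers active or standby; with automatic failover enabled I would expect exactly one active NameNode and one active ResourceManager.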