实验环境:

172.25.66.1 namenode节点(上一篇博客已部署成功)

172.25.66.2 datanode 节点

172.25.66.3 datanode节点

server1:

1.停掉之前的hdfs和yarn集群

2.安装nfs服务,进行文件共享

[root@server1 ~]# yum install -y nfs-utils

[root@server1 ~]# /etc/init.d/rpcbind status

rpcbind is stopped

[root@server1 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

vim /etc/exports

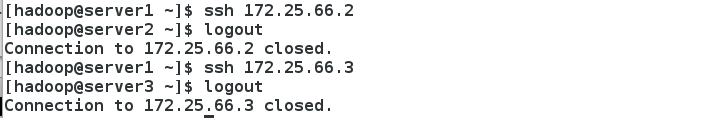

设置免密登陆server2和server3

扫描二维码关注公众号,回复:

4735040 查看本文章

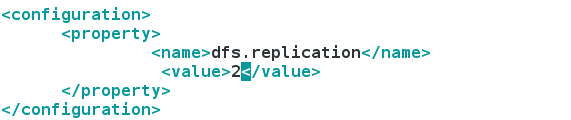

编辑hdfs-site.xml文件

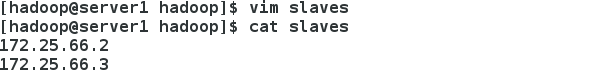

编辑slaves文件

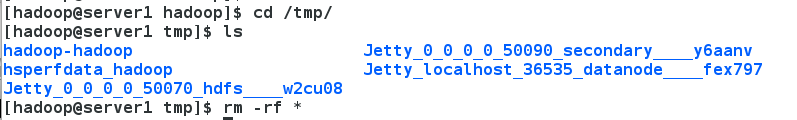

最好将/tmp下的文件删除

格式化并且启动

[hadoop@server1 hadoop]$ bin/hdfs namenode -format

[hadoop@server1 hadoop]$ sbin/start-dfs.sh

[hadoop@server1 hadoop]$ jps

server2和server3:

安装nfs-utils

登入hadoop用户,发现server1的数据已同步过来

测试:

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user

[hadoop@server1 hadoop]$ bin/hdfs dfs -mkdir /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -lsr /

lsr: DEPRECATED: Please use 'ls -R' instead.

drwxr-xr-x - hadoop supergroup 0 2018-12-04 18:27 /user

drwxr-xr-x - hadoop supergroup 0 2018-12-04 18:27 /user/hadoop

[hadoop@server1 hadoop]$ bin/hdfs dfs -put etc/hadoop/ test

[hadoop@server1 hadoop]$ bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar wordcount test output

查看java进程,发现为数据节点

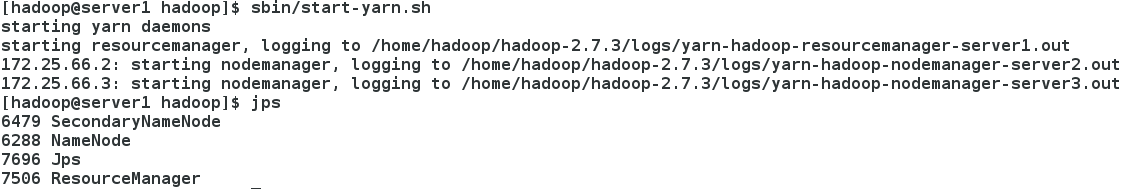

启动yarn集群

在server2上查看,多了nodemanager

集群节点的在线添加

1.在server4上:

[root@server4 ~]# yum install -y nfs-utils

[root@server4 ~]# useradd -u 800 hadoop

[root@server4 ~]# /etc/init.d/rpcbind start

Starting rpcbind: [ OK ]

[root@server4 ~]# mount 172.25.66.1:/home/hadoop /home/hadoop

[root@server4 ~]# df

Filesystem 1K-blocks Used Available Use% Mounted on

/dev/mapper/VolGroup-lv_root 19134332 930568 17231784 6% /

tmpfs 510188 0 510188 0% /dev/shm

/dev/vda1 495844 33469 436775 8% /boot

172.25.66.1:/home/hadoop 19134336 1944960 16217344 11% /home/hadoop

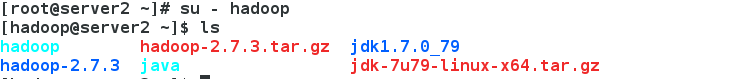

[root@server4 ~]# su - hadoop

[hadoop@server4 ~]$ ls

hadoop hadoop-2.7.3.tar.gz jdk1.7.0_79

hadoop-2.7.3 java jdk-7u79-linux-x64.tar.gz

开启数据节点并查看进程

在浏览器查看,数据节点添加成功

集群节点的在线删除

截取300M的文件并上传

在浏览器中查看文件上传成功

数据在节点上的分配

[hadoop@server1 hadoop]$ bin/hdfs dfsadmin -report ##查看各个节点信息

去除server3

[hadoop@server1 hadoop]$ cd etc/hadoop/

[hadoop@server1 hadoop]$ vim slaves ###将server3的ip去除

[hadoop@server1 hadoop]$ vim hosts-exclude ###将server3的ip写入

[hadoop@server1 hadoop]$ cat hosts-exclude

172.25.3.3

[hadoop@server1 hadoop]$ vim hdfs-site.xm

在浏览器中查看:

server2和server4两个平均分摊之前截取的300M