Compiling hadoop-2.6.0-cdh5.15.1 on an Alibaba Cloud host

1. Preparation

System environment

OS version: CentOS Linux release 7.5.1804

Installing the dependency libraries:

```bash
[root@hadoop001 ~]# yum install -y svn ncurses-devel
[root@hadoop001 ~]# yum install -y gcc gcc-c++ make cmake
[root@hadoop001 ~]# yum install -y openssl openssl-devel svn ncurses-devel zlib-devel libtool
[root@hadoop001 ~]# yum install -y snappy snappy-devel bzip2 bzip2-devel lzo lzo-devel lzop autoconf automake cmake
```

Software to set up

| Component | Download |
|---|---|
| hadoop-2.6.0-cdh5.15.1 source package | http://archive.cloudera.com/cdh5/cdh/5/hadoop-2.6.0-cdh5.15.1-src.tar.gz |
| JDK 1.7.x (note: must be 1.7) | https://download.oracle.com/otn/java/jdk/7u80-b15/jdk-7u80-linux-x64.tar.gz |
| Maven 3.x | https://archive.apache.org/dist/maven/maven-3/ |
| protobuf 2.5.0 | https://github.com/protocolbuffers/protobuf/releases |

2. Configuring the environment

- Maven configuration

Edit `settings.xml` in Maven's `conf` directory to set the local repository location and a mirror. Then set the environment variables:

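```xml
<!-- Local repository path (directly under <settings>). You can point this at a
     repository left over from an earlier successful build to skip re-downloading. -->
<localRepository>/root/maven_repo</localRepository>

<!-- Aliyun mirror: add this between <mirrors> and </mirrors> -->
<mirror>
  <id>nexus-aliyun</id>
  <mirrorOf>central</mirrorOf>
  <name>Nexus aliyun</name>
  <url>http://maven.aliyun.com/nexus/content/groups/public/</url>
</mirror>
```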

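```bash
# Environment variables: append to ~/.bash_profile (vim ~/.bash_profile).
# Set the build JVM's heap size explicitly so the build does not fail for lack of memory.
# MAVEN_OPTS must be exported, otherwise the mvn launcher never sees it.
export MAVEN_HOME=/root/app/apache-maven-3.6.1
export MAVEN_OPTS="-Xms2048m -Xmx2048m"
export PATH=${MAVEN_HOME}/bin:$PATH

# Apply the configuration
source ~/.bash_profile

# Check that mvn is found on the PATH
which mvn
```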

- JDK: set `JAVA_HOME` and `PATH` the same way as for Maven.
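The following is a sketch of the JDK entries for `~/.bash_profile`. It assumes the `jdk-7u80-linux-x64.tar.gz` tarball from the table was unpacked under `/root/app`, where it creates a `jdk1.7.0_80` directory. The install path is an assumption, so adjust it to your layout:

```shell
#!/usr/bin/env bash
# Assumed location of the unpacked JDK 1.7 tarball; change it if yours differs.
export JAVA_HOME=/root/app/jdk1.7.0_80
# Put the JDK's bin directory first so this java wins over any system JDK.
export PATH=${JAVA_HOME}/bin:$PATH
```

After `source ~/.bash_profile`, `java -version` should report a 1.7.0_80 version.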

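- protobuf configuration

protobuf is downloaded as source code, so it has to be compiled on Linux:

```bash
# Run these from the unpacked protobuf-2.5.0 source directory.
# Configure the build; --prefix is where the compiled files are installed
./configure --prefix=/root/app/protobuf-2.5.0
# Compile
make
# Install
make install

# Environment variables (append to ~/.bash_profile, then source it)
export PROTOBUF_HOME=/root/app/protobuf-2.5.0
export PATH=${PROTOBUF_HOME}/bin:$PATH

# Verify the build
protoc --version
# expected output: libprotoc 2.5.0
```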
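Hadoop's build checks the protoc version and stops if it is not 2.5.0, and this guide also requires JDK 1.7. It can therefore save time to confirm both before starting a long Maven run.

The sketch below makes a few assumptions. `check_protoc` and `check_java` are hypothetical helper names. The checks also assume the usual `libprotoc 2.5.0` and `java version "1.7.0_80"` output formats:

```shell
#!/usr/bin/env bash
# Preflight sketch (hypothetical helpers, not part of Hadoop).

# Succeeds only if `protoc --version` prints exactly "libprotoc 2.5.0".
check_protoc() {
  local out
  out=$(protoc --version 2>/dev/null) || { echo "protoc not found on PATH" >&2; return 1; }
  if [ "$out" != "libprotoc 2.5.0" ]; then
    echo "wrong protoc: '$out' (need libprotoc 2.5.0)" >&2
    return 1
  fi
}

# Succeeds if the first line of `java -version` (printed on stderr) shows a 1.7.x JDK.
check_java() {
  local first
  first=$(java -version 2>&1 | head -n 1)
  case "$first" in
    *'"1.7.'*) return 0 ;;
    *) echo "wrong java: '$first' (need 1.7.x)" >&2; return 1 ;;
  esac
}
```

Example: `check_protoc && check_java && echo "toolchain OK"`.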

3. Building hadoop-2.6.0-cdh5.15.1

```bash
# Unpack the source tarball, cd into the Hadoop source directory, then start the build
mvn clean package -Pdist,native -DskipTests -Dtar
```
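In this command, `-Pdist,native` builds the binary distribution including the native libraries, `-DskipTests` skips the unit tests, and `-Dtar` also packs the result as a `.tar.gz`. The build is long, so keeping a log with `tee` helps when something fails. However, a plain `mvn ... | tee` pipeline returns `tee`'s exit status, not Maven's.

Here is a minimal bash sketch. `run_build` is a hypothetical helper, and the source path you pass in is whatever directory you unpacked:

```shell
#!/usr/bin/env bash
# run_build SRC_DIR [LOG]: run the Hadoop build inside SRC_DIR, copy all output
# to LOG (relative to the *current* directory), and return Maven's exit status.
run_build() {
  local src_dir=$1 log=${2:-build.log}
  # The subshell keeps the cd from leaking into the caller's shell.
  (cd "$src_dir" && mvn clean package -Pdist,native -DskipTests -Dtar) 2>&1 | tee "$log"
  # PIPESTATUS[0] is the subshell's (i.e. Maven's) status, not tee's.
  return "${PIPESTATUS[0]}"
}
```

Example: `run_build /root/source/hadoop-2.6.0-cdh5.15.1 /root/build.log || grep -n "BUILD FAILURE" /root/build.log`. The source path here is only an example.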

4. Build problems and how to fix them

- Failed artifact downloads, for example:

- Message: `Could not transfer artifact com.cloudera.cdh:cdh-root:pom:5.15.1`

Any missing jar (or POM) can be downloaded manually from https://repository.cloudera.com/artifactory/cloudera-repos

```text
[heasy@demo hadoop-2.6.0-cdh5.15.1]$ mvn clean package -Pdist,native -DskipTests -Dtar
[INFO] Scanning for projects...
Downloading from cdh.repo: https://repository.cloudera.com/artifactory/cloudera-repos/com/cloudera/cdh/cdh-root/5.15.1/cdh-root-5.15.1.pom
Downloading from nexus-cdh: https://repository.cloudera.com/artifactory/cloudera-repos/com/cloudera/cdh/cdh-root/5.15.1/cdh-root-5.15.1.pom
[ERROR] [ERROR] Some problems were encountered while processing the POMs:
[FATAL] Non-resolvable parent POM for org.apache.hadoop:hadoop-main:2.6.0-cdh5.15.1: Could not transfer artifact com.cloudera.cdh:cdh-root:pom:5.15.1 from/to cdh.repo (https://repository.cloudera.com/artifactory/cloudera-repos): Remote host closed connection during handshake and 'parent.relativePath' points at wrong local POM @ line 19, column 11
@
[ERROR] The build could not read 1 project -> [Help 1]
[ERROR]
[ERROR] The project org.apache.hadoop:hadoop-main:2.6.0-cdh5.15.1 (/opt/module/hadoop-2.6.0-cdh5.15.1/pom.xml) has 1 error
[ERROR] Non-resolvable parent POM for org.apache.hadoop:hadoop-main:2.6.0-cdh5.15.1: Could not transfer artifact com.cloudera.cdh:cdh-root:pom:5.15.1 from/to cdh.repo (https://repository.cloudera.com/artifactory/cloudera-repos): Remote host closed connection during handshake and 'parent.relativePath' points at wrong local POM @ line 19, column 11: SSL peer shut down incorrectly -> [Help 2]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/ProjectBuildingException
[ERROR] [Help 2] http://cwiki.apache.org/confluence/display/MAVEN/UnresolvableModelException
```
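The `Remote host closed connection during handshake` / `SSL peer shut down incorrectly` messages in this log are typical of JDK 7. Its HTTPS client does not enable TLS 1.2 by default, while many repositories now require it.

Instead of downloading artifacts by hand, a commonly used workaround is to ask the JVM running Maven for TLS 1.2 via the `https.protocols` system property. That this is the cause here is an assumption; the log alone does not prove it:

```shell
#!/usr/bin/env bash
# Sketch for ~/.bash_profile (then: source ~/.bash_profile).
# Assumption: the handshake failures come from JDK 7 not offering TLS 1.2.
# The heap settings are kept from the earlier MAVEN_OPTS line.
export MAVEN_OPTS="-Xms2048m -Xmx2048m -Dhttps.protocols=TLSv1.2"
```

The same property can also be passed for a single run, e.g. `mvn -Dhttps.protocols=TLSv1.2 clean package -Pdist,native -DskipTests -Dtar`.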

- `DynamoDBLocal` 1.11.86 cannot be found

- Solution:

- Download the jar from https://mvnrepository.com/artifact/com.amazonaws/DynamoDBLocal and install it into the local Maven repository:

```bash
mvn install:install-file -DgroupId=com.amazonaws -DartifactId=DynamoDBLocal -Dversion=1.11.86 -Dpackaging=jar -Dfile=DynamoDBLocal-1.11.86.jar
```
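`install:install-file` places the jar according to Maven's standard repository layout: the groupId with dots turned into directories, then artifactId, version, and `artifactId-version.ext`. Knowing that path lets you check the result, or drop a manually downloaded file (such as the `cdh-root` POM above) into the right place.

A small sketch follows. `artifact_path` is a hypothetical helper, and `/root/maven_repo` is the `localRepository` configured earlier:

```shell
#!/usr/bin/env bash
# artifact_path REPO GROUP_ID ARTIFACT_ID VERSION [EXT]
# Print where Maven's default repository layout stores an artifact (EXT defaults to jar).
artifact_path() {
  local repo=${1%/} group_path ext=${5:-jar}
  group_path=$(printf '%s' "$2" | tr . /)   # com.amazonaws -> com/amazonaws
  printf '%s/%s/%s/%s/%s-%s.%s\n' "$repo" "$group_path" "$3" "$4" "$3" "$4" "$ext"
}
```

Example: `ls -l "$(artifact_path /root/maven_repo com.amazonaws DynamoDBLocal 1.11.86)"` should list the installed jar.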

- Missing `commons-lang3` jar (no screenshot of this one)

The `pom.xml` in `hadoop-2.6.0-cdh5.15.1/hadoop-common-project/hadoop-common` lacks this dependency. Adding it inside `<dependencies>` fixes the build:

```xml
<dependency>
  <groupId>org.apache.commons</groupId>
  <artifactId>commons-lang3</artifactId>
  <version>3.5</version>
</dependency>
```
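Before editing, it can help to check whether the POM already declares the artifact. The sketch below uses a rough text match with a hypothetical helper, `pom_declares`. It is not a POM parser: it would also match a plugin or an exclusion with the same artifactId.

```shell
#!/usr/bin/env bash
# pom_declares POM ARTIFACT_ID: succeed if POM contains <artifactId>ARTIFACT_ID</artifactId>
# (surrounding whitespace inside the tag is tolerated).
pom_declares() {
  grep -Eq "<artifactId>[[:space:]]*$2[[:space:]]*</artifactId>" "$1"
}
```

Example: `pom_declares hadoop-common-project/hadoop-common/pom.xml commons-lang3 || echo "commons-lang3 not declared"`. For a Maven-resolved view, `mvn dependency:tree -Dincludes=org.apache.commons:commons-lang3` run in that module shows whether it ends up on the dependency tree.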

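Notes:

If you reuse an existing local repository, use the same Maven version it was populated with. Also delete every artifact whose download failed before rebuilding. Maven leaves `*.lastUpdated` marker files for failed downloads and will not retry them until the update interval has passed.

```bash
# Create the script
vim deleteLastUpdated.sh
```

```bash
#!/bin/sh
# Path to your local repository
REPOSITORY_PATH=/root/maven_repo/
echo "Searching..."
# -exec ... + also copes with paths containing spaces, unlike a bare `| xargs`
find "$REPOSITORY_PATH" -name "*lastUpdated*" -exec rm -rf {} +
echo "Done."
```

```bash
# Run the script
sh deleteLastUpdated.sh
```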

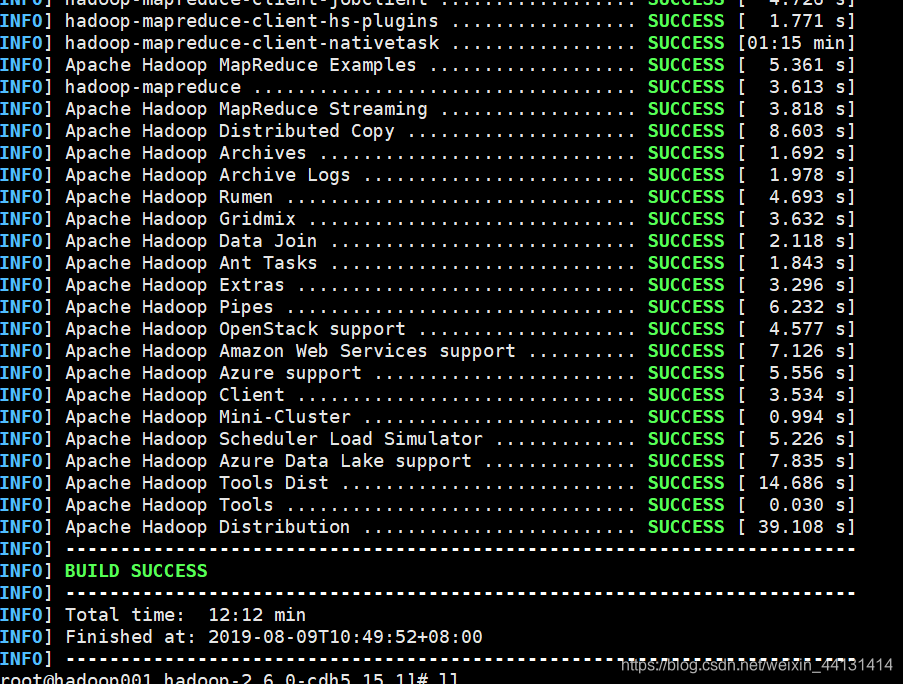

Build succeeded.

The built package is in `hadoop-2.6.0-cdh5.15.1/hadoop-dist/target/`:

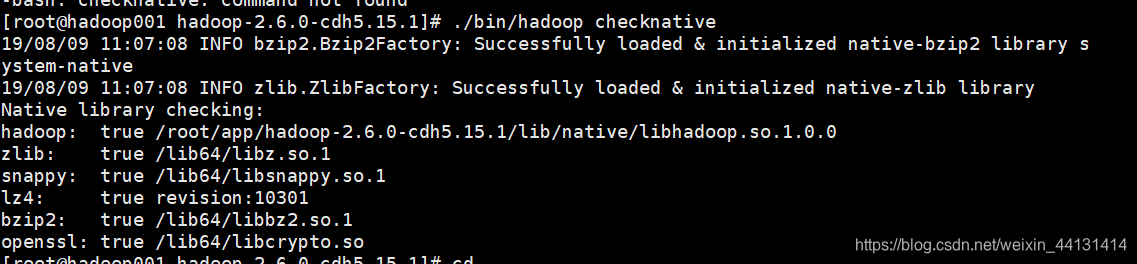

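Verifying compression support

Unpack the package, set the environment variables, and run `hadoop checknative` to check that the native libraries (including the compression codecs) load.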
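The sketch below turns that check into something scriptable. It assumes the tarball was unpacked to `/root/app/hadoop-2.6.0-cdh5.15.1`, for example with `tar -xzf hadoop-dist/target/hadoop-2.6.0-cdh5.15.1.tar.gz -C /root/app`. It also assumes the `name:  true /path/to/lib` line format that `hadoop checknative -a` prints. `native_lib_ok` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Assumed install location of the freshly built distribution.
export HADOOP_HOME=/root/app/hadoop-2.6.0-cdh5.15.1
export PATH=${HADOOP_HOME}/bin:$PATH

# native_lib_ok LIB: succeed if `hadoop checknative -a` reports LIB as "true".
native_lib_ok() {
  hadoop checknative -a 2>/dev/null |
    awk -v want="$1:" '$1 == want && $2 == "true" { found = 1 } END { exit !found }'
}
```

Example: `for lib in hadoop zlib snappy bzip2; do native_lib_ok "$lib" && echo "$lib OK" || echo "$lib MISSING"; done`. LZO is not listed by `checknative`, because it comes from the separate hadoop-lzo project.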