
Background

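One of Flink's biggest advantages over Storm and Spark Streaming is State: a pipeline can keep state itself. This helps a great deal with real business problems; without it you would need a third-party tool to store and access the state.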

Main text

State

What is state?

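- An intermediate result of a task/operator at a given point in time
- A snapshot
- In Flink, state can be thought of as a data structure
- Example: for <key, value> input, suppose you want the maximum value for a key. You could keep the values in a HashMap, but then you would have to scan them again every time. With state you keep the most recent result and only update it, which cuts down the amount of computation.
- State is what lets a program recover after a crash
- State is also what lets a program be rescaled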
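The max-per-key example can be sketched in plain Java to show what "keeping an intermediate result" means. This is not Flink API. The class name RunningMaxPerKey is hypothetical, and a HashMap stands in for keyed state. Each record only reads and updates the stored maximum for its key, instead of re-scanning earlier values.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java sketch (not Flink API): one intermediate result per key,
// updated incrementally instead of re-scanning all past values.
class RunningMaxPerKey {
    // Stands in for keyed state: key -> maximum seen so far
    private final Map<String, Integer> maxByKey = new HashMap<>();

    // Process one <key, value> record and return the updated maximum for that key
    int process(String key, int value) {
        Integer current = maxByKey.get(key);   // previous result, absent for a new key
        int updated = current == null ? value : Math.max(current, value);
        maxByKey.put(key, updated);            // store the new intermediate result
        return updated;
    }
}
```

In a Flink job, the map lookup would become a ValueState<Integer> read and update inside a keyed function, which also makes the maximum part of each checkpoint.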

State types

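Broadly speaking, state comes in two kinds: operator state and keyed state.

- Keyed state can only be used on a KeyedStream and offers more state types. You can think of it as operator state after dataStream.keyBy(): it records state separately for each key of the keyed stream.
- Operator state records the state of each parallel operator instance.

Keyed state provides these types: ValueState<T>, ListState<T>, ReducingState<T>, AggregatingState<IN, OUT> and MapState<UK, UV>.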
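To make "state per key" concrete, here is a plain-Java model of how a single ValueState handle behaves on a keyed stream. It is a simplified sketch, not Flink's implementation, and KeyedValueStateModel is a hypothetical name. The runtime sets the current key before each record, and value(), update() and clear() only touch that key's slot. Accessing the state while no key is set fails, which is why keyed state can only be read while a keyed record is being processed.

```java
import java.util.HashMap;
import java.util.Map;

// Plain-Java model (not Flink code) of a keyed ValueState<T>:
// one handle whose reads and writes are scoped to the current key.
class KeyedValueStateModel<K, T> {
    private final Map<K, T> slots = new HashMap<>();
    private K currentKey; // set by the "runtime" before each record

    void setCurrentKey(K key) {
        currentKey = key;
    }

    private K requireKey() {
        if (currentKey == null) {
            throw new IllegalStateException("no current key: keyed state is only accessible for a keyed record");
        }
        return currentKey;
    }

    T value() {            // null until update() has been called for this key
        return slots.get(requireKey());
    }

    void update(T v) {
        slots.put(requireKey(), v);
    }

    void clear() {
        slots.remove(requireKey());
    }
}
```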

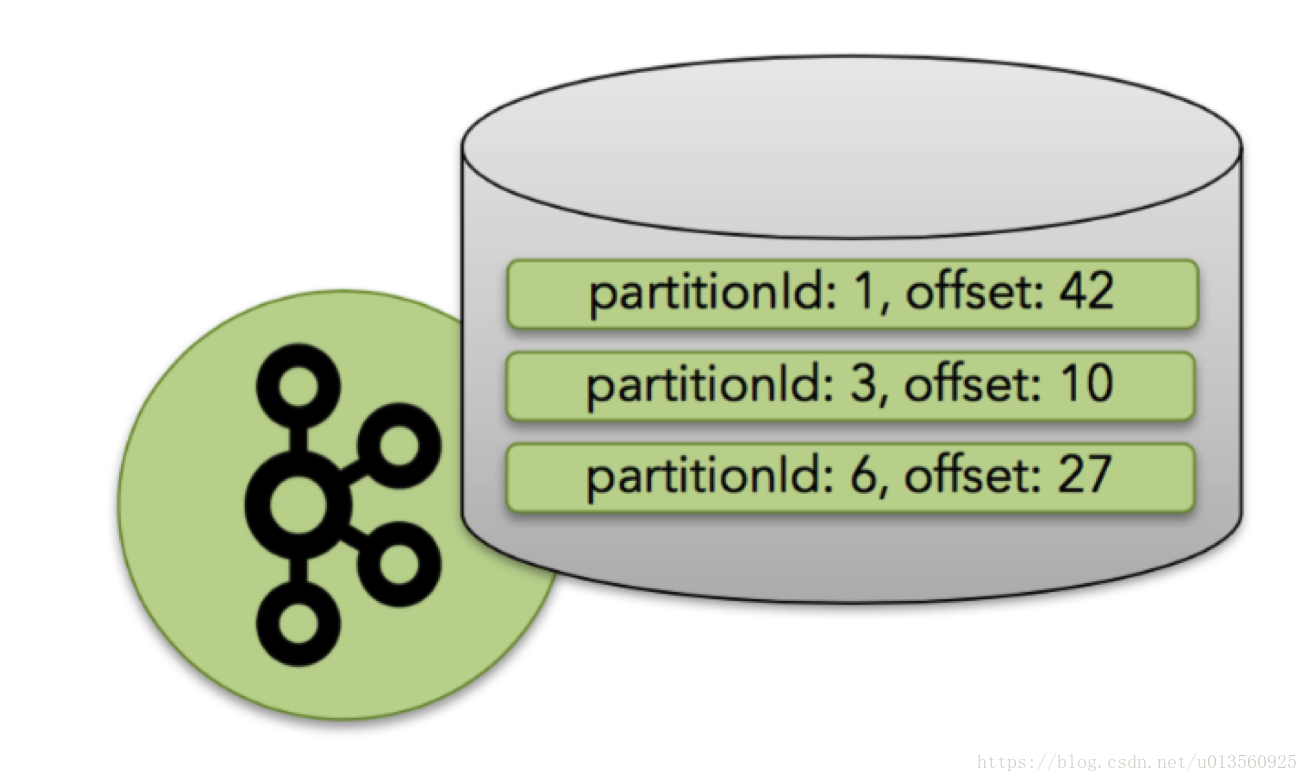

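Operator state is list-style state, ListState<T>, obtained from the OperatorStateStore with getListState() or getUnionListState(). Since Flink 1.5 there is also BroadcastState. The official Flink documentation uses the Kafka consumer as its example: the consumer's partition IDs and offsets are kept as operator state.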
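Because operator state is a list, Flink can redistribute it when parallelism changes. The Flink documentation describes two schemes:

- Even-split (getListState): the lists of all parallel instances are concatenated and divided evenly among the new instances.
- Union (getUnionListState): every instance receives the complete list.

The plain-Java sketch below models both, using Kafka-style "partition@offset" strings as entries. It is a simplified model with a hypothetical class name, and the exact order in which Flink assigns elements may differ.

```java
import java.util.ArrayList;
import java.util.List;

// Simplified model (not Flink code) of how list-style operator state
// is redistributed when an operator's parallelism changes.
class ListStateRedistribution {

    // Even-split: concatenate all instances' lists, then cut into newParallelism
    // contiguous sublists whose sizes differ by at most one.
    static <T> List<List<T>> evenSplit(List<List<T>> oldLists, int newParallelism) {
        List<T> all = new ArrayList<>();
        for (List<T> l : oldLists) {
            all.addAll(l);
        }
        List<List<T>> result = new ArrayList<>();
        int base = all.size() / newParallelism;
        int extra = all.size() % newParallelism;
        int start = 0;
        for (int i = 0; i < newParallelism; i++) {
            int size = base + (i < extra ? 1 : 0);
            result.add(new ArrayList<>(all.subList(start, start + size)));
            start += size;
        }
        return result;
    }

    // Union: every new instance receives the complete, concatenated list.
    static <T> List<List<T>> union(List<List<T>> oldLists, int newParallelism) {
        List<T> all = new ArrayList<>();
        for (List<T> l : oldLists) {
            all.addAll(l);
        }
        List<List<T>> result = new ArrayList<>();
        for (int i = 0; i < newParallelism; i++) {
            result.add(new ArrayList<>(all));
        }
        return result;
    }
}
```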

Understanding state

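Operator state and keyed state are both managed state. Their advantage is that they work together with checkpointing, so Flink stores the state, and restores it after a failure, automatically. State does not have to be managed state, though. An int you declare yourself in a source, window function or sink can also serve as state, but then you have to implement snapshotting and restoring it yourself.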

State in practice

```java
import org.apache.flink.api.common.functions.RichFlatMapFunction;
import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.common.typeinfo.TypeHint;
import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.java.tuple.Tuple2;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.util.Collector;

/**
 * Created by author on 2018/9/12.
 */
public class StateTest {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        env.setParallelism(4)
           .fromElements(Tuple2.of(1L, 3L), Tuple2.of(1L, 5L), Tuple2.of(1L, 7L), Tuple2.of(1L, 4L), Tuple2.of(1L, 2L))
           .keyBy(0)
           .flatMap(new RichFlatMapFunction<Tuple2<Long, Long>, Tuple2<Long, Long>>() {
               // Keyed state: (number of elements seen, running sum) for the current key
               private transient ValueState<Tuple2<Long, Long>> sum;

               @Override
               public void flatMap(Tuple2<Long, Long> input, Collector<Tuple2<Long, Long>> out) throws Exception {
                   Tuple2<Long, Long> curSum = sum.value();
                   if (curSum == null) {        // no state yet for this key
                       curSum = Tuple2.of(0L, 0L);
                   }
                   curSum.f0 += 1;
                   curSum.f1 += input.f1;
                   sum.update(curSum);

                   // Once two elements have arrived for this key, emit (key, average) and reset
                   if (curSum.f0 >= 2) {
                       out.collect(Tuple2.of(input.f0, curSum.f1 / curSum.f0));
                       sum.clear();
                   }
               }

               @Override
               public void open(Configuration parameters) throws Exception {
                   // Register the state; it can only be read later, while a keyed record is processed
                   ValueStateDescriptor<Tuple2<Long, Long>> descriptor = new ValueStateDescriptor<>(
                           "avg", TypeInformation.of(new TypeHint<Tuple2<Long, Long>>() {}));
                   sum = getRuntimeContext().getState(descriptor);
               }
           })
           .print();

        env.execute();
    }
}
```
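This example follows the CountWindowAverage pattern from the Flink documentation: per-key (count, sum) state, with an average emitted and the state cleared once two elements for a key have arrived. The pattern can be modelled in plain Java to see what it should output. This is a sketch, not Flink code: a HashMap stands in for ValueState<Tuple2<Long, Long>>, a long[] {count, sum} stands in for the tuple, and CountAverageModel is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model (not Flink code) of per-key (count, sum) state that
// emits the integer average and resets once two elements have arrived.
class CountAverageModel {
    private final Map<Long, long[]> state = new HashMap<>(); // key -> {count, sum}

    // Feeds every {key, value} record through the logic; returns emitted {key, average} pairs
    List<long[]> run(long[][] input) {
        List<long[]> out = new ArrayList<>();
        for (long[] record : input) {
            long key = record[0];
            long[] cur = state.getOrDefault(key, new long[] {0L, 0L});
            cur[0] += 1;           // count
            cur[1] += record[1];   // sum
            state.put(key, cur);
            if (cur[0] >= 2) {
                out.add(new long[] {key, cur[1] / cur[0]});
                state.remove(key); // plays the role of sum.clear()
            }
        }
        return out;
    }
}
```

For the input (1,3), (1,5), (1,7), (1,4), (1,2), the model emits (1,4) and (1,5). The final (1,2) stays in state because only one element has arrived for key 1 since the last reset.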

Checkpointing

(1) Introduction and the two approaches

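A checkpoint saves the state of windows and operators. After a failure, the job can be restarted from it, and records that were already processed are not applied to the state a second time.

Checkpointed state comes in two kinds: managed and custom. Managed state is stored automatically in the configured location (memory, disk or distributed storage). For custom state you implement saving and restoring yourself. There are two ways to take part in checkpointing: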

- Use managed state variables
- Use your own state variables and implement the CheckpointedFunction interface or the ListCheckpointed<T extends Serializable> interface
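The idea behind checkpoint-based recovery can be shown with a small plain-Java simulation. It uses hypothetical names and is not Flink's implementation. The snapshot stores both the operator state (a running sum) and the source position (an offset, like a Kafka offset). After a failure, both are rolled back to the last snapshot and the input is replayed from the saved offset. Every record therefore contributes to the final result exactly once, even though some records are read twice.

```java
import java.util.List;

// Simulation (not Flink code) of checkpoint + replay recovery.
class CheckpointReplaySim {
    // Sums `input`, snapshotting after every `interval` records and failing once
    // right after `failAt` records have been processed; returns the final sum.
    static long run(List<Integer> input, int interval, int failAt) {
        long count = 0;        // operator state
        int offset = 0;        // source position
        long savedCount = 0;   // last completed snapshot: the state...
        int savedOffset = 0;   // ...and the source position, taken together
        boolean failed = false;

        while (offset < input.size()) {
            count += input.get(offset);
            offset++;
            if (offset % interval == 0) {        // checkpoint
                savedCount = count;
                savedOffset = offset;
            }
            if (!failed && offset == failAt) {   // simulated crash
                failed = true;
                count = savedCount;              // restore state
                offset = savedOffset;            // rewind the source and replay
            }
        }
        return count;
    }
}
```

If only the state were restored and the source position were not, records could be lost or counted twice. This is why exactly-once results need a replayable source whose position is itself part of the checkpoint.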

Code for both approaches follows.

(2) Using managed state: Flink saves and restores state automatically

The code below uses a managed state variable. The log it printed follows the code.

Code walkthrough:

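- The source emits 10 records every 2 s, all with key = 1, so they all go to the same window and are counted there. Once more than 100 records have been sent, it throws an exception to simulate a failure.
- The window function counts the records it receives. After the failure, check whether the window picks up its earlier count from state, i.e. whether the checkpoint took effect.
- Comments are in the code. One pitfall: calling state.value() in open() throws a NullPointerException, whereas calling it in apply() does not. Keyed state is scoped to the current key, and no key has been set yet when open() runs. It can only be read while a record or window for a particular key is being processed.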

Analysis of the console output:

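- There are four open() calls. The default local parallelism is 4, so four instances of the window function are created, and each calls its own open().
- The source throws an exception once its count passes 100 (at 110).
- After the exception, the source's count is lost and starts again from 0.
- After the restart, the window function calls open() again and obtains its state. Its count is meant to be restored from the checkpoint, so it should continue from 100 rather than from 0.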

Summary:

The source does not use managed state, so its state is lost. The window function uses managed state, so its state survives the failure.
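The summary can be reduced to a plain-Java sketch. RestartSketch is hypothetical and only models the behaviour described above; it is not Flink code. At each checkpoint, only the window's managed total is copied into the snapshot, while the source's own count field is not. After a restart both objects are recreated, so the source count starts from 0 again while the window total is put back from the snapshot.

```java
// Sketch (not Flink code): only values copied into the snapshot survive a restart.
class RestartSketch {
    static final class Source {   // plain field, never snapshotted
        int count = 0;
        int emit(int n) { count += n; return n; }
    }

    static final class Window {   // stands in for a managed ValueState
        int total = 0;
    }

    // Returns {source count after restart, window total after restart}
    static int[] simulate() {
        Source source = new Source();
        Window window = new Window();
        int snapshotTotal = 0;

        for (int batch = 0; batch < 10; batch++) {  // 10 batches of 10 records
            window.total += source.emit(10);
            snapshotTotal = window.total;           // checkpoint after each batch
        }

        // Failure: the job restarts with fresh operator instances
        source = new Source();
        window = new Window();
        window.total = snapshotTotal;               // managed state is restored

        window.total += source.emit(10);            // first batch after the restart
        return new int[] {source.count, window.total};
    }
}
```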

```java
package per.test;

import org.apache.flink.api.common.state.ValueState;
import org.apache.flink.api.common.state.ValueStateDescriptor;
import org.apache.flink.api.java.tuple.Tuple;
import org.apache.flink.api.java.tuple.Tuple3;
import org.apache.flink.configuration.Configuration;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.functions.source.SourceFunction;
import org.apache.flink.streaming.api.functions.windowing.RichWindowFunction;
import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;

/**
 * Created by betree on 2018/9/14.
 */
public class StateCheckPoint {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();

        // Configure checkpointing:
        // 1. Checkpoint directory; a local path is used here, and it must start with file://
        // 2. Checkpointing mode: AT_LEAST_ONCE or EXACTLY_ONCE
        // 3. Interval; enableCheckpointing() also switches checkpointing on
        env.setStateBackend(new FsStateBackend("file:///Users/username/Documents/程序数据/Flink/state_checkpoint/"));
        env.enableCheckpointing(1000, CheckpointingMode.EXACTLY_ONCE);

        // Source: every 2 s emit 10 records with key = 1; throw once more than 100 have been sent.
        // Its count field is NOT checkpointed, so it starts from 0 again after a restart.
        env.addSource(new SourceFunction<Tuple3<Integer, String, Integer>>() {
            private volatile boolean isRunning = true;
            private int count = 0;

            @Override
            public void run(SourceContext<Tuple3<Integer, String, Integer>> ctx) throws Exception {
                while (isRunning) {
                    for (int i = 0; i < 10; i++) {
                        ctx.collect(Tuple3.of(1, "ahah", count));
                        count++;
                    }
                    if (count > 100) {
                        System.out.println("err_________________");
                        throw new Exception("123");
                    }
                    System.out.println("source:" + count);
                    Thread.sleep(2000);
                }
            }

            @Override
            public void cancel() {
                isRunning = false;
            }
        }).keyBy(0)
          .window(TumblingProcessingTimeWindows.of(Time.seconds(2)))
          // The window function must be a RichWindowFunction; otherwise it cannot use managed state
          .apply(new RichWindowFunction<Tuple3<Integer, String, Integer>, Integer, Tuple, TimeWindow>() {
              private transient ValueState<Integer> state;

              @Override
              public void apply(Tuple key, TimeWindow window,
                                Iterable<Tuple3<Integer, String, Integer>> input,
                                Collector<Integer> out) throws Exception {
                  // Read the running count for the current key (null before the first update)
                  Integer stored = state.value();
                  int count = stored == null ? 0 : stored;
                  for (Tuple3<Integer, String, Integer> ignored : input) {
                      count++;
                  }
                  // Write the new count back so it is included in the next checkpoint
                  state.update(count);
                  System.out.println("windows:" + key + " " + count + " state count:" + state.value());
                  out.collect(count);
              }

              // Register the state here; do not call state.value() in open(), no key is set yet
              @Override
              public void open(Configuration parameters) throws Exception {
                  System.out.println("##open");
                  ValueStateDescriptor<Integer> descriptor =
                          new ValueStateDescriptor<>("average", Integer.class);
                  state = getRuntimeContext().getState(descriptor);
              }
          }).print();

        env.execute();
    }
}
```

The log printed by the program:


```text
source:10
##open
##open
##open
##open
err##
err##
err##
err##
windows:(1) 10 state count:10
3> 10
source:20
source:30
windows:(1) 30 state count:30
3> 30
source:40
windows:(1) 40 state count:40
3> 40
source:50
windows:(1) 50 state count:50
3> 50
source:60
source:70
windows:(1) 70 state count:70
3> 70
source:80
windows:(1) 80 state count:80
3> 80
source:90
windows:(1) 90 state count:90
3> 90
source:100
err_________________
windows:(1) 110 state count:110
3> 110
source:10
##open
err##
##open
err##
##open
##open
err##
err##
windows:(1) 10 state count:10
3> 10
windows:(1) 20 state count:20
3> 20
source:20
Process finished with exit code 130 (interrupted by signal 2: SIGINT)
```

(3) Custom state: implementing the checkpoint interfaces yourself

Implement the CheckpointedFunction interface or the ListCheckpointed<T extends Serializable> interface.

Notes:

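Because the source and the window function have to implement the ListCheckpointed interface, they are written as separate Java classes. The logic, and the way of verifying it, are similar to the managed-state example. The difference is that here both the source and the window function implement ListCheckpointed. So when the source throws an exception, both can recover their earlier state from the checkpoint, and no state is lost.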

Code files:

- AutoSourceWithCp.java: the source
- WindowStatisticWithChk.java: the window apply function
- CheckPointMain.java: the main program that wires them together

The listings follow.
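The listings do not include per.test.bean.UserState, which both classes use as the ListCheckpointed element type. A minimal version might look like the sketch below. It is a hypothetical reconstruction that assumes the class only needs to carry an int count, since the constructor UserState(int) and getCount() are the only members the code calls. ListCheckpointed requires the element type to be Serializable.

It is shown without its package declaration, and as a non-public class, so it compiles standalone. In the project it would be a public class in per/test/bean/UserState.java.

```java
import java.io.Serializable;

// Hypothetical reconstruction: the original UserState class is not shown.
// Holds the counter that snapshotState() returns and restoreState() reads back.
class UserState implements Serializable {
    private static final long serialVersionUID = 1L;

    private final int count;

    public UserState(int count) {
        this.count = count;
    }

    public int getCount() {
        return count;
    }
}
```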

AutoSourceWithCp.java

```java
package per.test.flink;

import org.apache.flink.api.java.tuple.Tuple4;
import org.apache.flink.streaming.api.checkpoint.ListCheckpointed;
import org.apache.flink.streaming.api.functions.source.RichSourceFunction;
import per.test.bean.UserState;

import java.util.Collections;
import java.util.List;

/**
 * Created by betree on 2018/9/13.
 */
public class AutoSourceWithCp extends RichSourceFunction<Tuple4<Integer, String, String, Integer>>
        implements ListCheckpointed<UserState> {

    private int count = 0;
    private volatile boolean isRunning = true;

    @Override
    public void run(SourceContext<Tuple4<Integer, String, String, Integer>> ctx) throws Exception {
        while (isRunning) {
            for (int i = 0; i < 10; i++) {
                // Emit and advance the counter under the checkpoint lock, so a snapshot
                // never records a count that disagrees with what has been emitted
                synchronized (ctx.getCheckpointLock()) {
                    ctx.collect(Tuple4.of(1, "hello-" + count, "alphabet", count));
                    count++;
                }
            }
            System.out.println("source:" + count);
            Thread.sleep(2000);
            // Fail when the count first reaches 110 (the first value above 100). With
            // `count > 100`, a restored count of 110 would make the source fail after every restart.
            if (count == 110) {
                throw new Exception("exception made by ourself!");
            }
        }
    }

    @Override
    public void cancel() {
        isRunning = false;
    }

    // Called on every checkpoint: the returned list is stored as this instance's state
    @Override
    public List<UserState> snapshotState(long checkpointId, long timestamp) throws Exception {
        System.out.println("############# check point :" + count);
        return Collections.singletonList(new UserState(count));
    }

    // Called on recovery with the previously snapshotted list
    @Override
    public void restoreState(List<UserState> state) throws Exception {
        if (!state.isEmpty()) {
            count = state.get(0).getCount();
        }
        System.out.println("AutoSourceWithCp restoreState:" + count);
    }
}
```

WindowStatisticWithChk.java

```java
package per.test.flink;

import org.apache.flink.api.java.tuple.Tuple;
import org.apache.flink.api.java.tuple.Tuple4;
import org.apache.flink.streaming.api.checkpoint.ListCheckpointed;
import org.apache.flink.streaming.api.functions.windowing.WindowFunction;
import org.apache.flink.streaming.api.windowing.windows.TimeWindow;
import org.apache.flink.util.Collector;
import per.test.bean.UserState;

import java.util.Collections;
import java.util.List;

/**
 * Created by betree on 2018/9/13.
 */
public class WindowStatisticWithChk
        implements WindowFunction<Tuple4<Integer, String, String, Integer>, Integer, Tuple, TimeWindow>,
                   ListCheckpointed<UserState> {

    // Running total for this parallel instance (not per key), saved via ListCheckpointed
    private int total = 0;

    @Override
    public List<UserState> snapshotState(long checkpointId, long timestamp) throws Exception {
        return Collections.singletonList(new UserState(total));
    }

    @Override
    public void restoreState(List<UserState> state) throws Exception {
        // Sum the entries: after a rescale an instance may receive several entries, or none
        total = 0;
        for (UserState s : state) {
            total += s.getCount();
        }
    }

    @Override
    public void apply(Tuple key, TimeWindow window,
                      Iterable<Tuple4<Integer, String, String, Integer>> input,
                      Collector<Integer> out) throws Exception {
        int count = 0;
        for (Tuple4<Integer, String, String, Integer> data : input) {
            count++;
            System.out.println("apply key" + key + " count:" + data.f3 + " " + data.f0);
        }
        total += count;
        System.out.println("windows total:" + total + " count:" + count);
        out.collect(count);
    }
}
```

CheckPointMain.java

```java
package per.test.flink;

import org.apache.flink.api.java.tuple.Tuple4;
import org.apache.flink.runtime.state.filesystem.FsStateBackend;
import org.apache.flink.streaming.api.CheckpointingMode;
import org.apache.flink.streaming.api.datastream.DataStream;
import org.apache.flink.streaming.api.environment.StreamExecutionEnvironment;
import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows;
import org.apache.flink.streaming.api.windowing.time.Time;

/**
 * Created by betree on 2018/9/13.
 */
public class CheckPointMain {

    public static void main(String[] args) throws Exception {
        StreamExecutionEnvironment env = StreamExecutionEnvironment.getExecutionEnvironment();
        env.setParallelism(4);

        // Checkpoint every second, exactly-once, to a local directory
        env.setStateBackend(new FsStateBackend("file:///Users/username/Documents/程序数据/Flink/checkpoint/"));
        env.enableCheckpointing(1000, CheckpointingMode.EXACTLY_ONCE);

        // AutoSourceWithCp is a non-parallel source, so Flink runs it with parallelism 1
        DataStream<Tuple4<Integer, String, String, Integer>> data = env.addSource(new AutoSourceWithCp());

        data.keyBy(0)
            .window(TumblingProcessingTimeWindows.of(Time.seconds(2)))
            .apply(new WindowStatisticWithChk())
            .print();

        env.execute();
    }
}
```

References

https://forum.huawei.com/enterprise/en/thread-452547.html

http://www.zhanghs.com/2016/10/23/first-steps-of-apache-flink