版权声明:本文为博主原创文章,未经博主允许不得转载。 https://blog.csdn.net/YunLaowang/article/details/89705720

整理资料发现未对Bumblebee的分离图像、保存成cv::Mat格式的使用方法留存笔记,这里补上,备忘。

使用须知

-

下述Bumblebee相机直接获取的图片是左右视图混合(interleaved)之后的结果,如果需要进行双目视觉处理,需要对interleaved的结果进行分离,得到左右相机采集的图片;

-

Bumblebee相机出厂时即被标定好,无需自己标定,使用官方提供的API即可获得标定好的左右视图;当然也可以获得raw图,自己进行标定。

-

标定好的stereo pairs只是进行了相机标定,如果使用该图片计算深度图,还需进行立体校正,使相机满足极线约束。

代码

环境:Win10+OpenCV3.4.1 +(Triclops_4.0.3.4_x64+FlyCapture_2.12.3.31_x64)

/*

可选分辨率:

320×240; 384×288; 400×300; 512×384;

640×480; 768×576; 800×600; 960×720;

1024×768; 1152×864; 1280×960;

*/

#include <iostream>

#include <fstream>

#include <string>

#include "captureimages.h"

#include "Example_Exception.h"

#include <opencv2/highgui.hpp>

#include <opencv2/opencv.hpp>

using namespace std;

using namespace cv;

int feed_height = 480, feed_width = 640; // 设置输出分辨率

int main(int /* argc */, char ** /* argv */)

{

TriclopsError triclops_status;

TriclopsContext context;

TriclopsColorStereoPair colorStereoInput;

// camera to be used to grab color image

FC2::Camera camera;

// grabbed unprocessed image

FC2::Image grabbedImage;

try {

// connect camera

FC2::Error flycap_status = camera.Connect();

handleError("FlyCapture2::Camera::Connect()", flycap_status, __LINE__);

// configure camera by setting stereo mode

//configureCamera(camera);

// 选择使用的相机:左+右 OR 中+右

FC2T::ErrorType fc2TriclopsError;

FC2T::StereoCameraMode mode = FC2T::TWO_CAMERA_WIDE;//TWO_CAMERA_WIDE TWO_CAMERA_NARROW

fc2TriclopsError = FC2T::setStereoMode(camera, mode);

handleError("Fc2Triclops::setStereoMode()", fc2TriclopsError, __LINE__);

// generate the Triclops context

generateTriclopsContext(camera, context);

// 注意:必须先设置triclopsSetCameraConfiguration,再设置分辨率:triclopsPrepareRectificationData,否则会导致同一分辨率下,不同基线对应的焦距不同

// Setting the camera configuration. This is useful only when using a triple sensor stereo camera (e.g. XB3)

triclops_status = triclopsSetCameraConfiguration(context, TriCfg_2CAM_HORIZONTAL_WIDE);// TriCfg_2CAM_HORIZONTAL_WIDE TriCfg_2CAM_HORIZONTAL_NARROW

triclops_examples::handleError("triclopsSetCameraConfiguration()", triclops_status, __LINE__);

// Prepare the rectification buffers using the input resolution as output resolution.

triclops_status = triclopsPrepareRectificationData(context, feed_height, feed_width, feed_height, feed_width);

triclops_examples::handleError("triclopsPrepareRectificationData()", triclops_status, __LINE__);

// 开始采集数据

FC2::Error fc2Error = camera.StartCapture();

handleError("FlyCapture2::Camera::StartCapture()", fc2Error, __LINE__);

while ((char)cv::waitKey(5) != 'q') // 'q'退出

{

// 由内存中提取图像(左右视图“纠缠”在一起)

fc2Error = camera.RetrieveBuffer(&grabbedImage); // grabbedImage为未标定原图(包含左右视图)

handleError("FlyCapture2::Camera::RetrieveBuffer()", fc2Error, __LINE__);

// right and left image extracted from grabbed image

ImageContainer imageCont;

// generate color stereo input from grabbed image

generateColorStereoInput(context, grabbedImage, imageCont, colorStereoInput);

// 对图像进行校正,或使用:triclopsRectifyColorImage

triclops_status = triclopsColorRectify(context, &colorStereoInput);

triclops_examples::handleError("triclopsColorRectify()", triclops_status, __LINE__);

// 获取校正后的左右图像--分离

TriclopsColorImage color_left, color_right; // rectified image

triclops_status = triclopsGetColorImage(context, TriImg_RECTIFIED_COLOR, TriCam_RIGHT, &color_right);

triclops_examples::handleError("triclopsGetColorImage()", triclops_status, __LINE__);

triclops_status = triclopsGetColorImage(context, TriImg_RECTIFIED_COLOR, TriCam_LEFT, &color_left);

triclops_examples::handleError("triclopsGetColorImage()", triclops_status, __LINE__);

/**************** 转换成Mat格式 ****************/

cv::Mat right, left;

cv::Mat imageR(color_right.nrows, color_right.ncols, CV_8UC4, color_right.data->value, color_right.rowinc);

cv::Mat imageL(color_left.nrows, color_left.ncols, CV_8UC4, color_left.data->value, color_left.rowinc);

cvtColor(imageL, left, CV_RGBA2RGB);

cvtColor(imageR, right, CV_RGBA2RGB); // 注意格式

imwrite("left.png", left);

imwrite("right.png", right);

/******************* END********************/

}

// Close the camera

camera.StopCapture();

camera.Disconnect();

// Destroy the Triclops context

triclops_status = triclopsDestroyContext(context);

triclops_examples::handleError("triclopsDestroyContext()", triclops_status, __LINE__);

}

catch (const triclops_examples::Example_Exception &exception) {

std::cout << exception.what() << std::endl;

triclopsDestroyContext(context);

triclopsFreeColorStereoPair(&colorStereoInput, false);

if (camera.IsConnected()) {

camera.StopCapture();

camera.Disconnect();

}

return -1;

}

cv::waitKey(0);

return 0;

}

// configure camera by setting stereo mode

void configureCamera(FC2::Camera &camera)

{

FC2T::ErrorType fc2TriclopsError;

FC2T::StereoCameraMode mode = FC2T::TWO_CAMERA_WIDE;

fc2TriclopsError = FC2T::setStereoMode(camera, mode);

handleError("Fc2Triclops::setStereoMode()", fc2TriclopsError, __LINE__);

}

// generate triclops context from connected camera

void generateTriclopsContext(FC2::Camera &camera, TriclopsContext &context)

{

FC2::CameraInfo camInfo;

FC2::Error fc2Error = camera.GetCameraInfo(&camInfo);

handleError("FlyCapture2::Camera::GetCameraInfo()", fc2Error, __LINE__);

FC2T::ErrorType fc2TriclopsError;

fc2TriclopsError = FC2T::getContextFromCamera(camInfo.serialNumber, &context);

handleError("Fc2Triclops::getContextFromCamera()", fc2TriclopsError, __LINE__);

}

// generate color stereo input

void generateColorStereoInput( TriclopsContext const &context,

FC2::Image const &grabbedImage,

ImageContainer &imageCont,

TriclopsColorStereoPair &stereoPair)

{

FC2T::ErrorType fc2TriclopsError;

TriclopsError te;

TriclopsColorImage triclopsImageContainer[2];

FC2::Image *tmpImage = imageCont.tmp;

FC2::Image *unprocessedImage = imageCont.unprocessed;

// Convert the pixel interleaved raw data to de-interleaved and color processed data

fc2TriclopsError = FC2T::unpackUnprocessedRawOrMono16Image( grabbedImage,

true /*assume little endian*/,

tmpImage[RIGHT],

tmpImage[LEFT]);

handleError("Fc2Triclops::unpackUnprocessedRawOrMono16Image()", fc2TriclopsError, __LINE__);

// preprocess color image

for (int i = 0; i < 2; ++i) {

FC2::Error fc2Error;

fc2Error = tmpImage[i].SetColorProcessing(FC2::HQ_LINEAR);

handleError("FlyCapture2::Image::SetColorProcessing()", fc2Error, __LINE__);

// convert preprocessed color image to BGRU format

fc2Error = tmpImage[i].Convert(FC2::PIXEL_FORMAT_BGRU,&unprocessedImage[i]);

handleError("FlyCapture2::Image::Convert()", fc2Error, __LINE__);

}

// create triclops image for right and left lens

for (size_t i = 0; i < 2; ++i) {

TriclopsColorImage *image = &triclopsImageContainer[i];

te = triclopsLoadColorImageFromBuffer(

reinterpret_cast<TriclopsColorPixel *>(unprocessedImage[i].GetData()),

unprocessedImage[i].GetRows(),

unprocessedImage[i].GetCols(),

unprocessedImage[i].GetStride(),

image);

triclops_examples::handleError("triclopsLoadColorImageFromBuffer()", te, __LINE__);

}

// create stereo input from the triclops images constructed above

// pack image data into a TriclopsColorStereoPair structure

te = triclopsBuildColorStereoPairFromBuffers(

context,

&triclopsImageContainer[RIGHT],

&triclopsImageContainer[LEFT],

&stereoPair);

triclops_examples::handleError("triclopsBuildColorStereoPairFromBuffers()", te, __LINE__);

}

void handleError(const std::string &description, const FlyCapture2::Error &error, int lineNumber)

{

if (error != FlyCapture2::PGRERROR_OK) {

throw triclops_examples::Example_Exception(description, error.GetDescription(), lineNumber);

}

}

void handleError(const std::string &description, const Fc2Triclops::ErrorType &error, int lineNumber)

{

if (error != Fc2Triclops::ErrorType::ERRORTYPE_OK) {

throw triclops_examples::Example_Exception(description, "FlyCapture 2 bridge error", lineNumber);

}

}

分离未标定原图的代码如下:

//Retrieve an image

FlyCapture2::Image rawImage;

error = cam.RetrieveBuffer(&rawImage);

if (error != PGRERROR_OK)

{

PrintError(error);

return -1;

}

//Separate the rawImage into right and left image

FlyCapture2::Image rawImgR, rawImgL;

unpackUnprocessedRawOrMono16Image(rawImage, true, rawImgR, rawImgL);

rawImgR.SetColorProcessing(HQ_LINEAR);

rawImgL.SetColorProcessing(HQ_LINEAR);

//Convert to RGB

FlyCapture2::Image rgbImgR, rgbImgL;

rawImgR.Convert(PIXEL_FORMAT_BGR, &rgbImgR);

rawImgL.Convert(PIXEL_FORMAT_BGR, &rgbImgL);

//convert to OpenCV Mat

unsigned int rowBytes = (double)rgbImgR.GetReceivedDataSize() / (double)rgbImgR.GetRows();

cv::Mat imgR(rgbImgR.GetRows(), rgbImgR.GetCols(), CV_8UC3, rgbImgR.GetData(), rowBytes);

cv::Mat imgL(rgbImgL.GetRows(), rgbImgL.GetCols(), CV_8UC3, rgbImgL.GetData(), rowBytes);

SDK使用

官方在SDK安装包中给出了很多例子和相应的API介绍文档(主要有用的是Triclops_API_Documentation.chm、FlyCapture2 Documentation.chm),主要分为两个部分,分别对应于Triclops SDK安装包和FlyCapture安装包,结合例子和API可以很快上手使用,这里进行简单的介绍,方便大家查阅。

FlyCapture安装包

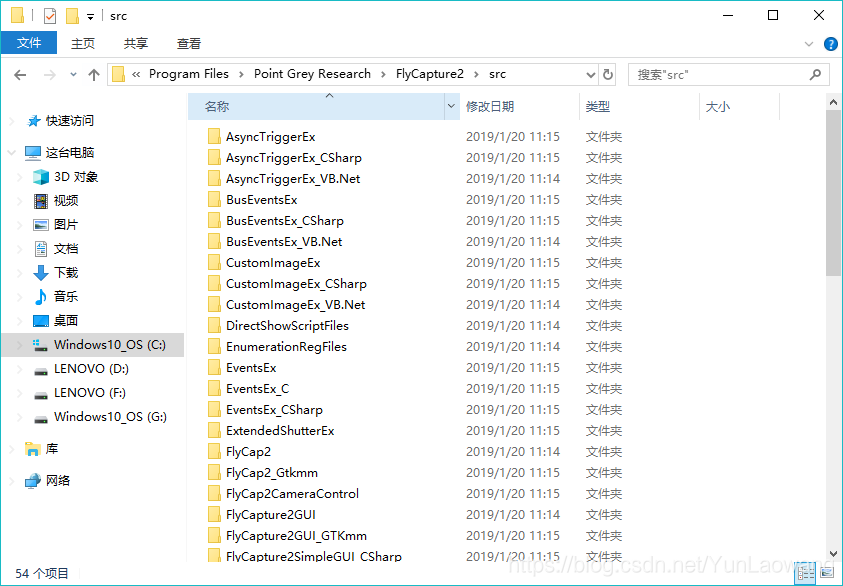

FlyCapture安装包里提供的主要是一些较为底层的API和例子,实现的是操作相机的基本功能,如采集相机图像、设置相机参数等,文件路径如下:

- C:\Program Files\Point Grey Research\FlyCapture2\src

- C:\Program Files\Point Grey Research\FlyCapture2\doc

例子如下:

Triclops SDK安装包

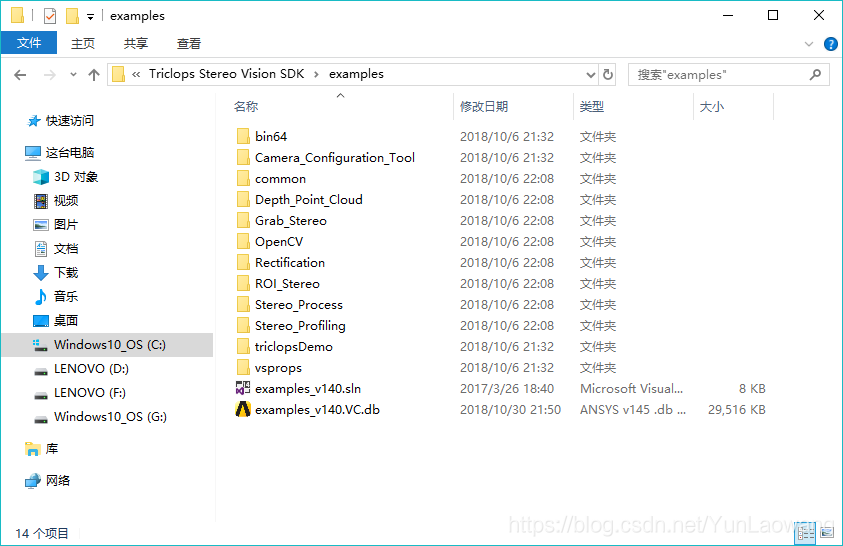

Triclops SDK提供的是对一些计算机立体视觉功能的封装,如实时获取深度点云、生成视差图、校正畸变、OpenCV接口等,文件路径如下:

- C:\Program Files\FLIR Integrated Imaging Solutions\Triclops Stereo Vision SDK\examples

- C:\Program Files\FLIR Integrated Imaging Solutions\Triclops Stereo Vision SDK\doc\API

例子如下:

备注

- 另附完整的工程+相关手册的【云盘下载链接】:ht?tps://pan.?baidu.c?om/s/1wvodHEKxOI4-fSbD1rJK6g

提取码:a48b (去掉链接中的"?"可用) - 更多格式转换方法参考:Triclops图片格式转换成OpenCV Mat格式

- 欢迎大家留言批评指正,互相交流、共同进步。