| 这个作业属于哪个课程 |

人工智能实战2019 |

| 这个作业的要求在哪里 |

第五次作业 |

| 我在这个课程的目标是 |

掌握人工智能方法基本原理 |

| 这个作业在哪个具体方面帮助我实现目标 |

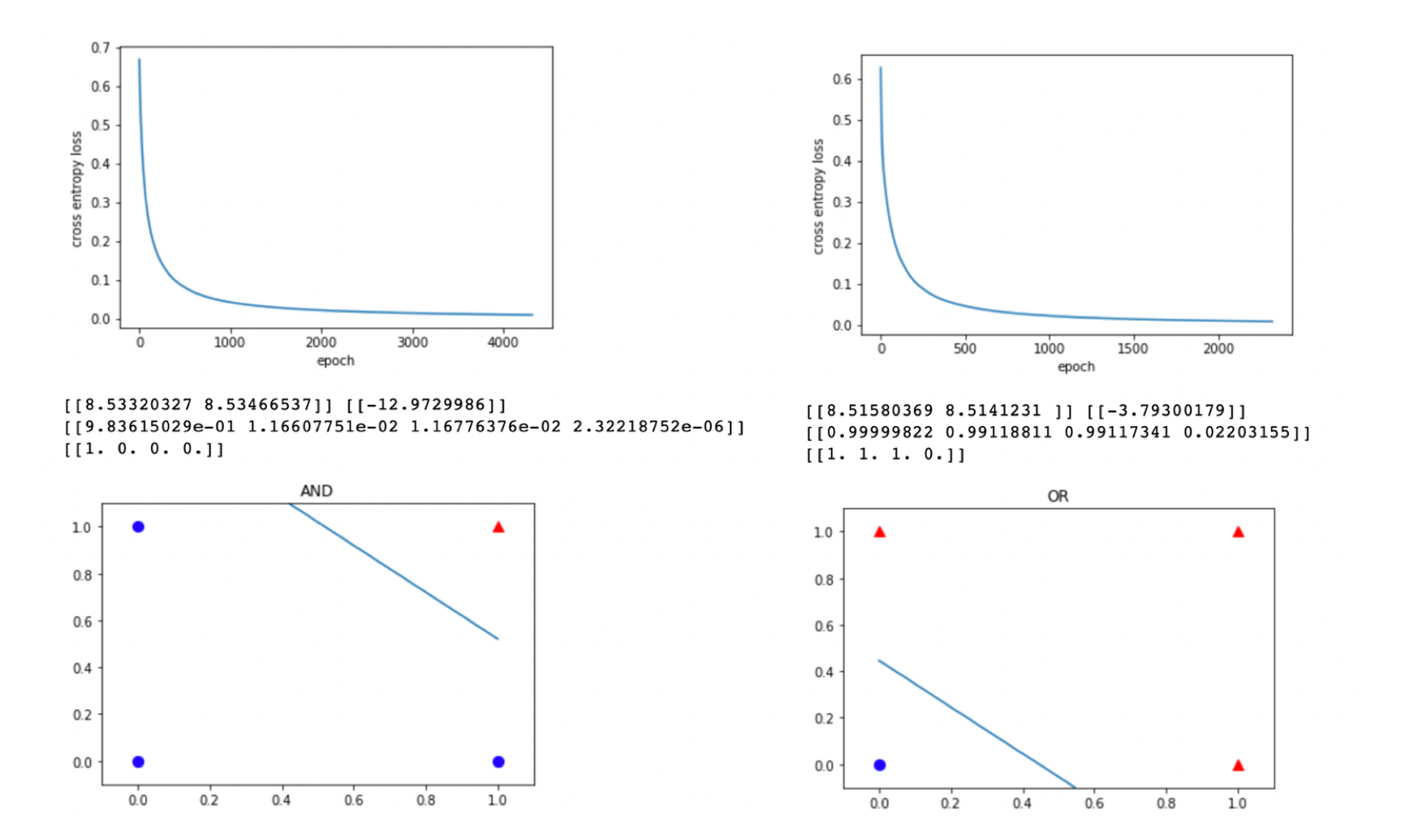

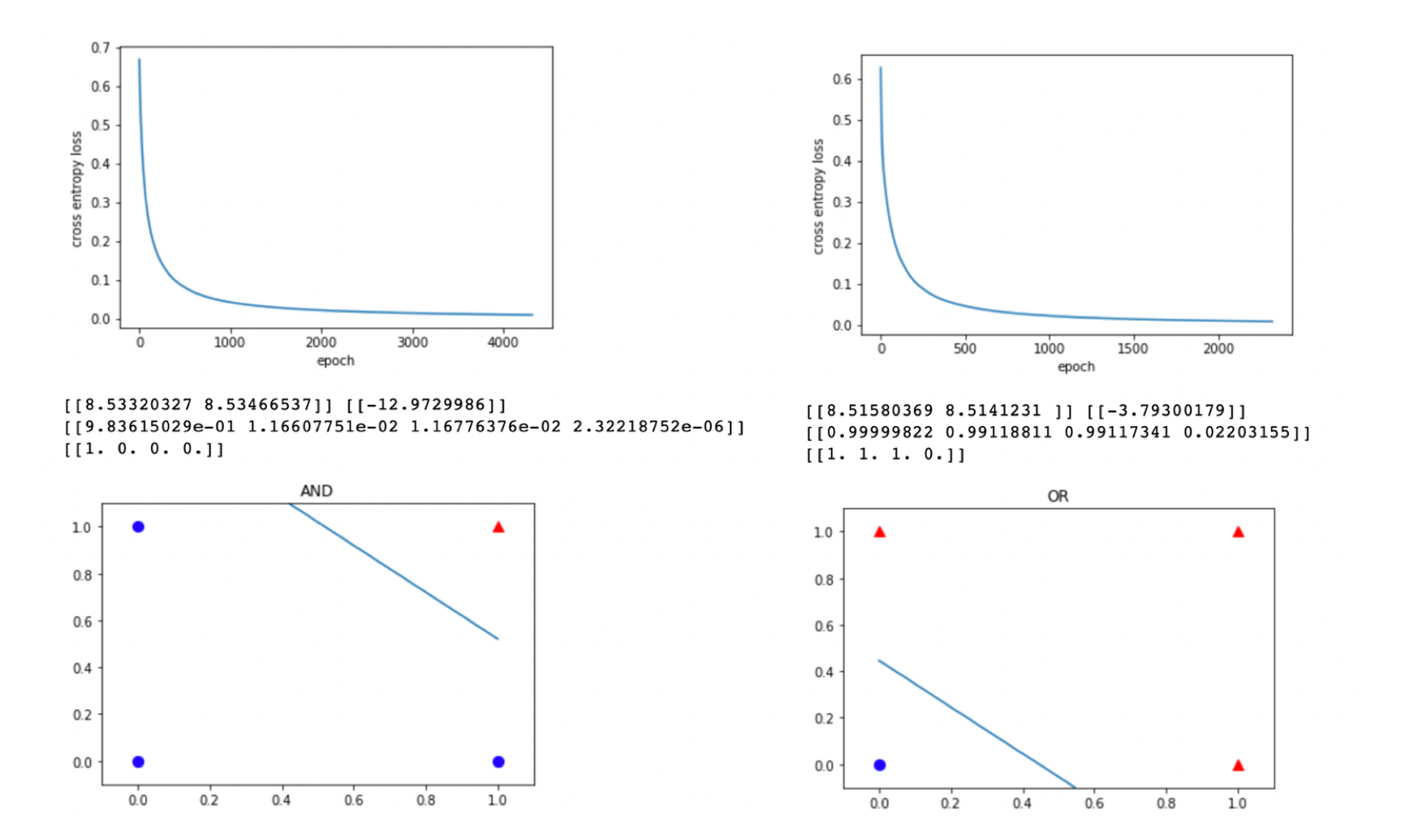

神经网络模型基础:通过训练与门与或门实现简单分类模型(线性二分类) |

| Reference |

神经网络基本原理简明教程 |

Main text of the assignment:

```python
import numpy as np
import matplotlib.pyplot as plt
```

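```python
def ReadData_and():
    # Each column of X is one input sample (x1, x2): (1,1), (1,0), (0,1), (0,0)
    X = np.array([1,1,0,0,1,0,1,0]).reshape(2,4)
    # AND labels: 1 only when both inputs are 1
    Y = np.array([1,0,0,0]).reshape(1,4)
    return X,Y

def ReadData_or():
    X = np.array([1,1,0,0,1,0,1,0]).reshape(2,4)
    # OR labels: 1 when at least one input is 1
    Y = np.array([1,1,1,0]).reshape(1,4)
    return X,Y
```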
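Both data readers store the four samples column-wise: `reshape(2,4)` puts all `x1` values in row 0 and all `x2` values in row 1, so column `i` is one input pair. The standalone sketch below rebuilds the same arrays (the names `Y_and`, `Y_or` and `samples` are illustrative, not from the original code) and checks each label against the corresponding gate.

```python
import numpy as np

# Same layout as ReadData_and/ReadData_or: row 0 holds x1, row 1 holds x2,
# and each column is one sample.
X = np.array([1, 1, 0, 0, 1, 0, 1, 0]).reshape(2, 4)
Y_and = np.array([1, 0, 0, 0]).reshape(1, 4)
Y_or = np.array([1, 1, 1, 0]).reshape(1, 4)

# Read the samples back column by column as plain Python ints
samples = [tuple(int(v) for v in X[:, i]) for i in range(X.shape[1])]
print(samples)  # → [(1, 1), (1, 0), (0, 1), (0, 0)]

# Each label must equal the gate applied to the corresponding column
for i, (x1, x2) in enumerate(samples):
    assert Y_and[0, i] == (x1 & x2)
    assert Y_or[0, i] == (x1 | x2)
```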

```python
def Sigmoid(x):
    s = 1/(1+np.exp(-x))
    return s

def ForwardCalculation(W,B,X):
    # Linear combination z = W·X + B, followed by the sigmoid activation
    z = np.dot(W,X) + B
    a = Sigmoid(z)
    return a
```
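`ForwardCalculation` implements the single-neuron model below, written with the same symbols as the code: $W$ is a $1\times 2$ weight row, $X$ holds one sample per column, and $B$ is a bias broadcast over the columns. The sigmoid derivative on the second line is what makes the gradient in `BackPropagation` so compact.

$$
\begin{aligned}
Z &= W X + B, \qquad A = \sigma(Z) = \frac{1}{1 + e^{-Z}} \\
\frac{d\sigma(z)}{dz} &= \sigma(z)\bigl(1 - \sigma(z)\bigr)
\end{aligned}
$$

A sample is assigned to class 1 when its output is above 0.5, which is what `np.around(A)` does at the end of the main program.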

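```python
def BackPropagation(X,y,A):
    m = X.shape[1]                         # number of samples in this batch
    # For sigmoid + binary cross entropy, dLoss/dZ simplifies to A - y
    dZ = A - y
    dB = dZ.sum(axis=1, keepdims=True)/m
    dW = np.dot(dZ,X.T)/m
    return dW, dB
```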
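Why `dZ = A - y` holds: with the binary cross-entropy loss computed in `CheckLoss`, the chain rule through the sigmoid cancels the $a(1-a)$ factor. For $m$ samples with outputs $a_i$ and labels $y_i$:

$$
\begin{aligned}
J &= -\frac{1}{m}\sum_{i=1}^{m}\Bigl[y_i \ln a_i + (1 - y_i)\ln(1 - a_i)\Bigr] \\
\frac{\partial J}{\partial a_i} &= \frac{1}{m}\left(-\frac{y_i}{a_i} + \frac{1 - y_i}{1 - a_i}\right) = \frac{1}{m}\,\frac{a_i - y_i}{a_i(1 - a_i)} \\
\frac{\partial J}{\partial z_i} &= \frac{\partial J}{\partial a_i}\cdot a_i(1 - a_i) = \frac{a_i - y_i}{m} \\
\frac{\partial J}{\partial W} &= \frac{1}{m}(A - Y)X^{\mathsf T}, \qquad \frac{\partial J}{\partial B} = \frac{1}{m}\sum_{i=1}^{m}(a_i - y_i)
\end{aligned}
$$

With `dZ = A - y`, the last line is exactly `dW = np.dot(dZ,X.T)/m` and `dB = dZ.sum(axis=1, keepdims=True)/m`.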

```python
def UpdateWeights(W, B, dW, dB, eta):
    # Plain gradient descent step with learning rate eta
    W = W - eta*dW
    B = B - eta*dB
    return W,B
```

```python
# cross entropy loss - binary
def CheckLoss(W, B, X, Y):
    count = X.shape[1]
    A = ForwardCalculation(W,B,X)
    p0 = np.multiply(1-Y,np.log(1-A))
    p1 = np.multiply(Y,np.log(A))
    LOSS = np.sum(-(p0 + p1))
    loss = LOSS / count                    # average loss over all samples
    return loss
```
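One way to confirm that the gradient in `BackPropagation` really matches the loss in `CheckLoss` is a finite-difference gradient check. The sketch below is self-contained: it re-implements the sigmoid, the loss and the analytic gradient under hypothetical lowercase names (`loss`, `analytic_grad`, `numeric_grad`) instead of importing the functions above, and compares the analytic gradient with central differences at a random point. The step size `h=1e-6` and the random seed are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def loss(W, B, X, Y):
    # Average binary cross entropy, same formula as CheckLoss
    A = sigmoid(W @ X + B)
    return float(np.mean(-(Y * np.log(A) + (1 - Y) * np.log(1 - A))))

def analytic_grad(W, B, X, Y):
    # Same formulas as BackPropagation: dZ = A - Y
    m = X.shape[1]
    dZ = sigmoid(W @ X + B) - Y
    return dZ @ X.T / m, dZ.sum(axis=1, keepdims=True) / m

def numeric_grad(W, B, X, Y, h=1e-6):
    # Central differences: (J(p + h) - J(p - h)) / (2h) for each parameter
    dW = np.zeros_like(W)
    for j in range(W.shape[1]):
        Wp, Wm = W.copy(), W.copy()
        Wp[0, j] += h
        Wm[0, j] -= h
        dW[0, j] = (loss(Wp, B, X, Y) - loss(Wm, B, X, Y)) / (2 * h)
    dB = np.array([[(loss(W, B + h, X, Y) - loss(W, B - h, X, Y)) / (2 * h)]])
    return dW, dB

X = np.array([1, 1, 0, 0, 1, 0, 1, 0]).reshape(2, 4)
Y = np.array([1, 1, 1, 0]).reshape(1, 4)   # OR labels
rng = np.random.default_rng(0)
W = rng.normal(size=(1, 2))
B = rng.normal(size=(1, 1))

dW_a, dB_a = analytic_grad(W, B, X, Y)
dW_n, dB_n = numeric_grad(W, B, X, Y)
print(np.max(np.abs(dW_a - dW_n)), np.max(np.abs(dB_a - dB_n)))
```

If the two gradients disagree by much more than about `1e-6`, the backward pass does not match the loss being minimized.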

```python
def ShowResult(W,B,X,Y,title):
    # Draw the separating line from W and B: w1*x1 + w2*x2 + b = 0, i.e. x2 = w*x1 + b
    w = -W[0,0]/W[0,1]
    b = -B[0,0]/W[0,1]
    x = np.array([0,1])
    y = w * x + b
    plt.plot(x,y)
    # Plot the original samples: blue circles for label 0, red triangles for label 1
    for i in range(X.shape[1]):
        if Y[0,i] == 0:
            plt.scatter(X[0,i],X[1,i],marker="o",c='b',s=64)
        else:
            plt.scatter(X[0,i],X[1,i],marker="^",c='r',s=64)
    plt.axis([-0.1,1.1,-0.1,1.1])
    plt.title(title)
    plt.show()
```
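The line drawn by `ShowResult` is the set of points where the neuron outputs exactly 0.5, i.e. where $z = 0$. Writing $W = [w_1, w_2]$ and solving for $x_2$ (this requires $w_2 \neq 0$, since the code divides by `W[0,1]`):

$$
w_1 x_1 + w_2 x_2 + b = 0 \;\Longrightarrow\; x_2 = -\frac{w_1}{w_2}\,x_1 - \frac{b}{w_2}
$$

The slope $-w_1/w_2$ and intercept $-b/w_2$ are the local variables `w` and `b` inside `ShowResult`.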

```python
if __name__ == '__main__':
    # Switch between the AND and OR datasets here
    #X, Y = ReadData_and()
    X, Y = ReadData_or()
    num_features = X.shape[0]
    num_example = X.shape[1]
    # W is 1 x num_features and B is 1 x 1, matching the indexing used in ShowResult
    W = np.zeros((1, num_features))
    B = np.zeros((1, 1))
    eta = 0.1
    eps = 1e-2
    max_epoch = 5000
    loss = 1
    loss_list = []

    for epoch in range(max_epoch):
        # Stochastic gradient descent: one update per sample
        for i in range(num_example):
            x = X[:,i].reshape(num_features,1)
            y = Y[:,i].reshape(1,1)
            a = ForwardCalculation(W,B,x)
            dW, dB = BackPropagation(x,y,a)
            W, B = UpdateWeights(W, B, dW, dB, eta)
        # Record the loss on the whole dataset once per epoch, and stop the
        # outer loop (not just the inner one) once it falls below eps
        loss = CheckLoss(W, B, X, Y)
        loss_list.append(loss)
        if loss < eps:
            break

    # cross entropy loss figure
    plt.figure()
    plt.plot(loss_list)
    plt.xlabel('epoch')
    plt.ylabel('cross entropy loss')
    plt.show()

    # classification result: round the output probabilities to 0/1
    A = ForwardCalculation(W,B,X)
    Class = np.around(A)
    print(A)
    print(Class)

    # visualize
    #ShowResult(W,B,X,Y,'AND')
    ShowResult(W,B,X,Y,'OR')
```
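The main program updates the weights once per sample (stochastic gradient descent). For a dataset of only four points, full-batch gradient descent, which uses all samples in every update, is a common alternative; the sketch below swaps that technique in, so it is not the assignment's original training procedure. The function name `train_full_batch` and the values `eta=0.5` and `max_epoch=10000` are assumptions chosen for illustration.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_full_batch(X, Y, eta=0.5, max_epoch=10000, eps=1e-2):
    """Full-batch gradient descent for a single sigmoid neuron
    (hypothetical variant of the per-sample loop above)."""
    n, m = X.shape
    W = np.zeros((1, n))
    B = np.zeros((1, 1))
    losses = []
    for epoch in range(max_epoch):
        # One gradient step using all m samples at once
        dZ = sigmoid(W @ X + B) - Y
        W -= eta * (dZ @ X.T) / m
        B -= eta * dZ.sum(axis=1, keepdims=True) / m
        # Average binary cross entropy after the step
        A = sigmoid(W @ X + B)
        loss = float(np.mean(-(Y * np.log(A) + (1 - Y) * np.log(1 - A))))
        losses.append(loss)
        if loss < eps:
            break
    return W, B, losses

X = np.array([1, 1, 0, 0, 1, 0, 1, 0]).reshape(2, 4)
gates = {"AND": np.array([[1, 0, 0, 0]]), "OR": np.array([[1, 1, 1, 0]])}
for name, Y in gates.items():
    W, B, losses = train_full_batch(X, Y)
    pred = (sigmoid(W @ X + B) >= 0.5).astype(int)
    print(name, pred, len(losses), losses[-1])
```

In this variant each epoch corresponds to exactly one update of `W` and `B`, so the length of `losses` is the number of gradient steps taken.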

Run results