Environment overview

hive 2.3.3, configured for HA

spark 2.2.0

hadoop 2.6.5

zookeeper 3.6.5

hbase 1.2.6

All the pitfalls I ran into

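1. Method-not-found exception when JDBC connects through zk

org.apache.curator.utils.ZKPaths.fixForNamespace: this exception appears because the curator-framework and curator-client versions do not match. Sort out these dependencies carefully when integrating. My pom (dependency section) is attached here.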

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

......

<properties>

<jdk.version>1.8</jdk.version>

<junit.version>4.11</junit.version>

<spark.version>2.1.0</spark.version>

<hadoop.version>2.6.5</hadoop.version>

<hbase.version>1.2.6</hbase.version>

<!-- log4j logging package versions -->

<slf4j.version>1.7.7</slf4j.version>

<log4j.version>1.2.17</log4j.version>

<hive.version>2.2.0</hive.version>

<zk.version>3.4.6</zk.version>

<curator.version>2.7.1</curator.version>

</properties>

<repositories>

<repository>

<id>m2</id>

<name>m2</name>

<url>http://central.maven.org/maven2/</url>

<layout>default</layout>

<snapshots>

<enabled>false</enabled>

</snapshots>

</repository>

</repositories>

<dependencies>

<dependency>

<groupId>org.apache.spark</groupId>

<artifactId>spark-core_2.10</artifactId>

<version>${spark.version}</version>

<exclusions>

<exclusion>

<artifactId>curator-recipes</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-client</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>${hadoop.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

<exclusion>

<artifactId>curator-recipes</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

<exclusion>

<artifactId>curator-framework</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

<exclusion>

<artifactId>curator-client</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-hdfs</artifactId>

<version>${hadoop.version}</version>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-client</artifactId>

<version>${hbase.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase-server</artifactId>

<version>${hbase.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hbase</groupId>

<artifactId>hbase</artifactId>

<version>${hbase.version}</version>

<type>pom</type>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-jdbc</artifactId>

<version>${hive.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

<exclusion>

<artifactId>curator-framework</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

<exclusion>

<artifactId>curator-client</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>${hive.version}</version>

<exclusions>

<exclusion>

<artifactId>jdk.tools</artifactId>

<groupId>jdk.tools</groupId>

</exclusion>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>${hive.version}</version>

<exclusions>

<exclusion>

<artifactId>zookeeper</artifactId>

<groupId>org.apache.zookeeper</groupId>

</exclusion>

<exclusion>

<artifactId>curator-framework</artifactId>

<groupId>org.apache.curator</groupId>

</exclusion>

</exclusions>

</dependency>

<dependency>

<groupId>org.apache.zookeeper</groupId>

<artifactId>zookeeper</artifactId>

<version>${zk.version}</version>

</dependency>

<dependency>

<groupId>org.apache.curator</groupId>

<artifactId>curator-framework</artifactId>

<version>${curator.version}</version>

</dependency>

<!-- log start -->

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>${log4j.version}</version>

</dependency>

<!-- formats objects for easier log output -->

<dependency>

<groupId>com.alibaba</groupId>

<artifactId>fastjson</artifactId>

<version>1.1.41</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-api</artifactId>

<version>${slf4j.version}</version>

</dependency>

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>slf4j-log4j12</artifactId>

<version>${slf4j.version}</version>

</dependency>

<!-- log end -->

<!-- host configuration -->

<dependency>

<groupId>io.leopard</groupId>

<artifactId>javahost</artifactId>

<version>0.9.6</version>

</dependency>

</dependencies>

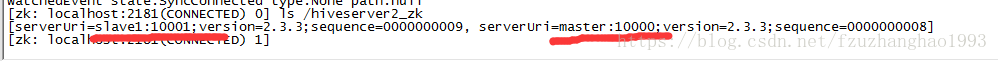
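To see which jars actually supply the curator and zookeeper classes at runtime, a small check like the following can help. It is only a sketch: the class name JarLocator and its locate helper are made up for illustration, and the three probed class names are my own choice of what is worth checking (ZKPaths ships in curator-client, CuratorFrameworkFactory in curator-framework).

```java
import java.security.CodeSource;

/** Hypothetical helper: reports which jar (or directory) a class was loaded from. */
final class JarLocator {

    /** Returns the code-source location of a class, or a short note if it cannot be determined. */
    static String locate(String className) {
        try {
            // initialize=false: only the Class object is needed, not its static initializers
            Class<?> cls = Class.forName(className, false, JarLocator.class.getClassLoader());
            CodeSource src = cls.getProtectionDomain().getCodeSource();
            // Classes loaded by the bootstrap loader (the JDK itself) have no code source
            if (src == null || src.getLocation() == null) {
                return "(bootstrap/JDK)";
            }
            return src.getLocation().toString();
        } catch (ClassNotFoundException | LinkageError e) {
            return "(not on classpath)";
        }
    }

    public static void main(String[] args) {
        String[] names = {
            "org.apache.curator.utils.ZKPaths",                     // curator-client
            "org.apache.curator.framework.CuratorFrameworkFactory", // curator-framework
            "org.apache.zookeeper.ZooKeeper"                        // zookeeper
        };
        for (String name : names) {
            System.out.println(name + " -> " + locate(name));
        }
    }
}
```

Run it with the same classpath as the failing application. If the two curator classes come from jars with different version numbers, that mismatch is the likely culprit, and `mvn dependency:tree -Dincludes=org.apache.curator` shows which dependency pulled each one in.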

......

2. JDBC connection to hive fails with connection refused

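The connection succeeds against a single node but fails in zk mode. This is usually an IP/host configuration problem, namely the hive.server2.thrift.bind.host property in the hive configuration. You must make sure the Java client can reach the IPs of both machines.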
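Before digging into hive itself, it can be worth confirming from the client machine that each host name resolves and each port accepts connections. The sketch below does that with plain sockets. EndpointCheck is a made-up name, and the hosts and ports in main (master and slave1, 2181 for zk, 10000 for the thrift port) are assumptions taken from the configuration in this post, so adjust them to your cluster.

```java
import java.io.IOException;
import java.net.InetAddress;
import java.net.InetSocketAddress;
import java.net.Socket;
import java.net.UnknownHostException;

/** Hypothetical helper: resolves a host name and attempts a TCP connect. */
final class EndpointCheck {

    /** Returns a one-line description of whether host:port is resolvable and reachable. */
    static String check(String host, int port, int timeoutMillis) {
        InetAddress addr;
        try {
            addr = InetAddress.getByName(host);
        } catch (UnknownHostException e) {
            return host + ":" + port + " -> cannot resolve host name";
        }
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(addr, port), timeoutMillis);
            return host + ":" + port + " -> reachable at " + addr.getHostAddress();
        } catch (IOException e) {
            return host + ":" + port + " -> resolved to " + addr.getHostAddress()
                    + " but connect failed: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        // Assumed endpoints: zk on master, hiveserver2 thrift port on master and slave1
        System.out.println(check("master", 2181, 3000));
        System.out.println(check("master", 10000, 3000));
        System.out.println(check("slave1", 10000, 3000));
    }
}
```

A host that resolves but refuses the connection on the thrift port, while zk on 2181 is reachable, matches the symptom described here: the client finds the server entry in zk but cannot connect to the address it advertises.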

Here are the test source code and my hive configuration.

JDBC connection code (test)

import io.leopard.javahost.JavaHost;

import java.sql.Connection;

import java.sql.DriverManager;

import java.sql.PreparedStatement;

import java.sql.ResultSet;

import java.sql.ResultSetMetaData;

import java.sql.SQLException;

import java.sql.Statement;

import java.util.Properties;

public class ConnectHive {

private static String driverName = "org.apache.hive.jdbc.HiveDriver";

// private static String url = "jdbc:hive2://master:10001/test";

private static String url = "jdbc:hive2://master:2181/test;serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=hiveserver2_zk";

private static String user = "root";

private static String password = "123456";

private static Connection conn = null;

private static Statement stmt = null;

private static ResultSet rs = null;

public static void showDatabases() throws Exception {

String sql = "show databases";

System.out.println("Running: " + sql);

rs = stmt.executeQuery(sql);

while (rs.next()) {

System.out.println(rs.getString(1));

}

}

public static void showAllRecords(String tableName) throws Exception {

String sql = "select * from "+tableName;

System.out.println("Running: " + sql);

PreparedStatement pstmt = conn.prepareStatement(sql);

rs = pstmt.executeQuery();

// Column metadata is the same for every row, so read it once before the loop

ResultSetMetaData rsm = rs.getMetaData();

int count = rsm.getColumnCount();

while (rs.next()) {

for(int i = 1; i<=count; i++){

System.out.print(rs.getString(i));

System.out.print("\t|\t");

}

System.out.println(" ");

}

}

public static void main(String[] argvs) {

try {

Properties props = new Properties();

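// Replace the placeholder strings with the real IPs before running

props.put("master", "<IP of the server hosting zk and master>");

props.put("slave1", "<IP of the server hosting slave1>");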

JavaHost.updateVirtualDns(props);

Class.forName(driverName);

conn = DriverManager.getConnection(url, user, password);

stmt = conn.createStatement();

showDatabases();

showAllRecords("test.testanalyse");

} catch (Exception e) {

// TODO Auto-generated catch block

e.printStackTrace();

} finally {

try {

if(conn!=null){

conn.close();

}

} catch (SQLException e) {

e.printStackTrace();

}

}

}

}
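The test URL above points at a single zk node (master:2181). In service discovery mode the host part of a hive2 JDBC URL can list several zk servers separated by commas, so the client does not depend on one zk node being up. A minimal sketch of building such a URL follows. HiveZkUrl and build are hypothetical names, and the three-node quorum is assumed to be master, slave1 and slave2 on port 2181.

```java
import java.util.Arrays;
import java.util.List;

/** Hypothetical helper: builds a hive2 JDBC URL that uses ZooKeeper service discovery. */
final class HiveZkUrl {

    static String build(List<String> zkQuorum, String database, String namespace) {
        if (zkQuorum.isEmpty()) {
            throw new IllegalArgumentException("at least one ZooKeeper host:port is required");
        }
        // All zk servers go into the host part, separated by commas
        return "jdbc:hive2://" + String.join(",", zkQuorum) + "/" + database
                + ";serviceDiscoveryMode=zooKeeper;zooKeeperNamespace=" + namespace;
    }

    public static void main(String[] args) {
        // Assumed quorum; the namespace must match hive.server2.zookeeper.namespace
        List<String> quorum = Arrays.asList("master:2181", "slave1:2181", "slave2:2181");
        System.out.println(build(quorum, "test", "hiveserver2_zk"));
    }
}
```

The resulting string can be used in place of the url field in ConnectHive; nothing else in the test code needs to change.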

hive-site.xml

https://gitee.com/fzuzhanghao/bigdata-config/blob/master/hive-config/hive-site.xml

Key parts

......

<property>

<name>hive.server2.thrift.port</name>

<value>10000</value>

</property>

<property>

<name>hive.server2.thrift.bind.host</name>

<value>master</value>

</property>

......

<!--ha config -->

<property>

<name>hive.server2.support.dynamic.service.discovery</name>

<value>true</value>

</property>

<property>

<name>hive.server2.zookeeper.namespace</name>

<value>hiveserver2_zk</value>

</property>

<property>

<name>hive.zookeeper.quorum</name>

<value>master:2181,slave1:2181,slave2:2181</value>

</property>

<property>

<name>hive.zookeeper.client.port</name>

<value>2181</value>

</property>

3. Error when hive queries an hbase table

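The hbase table was created successfully, the libs were copied into hive, and creating the external table in hive also succeeded, yet queries reported table not found. This is again caused by a wrong hive.server2.thrift.bind.host setting: configuring it as 0.0.0.0, as many online tutorials do, leads to both problem 2 and problem 3. When you hit them, change the setting and restart hive, which resolves the JDBC connection problem. Then recreate the hive on hbase external table, which resolves the query error.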

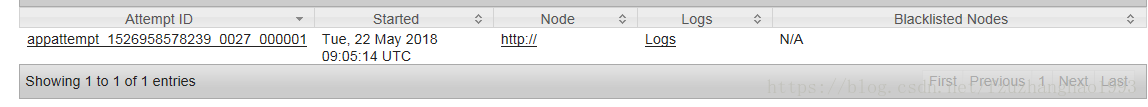
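Since both problems trace back to one property, a quick scan of hive-site.xml on each HiveServer2 machine can catch the 0.0.0.0 setting before it bites. The following is a sketch using only the JDK's XML parser. BindHostCheck, readProperty and isWildcard are names invented here, and the path to hive-site.xml is expected as the first command-line argument.

```java
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper: reads one property from a hive-site.xml style file. */
final class BindHostCheck {

    /** Returns the value of the named property, or null if the file does not define it. */
    static String readProperty(InputStream xml, String name) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder().parse(xml);
        NodeList props = doc.getElementsByTagName("property");
        String found = null;
        for (int i = 0; i < props.getLength(); i++) {
            Element prop = (Element) props.item(i);
            NodeList names = prop.getElementsByTagName("name");
            NodeList values = prop.getElementsByTagName("value");
            if (names.getLength() > 0 && values.getLength() > 0
                    && name.equals(names.item(0).getTextContent().trim())) {
                // Keep the last definition if the property is repeated in the file
                found = values.item(0).getTextContent().trim();
            }
        }
        return found;
    }

    /** True for the catch-all address that caused problems 2 and 3 in this post. */
    static boolean isWildcard(String host) {
        return "0.0.0.0".equals(host);
    }

    public static void main(String[] args) throws Exception {
        if (args.length == 0) {
            System.err.println("usage: java BindHostCheck <path to hive-site.xml>");
            return;
        }
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            String host = readProperty(in, "hive.server2.thrift.bind.host");
            if (host == null) {
                System.out.println("hive.server2.thrift.bind.host is not set");
            } else if (isWildcard(host)) {
                System.out.println("bind host is 0.0.0.0; use a name or IP the client can reach");
            } else {
                System.out.println("bind host is " + host);
            }
        }
    }
}
```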

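4. yarn can no longer allocate resources after a few hive queries

After several hive on spark queries, yarn stopped allocating resources to spark. Spark could not start and the application stayed in application state: ACCEPTED, while hive kept retrying the statement.

On inspection, yarn had not started any Container and had not assigned the job to any node.

Checking yarn's nodes showed that all three nodemanager nodes were down, in the Unhealthy state. The reported reason was local-dirs are bad, which points to a disk problem. Further investigation showed that disk usage was already above 90%, which triggered the error.

Two ways to fix it:

1. Clean up the temporary packages uploaded by spark and other useless files;

2. Adjust yarn-site.xml: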

<property>

<name>yarn.nodemanager.disk-health-checker.max-disk-utilization-per-disk-percentage</name>

<value>95</value>

</property>
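To see how close each nodemanager disk is to the limit, the used percentage can be computed the same way the symptom suggests: used space over total space, compared with the threshold (90 by default, 95 after the change above). The sketch below approximates that check with java.io.File. DiskUtilization is a made-up name, and the directories to inspect are passed as arguments (for example the paths configured in yarn.nodemanager.local-dirs, which this post does not list).

```java
import java.io.File;

/** Hypothetical helper: approximates the per-disk utilization check. */
final class DiskUtilization {

    static final double DEFAULT_MAX_PERCENT = 90.0;

    /** Percentage of the partition in use, derived from its total and usable bytes. */
    static double usedPercent(long totalBytes, long usableBytes) {
        if (totalBytes <= 0) {
            return 0.0; // unknown or nonexistent path: File reports 0 total bytes
        }
        return 100.0 * (totalBytes - usableBytes) / totalBytes;
    }

    static boolean exceeds(double usedPercent, double maxPercent) {
        return usedPercent > maxPercent;
    }

    public static void main(String[] args) {
        // Default to the current directory when no paths are given
        String[] dirs = args.length > 0 ? args : new String[] {"."};
        for (String d : dirs) {
            File dir = new File(d);
            double used = usedPercent(dir.getTotalSpace(), dir.getUsableSpace());
            String note = exceeds(used, DEFAULT_MAX_PERCENT) ? " (above the default limit)" : "";
            System.out.printf("%s: %.1f%% used%s%n", d, used, note);
        }
    }
}
```

Raising the threshold only buys time. A disk at 92% passes a 95 limit but will fail again once usage grows, so cleaning up the temporary files is still the more durable fix.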