    from keras.datasets import reuters
    import numpy as np
    from keras.utils import to_categorical
    from keras import layers
    from keras import models
    import matplotlib.pyplot as plt


    def vectorize_sequences(sequences, dimension=10000):
        # Multi-hot encode: set position j of row i to 1 if word index j occurs in sequence i
        result = np.zeros((len(sequences), dimension))
        for i in range(len(sequences)):
            result[i, sequences[i]] = 1
        return result


    # 8,982 training samples and 2,246 test samples
    (x_train, y_train), (x_test, y_test) = reuters.load_data(num_words=10000)

    # Vectorize the training data and one-hot encode the labels
    x_train = vectorize_sequences(x_train)
    y_train = to_categorical(y_train)

    network = models.Sequential()
    network.add(layers.Dense(64, activation='relu'))
    network.add(layers.Dense(64, activation='relu'))
    # softmax returns one probability per class: the likelihood that the sample belongs to that class
    network.add(layers.Dense(46, activation='softmax'))

    network.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy'])
    history = network.fit(x_train, y_train, batch_size=256, epochs=20, validation_split=0.25)

    history_dict = history.history
    loss = history_dict['loss']
    val_loss = history_dict['val_loss']
    # Older Keras versions name this metric 'acc'; newer ones name it 'accuracy'
    acc_key = 'acc' if 'acc' in history_dict else 'accuracy'
    acc = history_dict[acc_key]
    val_acc = history_dict['val_' + acc_key]
    epochs = range(1, len(loss) + 1)

    # Loss plot
    plt.subplot(121)
    plt.plot(epochs, loss, 'g', label='Training loss')
    plt.plot(epochs, val_loss, 'b', label='Validation loss')
    plt.xlabel('Epochs')
    plt.ylabel('Loss')
    # Show the legend
    plt.legend()

    # Accuracy plot
    plt.subplot(122)
    plt.plot(epochs, acc, 'g', label='Training accuracy')
    plt.plot(epochs, val_acc, 'b', label='Validation accuracy')
    plt.xlabel('Epochs')
    plt.ylabel('Accuracy')
    plt.legend()
    plt.show()
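To make the two encoding steps concrete, here is a small NumPy-only sketch on made-up toy data. The toy sequences, toy labels, and the `one_hot` helper are assumptions for illustration (`one_hot` is a hypothetical stand-in that mimics what `to_categorical` does for integer labels); only `vectorize_sequences` comes from the script above.

```python
import numpy as np


def vectorize_sequences(sequences, dimension=10000):
    # Same multi-hot encoding as in the script above
    result = np.zeros((len(sequences), dimension))
    for i, seq in enumerate(sequences):
        result[i, seq] = 1
    return result


def one_hot(labels, num_classes):
    # Hypothetical helper: one row per label, with a single 1 at the label's index
    result = np.zeros((len(labels), num_classes))
    result[np.arange(len(labels)), labels] = 1
    return result


# Toy data, not taken from the Reuters dataset
toy_sequences = [[0, 2, 2], [1, 3]]
toy_labels = [2, 0]

x = vectorize_sequences(toy_sequences, dimension=5)
y = one_hot(toy_labels, num_classes=3)
print(x)  # repeated word indices (the two 2s) still yield a single 1
print(y)
```

Note that the multi-hot encoding discards both word order and word counts; each sample only records which of the `dimension` most frequent words appear in it.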

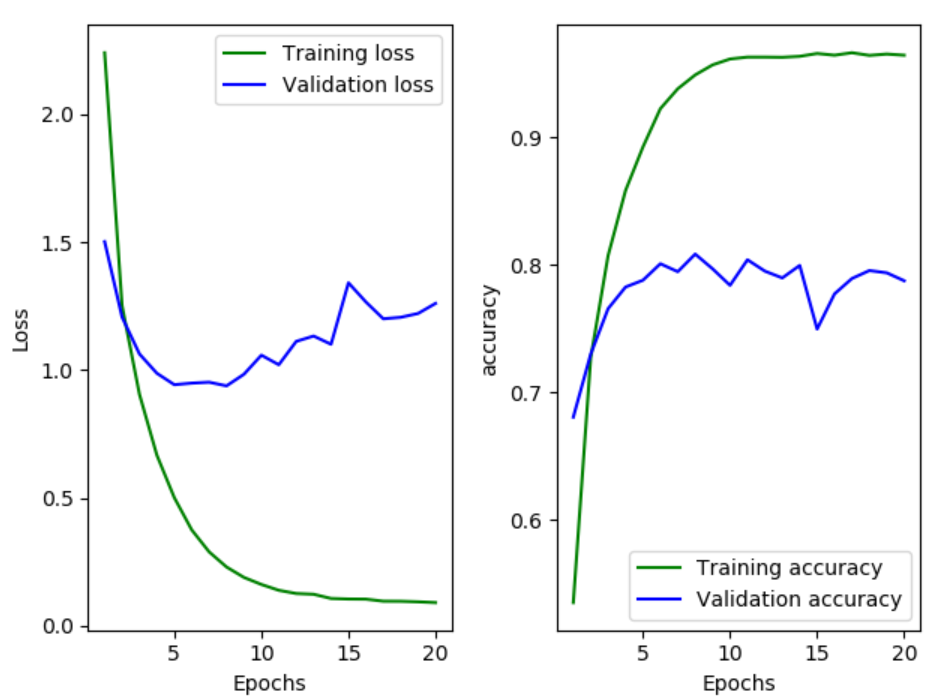

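After about epoch 9, the training loss keeps decreasing as training continues, but the validation loss starts to rise. This divergence is a sign of overfitting: the model keeps fitting the training set while generalizing worse to unseen data. Setting the number of epochs to 9 stops training before that point.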
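Rather than reading the stopping point off the plot, the epoch with the lowest validation loss can also be picked programmatically. The following is a minimal sketch; `best_epoch` is a hypothetical helper and the loss values are made up for illustration, not results from the run above.

```python
def best_epoch(val_loss):
    """Return the 1-based epoch at which the validation loss is lowest."""
    best_index = min(range(len(val_loss)), key=lambda i: val_loss[i])
    return best_index + 1


# Made-up validation losses: they fall at first, then rise again (overfitting)
toy_val_loss = [1.80, 1.30, 1.10, 1.00, 0.95, 0.97, 1.02, 1.10]
print(best_epoch(toy_val_loss))
```

With a real run, one would presumably call `best_epoch(history.history['val_loss'])` and retrain a fresh model with `epochs` set to the result. Keras also provides an `EarlyStopping` callback that stops training once a monitored metric stops improving, which may be a more convenient alternative.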