Word vectors: a model of word meaning (vectors that encode the meanings of words)

Using a taxonomic resource to handle word meaning. WordNet: taxonomy information about words

Load it in Python with `from nltk.corpus import wordnet` (NLTK's corpus package).

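WordNet records synonyms and polysemy (one word with several senses), but its taxonomic descriptions miss many nuances and are incomplete. People use words more flexibly.

It is hard to give a precise definition of word similarity, and WordNet can't capture many of the nuances.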

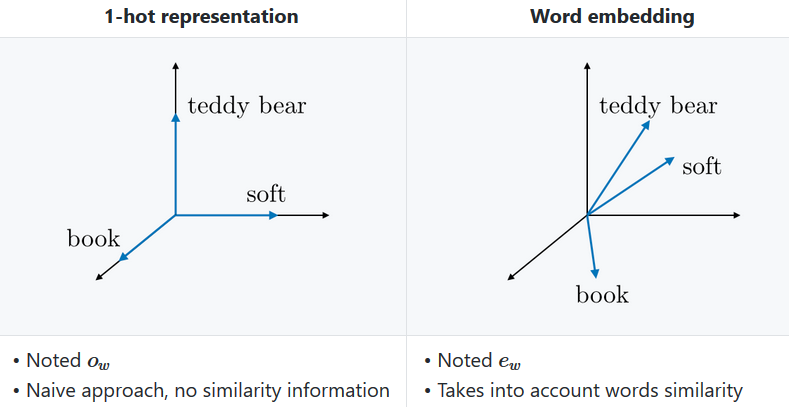

We can build a very big vector in which each word is assigned its own position holding a 1: the "one-hot" (localist) representation. The dot product of any two different one-hot vectors is 0.

But this gives no inherent notion of relationships between words. We need to encode similarity.
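A minimal sketch of the one-hot problem, assuming a hypothetical three-word vocabulary (the words and the helper names `one_hot` and `dot` are illustrative, not from the notes): every pair of distinct words gets a dot product of 0, so "motel" and "hotel" look exactly as unrelated as "motel" and "cat".

```python
# Hypothetical toy vocabulary, for illustration only
vocab = ["motel", "hotel", "cat"]

def one_hot(word, vocab):
    """Return a vector of zeros with a single 1 at the word's index."""
    vec = [0] * len(vocab)
    vec[vocab.index(word)] = 1
    return vec

def dot(a, b):
    """Plain dot product of two equal-length lists."""
    return sum(x * y for x, y in zip(a, b))

motel = one_hot("motel", vocab)
hotel = one_hot("hotel", vocab)
print(motel)              # [1, 0, 0]
print(dot(motel, hotel))  # 0: orthogonal, even though the words are similar
print(dot(motel, motel))  # 1
```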

We want an encoding from which you can directly read off the similarity between words, e.g. with the dot product!!!

Use distributional similarity:

You get a lot of value by looking at the contexts in which a word appears, i.e. collecting statistics on the words that occur together with it.
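A rough sketch of collecting these context statistics, assuming a hypothetical toy corpus, whitespace tokenization, and a window of m = 2 words on each side (`context_counts` is an illustrative helper, not a library function):

```python
from collections import Counter

def context_counts(tokens, target, m=2):
    """Count the words appearing within m positions of each occurrence of `target`."""
    counts = Counter()
    for i, word in enumerate(tokens):
        if word != target:
            continue
        # Window [i - m, i + m], clipped to the sentence boundaries
        for j in range(max(0, i - m), min(len(tokens), i + m + 1)):
            if j != i:
                counts[tokens[j]] += 1
    return counts

# Hypothetical toy corpus
tokens = "the bank raised interest rates and the bank cut fees".split()
print(context_counts(tokens, "bank", m=2))
```

The resulting counts are the raw "company" that distributional methods build on; word2vec replaces explicit counting with prediction.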

“You shall know a word by the company it keeps”

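Word vectors (distributed representations, built using distributional similarity): choose the numbers in each word's vector so that it is good at predicting the other words in the text around the target word (words can predict one another).

It feels like a probability distribution: a center word plus the outside context words.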

What is word2vec?

A general recipe for learning neural word embeddings: predict between a center word and the words that appear in its context.

$p(\text{context} \mid w_t)$: the probability of correctly predicting the words around the center word $w_t$.

Loss function: $J = 1 - p(w_{-t} \mid w_t)$, where $w_t$ is the center word and $w_{-t}$ denotes the other words surrounding it.

Change the representation of words to minimize our loss!!

Deep learning: you set this goal and say nothing else about how to reach it; you rely on the learning, and the output vectors turn out to be powerful!

Powerful word vectors:

- they are distributed representations of words

- they are able to predict other words in context!

How does that happen?

word2vec: predict between every word and its context words to learn word vectors.

two algorithms:

1. Skip-grams (SG)

For each estimation step, take one word as the center word, then try to predict the words in its context within some window size.
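A minimal sketch of how the skip-gram training examples fall out of this, assuming whitespace tokenization and a window radius `m` (the helper name `skipgram_pairs` and the sample sentence are illustrative): each position t yields one (center, context) pair per offset j with -m ≤ j ≤ m, j ≠ 0.

```python
def skipgram_pairs(tokens, m):
    """Return (center, context) pairs for every position t and offset -m..m, j != 0."""
    pairs = []
    for t, center in enumerate(tokens):
        for j in range(-m, m + 1):
            # Skip the center itself and offsets that fall outside the text
            if j == 0 or not 0 <= t + j < len(tokens):
                continue
            pairs.append((center, tokens[t + j]))
    return pairs

tokens = "problems turning into banking crises".split()
for pair in skipgram_pairs(tokens, m=1):
    print(pair)
```

With m = 1 the five-word sentence gives 8 pairs; words at the edges have fewer neighbours.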

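The model defines a probability distribution: given a center word, the probability that a given word appears in its context. We choose the word vector representations so as to maximize this probability.

For a given center word there is one and only one probability distribution (the same one is used at every context position).

For each position $t = 1, 2, \dots, T$, predict the surrounding words in a window of radius $m$.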

- Take a big, long sequence of words to learn from (enough text to provide plenty of contexts)

- Go through each position in the text, with a window of size 2m around it

- Define a probability distribution over the context words given the center word

- Set the parameters of the model (that is, the vector representations of the words) to maximize p
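The objective behind these steps is usually written as follows (the standard skip-gram formulation; $\theta$ denotes all the word vectors). Minimizing the average negative log-likelihood $J(\theta)$ is equivalent to maximizing the likelihood $L(\theta)$:

```latex
L(\theta) = \prod_{t=1}^{T} \prod_{\substack{-m \le j \le m \\ j \ne 0}} P(w_{t+j} \mid w_t; \theta)

J(\theta) = -\frac{1}{T} \log L(\theta)
          = -\frac{1}{T} \sum_{t=1}^{T} \sum_{\substack{-m \le j \le m \\ j \ne 0}} \log P(w_{t+j} \mid w_t; \theta)
```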

Lecture 2: around minute 27

See iPad notes.

One vector representation per word?

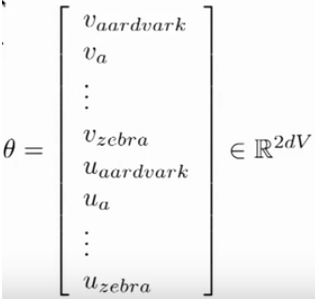

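Two vectors per word can work better: a vector $v$ for when the word is the center word, and a vector $u$ for when it is a context word.

The model doesn't pay attention to distance or position within the window.

For each position in the context, multiply the center vector by a matrix that stores the representations of the context words.

Take the dot product $u_o^T v_c$ as the similarity score (multiplying $v_c$ by each row $u_o$ of the context matrix). There is only one result vector; the repeated results in the figure just show the same transformation of $v_c$ for each of the three context positions.

Apply softmax to turn the scores into a probability distribution, and predict the words most likely to appear within the context window.

$W$ is the weight matrix formed by all words as center words (the word embedding matrix); $W'$ is the weight matrix formed by all words as context words.

The difference between the predicted distribution and the actual context words gives the loss.

$W$ and $W'$ ($v$ and $u$):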
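A small NumPy sketch of the forward pass and loss, under stated assumptions: a hypothetical vocabulary of V = 5 words, d = 3 dimensions, random vectors, and `W_ctx` standing in for $W'$ (a prime is not a valid Python name). The probability of context word o given center c is the softmax of $u_o^T v_c$, and the loss for one window is the summed negative log-probability of the true context words.

```python
import numpy as np

rng = np.random.default_rng(0)
V, d = 5, 3                       # hypothetical vocabulary size and dimension
W = rng.normal(size=(V, d))       # center-word vectors v, one row per word
W_ctx = rng.normal(size=(V, d))   # context-word vectors u (W' in the notes)

def softmax(z):
    z = z - z.max()               # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def skipgram_probs(c):
    """P(o | c) for every word o: softmax over the scores u_o^T v_c."""
    v_c = W[c]                    # same as multiplying a one-hot vector by W
    scores = W_ctx @ v_c          # u_o^T v_c for all o at once
    return softmax(scores)

def window_loss(c, context_ids):
    """Negative log-likelihood of the true context words given center c.
    The same distribution is reused for every context position."""
    p = skipgram_probs(c)
    return -np.sum(np.log(p[context_ids]))

probs = skipgram_probs(2)
print(probs.round(3), probs.sum())
print(window_loss(2, [1, 3]))
```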

Train the model: compute vector representations

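Stack the $u$ and $v$ vectors of every word (2d numbers per word, where d is the vector dimension) into one long parameter vector $\theta$ of length 2dV for a vocabulary of V words.

$\theta$ is the thing we are going to optimize.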
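A sketch of packing all parameters into $\theta$, assuming hypothetical sizes V = 4 and d = 3 with random values; it also shows how to unpack $\theta$ back into the two matrices:

```python
import numpy as np

V, d = 4, 3                       # hypothetical vocabulary size and dimension
rng = np.random.default_rng(1)
Vc = rng.normal(size=(V, d))      # center vectors v, one row per word
Uo = rng.normal(size=(V, d))      # context vectors u, one row per word

# One long parameter vector: all v vectors followed by all u vectors
theta = np.concatenate([Vc.ravel(), Uo.ravel()])
print(theta.shape)                # (24,) == 2 * d * V

# Unpack back into the two matrices
Vc2 = theta[: V * d].reshape(V, d)
Uo2 = theta[V * d :].reshape(V, d)
```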

Gradient!

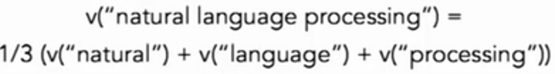
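For the naive softmax $P(o \mid c) = \exp(u_o^T v_c) / \sum_{w=1}^{V} \exp(u_w^T v_c)$, the standard result is $\frac{\partial}{\partial v_c} \log P(o \mid c) = u_o - \sum_{x=1}^{V} P(x \mid c)\, u_x$: the observed context vector minus the expected one. The sketch below checks this numerically with central finite differences, assuming random vectors and a hypothetical observed word index `o`:

```python
import numpy as np

rng = np.random.default_rng(0)
V, d = 6, 4
U = rng.normal(size=(V, d))   # context vectors u_w, one row per word
v_c = rng.normal(size=d)      # center vector
o = 2                         # hypothetical index of the observed context word

def log_prob(v):
    """log P(o | c) with center vector v."""
    scores = U @ v
    return scores[o] - np.log(np.sum(np.exp(scores)))

def analytic_grad(v):
    """u_o minus the probability-weighted average of all u_x."""
    scores = U @ v
    p = np.exp(scores - scores.max())
    p /= p.sum()
    return U[o] - p @ U

def numeric_grad(v, eps=1e-6):
    """Central finite-difference approximation of d log_prob / dv."""
    g = np.zeros_like(v)
    for i in range(len(v)):
        e = np.zeros_like(v)
        e[i] = eps
        g[i] = (log_prob(v + e) - log_prob(v - e)) / (2 * eps)
    return g

print(np.max(np.abs(analytic_grad(v_c) - numeric_grad(v_c))))  # tiny
```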

PAPER: A Simple but Tough-to-Beat Baseline for Sentence Embeddings

How do we encode the meaning of sentences (rather than single words, as above)?

Uses of sentence embeddings:

- Compute sentence similarity via the inner product

- Sentence classification tasks, e.g. sentiment analysis

Compose word representations into sentence representations:

- Bag-of-words (BoW): the average of the word vectors

- CNN, RNN
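A sketch of the unweighted BoW baseline, assuming hypothetical 3-d word vectors made up for illustration; similarity is computed with cosine similarity, i.e. the inner product after normalizing both vectors:

```python
import numpy as np

# Hypothetical 3-d word vectors, for illustration only
word_vecs = {
    "the": np.array([0.1, 0.0, 0.1]),
    "movie": np.array([0.5, 0.2, 0.0]),
    "film": np.array([0.4, 0.3, 0.0]),
    "was": np.array([0.0, 0.1, 0.1]),
    "great": np.array([0.0, 0.9, 0.2]),
    "awful": np.array([0.0, -0.8, 0.3]),
}

def bow_embedding(sentence):
    """Unweighted bag-of-words: the average of the word vectors."""
    return np.mean([word_vecs[w] for w in sentence.split()], axis=0)

def cosine(a, b):
    """Inner product of the two vectors after normalizing them."""
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

s1 = bow_embedding("the movie was great")
s2 = bow_embedding("the film was great")
s3 = bow_embedding("the movie was awful")
print(cosine(s1, s2), cosine(s1, s3))  # paraphrase scores higher than opposite sentiment
```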

This paper: a very simple unsupervised method

weighted BoW + remove one special direction (the common component)

Step 1:

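Compute a weighted average of the word vectors in the sentence; each word has a separate weight:

weight = $\frac{a}{a + f(w)}$, where $a$ is a parameter and $f(w)$ is the (estimated) frequency of word $w$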
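A sketch of the Step 1 weighting, assuming made-up word frequencies $f(w)$, made-up 2-d word vectors, and $a = 10^{-3}$ (an assumed value; the parameter has to be tuned). Each sentence vector is the average of the weighted word vectors, so very frequent words such as "the" contribute little:

```python
import numpy as np

def sif_weights(word_freq, a=1e-3):
    """Weight a / (a + f(w)) for each word: frequent words get small weights."""
    return {w: a / (a + f) for w, f in word_freq.items()}

def weighted_bow(sentence, word_vecs, weights):
    """Average of the weighted word vectors of a whitespace-tokenized sentence."""
    words = sentence.split()
    return np.mean([weights[w] * word_vecs[w] for w in words], axis=0)

# Hypothetical word frequencies and 2-d word vectors, for illustration only
word_freq = {"the": 0.05, "was": 0.01, "movie": 0.0005, "great": 0.0008}
word_vecs = {
    "the": np.array([1.0, 0.0]),
    "was": np.array([0.5, 0.5]),
    "movie": np.array([0.0, 1.0]),
    "great": np.array([0.2, 0.8]),
}
weights = sif_weights(word_freq)
print({w: round(x, 3) for w, x in weights.items()})
print(weighted_bow("the movie was great", word_vecs, weights))
```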

Step 2:

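Compute the first principal component of the sentence embeddings and remove each embedding's projection onto it

This combines the sentence embedding with word frequency and with how relevant each word is.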
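A sketch of Step 2, assuming the sentence embeddings are stacked as rows of a matrix and the first principal component is taken as the top right singular vector of that matrix, computed without mean-centering (an assumption about the exact computation). Each embedding $v_s$ becomes $v_s - u u^T v_s$:

```python
import numpy as np

def remove_first_pc(X):
    """Remove from each row of X its projection onto X's first singular vector.

    X has shape (n_sentences, d). Returns the cleaned matrix and the direction u.
    """
    _, _, Vt = np.linalg.svd(X, full_matrices=False)
    u = Vt[0]                               # unit-norm top right singular vector
    return X - np.outer(X @ u, u), u        # v_s <- v_s - u u^T v_s

# Hypothetical sentence embeddings that share a strong common direction
rng = np.random.default_rng(0)
X = rng.normal(scale=0.1, size=(5, 3)) + np.array([1.0, 1.0, 0.0])
X_clean, u = remove_first_pc(X)
print(np.abs(X_clean @ u).max())  # ~0: the common direction is gone
```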

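Gradient descent (GD): repeatedly step against the gradient, so the slope keeps decreasing as we approach a minimum; the learning rate $\alpha$ must be small enough to avoid stepping past the minimum.

Computing the gradient over all 40 billion words of a corpus before each update is not practical!!

Instead: Stochastic Gradient Descent (SGD)

Pick just one center word together with its surrounding words (one window position), compute the gradient for all the parameters from that window alone, update them using that estimate of the gradient, then move on to the next position.

It is not necessarily a good estimate of the direction (just a rough baseline), but it is orders of magnitude faster than GD.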
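A toy illustration, assuming the one-parameter objective $J(\theta) = \theta^2$ (minimum at 0) rather than the word2vec loss: the update is $\theta \leftarrow \theta - \alpha \nabla_\theta J(\theta)$. With $\alpha = 0.1$ each step shrinks $\theta$; with $\alpha = 1.1$ each step jumps past the minimum and the iterates blow up.

```python
def gradient_descent(grad, theta0, alpha, steps):
    """Plain gradient descent: theta <- theta - alpha * grad(theta)."""
    theta = theta0
    for _ in range(steps):
        theta = theta - alpha * grad(theta)
    return theta

def grad(theta):
    """Gradient of the toy objective J(theta) = theta**2."""
    return 2 * theta

print(gradient_descent(grad, 1.0, alpha=0.1, steps=50))  # close to 0
print(gradient_descent(grad, 1.0, alpha=1.1, steps=50))  # huge: every step overshoots
```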

neural networks love noise!
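A compact sketch of SGD for the skip-gram model with the full softmax from the notes, assuming a hypothetical toy corpus, window radius m = 1, d = 8 and learning rate 0.1 (all arbitrary choices). Each update uses the gradient from a single randomly chosen window, a noisy estimate of the full gradient; the function returns the total loss over the corpus before and after training:

```python
import numpy as np

def train_skipgram_sgd(tokens, m=1, d=8, alpha=0.1, steps=2000, seed=0):
    vocab = sorted(set(tokens))
    idx = {w: i for i, w in enumerate(vocab)}
    ids = [idx[w] for w in tokens]
    rng = np.random.default_rng(seed)
    V = len(vocab)
    Vc = rng.normal(scale=0.1, size=(V, d))  # center vectors v
    U = rng.normal(scale=0.1, size=(V, d))   # context vectors u

    def window_loss_and_grads(t):
        """Loss and gradients for the window centered at position t."""
        c = ids[t]
        v = Vc[c]
        scores = U @ v
        p = np.exp(scores - scores.max())
        p /= p.sum()                          # P(x | c) for every word x
        loss, grad_v, grad_U = 0.0, np.zeros(d), np.zeros_like(U)
        for j in range(-m, m + 1):
            if j == 0 or not 0 <= t + j < len(ids):
                continue
            o = ids[t + j]
            loss -= np.log(p[o])
            grad_v += p @ U - U[o]            # d(-log P(o|c)) / dv_c
            grad_U += np.outer(p, v)          # d(-log P(o|c)) / du_x = P(x|c) v_c ...
            grad_U[o] -= v                    # ... minus v_c for the observed word
        return loss, grad_v, grad_U, c

    def total_loss():
        return sum(window_loss_and_grads(t)[0] for t in range(len(ids)))

    before = total_loss()
    for _ in range(steps):
        t = rng.integers(len(ids))            # one random window per update
        _, grad_v, grad_U, c = window_loss_and_grads(t)
        Vc[c] -= alpha * grad_v
        U -= alpha * grad_U
    return before, total_loss()

tokens = "the cat sat on the mat the dog sat on the rug".split()
before, after = train_skipgram_sgd(tokens)
print(before, after)  # the loss should drop after training
```

Exact loss values depend on the seed and hyperparameters; only the downward trend is the point.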